This post originally appeared on the Telerik Developer Blog.

Our industry's advancements in agile methodologies, DevOps, and even cloud engineering have brought a key capability to focus on: shared responsibility.

If you've been a developer for a while, you might remember throwing operations problems to an Ops team, testing to a QA team, and so on. These days, developing applications isn't just about developing applications. When developers are invested in the entire application lifecycle, the result is a more engaged and productive team.

This doesn't come without drawbacks, of course. While having specialists in those areas will always have value—and seeing these specialists on the development team can be tremendously helpful—developers can feel overwhelmed with all they need to take on. I often see this when it comes to automated testing. As developers, we know we need to test our code, but how far should we take it? After a certain point, isn't the crafting of automated tests the responsibility of QA?

The answer: it's everyone's responsibility and not something you should throw over the wall to your QA department (if you're lucky enough to have one). This post attempts to cut through the noise and showcase best practices that will help you craft better automated tests.

Defining Automated Testing

When I talk about automated tests, I'm referring to a process that uses tools to execute predefined tests based on predetermined events. This isn't meant to be an article focused on differentiating concepts like unit testing or integration testing—if they are scheduled or triggered based on events (like checking in code to the main branch), these are both automated tests.

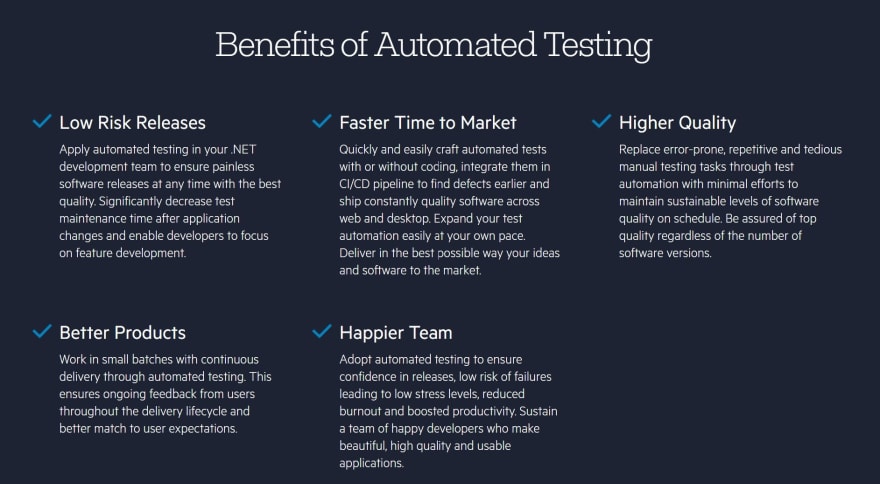

With an automated testing pipeline in place, you can ship lower-risk releases and higher-quality products. Of course, automated testing isn't a magic wand, but done appropriately, it can give your team confidence that you're shipping a quality product.

Decide Which Tests to Automate

It isn't practical to say that you want to automate all your test cases. In a perfect world, would you want to? Sure. This isn't a perfect world: you have lots to get done, and you can't expect to test everything. Also, many of your tests need human judgment and intervention.

How often do you need to repeat your test? Is it something that is rarely encountered and only done a few times? A manual test should be fine. However, if a test needs to be run frequently, it's a good candidate for automation.

Here are some more scenarios to help you identify good candidates for automated tests:

- When tested manually, is it hard to get right? Do folks spend a lot of time setting up conditions to test manually?

- Is it prone to human error?

- Does the test need to run across multiple builds?

- Does the test repeat the same actions over large data sets?

- Does the test cover frequent, high-risk, and hot code paths in your application?
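As one illustration of the checklist above, a test that repeats the same action over many inputs is tedious and error-prone by hand but cheap to automate. The sketch below uses Python's built-in `unittest` module; the `apply_discount` function and the data rows are hypothetical stand-ins, not something from a real codebase.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


# Each row is (price, percent, expected). In practice, these rows
# might be loaded from a CSV file or a database export.
CASES = [
    (100.00, 0, 100.00),
    (100.00, 25, 75.00),
    (19.99, 10, 17.99),
    (50.00, 100, 0.00),
]


class ApplyDiscountTests(unittest.TestCase):
    def test_discount_table(self):
        # subTest reports each failing row separately instead of
        # stopping at the first bad one.
        for price, percent, expected in CASES:
            with self.subTest(price=price, percent=percent):
                self.assertEqual(apply_discount(price, percent), expected)

    def test_rejects_out_of_range_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(100.00, 150)
```

Saved in a file, this can be run with `python -m unittest`. Adding a new scenario is then a one-line change to the data table rather than another round of manual setup.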

Test Early, Often, and Frequently

Your test strategy should be an early consideration and not an afterthought. If you're on a three-week sprint cycle and you're repeatedly rushing to write, fix, or update your tests in the third week, you're headed for trouble. When this happens, teams often rush to get the tests working, and you end up with tests full of inadequate test conditions and messy workarounds.

When you make automated testing an important consideration, you can run tests frequently and detect issues as they arise. In the long run, this will save a lot of time—plus, you'd much rather fix issues early than have an angry customer file a production issue because your team didn't give automated testing the attention it deserved.

Consistently Evaluate Valid and Accurate Results

To that end, your team should always be comfortable knowing your tests are consistent, accurate, and repeatable. Does the team check in code without ensuring that previous testing is still accurate? Saying all the tests pass is not the same as saying that the tests are accurate. When it comes to unit testing, in many automated CI/CD systems, you can integrate build checks to ensure code coverage does not consistently drop whenever new features enter the codebase.
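Most CI/CD systems and coverage tools have their own way to configure this kind of check, so treat the following as a rough sketch of the idea only. The function names, the baseline value, and the half-point tolerance are all assumptions made for illustration.

```python
def coverage_gate(baseline: float, current: float, tolerance: float = 0.5) -> bool:
    """Return True if coverage (in percent) has not dropped by more
    than `tolerance` points compared with the baseline."""
    return current >= baseline - tolerance


def gate_message(baseline: float, current: float, tolerance: float = 0.5) -> str:
    """Build a human-readable line for the build log."""
    status = "PASS" if coverage_gate(baseline, current, tolerance) else "FAIL"
    return (
        f"{status}: coverage {current:.1f}% "
        f"(baseline {baseline:.1f}%, allowed drop {tolerance:.1f})"
    )
```

A build step could call `coverage_gate` with the main branch's coverage as the baseline and fail the build when it returns `False`. The small tolerance keeps the check from blocking a merge over rounding noise.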

If tests are glitchy or unstable, take the time to understand why instead of manually restarting runs over and over because the tests only pass some of the time. If needed, remove these tests from the suite until you can resolve the underlying issues.
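One way to turn "sometimes it works" into evidence is to run a suspect test several times in a row and look at the spread of results. Here is a minimal sketch of that idea; `classify_test` and `make_intermittent_test` are hypothetical helpers, and the simulated failure stands in for a real cause such as a timing dependency or shared state.

```python
from typing import Callable


def classify_test(test_fn: Callable[[], None], runs: int = 10) -> str:
    """Run test_fn repeatedly and label it 'stable-pass',
    'stable-fail', or 'flaky' based on the mix of outcomes."""
    passes = 0
    for _ in range(runs):
        try:
            test_fn()
            passes += 1
        except AssertionError:
            pass  # count as a failure and keep going
    if passes == runs:
        return "stable-pass"
    if passes == 0:
        return "stable-fail"
    return "flaky"


def make_intermittent_test(fail_every: int) -> Callable[[], None]:
    """Build a stand-in test that fails on every `fail_every`-th call."""
    calls = {"count": 0}

    def test() -> None:
        calls["count"] += 1
        assert calls["count"] % fail_every != 0, "simulated intermittent failure"

    return test
```

Here, `classify_test(make_intermittent_test(3))` labels the stand-in test as flaky, because some of the ten runs pass and some fail. A test flagged this way is a candidate for investigation or temporary removal, not for another manual rerun.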

Ensure Quality Test Result Reporting

What good is an automated test suite if you don't have an easy way to detect, discover, and resolve issues? When a test fails, development teams should be alerted immediately. While the team might not know how to fix it right away, they should know where to look, thanks to having clearly named tests and error messages, robust tools, and a solid reporting infrastructure. Developers should then spend their time resolving issues and not going in circles to understand what the issue is and where it lies.
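To make "clearly named tests and error messages" concrete, here is a small sketch using Python's built-in `unittest` module. The `cart_total` function and its values are hypothetical; the point is that the test name and the failure message together say what was called, what came back, and what was expected.

```python
import unittest
from typing import Sequence


def cart_total(prices: Sequence[float], tax_rate: float) -> float:
    """Hypothetical function under test: sum the prices and add tax."""
    return round(sum(prices) * (1 + tax_rate), 2)


class CartTotalTests(unittest.TestCase):
    # The name states the behavior being checked, so a failure in a
    # report is understandable without opening the test file.
    def test_total_includes_tax_for_multiple_items(self):
        prices, tax_rate = [10.00, 5.50], 0.08
        total = cart_total(prices, tax_rate)
        self.assertEqual(
            total,
            16.74,
            msg=(
                f"cart_total({prices}, tax_rate={tax_rate}) returned {total}; "
                "expected 16.74 (15.50 plus 8% tax)"
            ),
        )
```

Compare that with a test called `test1` that fails with a bare `AssertionError`: both detect the same bug, but only one tells the developer where to look.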

Pick the Right Tool

Automated testing can become more manageable with the right tools. So I hope you're sitting down for this: I recommend Telerik Test Studio. With Telerik Test Studio, everyone can drive value from your testing footprint, whether it's developers, QA, or even your pointy-haired bosses.

Developers can use the Test Studio Dev Edition to automate testing of their complex .NET applications right from Visual Studio. QA engineers can use Test Studio Standalone to develop functional UI tests. Finally, when you're done telling your managers, "I'm not slacking, I'm compiling," you can point them to the web-based Executive Dashboard for monitoring test results and building reports.
