Big O notation describes the performance or complexity of an algorithm. It specifically looks at the worst-case scenario to describe the execution time required and the space used by an algorithm. Without a solid foundation of mathematics, Big O notation can be intimidating to learn. It's important, especially for large applications that manipulate large data sets, because inefficient algorithms will have a significant impact on the processing time. Understanding Big O notation is also useful for interviewing since there's a good chance you'll be asked about the runtime complexity of your algorithm at the technical interview. I hope this article will help you break down Big O notation so that you can better analyze your own algorithms.

O(1) - Constant Time

Simply put, O(1) describes an algorithm that will always execute in the same time or space regardless of the size of the input. Examples of constant time operations would be assigning a value to a variable, inserting an element into an array, or retrieving a value from a hash table with a key.

int n = 1000

O(n) - Linear Time

O(n) describes an algorithm whose performance will grow in direct proportion to the size of the input. A common example of a linear time algorithm would be a for loop with a constant time operation inside. Other examples could be finding an item in an unsorted collection or sorting an array with bubble sort.

for (int i =0; i < n; i++) {

// some constant time operation

}

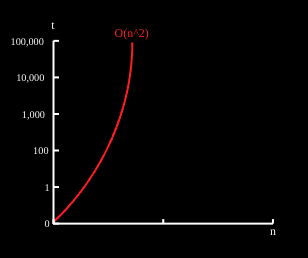

O(n^2) - Quadratic Time

O(n^2) represents an algorithm whose performance is directly proportional to the square of the size of the input. These algorithms often involve nested iterations. Deeper nesting will result in O(n^3), O(n^4), etc. Other examples of quadratic time algorithms could be performing a linear search of a matrix or insertion sort.

for (int i = 0; i < n; i++) {

for (int j = 0; j < n; j++) {

// some constant time operation

}

}

O(Log n) - Logarithmic Time

While constant time algorithms are (asymptotically) the quickest, logarithmic are the next quickest. Logarithmic time grows slower as the input grows. Logarithmic time algorithms are tricky but a simple way to check if a loop is log n is to see if the counting variable doubles instead of incrementing by 1 like in the following example:

for (int i = 0; i < n; i *= 2) {

// some constant time operation

}

O(n Log n) - Linearithmic Time

Linearithmic algorithms are good with a very large data set. Examples would be quick sort, merge sort, and heap sort. In the following example, note that the first loop is linear and the nested loop is logarithmic.

for (int i = 0; i < n; i++ )

for (int j = 1; j < n; j *= 2) {

// some constant time operation

}

}

Conclusion

Understanding Big O notation can help you understand the time and space complexity of an algorithm. For quick reference here is a comparison of the complexities mentioned above. Remember that Big O notation describes the worst case and to strive towards logarithmic or linearithmic time with your own algorithms.

This article is meant to be an introduction to Big O notation or used as a quick reference. For additional information, check out the following resources:

Top comments (1)

How is that possible that for some values LogN > N (last picture)?