Get it? Throwing stuff? Like errors?

For my fourth and final act... I will make this bug, disappear!

Wait... what the hell's a Flask?

CANNOT RUN PROGRAM: CREATEPROCESS ERROR=5 ACCESS IS DENIED

ATTRIBUTE ERROR: NAVIGABLESTRING OBJECT HAS NO ATTRIBUTE "FIND_ALL"

CANNOT RUN PROGRAM: CREATEPROCESS ERROR=5 ACCESS IS DENIED

ATTRIBUTE ERROR: NAVIGABLESTRING OBJECT HAS NO ATTRIBUTE "TEXT"

CANNOT RUN PROGRAM: CREATEPROCESS ERROR=5 ACCESS IS DENIED

ATTRIBUTE ERROR: NAVIGABLESTRING OBJECT HAS NO ATTRIBUTE "CONTENTS"

⢀⣠⣾⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⠀⠀⠀⠀⣠⣤⣶⣶

⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⠀⠀⠀⢰⣿⣿⣿⣿

⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣧⣀⣀⣾⣿⣿⣿⣿

⣿⣿⣿⣿⣿⡏⠉⠛⢿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⡿⣿

⣿⣿⣿⣿⣿⣿⠀⠀⠀⠈⠛⢿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⠿⠛⠉⠁⠀⣿

⣿⣿⣿⣿⣿⣿⣧⡀⠀⠀⠀⠀⠙⠿⠿⠿⠻⠿⠿⠟⠿⠛⠉⠀⠀⠀⠀⠀⣸⣿

⣿⣿⣿⣿⣿⣿⣿⣷⣄⠀⡀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⢀⣴⣿⣿

⣿⣿⣿⣿⣿⣿⣿⣿⣿⠏⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠠⣴⣿⣿⣿⣿

⣿⣿⣿⣿⣿⣿⣿⣿⡟⠀⠀⢰⣹⡆⠀⠀⠀⠀⠀⠀⣭⣷⠀⠀⠀⠸⣿⣿⣿⣿

⣿⣿⣿⣿⣿⣿⣿⣿⠃⠀⠀⠈⠉⠀⠀⠤⠄⠀⠀⠀⠉⠁⠀⠀⠀⠀⢿⣿⣿⣿

⣿⣿⣿⣿⣿⣿⣿⣿⢾⣿⣷⠀⠀⠀⠀⡠⠤⢄⠀⠀⠀⠠⣿⣿⣷⠀⢸⣿⣿⣿

⣿⣿⣿⣿⣿⣿⣿⣿⡀⠉⠀⠀⠀⠀⠀⢄⠀⢀⠀⠀⠀⠀⠉⠉⠁⠀⠀⣿⣿⣿

⣿⣿⣿⣿⣿⣿⣿⣿⣧⠀⠀⠀⠀⠀⠀⠀⠈⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⢹⣿⣿

⣿⣿⣿⣿⣿⣿⣿⣿⣿⠃⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⢸⣿⣿

So, this will be my fourth and final pull request for ODS600's release 0.2 assignment (and also Hacktoberfest). Let's jump right into it.

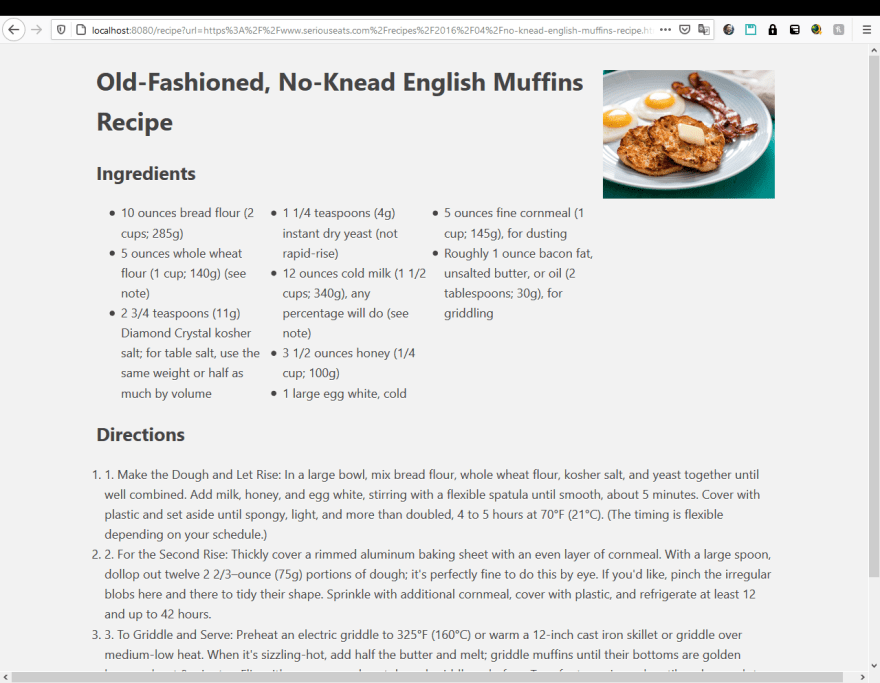

Plain Old Recipe is a Flask-based website (lovingly made with markup based on https://evenbettermotherfucking.website/) that I use occasionally that solves a really interesting problem I never knew I had until I started noticing it. Have you ever been on a recipe site and wondered why the hell there's so much extra text or a story? It's actually related to search engine optimization. (It's actually really interesting) But listen Delish, I don't care about your SEO ranking or how super-fantabulous this recipe will make my nonexistent kids love me more, just give me the fraking recipe- I have people to disappoint doncya'know.

tl;drs aside, I had heard through the grapevine that it's actually open source. Neat. I took a peek and stumbled upon an issue that jumped at me: A particular recipe website was generating two sets of ordered list numbers. Interestingly the author was already talking with someone else about how to fix this issue and mentioned that the issue might be caused by either the website or the underlying recipe scraping library (which I didn't even know was a thing. From the poking around I did, it actually seems like a really well designed library.)

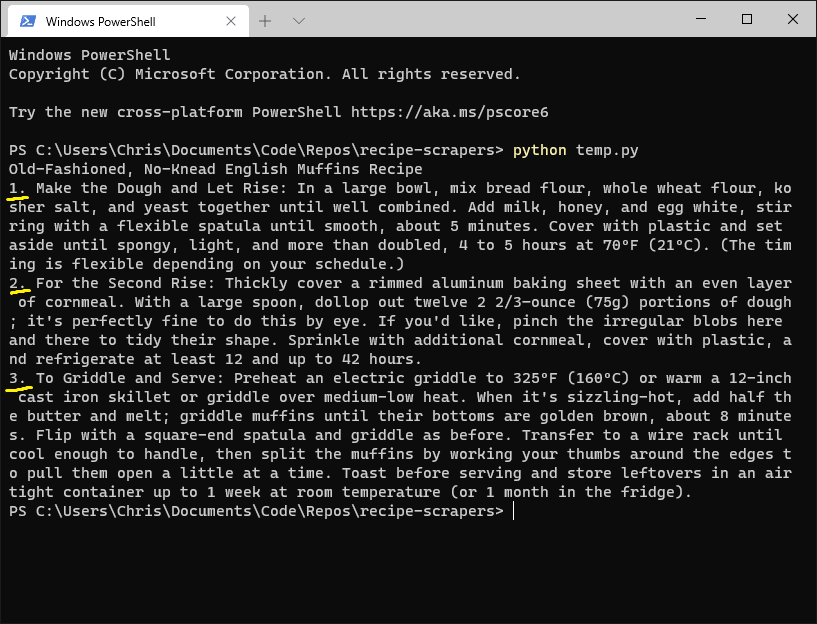

My first approach (titled: BLAME THE DEPENDENCY) was to see if this library was causing the issue. From there I'd know whether to fix the library or to fix Plain Old Recipe. I installed the library and tried to run it, and along the way I actually learned a bit about how unit testing is done in Python, as the library's install script runs a test coverage suite. I also discovered that my Python install somehow broke overnight and needed to be fixed (or at least that PyCharm had become very, very confused). With Python reinstalled I made a simple script to scrape the recipe in question. Interestingly, the library scraped it just fine. Time for a second approach (titled: BLAME THE WEBSITE). This library is slick though, and I'd love to try to remake it using another stack sometime.

My first step was now to learn how to set up a Flask server. I poked through the documentation to see if the author or anyone else had mentioned how to run it locally. His deploy.sh file deploys it on gcloud? Weird flex but okay. Anyway, let's not do that. After a bit of digging into Flask (something I've always wanted to learn) it turns out all I had to do was: clone, install the requirements, and run main.py. Great, my local copy was up and running, and it reproduced the issue as well. Now it's time to get my hands dirty.

From here I tried to update the recipe_scrapers dependency to its latest version (which coincidentally had just been released a few hours earlier). I was hoping this would be all that's required; maybe the library had the issue and resolved it? Thankfully it turns out no, it did not, so I don't have to find another issue to work on. From my first PR I learned that I should peek around things before trying to bash my head against something hoping it works, so that's what I did. Turns out the website grabs the URL and checks for a local scraping alternative. If one is present it'll run that parser, and if not, it'll rely on the recipe_scrapers library. From there I figured the best bet was to just write a parser for the website in question and bypass the library entirely. So I figured out what the HTML template wanted from a recipe object and modelled a parser around that. I also looked at the surrounding parsers and used them as a reference, but a lot of them relied on JSON, which seriouseats.com did not output.
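To make that lookup concrete, here's a rough sketch of the "local parser first, library second" idea. This is not the project's actual code: `LOCAL_PARSERS`, `parse_seriouseats`, `scrape_with_library`, and `get_recipe` are all names I made up for illustration, and the two parser functions are stand-ins that just report which path was taken.

```python
from urllib.parse import urlparse


def parse_seriouseats(url):
    """Hypothetical site-specific parser (a real one would fetch and parse the page)."""
    return {"source": "local parser", "url": url}


def scrape_with_library(url):
    """Hypothetical stand-in for handing the URL to the recipe_scrapers library."""
    return {"source": "library fallback", "url": url}


# Hypothetical registry mapping a bare domain to its hand-written parser.
LOCAL_PARSERS = {
    "seriouseats.com": parse_seriouseats,
}


def get_recipe(url):
    """Use a local parser when one exists for the domain, else fall back."""
    host = urlparse(url).netloc.lower()
    if host.startswith("www."):
        host = host[len("www."):]
    parser = LOCAL_PARSERS.get(host, scrape_with_library)
    return parser(url)


if __name__ == "__main__":
    print(get_recipe("https://www.seriouseats.com/recipes/some-recipe")["source"])
    print(get_recipe("https://example.com/some-recipe")["source"])
```

The nice part of a dictionary lookup like this is that supporting a new site only means adding one entry, without touching the fallback path.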

I started small: I figured out how to grab the title, then the description (which Plain Old Recipe doesn't actually grab), then the image of the food we're making, then the ingredients, and finally the actual directions. The directions were the trickiest thing to figure out how to scrape properly from seriouseats.com. I finished the rough draft of the parser in a few hours, but decided to test it on other random recipes which... broke everything. This is when I discovered that seriouseats.com is kind of a mess. Sometimes it renders extra paragraph tags, sometimes it renders blank lines. Luckily BeautifulSoup4 (a web scraping library used in the project which I have a bit of experience with) is flexible enough to just let me grab the string inside of a div with a specified class, which fortunately for me was always the same. After fixing my base issues, every recipe I tested my parser on worked as expected. A lot of websites try to obfuscate their templates to deter scraping, but I guess that's a problem the recipe industry doesn't typically have to worry about (This is actually a really interesting read.)
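Here's a minimal sketch of that "grab the text inside a div with a known class" trick, assuming BeautifulSoup4 is installed. The sample markup and the `recipe-procedure-text` class name are invented for the example (I'm not claiming that's what seriouseats.com actually uses), and `extract_directions` is a hypothetical helper, not the parser from my PR.

```python
from bs4 import BeautifulSoup

# Made-up markup imitating the mess: one clean step, one step with blank
# lines and a stray empty <p>, and one step that is empty altogether.
SAMPLE_HTML = """
<ol>
  <li><div class="recipe-procedure-text"><p>Whisk the eggs.</p></div></li>
  <li>
    <div class="recipe-procedure-text">

      Fold in the flour.
      <p></p>
    </div>
  </li>
  <li><div class="recipe-procedure-text"><p>   </p></div></li>
</ol>
"""


def extract_directions(html, step_class="recipe-procedure-text"):
    """Return the non-empty text of every div carrying the given class."""
    soup = BeautifulSoup(html, "html.parser")
    steps = []
    for div in soup.find_all("div", class_=step_class):
        # get_text with strip=True trims each string and drops empty ones,
        # so extra <p> tags and blank lines don't leak into the result.
        text = div.get_text(" ", strip=True)
        if text:
            steps.append(text)
    return steps


if __name__ == "__main__":
    for number, step in enumerate(extract_directions(SAMPLE_HTML), start=1):
        print(number, step)
```

Calling `find_all` on the soup and then `get_text` on each div also sidesteps those "NavigableString object has no attribute" errors, which tend to show up when you walk a tag's children and hit a bare string where you expected another tag.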

Funnily enough, I actually spent about 20 minutes wondering why a particular recipe kept throwing errors... turns out it was just an article I had mistaken for an actual recipe...

I submitted my PR for the author's consideration. Hopefully he's okay with how I did things.

I think I'll also submit some documentation on how to run the website locally. It was fun to learn a bit about Flask, it seems like a really neat technology. I'm not sure how relevant it'll be though, I surmise that JS/TS will continue to eat... well, everything that the market has to offer as the world pivots towards everything being a web app.

But that about does it for my Hacktoberfest 2020 adventure (even though two of the repos I contributed to didn't count towards the 4 PRs necessary for a shirt.) I had a lot of fun and learned a ton from the various projects I worked on. I'll be making a final Hacktoberfest blog post detailing everything shortly.

Man, it's nice to be able to just work on this stuff without having other course work.
