This is my first #cloudguruchallenge. I took the challenge to learn more about ElastiCache and Python. So, let's get into it!

What is caching?

Caching is a tool to store and access data very quickly. With caching, you have the ability to mitigate unpredictable workload spikes, scale data sources, decrease network costs, and contribute to application availability by continuing to serve data in the event of failures.
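
To make the idea concrete, here is a minimal sketch of a cache with a time to live (TTL) and a cache-aside read in plain Python. The names `TTLCache` and `get_or_compute` are made up for illustration; a real deployment would use Redis rather than a dictionary.

```python
import time


class TTLCache:
    """Tiny in-memory cache whose entries expire after a fixed number of seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._store = {}    # key -> (expires_at, value)

    def get(self, key):
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._store[key]  # entry is stale, drop it
            return None
        return value

    def set(self, key, value):
        self._store[key] = (self.clock() + self.ttl_seconds, value)


def get_or_compute(cache, key, compute):
    """Cache-aside read: return the cached value, or compute and store it on a miss."""
    value = cache.get(key)
    if value is None:
        value = compute()
        cache.set(key, value)
    return value
```

The slow `compute` function only runs on a miss or after the entry expires, which is where the speed-up comes from.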

Redis is an open-source, in-memory data structure store that is often used as a cache. From an operational point of view, Redis can be challenging to deploy, monitor, and scale on your own. Enter Amazon ElastiCache for Redis. Amazon ElastiCache is a managed caching service compatible with both Redis and Memcached. It offers a fully managed platform that makes it easy to deploy, manage, and scale a high-performance distributed in-memory data store cluster.

What is the challenge?

The application was made intentionally slow. The goal is to improve its performance by implementing a Redis cluster with Amazon ElastiCache and caching the database queries in the Python application. You can find more details about the challenge here.

Approach and findings

Before I started the challenge, I did two hands-on labs hosted on A Cloud Guru. One used a PostgreSQL RDS instance with Redis, and the other used a DynamoDB table with a Memcached cluster and Lambda functions.

With both manual deployments done, I set out to deploy the challenge entirely with Terraform.

I've been learning on my own for a while and was storing "throwaway" passwords in plain text in my GitHub repos when I needed to access a database. I realize that is not a good security practice, so I figured now was a good time to try managing secrets in Terraform so my passwords are not exposed.

Managing secret variables

First, the Terraform state needs to be secure. Regardless of which technique you use to manage your secrets, they still end up in terraform.tfstate in plain text, so the backend that stores the state has to be encrypted. With that in mind, I set S3 as my backend and encrypted it. The key is the path within the bucket where the state file is stored.

    resource "aws_s3_bucket" "terraform_state" {
      bucket = "terraform-state-acgappperf"

      versioning {
        enabled = true
      }

      server_side_encryption_configuration {
        rule {
          apply_server_side_encryption_by_default {
            sse_algorithm = "AES256"
          }
        }
      }
    }

    terraform {
      backend "s3" {
        bucket  = "terraform-state-acgappperf"
        region  = "us-east-1"
        key     = "global/s3/terraform.tfstate"
        encrypt = true
      }
    }
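
To see why the state file matters, the sketch below searches a state-like JSON document for a known secret. The sample state is hypothetical and heavily trimmed, and the helper name `find_plaintext` is made up for illustration; the point is only that resource attributes, passwords included, are stored as ordinary strings.

```python
import json


def find_plaintext(node, secret, path="$"):
    """Return the JSON paths of every string value equal to `secret`."""
    hits = []
    if isinstance(node, dict):
        for key, value in node.items():
            hits.extend(find_plaintext(value, secret, f"{path}.{key}"))
    elif isinstance(node, list):
        for index, value in enumerate(node):
            hits.extend(find_plaintext(value, secret, f"{path}[{index}]"))
    elif node == secret:
        hits.append(path)
    return hits


# Hypothetical, heavily trimmed state document for illustration only.
SAMPLE_STATE = json.loads("""
{
  "version": 4,
  "resources": [
    {
      "type": "aws_db_instance",
      "name": "acg-db",
      "instances": [
        {"attributes": {"username": "appuser", "password": "hunter2"}}
      ]
    }
  ]
}
""")

print(find_plaintext(SAMPLE_STATE, "hunter2"))
# ['$.resources[0].instances[0].attributes.password']
```

Marking a variable as sensitive does not change this, which is why the bucket itself is encrypted.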

I chose to use environment variables. To use this technique, you declare a variable for each secret you wish to pass in. Setting sensitive = true suppresses the values in Terraform's plan and apply output.

    variable "db_username" {
      description = "The username for the DB master user"
      type        = string
      sensitive   = true
    }

    variable "db_password" {
      description = "The password for the DB master user"
      type        = string
      sensitive   = true
    }

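Next, I pass the variables to the Terraform resources that need those secrets and set their values through environment variables. Terraform reads TF_VAR_<name> for a variable named <name>, so the suffix has to match the declared variable name exactly.

    # Set secrets via environment variables
    export TF_VAR_db_username=(the username)
    export TF_VAR_db_password=(the password)

    # When you run Terraform, it'll pick up the secrets automatically
    terraform apply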
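
Because Terraform matches the part after TF_VAR_ against declared variable names, a typo in the suffix means the value is never picked up. The following sketch is a small pre-flight check; the helper name `check_tf_vars` is hypothetical, and in real use you would pass `os.environ` instead of the sample dictionary.

```python
TF_VAR_PREFIX = "TF_VAR_"


def check_tf_vars(declared, environ):
    """Compare declared Terraform variable names against TF_VAR_* entries.

    Returns (missing, unused): declared variables with no matching environment
    variable, and TF_VAR_* names that match no declared variable.
    """
    supplied = {
        name[len(TF_VAR_PREFIX):]
        for name in environ
        if name.startswith(TF_VAR_PREFIX)
    }
    missing = sorted(set(declared) - supplied)
    unused = sorted(supplied - set(declared))
    return missing, unused


declared = ["db_username", "db_password"]
sample_env = {"TF_VAR_username": "x", "TF_VAR_db_password": "y"}
print(check_tf_vars(declared, sample_env))
# (['db_username'], ['username'])
```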

I also created a user-data shell script to install the necessary dependencies, clone the repo, and configure database.ini. I used the template_file data source and a provisioner "file" block to pass the variables through and configure the connection to the Postgres RDS instance.

    data "template_file" "init" {
      template = file("./user-data.sh.tpl")

      vars = {
        DBUSER    = var.db_username
        DBPASS    = var.db_password
        DBNAME    = aws_db_instance.acg-db.name
        DBHOST    = aws_db_instance.acg-db.address
        REDISHOST = aws_elasticache_cluster.acg-redis.cache_nodes[0].address
      }
    }
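
The substitution step can be sketched in Python with `string.Template`, which uses the same `${NAME}` placeholder style for simple values. The template text, hostnames, and credentials below are hypothetical, since the real user-data.sh.tpl is not shown here; only the variable names and the [redis] url key come from the code in this post.

```python
import configparser
from string import Template

# Hypothetical template fragment; the real user-data.sh.tpl is not shown in the post.
DATABASE_INI_TEMPLATE = Template("""\
[postgresql]
host=${DBHOST}
database=${DBNAME}
user=${DBUSER}
password=${DBPASS}

[redis]
url=redis://${REDISHOST}:6379
""")


def render_database_ini(values):
    """Fill in the placeholders; raises KeyError if a value is missing."""
    return DATABASE_INI_TEMPLATE.substitute(values)


# Made-up values standing in for the Terraform variables and resource attributes.
rendered = render_database_ini({
    "DBHOST": "db.example.internal",
    "DBNAME": "appdb",
    "DBUSER": "appuser",
    "DBPASS": "not-a-real-password",
    "REDISHOST": "cache.example.internal",
})

# Parse the result the way an app would read database.ini.
parser = configparser.ConfigParser()
parser.read_string(rendered)
print(parser["redis"]["url"])
```

Rendering the file locally like this is a quick way to catch a missing or misspelled placeholder before the instance boots.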

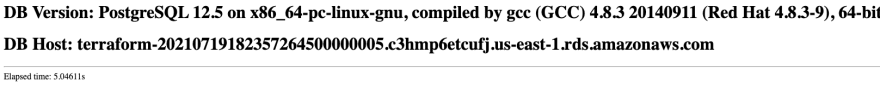

I had some trouble connecting to the database initially and had to edit my security groups. Once that was sorted and everything was up, it was time to check the load times, which were about 5 seconds.

Configuring app to use Redis

Editing the Python code to use Redis was harder than I thought it would be, mainly because of indentation errors and my being new to Python. To help, I worked in VS Code instead of the vi editor on the command line.

Updated code:

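    import redis  # redis-py client

    # config() and connect() are helpers defined elsewhere in the app


    def fetch(sql):
        ttl = 10  # Time to live in seconds
        try:
            params = config(section='redis')
            cache = redis.Redis.from_url(params['url'])
            result = cache.get(sql)

            if result:
                # Cache hit: redis-py returns bytes unless decode_responses=True
                print('Redis result')
                return result
            else:
                # connect to database listed in database.ini
                conn = connect()
                cur = conn.cursor()
                cur.execute(sql)
                # fetch one row
                result = cur.fetchone()
                print('Closing connection to database...')
                cur.close()
                conn.close()

                # cache result
                cache.setex(sql, ttl, ''.join(result))
                return result
        except Exception as error:
            # The snippet in the post ends before its except clause;
            # this minimal handler is an assumption added so the function parses.
            print(error)
            return None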
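
One thing to watch in the function above: a cache hit returns what Redis stored (bytes by default in redis-py), while a miss returns the row from the database cursor. The sketch below is one possible way to keep both paths consistent by storing the row as JSON. It is not the code from the repo; `FakeCache`, `fetch_cached`, the query text, and the sample row are all hypothetical, and it assumes the row values are JSON-serializable.

```python
import json


class FakeCache:
    """In-memory stand-in exposing the two redis-py calls used here (get, setex)."""

    def __init__(self):
        self.data = {}

    def get(self, key):
        return self.data.get(key)

    def setex(self, key, ttl, value):
        # A real Redis server would also expire the key after `ttl` seconds.
        self.data[key] = value.encode() if isinstance(value, str) else value


def fetch_cached(sql, cache, run_query, ttl=10):
    """Return a row as a list, from the cache if present, otherwise from the database."""
    cached = cache.get(sql)
    if cached is not None:
        return json.loads(cached)  # bytes or str both decode to a list
    row = run_query(sql)
    if row is None:
        return None
    cache.setex(sql, ttl, json.dumps(list(row)))
    return list(row)


queries = []


def run_query(sql):
    """Stand-in for the database call; records how often it is hit."""
    queries.append(sql)
    return ("PostgreSQL 12.5",)


cache = FakeCache()
first = fetch_cached("SELECT version();", cache, run_query)
second = fetch_cached("SELECT version();", cache, run_query)
print(first == second, len(queries))  # True 1
```

Swapping `FakeCache()` for a `redis.Redis` client should work without other changes, since only `get` and `setex` are called.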

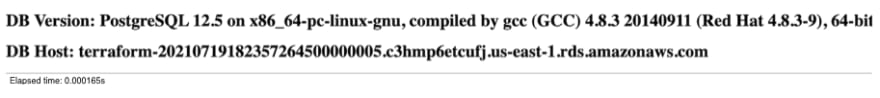

Now the load time is down to milliseconds.

Final thoughts

Overall, this challenge was indeed a challenge. Next time I will look at different ways to manage secrets and use modules so I don't have to write everything out, but it was nice to deploy an ElastiCache cluster via Terraform for the first time. I also enjoyed working with Python. You can find my GitHub repo here.
