Introduction

When you run several AKS / Kubernetes clusters in production, keeping your applications, their dependencies, Kubernetes itself and the worker nodes up to date turns into a time-consuming task, sometimes for more than one person. For the worker nodes that form your AKS cluster, Microsoft helps you by applying the latest OS / security updates on a nightly basis. Great, but there is a downside: when a worker node needs a restart to fully apply these patches, Microsoft will not reboot that machine. The reason is obvious: they simply don’t know when it is best to do so. So you have to take care of this on your own.

Luckily, there is a project from Weaveworks called “Kubernetes Reboot Daemon”, or kured, that lets you define a timeslot in which it is okay to automatically pull a node from your cluster and reboot it.

Under the hood, kured adds a DaemonSet to your cluster that watches for a reboot sentinel, e.g. the file /var/run/reboot-required. If that file is present on a node, kured “cordons and drains” that node, initiates a reboot and uncordons it afterwards. Of course, there are situations where you want to suppress that behavior, and fortunately kured gives us a few options to do so (Prometheus alerts or the presence of specific pods on a node…).
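To make the cycle more tangible, here is a minimal sketch of the same steps as you might plan them by hand for a single node. It is not kured's own code: the function names are made up for this post, the drain flags are an assumption, and the commands are only printed, not executed.

```shell
# Sketch only: mirrors the steps described above, it is NOT kured's source code.
# The function names are hypothetical; the drain flags are an assumption.
SENTINEL="${SENTINEL:-/var/run/reboot-required}"

# Succeeds if the sentinel file exists on this machine.
reboot_required() {
  [ -f "$1" ]
}

# Prints the commands of one reboot cycle instead of running them,
# so the sketch is safe to execute anywhere.
plan_reboot_cycle() {
  node="$1"
  echo "kubectl cordon ${node}"
  echo "kubectl drain ${node} --ignore-daemonsets --force"
  echo "systemctl reboot"
  echo "kubectl uncordon ${node}"
}

if reboot_required "$SENTINEL"; then
  plan_reboot_cycle "$(uname -n)"
else
  echo "Reboot not required"
fi
```

In the real daemon, a cluster-wide lock (visible in the logs further down) controls which node may reboot; the sketch leaves that part out.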

So, let’s give it a try…

Installation of kured

I assume you already have a running Kubernetes cluster, so we start by installing kured.

$ kubectl apply -f https://github.com/weaveworks/kured/releases/download/1.2.0/kured-1.2.0-dockerhub.yaml

clusterrole.rbac.authorization.k8s.io/kured created

clusterrolebinding.rbac.authorization.k8s.io/kured created

role.rbac.authorization.k8s.io/kured created

rolebinding.rbac.authorization.k8s.io/kured created

serviceaccount/kured created

daemonset.apps/kured created

Let’s have a look at what has been installed.

$ kubectl get pods -n kube-system -o wide | grep kured

kured-5rd66 1/1 Running 0 4m18s 10.244.1.6 aks-npstandard-11778863-vmss000001 <none> <none>

kured-g9nhc 1/1 Running 0 4m20s 10.244.2.5 aks-npstandard-11778863-vmss000000 <none> <none>

kured-vfzjk 1/1 Running 0 4m20s 10.244.0.10 aks-npstandard-11778863-vmss000002 <none> <none>

As you can see, we now have three kured pods running.
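If you prefer a scripted check over reading the pod list, the DaemonSet status can be compared directly. This is a small sketch; the function name is made up, and it assumes the DaemonSet is called kured and lives in kube-system, as in the output above.

```shell
# Sketch: succeeds when every scheduled kured pod reports ready.
# Assumes the DaemonSet "kured" in namespace "kube-system" (see install output).
kured_rollout_complete() {
  ready="$(kubectl get daemonset kured --namespace kube-system \
    --output jsonpath='{.status.numberReady}')"
  desired="$(kubectl get daemonset kured --namespace kube-system \
    --output jsonpath='{.status.desiredNumberScheduled}')"
  echo "kured pods ready: ${ready}/${desired}"
  [ "$ready" = "$desired" ]
}
```

Call kured_rollout_complete after the installation; a non-zero exit code means at least one node has no ready kured pod yet.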

Test kured

To test the installation, we simply simulate the “node reboot required” state by creating the corresponding file on one of the worker nodes. For that, we need to access a node by ssh. Just follow the official documentation on docs.microsoft.com:

https://docs.microsoft.com/en-us/azure/aks/ssh

Once you have access to a worker node via ssh, create the file via:

$ sudo touch /var/run/reboot-required

Now exit the pod, wait for the kured daemon to pick up the file (it checks for the sentinel every two minutes) and trigger a reboot, and watch the cluster nodes in the meantime:

$ kubectl get nodes -w

NAME STATUS ROLES AGE VERSION

aks-npstandard-11778863-vmss000000 Ready agent 34m v1.15.5

aks-npstandard-11778863-vmss000001 Ready agent 34m v1.15.5

aks-npstandard-11778863-vmss000002 Ready agent 35m v1.15.5

aks-npstandard-11778863-vmss000001 Ready agent 35m v1.15.5

aks-npstandard-11778863-vmss000000 Ready agent 35m v1.15.5

aks-npstandard-11778863-vmss000002 Ready,SchedulingDisabled agent 35m v1.15.5

aks-npstandard-11778863-vmss000002 Ready,SchedulingDisabled agent 35m v1.15.5

aks-npstandard-11778863-vmss000002 Ready,SchedulingDisabled agent 35m v1.15.5

aks-npstandard-11778863-vmss000001 Ready agent 36m v1.15.5

aks-npstandard-11778863-vmss000000 Ready agent 36m v1.15.5

aks-npstandard-11778863-vmss000002 NotReady,SchedulingDisabled agent 36m v1.15.5

aks-npstandard-11778863-vmss000002 NotReady,SchedulingDisabled agent 36m v1.15.5

aks-npstandard-11778863-vmss000002 Ready,SchedulingDisabled agent 36m v1.15.5

aks-npstandard-11778863-vmss000002 Ready,SchedulingDisabled agent 36m v1.15.5

aks-npstandard-11778863-vmss000002 Ready agent 36m v1.15.5

aks-npstandard-11778863-vmss000002 Ready agent 36m v1.15.5

Corresponding output of the kured pod on that particular machine:

$ kubectl logs -n kube-system kured-ngb5t -f

time="2019-12-23T12:39:25Z" level=info msg="Kubernetes Reboot Daemon: 1.2.0"

time="2019-12-23T12:39:25Z" level=info msg="Node ID: aks-npstandard-11778863-vmss000000"

time="2019-12-23T12:39:25Z" level=info msg="Lock Annotation: kube-system/kured:weave.works/kured-node-lock"

time="2019-12-23T12:39:25Z" level=info msg="Reboot Sentinel: /var/run/reboot-required every 2m0s"

time="2019-12-23T12:39:25Z" level=info msg="Blocking Pod Selectors: []"

time="2019-12-23T12:39:30Z" level=info msg="Holding lock"

time="2019-12-23T12:39:30Z" level=info msg="Uncordoning node aks-npstandard-11778863-vmss000000"

time="2019-12-23T12:39:31Z" level=info msg="node/aks-npstandard-11778863-vmss000000 uncordoned" cmd=/usr/bin/kubectl std=out

time="2019-12-23T12:39:31Z" level=info msg="Releasing lock"

time="2019-12-23T12:41:04Z" level=info msg="Reboot not required"

time="2019-12-23T12:43:04Z" level=info msg="Reboot not required"

time="2019-12-23T12:45:04Z" level=info msg="Reboot not required"

time="2019-12-23T12:47:04Z" level=info msg="Reboot not required"

time="2019-12-23T12:49:04Z" level=info msg="Reboot not required"

time="2019-12-23T12:51:04Z" level=info msg="Reboot not required"

time="2019-12-23T12:53:04Z" level=info msg="Reboot required"

time="2019-12-23T12:53:04Z" level=info msg="Acquired reboot lock"

time="2019-12-23T12:53:04Z" level=info msg="Draining node aks-npstandard-11778863-vmss000000"

time="2019-12-23T12:53:06Z" level=info msg="node/aks-npstandard-11778863-vmss000000 cordoned" cmd=/usr/bin/kubectl std=out

time="2019-12-23T12:53:06Z" level=warning msg="WARNING: Deleting pods not managed by ReplicationController, ReplicaSet, Job, DaemonSet or StatefulSet: aks-ssh; Ignoring DaemonSet-managed pods: kube-proxy-7rhfs, kured-ngb5t" cmd=/usr/bin/kubectl std=err

time="2019-12-23T12:53:42Z" level=info msg="pod/aks-ssh evicted" cmd=/usr/bin/kubectl std=out

time="2019-12-23T12:53:42Z" level=info msg="node/aks-npstandard-11778863-vmss000000 evicted" cmd=/usr/bin/kubectl std=out

time="2019-12-23T12:53:42Z" level=info msg="Commanding reboot"

time="2019-12-23T12:53:42Z" level=info msg="Waiting for reboot"

...

...

<AFTER_THE_REBOOT>

...

...

time="2019-12-23T12:54:15Z" level=info msg="Kubernetes Reboot Daemon: 1.2.0"

time="2019-12-23T12:54:15Z" level=info msg="Node ID: aks-npstandard-11778863-vmss000000"

time="2019-12-23T12:54:15Z" level=info msg="Lock Annotation: kube-system/kured:weave.works/kured-node-lock"

time="2019-12-23T12:54:15Z" level=info msg="Reboot Sentinel: /var/run/reboot-required every 2m0s"

time="2019-12-23T12:54:15Z" level=info msg="Blocking Pod Selectors: []"

time="2019-12-23T12:54:21Z" level=info msg="Holding lock"

time="2019-12-23T12:54:21Z" level=info msg="Uncordoning node aks-npstandard-11778863-vmss000000"

time="2019-12-23T12:54:22Z" level=info msg="node/aks-npstandard-11778863-vmss000000 uncordoned" cmd=/usr/bin/kubectl std=out

time="2019-12-23T12:54:22Z" level=info msg="Releasing lock"

As you can see, the node has been cordoned (SchedulingDisabled) and its pods drained. It has then been successfully rebooted, uncordoned afterwards and is now ready to run pods again.

Customize kured Installation / Best Practices

Reboot only on certain days/hours

Of course, it is not always a good option to reboot your worker nodes during “office hours”. When you want to limit the timeslot where kured is allowed to reboot your machines, you can make use of the following parameters during the installation:

- reboot-days – the days kured is allowed to reboot a machine

- start-time – reboot is possible after specified time

- end-time – reboot is possible before specified time

- time-zone – timezone for start-time/end-time
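One way to set these parameters is to pass them as flags on the kured container command in the DaemonSet manifest before applying it. The sketch below only renders such a command list. The days, times and time zone are example values, the helper function is made up for this post, and /usr/bin/kured as the container binary is an assumption you should check against the manifest you downloaded.

```shell
# Sketch: renders the "command" list for the kured container.
# Assumption: the container runs /usr/bin/kured and takes --flag=value arguments.
render_kured_command() {
  printf 'command:\n'
  printf '  - /usr/bin/kured\n'
  for flag in "$@"; do
    printf '  - %s\n' "$flag"
  done
}

# Example values only: allow reboots on weekends between 2am and 5am, Berlin time.
render_kured_command \
  --reboot-days=sat,sun \
  --start-time=2am \
  --end-time=5am \
  --time-zone=Europe/Berlin
```

The printed block can be merged into the container spec of the DaemonSet in the downloaded YAML file, which you then apply with kubectl apply -f as before.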

Skip rebooting when certain pods are on a node

Another option that is very useful for production workloads is the possibility to skip a reboot when certain pods are present on a node. The reason could be that the service is critical to your application and therefore pretty “expensive” when not available. You may want to supervise the reboot of such a node yourself, so that you can intervene quickly if something goes wrong.

As always in the Kubernetes environment, you can achieve this by using label selectors for kured – an option set during installation called blocking-pod-selector.
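Before relying on a selector, it can be useful to preview which pods on a node it actually matches. The following sketch does that with plain kubectl; the function name and the example label app=critical are made up for illustration.

```shell
# Sketch: lists the pods on NODE that match SELECTOR, i.e. the pods that
# would keep kured from rebooting that node if SELECTOR were used as
# blocking-pod-selector. Function name and labels are hypothetical.
blocking_pods() {
  node="$1"
  selector="$2"
  kubectl get pods --all-namespaces \
    --selector "$selector" \
    --field-selector "spec.nodeName=${node}" \
    --output name
}
```

For example, blocking_pods aks-npstandard-11778863-vmss000000 app=critical prints one line per matching pod, and an empty result means nothing would block the reboot of that node.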

Notify via WebHook

kured also offers the possibility to call a Slack webhook when nodes are about to be rebooted. We can “misuse” that webhook to trigger our own action, because such a webhook call is just a simple HTTPS POST with a predefined body, e.g.:

{ "text": "Rebooting node aks-npstandard-11778863-vmss000000", "username": "kured" }

To be as flexible as possible, we leverage the 200+ Azure Logic Apps connectors that are currently available to basically do anything we want. In the current sample, we want to receive a Teams notification to a certain team/channel and send a mail to our Kubernetes admins whenever kured triggers an action.

You can find the important parts of the sample Logic App on my GitHub account. Here is a basic overview of it:

What you basically have to do is create an Azure Logic App with an HTTP trigger, parse the JSON body of the POST request and trigger the “Send Email” and “Post a Teams Message” actions. When you save the Logic App for the first time, the webhook endpoint is generated for you. Take that URL and use it as the value for the slack-hook-url parameter during the installation of kured.
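Before pointing kured at the Logic App, you may want to send the same kind of request by hand and see whether the mail and the Teams message arrive. A minimal sketch with curl follows. The function names are made up, the URL is whatever your Logic App generated, and the Content-Type header is an assumption about what the HTTP trigger expects.

```shell
# Sketch: builds the same JSON body as shown above for a given node name.
kured_payload() {
  printf '{ "text": "Rebooting node %s", "username": "kured" }' "$1"
}

# Sends the payload to the webhook URL (first argument, your Logic App endpoint).
# Assumption: the trigger accepts a JSON POST with this Content-Type header.
send_test_notification() {
  url="$1"
  node="$2"
  curl --silent --show-error --fail \
    --request POST \
    --header 'Content-Type: application/json' \
    --data "$(kured_payload "$node")" \
    "$url"
}
```

Run send_test_notification with the generated URL and any node name; if the Logic App run history shows a successful run, the same URL should work as slack-hook-url.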

If you need more information on creating an Azure Logic App, please see the official documentation: https://docs.microsoft.com/en-us/azure/connectors/connectors-native-reqres.

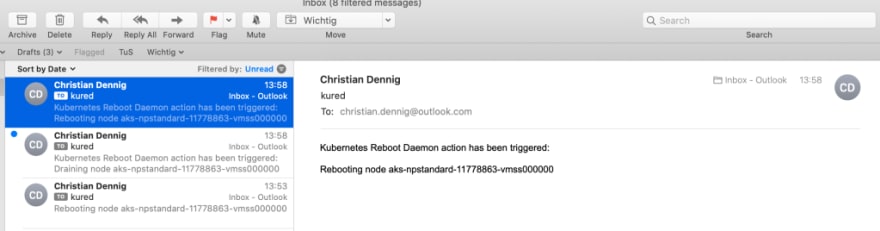

When everything is set up, the Teams notifications and emails you receive will look like this:

Wrap-Up

In this sample, we got to know the Kubernetes Reboot Daemon, which helps you keep your AKS cluster up to date: you simply specify a timeslot in which the daemon is allowed to reboot your cluster/worker nodes so that security patches to the underlying OS are fully applied. We also saw how you can make use of the “Slack” webhook feature to basically do anything you want with kured notifications by using Azure Logic Apps.

Tip: if you have a huge cluster, you should think about running multiple DaemonSets where each of them is responsible for certain nodes/nodepools. It is pretty easy to set this up, just by using Kubernetes node affinities.

Top comments (3)

Hey, @cdennig . First of all thanks for this post. There is also an official documentation on how to install kured on AKS: docs.microsoft.com/en-us/azure/aks...

One thing to mention here is that the current chart doesn't work with AKS out of the box. The authors used /sbin/systemctl reboot as default reboot command in the chart which doesn't work on Ubuntu nodes. You need to manually overwrite it to /bin/systemctl reboot – which is the application default – to make it work. This is also not covered in the official documentation. I honestly have no idea why the chart's default is using /sbin...

Thank you for pointing out...btw, there are now built-in tools in AKS where you can automate that kind of tasks even without kured. You can use the cluster auto-upgrade feature (updated OS images are provided once a week) in combination with "planned maintenance windows" (currently still preview).

docs.microsoft.com/en-us/azure/aks...

docs.microsoft.com/en-us/azure/aks...

Apparently since writing this blog post ssh instructions for Linux nodes have changed. Following instructions in docs.microsoft.com/en-us/azure/aks... and then executing command (touch /var/run/reboot-required) will not trigger Kured reboot action.