A few days ago, I was working with SQS in Go. It was quite tricky, and stressful enough for me: I was stuck for 5 days just trying to make my consumer work well in EKS.

To give some context, SQS stands for Simple Queue Service. It is a message queue service provided by AWS. For more details, see the official SQS page from AWS.

To make it short, assume that I have an application that will need to consume a lot of messages from SQS.

We can simply use the AWS SDK for Go to consume SQS messages. But the flow is quite different from what I have experienced with Google Pub/Sub. GCP provides a complete SDK that already has a function for long-running consumption/streaming of messages from Pub/Sub. With AWS, and SQS in particular, we need to run a long-lived loop that makes an HTTP call to pull the queued messages.

So what we did was basically copy what this awesome article does, “SQS Consumer Design: Achieving High Scalability while managing concurrency in Go”.

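    // Consume starts workerPool goroutines that poll the queue concurrently.
    func (c *consumer) Consume() {
        for w := 1; w <= c.workerPool; w++ {
            go c.worker(w)
        }
    }

    // worker polls SQS forever and handles each received batch concurrently.
    func (c *consumer) worker(id int) {
        for {
            output, err := retrieveSQSMessages(c.QueueURL, maxMessages)
            if err != nil {
                continue
            }

            var wg sync.WaitGroup
            for _, msg := range output.Messages {
                wg.Add(1)
                go func(m *message) {
                    defer wg.Done()
                    // h is assumed to be the application's message handler.
                    if err := h(m); err != nil {
                        // log error; `continue` is not allowed inside a
                        // func literal, so return and skip the delete
                        return
                    }
                    c.delete(m) // MESSAGE CONSUMED
                }(newMessage(msg))
            }
            // Wait for the whole batch before polling again.
            wg.Wait()
        }
    }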
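
The snippet above depends on helpers that are not shown here. As a minimal sketch of the same worker pattern that compiles with only the standard library, the version below swaps SQS for an in-memory queue. The names `queue`, `fakeQueue`, `runWorker`, and `consume` are hypothetical stand-ins for this illustration, not part of the AWS SDK or of the referenced article, and unlike a real SQS worker the loop stops once the fake queue is drained.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

// queue is a hypothetical stand-in for the SQS calls used above.
type queue interface {
	receive(limit int) ([]string, error) // pull up to limit messages
	delete(msg string)                   // acknowledge a handled message
}

// fakeQueue is an in-memory queue used only for this illustration.
type fakeQueue struct {
	mu      sync.Mutex
	pending []string
	deleted []string
}

var errEmpty = errors.New("queue drained")

func (q *fakeQueue) receive(limit int) ([]string, error) {
	q.mu.Lock()
	defer q.mu.Unlock()
	if len(q.pending) == 0 {
		return nil, errEmpty
	}
	n := limit
	if n > len(q.pending) {
		n = len(q.pending)
	}
	batch := q.pending[:n]
	q.pending = q.pending[n:]
	return batch, nil
}

func (q *fakeQueue) delete(msg string) {
	q.mu.Lock()
	defer q.mu.Unlock()
	q.deleted = append(q.deleted, msg)
}

// runWorker pulls batches and handles every message of a batch concurrently.
// It returns when the queue reports an error (here: drained).
func runWorker(q queue, handle func(string) error) {
	for {
		batch, err := q.receive(10)
		if err != nil {
			return
		}
		var wg sync.WaitGroup
		for _, msg := range batch {
			wg.Add(1)
			go func(m string) {
				defer wg.Done()
				if err := handle(m); err != nil {
					return // not deleted; SQS would redeliver it later
				}
				q.delete(m)
			}(msg)
		}
		wg.Wait() // finish the batch before polling again
	}
}

// consume starts the given number of workers and waits for all of them.
func consume(q queue, workers int, handle func(string) error) {
	var wg sync.WaitGroup
	for w := 0; w < workers; w++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			runWorker(q, handle)
		}()
	}
	wg.Wait()
}

func main() {
	q := &fakeQueue{pending: []string{"a", "b", "bad", "c"}}
	consume(q, 2, func(m string) error {
		if m == "bad" {
			return errors.New("handler failed")
		}
		return nil
	})
	fmt.Println(len(q.deleted)) // prints 3: "bad" is never deleted
}
```

The point of the sketch is the ordering: a message is deleted only after its handler succeeds, and each worker waits for its whole batch before pulling the next one.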

Yep, I just copied his code, because it already looks good and uses the worker pattern. So why not just use it, right?

TL;DR: I now had 2 problems:

- Chain Credentials Issue

- Timeout Error when Consuming Message from SQS

Problem Statement

Chain Credentials

Our problem was not with the workers themselves. It began when we followed the SDK introduction.

The thing is, the default SDK tries its authentication sources in a sequential order. If you go to the page “Configuring the AWS SDK for Go”, you will find the order of the credentials chain.

From that documentation, we learn that the SDK looks for the ENV keys first. If they don't exist, it looks at the shared credentials file. And if the credentials file doesn't exist either, it looks for the IAM role on EC2, and so on.

So here is the problem in our use case. With its default configuration, the SDK loads the ENV variables first and, if they don't exist, falls back to credentials read from a file, which in our case can be something like the token file named by AWS_WEB_IDENTITY_TOKEN_FILE. In a local workspace, it looks up the AWS credentials file located at ~/.aws/credentials (Mac/Linux) (for more info, you can read here).

This became a problem for us, since the project will be used not only by us but possibly by engineers on other teams. We were afraid that engineers who already have AWS credentials configured locally in ~/.aws/credentials might run the application on their machines and unknowingly use production-access credentials from that file.

So, what we really wanted was:

- For local development, we use ENV variables.

- On staging and production, we use an IAM role.

- Avoid the shared credentials file in the first place, so that other engineers cannot accidentally use a production-access credentials file.

First Attempt Solution

Our first solution was to look for the IAM role first; if it exists, we use it. If it doesn't, our application looks up the ENV keys, AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY.

So we customized our credentials chain accordingly.

In our code, the credProviders list specifies the order of the providers in the credentials chain: first it looks up the instance IAM role, and then the ENV provider. So we basically removed authentication via the shared credentials file. Even when engineers have AWS credentials configured on their PC in ~/.aws/credentials, it is still safe, since the SDK only looks for the IAM role and the ENV keys.
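
To make that ordering concrete, here is a minimal standard-library sketch of a provider chain. It is a model of the idea, not our actual code and not the SDK's API: `creds`, `provider`, `chain`, `fakeRoleProvider`, and `envProvider` are hypothetical names, and the IAM role lookup is faked. In the AWS SDK for Go v1, the same ordering would roughly correspond to `credentials.NewChainCredentials` given an `ec2rolecreds.EC2RoleProvider` followed by a `credentials.EnvProvider`.

```go
package main

import (
	"errors"
	"fmt"
)

// creds is a simplified credential value; Source records which provider won.
type creds struct {
	AccessKeyID     string
	SecretAccessKey string
	Source          string
}

// provider is a hypothetical stand-in for an SDK credential provider.
type provider func() (creds, error)

var errNoCreds = errors.New("no credentials found")

// chain tries each provider in order and returns the first success.
func chain(providers ...provider) (creds, error) {
	for _, p := range providers {
		if c, err := p(); err == nil {
			return c, nil
		}
	}
	return creds{}, errNoCreds
}

// fakeRoleProvider simulates the instance IAM role lookup (faked here).
func fakeRoleProvider(available bool) provider {
	return func() (creds, error) {
		if !available {
			return creds{}, errNoCreds
		}
		return creds{AccessKeyID: "role-key", SecretAccessKey: "role-secret", Source: "iam-role"}, nil
	}
}

// envProvider reads the two standard AWS variables through getenv.
func envProvider(getenv func(string) string) provider {
	return func() (creds, error) {
		id := getenv("AWS_ACCESS_KEY_ID")
		secret := getenv("AWS_SECRET_ACCESS_KEY")
		if id == "" || secret == "" {
			return creds{}, errNoCreds
		}
		return creds{AccessKeyID: id, SecretAccessKey: secret, Source: "env"}, nil
	}
}

func main() {
	env := map[string]string{
		"AWS_ACCESS_KEY_ID":     "local-key",
		"AWS_SECRET_ACCESS_KEY": "local-secret",
	}
	getenv := func(k string) string { return env[k] }

	// No file-based provider is in the chain, so ~/.aws/credentials
	// is never consulted.
	c, err := chain(fakeRoleProvider(false), envProvider(getenv))
	fmt.Println(c.Source, err) // prints: env <nil>
}
```

Passing the environment lookup as a function keeps the sketch deterministic; in a real program it would simply be `os.Getenv`.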

The Issue

After building the custom credentials chain, we found another problem. It ran well on an EC2 instance, but not in our EKS cluster. In short, our credentials chain only worked at the EC2 instance level. In EKS, it would only work if we granted the IAM role at the node level.

For security reasons, in EKS we want to use the IAM role at the service account (pod) level, not at the node level, as described in “Introducing fine-grained IAM roles for service accounts”.

So it was obvious that our custom credentials chain did not work well for EKS, and we needed to change the chain.

Final Solutions

To fix it, we sat down with our infra engineers to discuss and debug the application. It took a whole day and many attempts, and we finally decided to handle the choice logically in our application.

It turned out that, to make it work in EKS, we had to enable the shared credentials file, because in EKS we use AWS_WEB_IDENTITY_TOKEN_FILE, based on this article.

So to use the web identity token file, we had to enable file-based credentials. That is what we wanted to avoid in the first place, but for the sake of working well in EKS, we decided to enable chain authentication with the shared credentials file.

But to keep an engineer's local shared credentials file, ~/.aws/credentials, from being used accidentally, we decided to handle it logically in the application (statically written in code).

We use an ENV variable APP_ENV to check if the environment is local or not.

    // IsLocal returns true if APP_ENV is not one of the three
    // deployed environments checked below.
    func IsLocal() bool {
        envLevel := MustHaveEnv("APP_ENV")
        return envLevel != "production" &&
            envLevel != "staging" &&
            envLevel != "integration"
    }

So whatever the value of APP_ENV, if it is not production, staging, or integration, we treat it as local.

In our session setup, if the environment is not local (if !IsLocal()), we use the shared config file. If it is local, we use the ENV keys, and they are required, so we avoid accidentally using production-access credentials from an engineer's local ~/.aws/credentials.
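
The decision can be sketched with the standard library alone. This is an illustration under assumptions, not our real session code: `isLocal` takes the APP_ENV value as an argument instead of calling MustHaveEnv, and `credentialSource` with its "shared-config" and "env" labels is a hypothetical helper. With the AWS SDK for Go v1, the non-local branch would likely map to `session.NewSessionWithOptions` with `SharedConfigState: session.SharedConfigEnable`, and the local branch to `credentials.NewEnvCredentials()`; that mapping is an assumption here.

```go
package main

import (
	"errors"
	"fmt"
)

var errMissingEnvKeys = errors.New("AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY are required locally")

// isLocal mirrors IsLocal above but takes the APP_ENV value as an argument.
func isLocal(appEnv string) bool {
	return appEnv != "production" && appEnv != "staging" && appEnv != "integration"
}

// credentialSource reports which credential source the app should use.
// The returned labels are hypothetical and exist only for this sketch.
func credentialSource(appEnv string, getenv func(string) string) (string, error) {
	if !isLocal(appEnv) {
		// Deployed environments: allow the SDK's file-based configuration,
		// which is what the pod-level IAM role needs in EKS.
		return "shared-config", nil
	}
	// Local: the ENV keys are mandatory, so ~/.aws/credentials is never a fallback.
	if getenv("AWS_ACCESS_KEY_ID") == "" || getenv("AWS_SECRET_ACCESS_KEY") == "" {
		return "", errMissingEnvKeys
	}
	return "env", nil
}

func main() {
	noKeys := func(string) string { return "" }

	src, err := credentialSource("production", noKeys)
	fmt.Println(src, err) // prints: shared-config <nil>

	_, err = credentialSource("dev", noKeys)
	fmt.Println(err != nil) // prints: true (local runs fail fast without ENV keys)
}
```

Failing fast in the local branch is the design choice that matters: a missing ENV key becomes a startup error instead of a silent fallback to whatever sits in the engineer's home directory.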

With this, we finally solved our SDK credentials problem safely. We force engineers to use ENV keys locally (together with localstack), and we use the shared credentials file in staging and production.

Using Customized HTTP Client

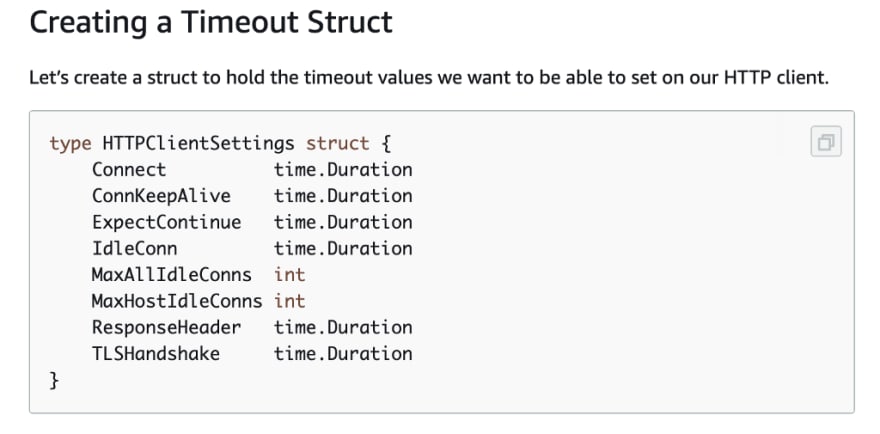

The other problem appeared when we tried to consume the SQS messages. Before going into the details, some context: in the AWS SDK documentation, we found the article “Creating a Custom HTTP Client”, which explains how to customize the HTTP client.

So we just followed those steps and customized our HTTP client: we set the timeout, the maximum idle connections, and so on. Then we ran and tested it locally (thanks to localstack), and everything was fine. It worked perfectly.

The Issue

But then, after we deployed it to our EKS cluster, we got a lot of errors.

time="2020-02-06T07:23:02Z" level=error msg="there was an error reading messages from SQS RequestError: send request failed\ncaused by: Post https://sqs.ap-southeast-2.amazonaws.com/: net/http: request canceled (Client.Timeout exceeded while awaiting headers)"

time="2020-02-06T07:23:02Z" level=error msg="there was an error reading messages from SQS RequestError: send request failed\ncaused by: Post https://sqs.ap-southeast-2.amazonaws.com/: net/http: request canceled (Client.Timeout exceeded while awaiting headers)"

This is not really a big problem in itself, but it is annoying and it inflates our log storage, because the errors are emitted continuously by our long-running loop that pulls messages from SQS over HTTP. It took me 2 days to solve this!!! Two whole days ruined because of this. WTF!

Final Solutions

I was frustrated; no one on my team understood why this was happening. So I decided to ask in the Gophers Slack group.

Thanks to André Eriksson, Zach Easey, and others in the Gophers Slack (https://gophers.slack.com/archives/C029RQSEE/p1580979593499800), who helped me solve this problem.

To summarize, this happened because we had long polling enabled and, at the same time, had also customized our HTTP client.

So the solution is: when consuming messages from SQS with long polling, we shouldn't customize the HTTP client; we use the default one. If we customize the client (its timeout, etc.), the client timeout competes with the long-polling wait time: the client gives up before SQS answers, which is what caused so many request canceled (Client.Timeout exceeded while awaiting headers) errors.

This is quite tricky because, as far as I know, customizing the HTTP client is what I usually do to avoid hanging requests and a bad user experience. But in this case, since we enable long polling for SQS, the right solution is simply to use the default HTTP client, which has no timeout set.
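
The effect is easy to reproduce without SQS. The sketch below uses only the standard library: a local test server holds each request for a while before answering, the way a long poll does when the queue is empty, and a client calls it once with a short timeout and once with none. The helpers `longPoll` and `isTimeout` and the durations are hypothetical values chosen for this demo, much shorter than a real long-polling wait.

```go
package main

import (
	"errors"
	"fmt"
	"net/http"
	"net/http/httptest"
	"net/url"
	"time"
)

const (
	hold         = 300 * time.Millisecond // how long the server holds the request
	shortTimeout = 50 * time.Millisecond  // client timeout shorter than the hold
)

// longPoll sends one GET to a server that waits holdFor before answering,
// using a client whose Timeout is clientTimeout (0 means no timeout,
// which is what http.DefaultClient uses).
func longPoll(holdFor, clientTimeout time.Duration) error {
	srv := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		select {
		case <-time.After(holdFor): // nothing arrived; answer with an empty result
			w.WriteHeader(http.StatusOK)
		case <-r.Context().Done(): // the client gave up first
		}
	}))
	defer srv.Close()

	client := &http.Client{Timeout: clientTimeout}
	resp, err := client.Get(srv.URL)
	if err != nil {
		return err
	}
	return resp.Body.Close()
}

// isTimeout reports whether err is a client-side timeout.
func isTimeout(err error) bool {
	var uerr *url.Error
	return errors.As(err, &uerr) && uerr.Timeout()
}

func main() {
	// Timeout shorter than the long-poll hold: the request is canceled.
	fmt.Println(isTimeout(longPoll(hold, shortTimeout))) // prints: true

	// No client timeout: the request simply waits for the server.
	fmt.Println(longPoll(hold, 0) == nil) // prints: true
}
```

The first call fails with the same kind of "Client.Timeout exceeded while awaiting headers" error we saw in the logs, while the second one just waits. The sketch also suggests that a timeout comfortably longer than the hold would pass, although the fix we applied was simply the default client.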

Conclusions

The takeaways from this whole project, integrating SQS with Go in EKS, are:

- We manually build a custom credentials chain for the AWS SDK to avoid accidentally using a production-access credentials file, ~/.aws/credentials, in an engineer's local workspace.

- To enable the IAM role at the pod level, we need to allow the SDK to use the shared credentials file, which is quite tricky since we disable it in the local workspace.

- We handle the credentials chain logically in code, using an extra ENV key such as APP_ENV.

- If you enable long polling to consume SQS messages, use the default HTTP client and don't customize its timeout, or you will face a lot of timeout errors.
