When embarking on your SRE journey, it can seem daunting to decipher all the acronyms. What are SLOs versus SLAs? What’s the difference between SLIs and SLOs? In this blog post, we’ll cover what SLI, SLO, and SLA mean and how they contribute to your reliability goals.

What’s the difference between SLI, SLO, and SLA?

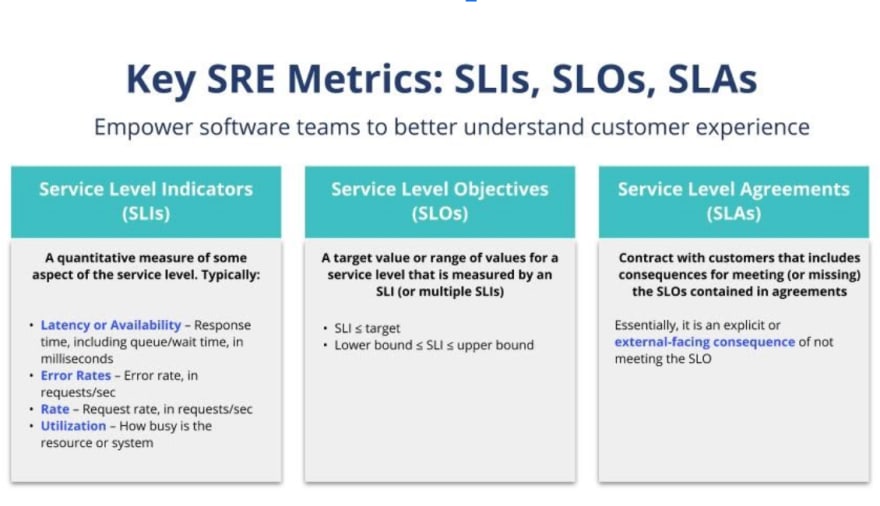

Below are the definitions for each of these terms, each followed by a brief description. The definitions come from Google's Site Reliability Engineering book.

SLI: “a carefully defined quantitative measure of some aspect of the level of service that is provided.”

SLIs are quantitative measures, typically provided through your APM platform. Traditionally, they track either latency, measured as response time in milliseconds (including queue/wait time), or availability, usually measured as the fraction of requests or time for which the service responds successfully. Composite SLIs are a group of SLIs attributed to a larger SLO. These indicators are points on a digital user journey that contribute to customer experience and satisfaction.
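As a rough illustration of the two traditional SLIs, the sketch below computes availability and latency indicators from a list of request records. The `Request` type, its field names, and the rule that any 5xx status counts as a failure are assumptions made for this example; in practice your APM platform would supply these numbers.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Request:
    """Hypothetical request record; field names are illustrative only."""
    status: int        # HTTP status code returned to the user
    latency_ms: float  # response time in ms, including queue/wait time


def availability_sli(requests: List[Request]) -> float:
    """Fraction of requests that did not end in a server error (5xx)."""
    if not requests:
        raise ValueError("need at least one request to compute an SLI")
    good = sum(1 for r in requests if r.status < 500)
    return good / len(requests)


def latency_sli(requests: List[Request], threshold_ms: float) -> float:
    """Fraction of requests answered within threshold_ms."""
    if not requests:
        raise ValueError("need at least one request to compute an SLI")
    fast = sum(1 for r in requests if r.latency_ms <= threshold_ms)
    return fast / len(requests)


sample = [
    Request(200, 120.0),
    Request(200, 950.0),
    Request(503, 30.0),
    Request(200, 1400.0),
]
print(availability_sli(sample))     # 0.75: one of four requests failed
print(latency_sli(sample, 1000.0))  # 0.75: one of four took over 1 second
```

Expressing both indicators as a ratio of good events to total events keeps them on the same 0 to 1 scale, which makes it easier to set targets against them later.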

When developers set up SLIs to measure their service, they set them up in two stages:

- SLIs that will directly impact the customer.

- SLIs that directly influence the health of certain services, meaning their availability or their latency and performance.

Once you have SLIs set up, you move on to your SLOs, which are targets set against your SLIs.

SLO: “a target value or range of values for a service level that is measured by an SLI. A natural structure for SLOs is thus SLI ≤ target, or lower bound ≤ SLI ≤ upper bound.”
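The "natural structure" in this definition can be sketched as a simple bounds check. The function name `meets_slo` and the example values are assumptions for illustration, not part of any particular tool.

```python
from typing import Optional


def meets_slo(sli: float,
              lower: Optional[float] = None,
              upper: Optional[float] = None) -> bool:
    """Check lower <= sli <= upper, where either bound may be omitted."""
    if lower is not None and sli < lower:
        return False
    if upper is not None and sli > upper:
        return False
    return True


# Latency-style SLO: the SLI must stay at or below a target (SLI <= target).
print(meets_slo(850.0, upper=1000.0))  # True

# Availability-style SLO: the SLI must stay at or above a lower bound.
print(meets_slo(0.993, lower=0.995))   # False
```

Whether a bound is an upper or a lower limit depends on the indicator: lower is better for latency, higher is better for availability.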

Service level objectives become the common language that lets teams across a company set guardrails and incentives that drive high levels of service reliability.

Today, many companies operate in a constantly reactive mode: they're reacting to NPS scores, churn, or incidents. This is an expensive, unsustainable use of time and resources, to say nothing of the potentially irrecoverable damage to customer satisfaction and the business. SLOs give you an objective language and measure for prioritizing reliability work, so you can manage service health proactively.

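SLA: “an explicit or implicit contract with your users that includes consequences of meeting (or missing) the SLOs they contain.”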

Service level agreements are set by the business rather than by engineers, SREs, or ops. SLAs kick in when an SLO is missed: they spell out the actions taken when your service fails to meet its targets, and those actions often carry financial or contractual consequences.

How these terms help with reliability: an example case study

Imagine an organization is looking to increase reliability. The company has recently begun investigating expensive SLA breaches and wants to know why its reliability is suffering. This organization breaches its SLA for availability almost every month. As it onboards more customers with SLAs, these expenses can grow if it doesn’t meet its performance guarantees.

This fictitious organization is also dealing with low NPS scores. The team is aware of the problem, but NPS scores are a lagging indicator: by the time they drop, customers have already begun to churn. The team meets to discuss what needs to be done. The first step is breaking down the company’s SLIs.

Identifying SLIs that matter to the user

The team knows it needs to examine availability and set SLOs for it, so it begins looking at the user journey. The QA team has already done some documentation, so the team refers to the user journeys outlined there and augments this documentation with their own journeys.

The team identifies critical points that receive the brunt of complaints. Team members also look into black box monitoring, a tactic that helps identify issues from a user’s perspective. With black box monitoring, the team acts as an external user of the service with no access to the internal monitoring tools. This allows team members to concentrate on a few metrics that directly correlate with user happiness.

After looking at the users’ journey, the team determines that the individual tabs of the expenses feature don’t load slowly on their own, but skimming through two or more pages becomes tedious. So the team decides to also create an SLI for response time at the load balancers.

Establishing corresponding SLOs

After the team determines its SLIs, it’s time to set up the SLOs. The team is looking at availability of the site (a common complaint), as well as the latency issue on the expense page. While the team plans to add more SLOs later, these two will serve as the guinea pigs.

For the latency issue, the team sets an SLO for all pages to load in under 1 second. This faster load time means that users won’t be irritated scrolling through multiple pages. The team then moves on to the availability SLO.

Based on traffic levels, customer usage, and NPS scores, the team has determined that its customers are likely to be happy with 99.5% availability. Data from previous months also suggests that customer satisfaction and usage don’t seem to increase when uptime is greater than 99.5%. This means there’s no reason, at this point, to optimize for higher than 99.5% uptime.

With SLOs in place, the team will need to work on what to do if these targets are missed by creating an error budget policy. This policy will detail:

- The acceptable level of failure in the system over a given period of time (the error budget)

- Alerting and on-call procedures for the service

- Escalation policies in the event of error budget depletion

- An agreement to halt feature development and focus on reliability once the error budget has been exceeded for a certain amount of time
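To make the first and last items of this policy concrete, here is a minimal sketch of an error budget calculation based on request counts. The function names, the request-based accounting, and the simple "halt when the budget is spent" rule are all assumptions for illustration; a real policy would also account for how long the budget has been exceeded.

```python
def error_budget(slo_target: float, total_events: int) -> float:
    """Number of failed events the SLO tolerates over the window."""
    if not 0.0 < slo_target < 1.0:
        raise ValueError("slo_target must be strictly between 0 and 1")
    if total_events <= 0:
        raise ValueError("total_events must be positive")
    return (1.0 - slo_target) * total_events


def budget_remaining(slo_target: float, total_events: int,
                     failed_events: int) -> float:
    """Fraction of the error budget still unspent; negative if overspent."""
    allowed = error_budget(slo_target, total_events)
    return 1.0 - failed_events / allowed


def should_halt_feature_work(slo_target: float, total_events: int,
                             failed_events: int) -> bool:
    """Hypothetical policy rule: stop feature work once the budget is spent."""
    return budget_remaining(slo_target, total_events, failed_events) <= 0.0


# With a 99.5% target and 1,000,000 requests, roughly 5,000 may fail.
print(error_budget(0.995, 1_000_000))
print(budget_remaining(0.995, 1_000_000, 2_000))          # about 60% left
print(should_halt_feature_work(0.995, 1_000_000, 6_000))  # True
```

Tracking the remaining budget as a fraction rather than a raw count makes it easy to alert at thresholds such as 50% or 10% remaining.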

Once everyone agrees, the SLOs are launched. The team watches carefully and iterates at the monthly error budget meeting. After a few months, the team feels confident enough to add more SLOs.

Agreeing on SLAs

SLAs are an external commitment, so they aren’t set the same way as SLOs. An SLA is a business agreement with users that dictates a certain level of usability. The engineering team is aware of the SLAs but doesn’t set them. Instead, the team sets its SLOs more stringently than the SLAs, giving itself a buffer.

For example, the team’s 99.5% availability SLO means the service can only be down about 3.65 hours per month (0.5% of a roughly 730-hour month). However, the SLA that the organization signs with users specifies that it must maintain 99% availability, which allows about 7.3 hours of downtime per month. The team therefore has a buffer of roughly 3.65 hours per month. Now, the team can work on new features with guardrails for reliability. The organization will benefit from happier users, and the team has the confidence to innovate while remaining reliable.
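The downtime figures come from multiplying the allowed failure fraction by the number of hours in a month. The sketch below assumes an average month of 730.5 hours (365.25 days × 24 ÷ 12); that constant and the function name are choices made for this example, and the results shift slightly for a 30-day or 31-day month.

```python
HOURS_PER_MONTH = 365.25 * 24 / 12  # assumed average month: 730.5 hours


def allowed_downtime_hours(availability: float,
                           period_hours: float = HOURS_PER_MONTH) -> float:
    """Hours of downtime permitted by an availability target over a period."""
    if not 0.0 <= availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    return (1.0 - availability) * period_hours


slo_downtime = allowed_downtime_hours(0.995)  # internal SLO target
sla_downtime = allowed_downtime_hours(0.99)   # external SLA commitment
buffer_hours = sla_downtime - slo_downtime    # slack between SLO and SLA

print(f"SLO downtime: {slo_downtime:.4f} h/month")
print(f"SLA downtime: {sla_downtime:.4f} h/month")
print(f"Buffer:       {buffer_hours:.4f} h/month")
```

Because the SLA allows twice the failure fraction of the SLO (1% versus 0.5%), the buffer is about the same size as the SLO's own downtime allowance.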

When used together, SLIs, SLOs, and SLAs are powerful tools that help you provide the best experience for your users. While it can be tricky to get these metrics right, a culture of revision, iteration, and blamelessness will help you achieve your reliability goals.
