Amazon CloudWatch Logs lets you collect, monitor, analyze, act on, and stream log data, and access your log files from Amazon EC2 instances, AWS CloudTrail, Lambda functions, VPC Flow Logs, and other resources. CloudWatch can be costly, so to keep costs under control it is a good idea to move older logs to S3 for long-term retention.

To accomplish this, we will use the following AWS services:

- S3 (Bucket & Bucket Policy)

- IAM (Lambda Role)

- CloudWatch (Events Rules)

- Lambda (Functions)

- Step Functions

1. Create S3 bucket

Create an S3 bucket to export the logs to. To allow CloudWatch Logs to read the bucket ACL and PUT (write) objects into the bucket, attach the following bucket policy:

```json
{
  "Version": "2008-10-17",
  "Id": "Policy1335892530063",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Service": "logs.YOUR-REGION.amazonaws.com"
      },
      "Action": "s3:GetBucketAcl",
      "Resource": "arn:aws:s3:::YOUR-BUCKET-NAME"
    },
    {
      "Effect": "Allow",
      "Principal": {
        "Service": "logs.YOUR-REGION.amazonaws.com"
      },
      "Action": "s3:PutObject",
      "Resource": "arn:aws:s3:::YOUR-BUCKET-NAME/*",
      "Condition": {
        "StringEquals": {
          "s3:x-amz-acl": "bucket-owner-full-control"
        }
      }
    }
  ]
}
```

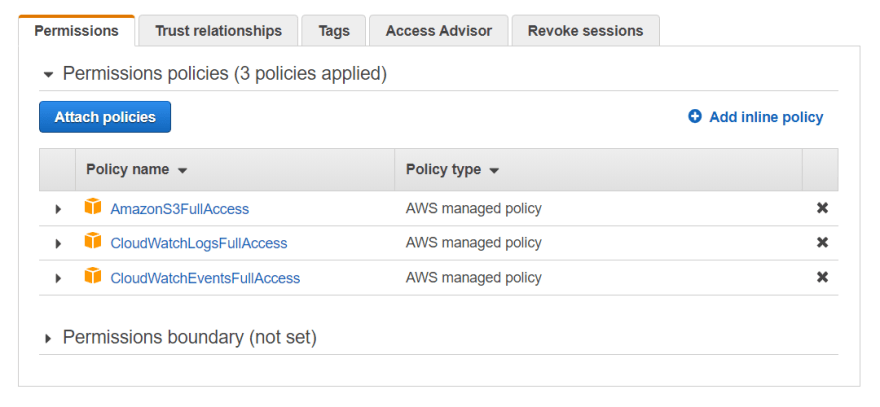

2. Create IAM role

Here we create an IAM role, ‘Export-CloudWatch-Logs-To-S3-Lambda-Role’, that lets the Lambda function write its own log events and write to the S3 bucket we created. Add the following policy to the role.

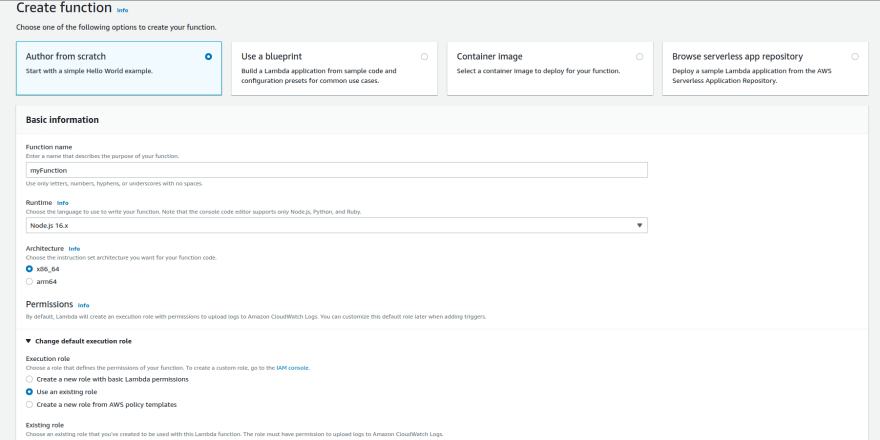
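The role policy itself appears only as a screenshot. The following is a hedged sketch of a permissions policy for such a role, generated as JSON. It grants three things: CloudWatch Logs write access for the function's own logs, the log-group listing and export-task calls an export function makes, and S3 write access as described above. The exact action list and the `buildLambdaRolePolicy` helper are assumptions, not the author's policy, so trim or extend them to match the function code you deploy. The export objects themselves are written by the CloudWatch Logs service under the bucket policy from step 1. The `s3:PutObject` statement only mirrors the article's description and may not be strictly required.

```javascript
'use strict';

// Hypothetical permissions policy for Export-CloudWatch-Logs-To-S3-Lambda-Role.
// Assumed action list; adjust it to the calls your function actually makes.
function buildLambdaRolePolicy(bucketName) {
  return {
    Version: '2012-10-17',
    Statement: [
      {
        // Let the function write its own logs to CloudWatch Logs.
        Effect: 'Allow',
        Action: ['logs:CreateLogGroup', 'logs:CreateLogStream', 'logs:PutLogEvents'],
        Resource: 'arn:aws:logs:*:*:*',
      },
      {
        // List log groups and start or inspect export tasks.
        Effect: 'Allow',
        Action: ['logs:DescribeLogGroups', 'logs:CreateExportTask', 'logs:DescribeExportTasks'],
        Resource: '*',
      },
      {
        // Write access to objects in the export bucket.
        Effect: 'Allow',
        Action: ['s3:PutObject'],
        Resource: `arn:aws:s3:::${bucketName}/*`,
      },
    ],
  };
}

// Print the policy so it can be pasted into the IAM console's JSON editor.
console.log(JSON.stringify(buildLambdaRolePolicy('YOUR-BUCKET-NAME'), null, 2));
```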

3. Create and configure Lambda function

With Lambda you don’t have to provision or manage servers: you paste your code into the function and it runs whenever it is triggered. In this section of the article, we will set up the Lambda function.

Navigate to Lambda >> Functions >> Create function, then do the following:

Choose ‘Author from Scratch’.

Function Name: Export-CloudWatch-Logs-To-S3

Runtime: Node.js 16.x

Under Permissions, select ‘Use an existing role’ and choose the IAM role we created earlier (Export-CloudWatch-Logs-To-S3-Lambda-Role). Then click Create function.

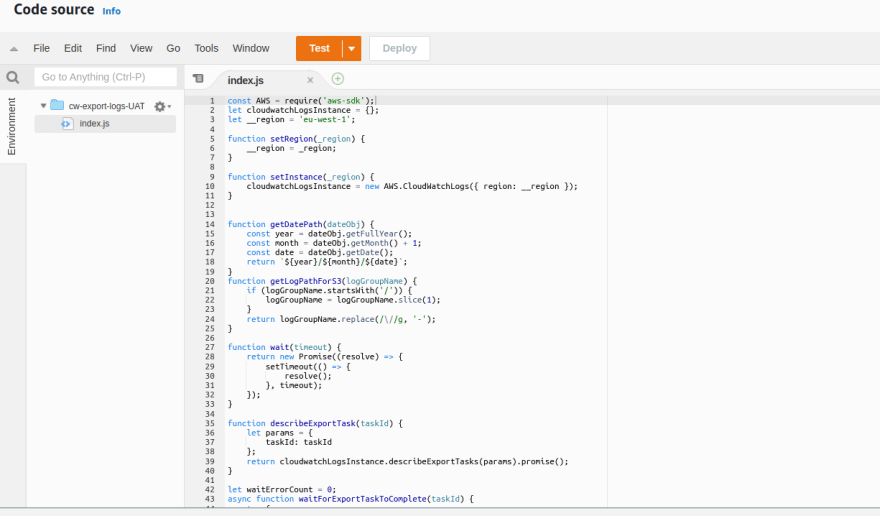

Next, paste the function code into the Function Code section of the function.

NB: In the code, change the bucket name to the bucket created in step 1, and set the region to the one you are deploying to.

4. Create Step Functions state machine

We need a Step Functions state machine to export data from multiple log groups, because CloudWatch Logs allows only one active export task per account at a time.

Go to Services >> Step Functions >> Get started >> Create state machine >> Write your workflow in code, and paste in the following JSON:

```json
{
  "StartAt": "CreateExportTask",
  "States": {
    "CreateExportTask": {
      "Type": "Task",
      "Resource": "LAMBDA_FUNCTION_ARN",
      "Next": "IsAllLogsExported"
    },
    "IsAllLogsExported": {
      "Type": "Choice",
      "Choices": [
        {
          "Variable": "$.continue",
          "BooleanEquals": true,
          "Next": "CreateExportTask"
        }
      ],
      "Default": "SuccessState"
    },
    "SuccessState": {
      "Type": "Succeed"
    }
  }
}
```

Replace LAMBDA_FUNCTION_ARN in the definition with the ARN of the Lambda function created earlier, then click Create state machine.
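The following small local simulation shows how this definition loops. `runStateMachine` is a hypothetical helper, not part of AWS. With the default ResultPath of "$", the Task state's result replaces the state. The Choice state then sends execution back to CreateExportTask while $.continue is true, and otherwise to SuccessState. One consequence is that the function must always return a `continue` field. In Step Functions, a Choice rule whose Variable path does not exist fails the execution instead of falling through to Default, and the simulation models that as an error.

```javascript
'use strict';

// Hypothetical local model of the state machine definition above.
async function runStateMachine(task, input, maxIterations = 1000) {
  let state = input;
  for (let i = 0; i < maxIterations; i++) {
    // CreateExportTask: with the default ResultPath ("$"), the result becomes the new state.
    state = await task(state);
    // IsAllLogsExported: a missing $.continue is a runtime error in Step Functions.
    if (state === null || typeof state !== 'object' || !('continue' in state)) {
      throw new Error('Invalid path $.continue: field not found');
    }
    // BooleanEquals true loops back; anything else goes to Default (SuccessState).
    if (state.continue !== true) return state;
  }
  throw new Error('Exceeded maxIterations without reaching SuccessState');
}

// Example: a fake task that "exports" one log group per iteration.
const fakeTask = async (s) => {
  const [, ...rest] = s.pending;
  return { ...s, pending: rest, continue: rest.length > 0 };
};

runStateMachine(fakeTask, { pending: ['/aws/a', '/aws/b', '/aws/c'] })
  .then((out) => console.log(out));
```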

5. Create a CloudWatch Events rule

To run the export on a schedule, you need to create and configure a CloudWatch Events rule (now managed in Amazon EventBridge) that starts the state machine at a specific time.

To create the rule, navigate to CloudWatch >> Rules >> Go to Amazon EventBridge >> Create rule. In this example, the rule runs every day at 11:00 PM EAT (adjust the time to your needs). Under Targets, choose AWS service >> Step Functions state machine and select the state machine created earlier. For Execution role, choose to create a new role. Finally, open Additional settings, select Constant (JSON text) from the dropdown, paste in the following JSON, and then review and create the rule.

```json
{
  "region": "REGION",
  "logGroupFilter": "/aws",
  "s3BucketName": "BUCKET_NAME",
  "logFolderName": "cloudwatchlogs"
}
```

NB: To export log groups matching any of several values, set logGroupFilter to a list, e.g. ["/aws", "/prod"].

Here, region is the region where you set up this solution. Only log groups whose names contain the logGroupFilter value are exported. s3BucketName is the destination bucket, and logFolderName is the name of the folder created in that bucket to store the exported logs.
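The text above describes a "contains" match. The small hypothetical illustration below shows how that differs from a stricter prefix match when logGroupFilter is a list. With ["/aws", "/prod"], a contains match also picks up a group such as /app/aws-batch. The group names and helper functions are made up for the example.

```javascript
'use strict';

// Accept either a single filter string or a list of them.
function normalizeFilter(filter) {
  return Array.isArray(filter) ? filter : [filter];
}

// "Contains" semantics, as described for logGroupFilter.
function containsMatch(logGroupName, filter) {
  return normalizeFilter(filter).some((f) => logGroupName.includes(f));
}

// Stricter alternative: the name must start with one of the filters.
function prefixMatch(logGroupName, filter) {
  return normalizeFilter(filter).some((f) => logGroupName.startsWith(f));
}

const groups = ['/aws/lambda/orders', '/prod/api', '/app/aws-batch', '/staging/api'];
const filter = ['/aws', '/prod'];

// contains: /aws/lambda/orders, /prod/api, /app/aws-batch
console.log(groups.filter((g) => containsMatch(g, filter)));
// prefix: /aws/lambda/orders, /prod/api
console.log(groups.filter((g) => prefixMatch(g, filter)));
```

If prefix semantics are what you want, the DescribeLogGroups API also accepts a logGroupNamePrefix parameter, which filters server-side, one prefix per call.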

Choose Create a new role for this specific resource. CloudWatch Events needs this role's permission to start your Step Functions state machine. Then click Configure details.
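EventBridge evaluates cron expressions for scheduled rules in UTC, so 11:00 PM EAT (UTC+3) has to be entered as 20:00 UTC. The small hypothetical helper below makes the conversion explicit. It handles whole-hour offsets only and builds an expression in EventBridge's six-field format (minutes, hours, day-of-month, month, day-of-week, year), with ? in the day-of-week field.

```javascript
'use strict';

// Build a daily EventBridge cron expression from a local time and a whole-hour UTC offset.
function dailyCronUtc(localHour, localMinute, utcOffsetHours) {
  // Shift to UTC and wrap into 0-23 (handles crossing midnight in either direction).
  const hourUtc = (((localHour - utcOffsetHours) % 24) + 24) % 24;
  return `cron(${localMinute} ${hourUtc} * * ? *)`;
}

console.log(dailyCronUtc(23, 0, 3)); // 11:00 PM EAT, prints cron(0 20 * * ? *)
```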

Conclusion

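This solution exports the logs to the S3 bucket every day, which helps keep CloudWatch costs under control. Keep in mind that an export copies the log data rather than moving it, so CloudWatch storage charges only drop once you also shorten the retention period of the exported log groups. You can then add a lifecycle rule to the S3 bucket so that exported logs are deleted once they are older than, say, 90 days.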
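As a sketch of that lifecycle rule, the following builds a configuration in the shape S3's PutBucketLifecycleConfiguration API expects. It expires objects under the export folder after a given number of days. The `buildLifecycleConfiguration` helper and the rule ID format are hypothetical. The folder name matches the logFolderName value from step 5.

```javascript
'use strict';

// Hypothetical lifecycle configuration: expire exported logs after `days` days.
function buildLifecycleConfiguration(logFolderName, days = 90) {
  return {
    Rules: [
      {
        ID: `expire-${logFolderName}-after-${days}-days`,
        Status: 'Enabled',
        Filter: { Prefix: `${logFolderName}/` }, // only objects under the export folder
        Expiration: { Days: days },
      },
    ],
  };
}

const config = buildLifecycleConfiguration('cloudwatchlogs');
console.log(JSON.stringify(config, null, 2));
// With the AWS SDK for JavaScript v2, this would be applied as:
// s3.putBucketLifecycleConfiguration({ Bucket: 'YOUR-BUCKET-NAME', LifecycleConfiguration: config })
```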
