It’s amazing how easy machine learning has become. It’s no longer a hindrance if you don’t have any mathematical background or any academic background in machine learning. I certainly belong to that category.

One of my goals with machine learning was image recognition. I wanted to build a model that could do facial recognition, identify animal species, name objects, etc. Learning the math behind this was horrendous, and quite difficult for someone with little math knowledge. I almost gave up on this seemingly unattainable goal until I discovered “pre-trained models”.

Pre-trained models are (based on my understanding) models that have already been trained on a large dataset, so their learned weights can be reused to solve a similar problem. For example, the ResNet50 weights used in this post were trained on ImageNet. There are different kinds of pre-trained models, each suited to its own kind of problem.

Today, I’ll be showing you how I built a monkey recognition model using the pre-trained model ResNet50. ResNet50 is great for classifying species (based on what I’ve seen); I’ve seen it used for dogs as well. Sooo let’s go with monkeys 🙈

Setup

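I'm doing this project in a Jupyter notebook. You can check my setup post if you haven't set up your machine for machine learning yet. I wrote this with an older version of TensorFlow (around 1.x), so some details may need adjusting for TensorFlow 2. I'm using the 10 Monkey Species dataset from Kaggle.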

We also need a JSON file that maps each class index to its species. You can download it in this GitHub gist. It's gonna be important later.

Lastly, download the pre-trained ResNet50 weights. Here's a link from Kaggle.

Structuring our Data Directory

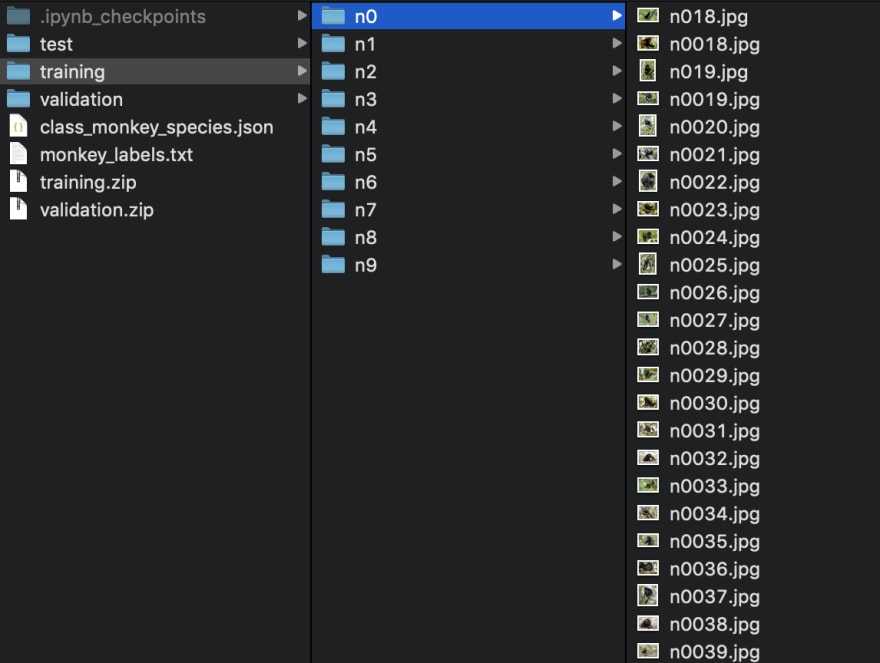

My directory folder looks like this:

We have images for training and validation (remember to split your data, so the model is evaluated on images it hasn't trained on).

Inside the training and validation folders there is one directory per species, containing that species' images.
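Before training, it can help to confirm the folders actually match this layout. Here is a minimal sketch using only the standard library; the helper name `count_images_per_class` and the set of accepted file extensions are my own assumptions, not part of the dataset or of Keras:

```python
from pathlib import Path

# File extensions treated as images (an assumption; adjust to your files)
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png"}

def count_images_per_class(split_dir):
    """Return {species_folder: number_of_images} for one split (training or validation)."""
    counts = {}
    for class_dir in sorted(Path(split_dir).iterdir()):
        if class_dir.is_dir():  # each subfolder is one species
            counts[class_dir.name] = sum(
                1 for f in class_dir.iterdir()
                if f.is_file() and f.suffix.lower() in IMAGE_EXTENSIONS
            )
    return counts

# Usage with the paths from this post:
# print(count_images_per_class('10-monkey-species/training'))
```

If a species folder shows up with zero images, or a stray folder appears, it's better to find out now than halfway through training.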

Transforming the Images

The first hurdle is taking the images and turning them into something our machine learning model can understand.

```python
from tensorflow.keras.preprocessing.image import ImageDataGenerator
from tensorflow.keras.applications.resnet50 import preprocess_input

image_size = 224  # ResNet50's default input size is 224x224

# Training data: ResNet50 preprocessing plus random augmentation
data_generator_with_aug = ImageDataGenerator(preprocessing_function=preprocess_input,
                                             horizontal_flip=True,
                                             width_shift_range=0.2,
                                             height_shift_range=0.2)

train_path = '10-monkey-species/training'
train_generator = data_generator_with_aug.flow_from_directory(train_path,
                                                              target_size=(image_size, image_size),
                                                              batch_size=24,
                                                              class_mode='categorical')

# Validation data: same preprocessing, but no augmentation
data_generator_with_no_aug = ImageDataGenerator(preprocessing_function=preprocess_input)

validation_path = '10-monkey-species/validation'
validation_generator = data_generator_with_no_aug.flow_from_directory(validation_path,
                                                                      target_size=(image_size, image_size),
                                                                      batch_size=24,
                                                                      class_mode='categorical')
```

Let’s break this down. ImageDataGenerator is a class that loads images, preprocesses them and produces data the model can understand. The preprocessing_function argument takes another function, in this case ResNet50's preprocess_input, which converts each image into the format ResNet50 was trained on (it flips the color channels from RGB to BGR and subtracts the ImageNet mean of each channel). The other arguments enable data augmentation.
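To make "an image ResNet50 can understand" a bit more concrete, here is a plain-Python sketch of that "caffe"-style preprocessing for a single pixel: swap RGB to BGR, then subtract the ImageNet channel means (there is no scaling to 0-1). The function name is hypothetical and this is an illustration of the idea, not the Keras source, which works on whole NumPy arrays:

```python
# ImageNet channel means in BGR order, as used by Keras "caffe"-mode preprocessing
IMAGENET_MEAN_BGR = (103.939, 116.779, 123.68)

def caffe_preprocess_pixel(rgb):
    """Convert one (R, G, B) pixel with values 0-255 into mean-subtracted (B, G, R)."""
    r, g, b = rgb
    bgr = (b, g, r)  # swap channel order RGB -> BGR
    return tuple(value - mean for value, mean in zip(bgr, IMAGENET_MEAN_BGR))

print(caffe_preprocess_pixel((255, 255, 255)))
```

A pixel that exactly matches the average ImageNet color comes out as all zeros, which is why the model sees values centered around 0 rather than 0-255.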

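Data augmentation applies random transformations to the training images, so the model sees slightly different versions of each image without you having to collect more data. In this example, horizontal_flip=True randomly mirrors images, and width_shift_range=0.2 and height_shift_range=0.2 randomly shift them horizontally and vertically by up to 20% of their width and height. This gives us more varied samples without feeding in the exact same image every time. Note that only the training generator uses augmentation; the validation images are left unchanged.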
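Here is a simplified sketch of what a horizontal flip and a width shift do to an image, using a tiny image stored as a list of rows. This is only an illustration under my own assumptions (integer pixel shifts, edge pixels repeated to fill the gap, similar to Keras's default fill_mode='nearest'); Keras does this on NumPy arrays with its own transform code, and the function names here are hypothetical:

```python
import random

def horizontal_flip(image):
    """Mirror an image stored as a list of rows (each row a list of pixels)."""
    return [list(reversed(row)) for row in image]

def width_shift(image, shift):
    """Shift every row `shift` pixels to the right (negative = left).
    Vacated pixels repeat the nearest edge pixel."""
    width = len(image[0])
    shifted = []
    for row in image:
        new_row = []
        for x in range(width):
            src = min(max(x - shift, 0), width - 1)  # clamp to the nearest valid column
            new_row.append(row[src])
        shifted.append(new_row)
    return shifted

def random_augment(image, width_shift_range=0.2, rng=random):
    """Randomly flip and shift one image, loosely mimicking the generator settings above."""
    if rng.random() < 0.5:
        image = horizontal_flip(image)
    max_shift = int(width_shift_range * len(image[0]))
    return width_shift(image, rng.randint(-max_shift, max_shift))

img = [[1, 2, 3, 4], [5, 6, 7, 8]]
print(horizontal_flip(img))
print(width_shift(img, 1))
```

Because the choices are random, each epoch sees a slightly different version of the same photo, which is the whole point.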

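flow_from_directory reads the images from the directory you give it, treating each subdirectory as one class (one species). It resizes every image to target_size and yields batches of batch_size images together with their labels; class_mode='categorical' makes those labels one-hot vectors.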
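A rough sketch of how the labels come about: the subfolder names are sorted and numbered, and with class_mode='categorical' each label becomes a one-hot vector. The helper names below are hypothetical; after creating the generator you can compare the result against `train_generator.class_indices`, which is the mapping Keras actually uses:

```python
from pathlib import Path

def infer_class_indices(split_dir):
    """Map each subdirectory name to an integer, in sorted order."""
    names = sorted(p.name for p in Path(split_dir).iterdir() if p.is_dir())
    return {name: index for index, name in enumerate(names)}

def one_hot(index, num_classes):
    """One-hot label vector: 1.0 at the class index, 0.0 everywhere else."""
    vector = [0.0] * num_classes
    vector[index] = 1.0
    return vector

print(one_hot(2, 4))  # [0.0, 0.0, 1.0, 0.0]
```

This ordering matters later: the index the model predicts only lines up with the JSON file if both use the same folder order (n0 is 0, n1 is 1, and so on).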

Building Our Model

Now, let’s build our model!!

```python
from tensorflow.keras.applications import ResNet50
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense

# Pre-trained ResNet50 weights without the final classification layer ("notop")
resnet_weights_path = 'resnet50_weights_tf_dim_ordering_tf_kernels_notop.h5'

num_classes = 10  # one output per monkey species

model = Sequential()
model.add(ResNet50(include_top=False, pooling='avg', weights=resnet_weights_path))
model.add(Dense(num_classes, activation='softmax'))

# Don't train the first layer (ResNet50); its weights are already trained
model.layers[0].trainable = False

model.compile(optimizer='sgd', loss='categorical_crossentropy', metrics=['accuracy'])

# In newer TensorFlow versions (2.1+), model.fit accepts generators directly
model.fit_generator(train_generator,
                    steps_per_epoch=3,
                    epochs=20,
                    validation_data=validation_generator,
                    validation_steps=1)
```

Break down:

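- We created a Sequential model, which simply stacks layers in order, and added ResNet50 with the pre-trained weights as its first layer. include_top=False leaves out ResNet50's original ImageNet classification layer, and pooling='avg' averages its final feature maps into a single vector per image.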

- Then we added a Dense layer with one output per class, which is 10 here. The number of classes is the number of species we're trying to identify, and the softmax activation turns the outputs into probabilities that sum to 1.

- We also made the first layer (ResNet50) non-trainable, so its pre-trained weights stay fixed and only the new Dense layer is trained.

- Then we compiled the model with model.compile() and finally trained it with model.fit_generator().

Remember the generators we made when we transformed our images? This is where we pass them in.

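- epochs is the number of training rounds. Each epoch draws steps_per_epoch batches from the generator, so an epoch is only a full pass over all the images if steps_per_epoch × batch_size covers the whole dataset.

- steps_per_epoch is the number of batches (not images) taken from the generator in each epoch. With batch_size=24 and steps_per_epoch=3, each epoch trains on 72 images.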
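The arithmetic behind epochs and steps is easy to check by hand. The helper names here are hypothetical; the numbers are the ones from the training call above:

```python
import math

def images_per_epoch(steps_per_epoch, batch_size):
    """How many images the model actually sees in one epoch."""
    return steps_per_epoch * batch_size

def steps_for_full_pass(num_images, batch_size):
    """Batches needed to go through every image once (the last batch may be smaller)."""
    return math.ceil(num_images / batch_size)

print(images_per_epoch(steps_per_epoch=3, batch_size=24))  # 72
```

flow_from_directory stores the number of images it found in `train_generator.samples`, so something like `steps_for_full_pass(train_generator.samples, 24)` would give a steps_per_epoch value that covers the whole training set each epoch, at the cost of slower epochs.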

Testing Our Model

```python
import numpy as np
from os.path import join
from tensorflow.keras.preprocessing.image import load_img, img_to_array
from tensorflow.keras.applications.resnet50 import preprocess_input

image_dir = '10-monkey-species/validation'
img_paths = [join(image_dir, filename) for filename in
             ['n0/n000.jpg',
              'n5/n502.jpg',
              'n9/n9031.jpg',
              'n2/n2014.jpg']]

image_size = 224

def read_prep_images(img_paths, img_height=image_size, img_width=image_size):
    # Load and resize each image to the size the model was trained on
    imgs = [load_img(img_path, target_size=(img_height, img_width)) for img_path in img_paths]
    # Stack them into one array of shape (num_images, height, width, 3)
    img_array = np.array([img_to_array(img) for img in imgs])
    # Apply the same ResNet50 preprocessing used during training
    output = preprocess_input(img_array)
    return output

test_data = read_prep_images(img_paths)
```

Here we take several test images from our validation dataset and convert them into a NumPy array with the read_prep_images function, applying the same preprocess_input used during training.

Then we predict them with our model.

```python
preds = model.predict(test_data)
print(preds)
```

Translating Our Predictions

Each row of preds holds 10 probabilities, one per species. We need to translate those predictions into indexes so that we can:

- look up each index in our JSON file

- return the appropriate species data for that index

I copied this from Kaggle with a few slight adjustments.

```python
import json
from IPython.display import Image, display

def decode_predictions(preds, top=5, class_list_path=None):
    # Expect one row of 10 class probabilities per image
    if len(preds.shape) != 2 or preds.shape[1] != 10:
        raise ValueError('`decode_predictions` expects '
                         'a batch of predictions '
                         '(i.e. a 2D array of shape (samples, 10)). '
                         'Found array with shape: ' + str(preds.shape))
    with open(class_list_path) as f:
        index_list = json.load(f)
    results = []
    for pred in preds:
        # Indexes of the `top` highest probabilities, highest first
        top_indices = pred.argsort()[-top:][::-1]
        # Look up each index in the JSON file and append its probability
        result = [tuple(index_list[str(i)]) + (pred[i],) for i in top_indices]
        result.sort(key=lambda x: x[2], reverse=True)
        results.append(result)
    return results
```

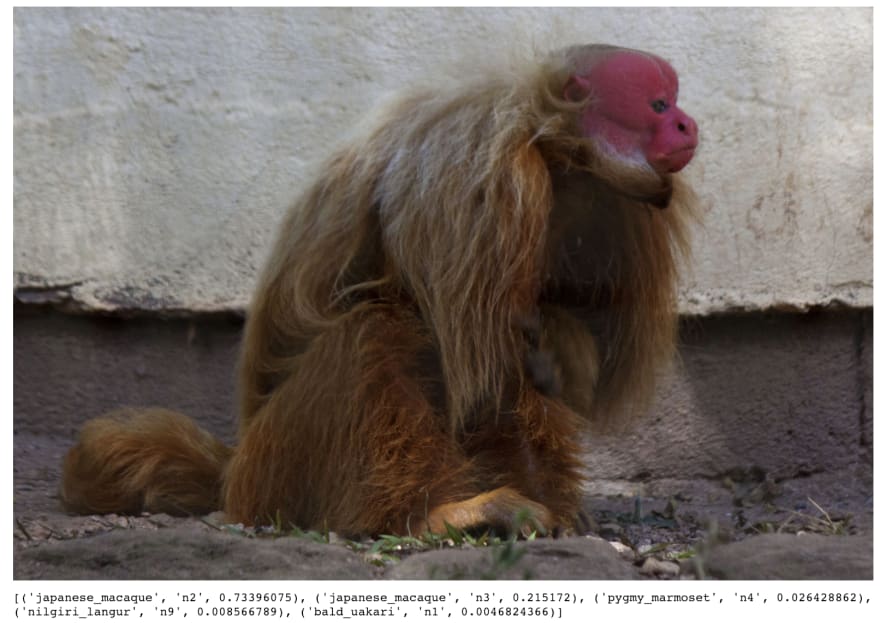

```python
most_likely_labels = decode_predictions(preds, top=5, class_list_path='10-monkey-species/class_monkey_species.json')

for i, img_path in enumerate(img_paths):
    display(Image(img_path))
    print(most_likely_labels[i])
```

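So decode_predictions takes preds, a 2D array with one row of class probabilities per image, finds the indexes of the highest probabilities in each row and looks up the corresponding entry in the JSON file. For example, if the most likely indexes for an image were 0, 1 and 8 (in that order), it would return those three JSON entries, each followed by its probability: [('index_0', 'folder_name', probability), ('index_1', 'folder_name', probability), ('index_8', 'folder_name', probability)].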
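The same lookup can be tried without a model or NumPy. In this sketch the probabilities and the `index_list` mapping are made up; I'm only assuming the JSON is read as a dict from string index to a two-item list, which is the shape the `tuple(index_list[str(i)])` line and the `x[2]` sort key imply. The species names are placeholders, not the real file contents:

```python
def decode_top_k(probabilities, index_list, top=3):
    """Return (json_entry..., probability) tuples for the `top` most likely classes."""
    # Sort class indexes by probability, highest first
    ranked = sorted(range(len(probabilities)), key=lambda i: probabilities[i], reverse=True)
    return [tuple(index_list[str(i)]) + (probabilities[i],) for i in ranked[:top]]

# Hypothetical mapping in the same shape as the JSON file (string index -> list)
index_list = {
    "0": ["n0", "species_a"],
    "1": ["n1", "species_b"],
    "2": ["n2", "species_c"],
}
print(decode_top_k([0.1, 0.7, 0.2], index_list, top=2))
# [('n1', 'species_b', 0.7), ('n2', 'species_c', 0.2)]
```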

The for loop then displays each image along with its decoded predictions.

Conclusion

Machine learning has become incredibly simple. It’s flabbergasting. Terms like ‘softmax’ (used in our final Dense layer) and ‘relu’ (used inside ResNet50) are mathematical concepts whose mechanics you don’t really need to dive into, but it helps to have a general idea of what they do and what they’re for. In my journey so far, I've found you only need a general understanding of the concepts to really begin.
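For a general idea of those two terms, here is a small plain-Python sketch. Softmax is what turns the Dense layer's 10 raw scores into the probabilities in preds; ReLU is a simple activation used throughout ResNet50. These are textbook definitions written for illustration, not the TensorFlow implementations:

```python
import math

def relu(x):
    """ReLU: keep positive values, replace negative values with 0."""
    return max(0.0, x)

def softmax(scores):
    """Turn raw scores into probabilities that are positive and sum to 1."""
    largest = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

print([relu(v) for v in [-2.0, 0.5]])  # [0.0, 0.5]
print(softmax([2.0, 1.0, 0.1]))
```

The biggest score always gets the biggest probability, which is why picking the highest value in each row of preds gives the predicted species.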

Hope you learned something from this!

Would love to hear your feedback 😄

See more from me on Twitter and Instagram @heyimprax.

Thanks for reading! You've made it 🎉 Have a coffee break ☕️
