In a career in operations spent ensuring the reliability of various websites and applications, I've run into a lot of stories. In Ops you tend to collect war stories as ways to reflect on moments that could have gone "better" or at least in a different direction. After being on-call for various companies for nearly ten years I started to recognize how many of these stories I had. I didn't just have my own stories, but those shared to me from others about the good times, the bad times and the plain out nightmares.

I had a sort of epiphany at the end of 2018 and realized that these stories needed greater cataloging. Great information was being left at the bar too many times for my sake. Rather than just allowing the conversation to end when the conference drinks hangout did, I decided to start collecting stories in a podcast I called "On-Call Nightmares."

A nightmare is temporary.

The word nightmare is defined in Webster's Dictionary as "a frightening dream that usually awakens the sleeper." The reason I chose the word nightmare in the title is I wanted people to understand that most issues that we deal with in on-call will have an end, like a nightmare. You will wake up from this nightmare and if you do some personal introspection likely will have gained some additional knowledge why you had the nightmare.

In technology, we've made it a point to take introspection to a higher level by diving deep into the reasons why experience failures. Modern operations in places like the cloud challenges teams to focus less on an individual cause of a problem and to determine how to provide greater reliability within your application and infrastructure to withstand randomized failure.

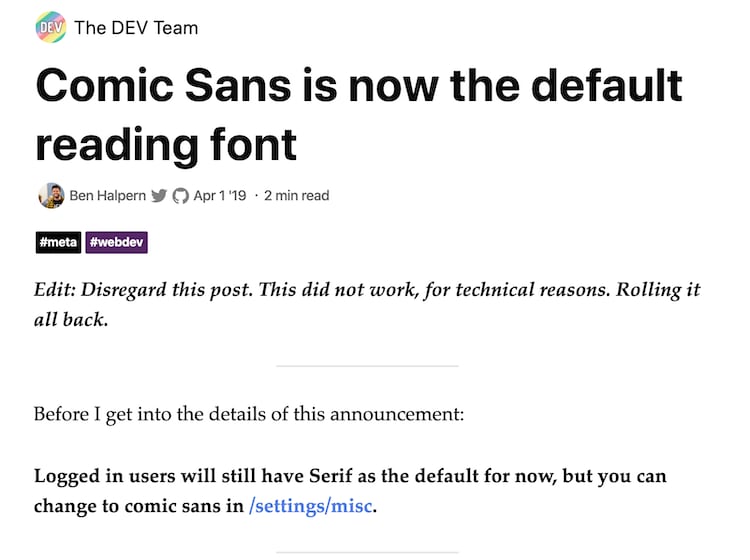

A joke that lead to failure

Failures happen in technology. Failure can be part of a plan that went wrong. Failure can be part of a plan that went right, but just didn't go the way you expected. It's how you learn from these failures is what makes teams best at what they do. How you respond, learn and eventually grow from failure makes for team culture.

In a conversation I had with Ben Halpern of dev.to, we talked about how a random moment of a joke ended up leading to one of those nightmares that we sometimes will leave at the bar:

(full On-Call Nightmares interview available here)

Jay: Sure. Let's get into the meat and potatoes. Can you tell me an incident that might have happened while you are either kind of ramping up for where you're right now, at any time specific that you can really remember where you had to be. You know that, that on-call resource?

Ben: Yeah, I think the biggest, best incident that comes to mind. And, um, and by far biggest, um, problem we've had in terms of up time, Um was this past April 1st. So it's the incident centered around, ah, attempt to do what seemed at the time like a somewhat simple joke. But it was a, uh it didn't joke ended a being on us. Um, big time. And so the concept for our April fool's gag this year was to change these sites, font to comic sans, which seems simple enough. But the, um the way we the way we serve our pages is basically almost all traffic is served on the edge. The CDN, and so not just, like not just static assets, but, like all coming and we a synchronously load any user data like, you know what your profile image looks like? And things like that. We store a lot of that stuff in local storage. But our our big sort of essential architecture decision was that everything goes to the edge and then gets served from there. So the, um, we've made some strides to improve this, but at the time, and this was only a few months ago, um, we with all that, that with the awesome decision that that was meaning that, like, practically even in our worst moments, in terms of our database availability our server, like just handling traffic like we've always had, like, served almost all are just general traffic pages like we, the concept of the site is just It's mostly for, you know, reading and consuming like important information and stuff. And the ratio of reads to writes is always gonna be heavily weighted on the reason I was kind of initial thinking, like, how often do you read on Medium versus write on Medium?

You know, it's gonna be heavily weighted towards read and we always kind of oh, overly emphasized, like real availability for performance purposes, which also we move over scaling, But, uh,W in order to make this change available immediately site wide, even though it was just a simple CSS change. We just don't do these, like, site-wide changes all at once, very often. And, um, we had to just clear the cache And what with the edge cash like we had to stop serving from the edge. And what we thought would happen was that it would, uh, slow down. And our server would kind of have to handle a bit extra load for a little while, and then I would kind of like once the cache kick back in. That would be fine.

Jay: Sure. So I guess the assumption was the cache will repopulate? You have everything, you know, surfing the cache and everything Go back to normal.

Ben Halpern: Yeah, just like it's like, be the way the way things were working like we couldn't get any cash is repopulated without, like, letting pages just take much, much, much longer than expected to load. And, um, you know, the whole process was kind of Ah, it was a real learning experience because I was the one saying, No, that's probably not right the right thing to do because we want to do the opposite. And, um, it actually took like it actually took. Like, basically, I said, like, that idea is to propose earlier on, um, from, uh, Andy on our team. And I kind of said like, No, I don't think that's right. And so we did something else first and set the infinite, the infinite time out. It was like that it was actually he had that idea like to lengthen the time out. I had the idea to do the opposite. So we tried that in a while and didn't work. And I'm I'm glad. Uh, we didn't forget about that and ultimately went back to that idea. And it's still like it still took a long time if before things looked like they were truly healthy. Um, and it was just a fairly brutal day. Like I was up practically all night. Kind of just like taking a look at things. Um, early in the morning, uh, Ana, who lives in Russia, comes online and she starts being helpful, But she also doesn't have admin privileges actually to do things. So I started, Like, at that point, I half I started, like taking naps like this is kind of, like, middle of the night. Um, and things were, like, kind of okay. And, you know, it just it wasn't worth hoping they'd be okay and finally going to sleep.

I believe we were planning on sending an April fool's email. I forget if I don't think we did that, I think instead we just made the email the sort of postmortem. Um, and this was not a were sort of too tired and too and we're very tired. And also this wasn't necessarily security concern or anything, so we didn't feel there was, like, a need to get really detailed. So we mostly just talked about how we were feeling, you know, um and also, like, you know, being a website for programmers. Like we feel like any time we have these issues like this was the worst. But we've had other times where you just kind of had a bit of downtime and stuff, and, uh, we've always felt like if we could just talk about these things, be honest, like, just, like, relate to the community like it's it winds up being like, you know, better than if we hadn't have issues like it's like we can actually get some value, like we can actually connect with the community. So we we, uh it's a special situation where we actually can really empathized. Our users can empathize with us, we can empathize with their users, and, um and even like I was at a conference, like shortly after and, you know, pretty much everybody I talked to who from the community or know what we're doing. Kind of like, actually specifically referenced the emails like that was actually pretty cool. Like, actually, like, I love hearing that kind of thing. So, um, we sort of know that that's a thing we do in these situations is that we just get real honest about it, really, Like real, real open with our feelings about how tough this could be and, um, and then try to move on.

You can read the full post about the change from Ben on dev.to

Sometimes you want failure – Chaos Engineering

In the early 2000s I worked for a company that provided datacenter co-location and managed server hosting. Before midnight on a Saturday, the local utility power to the datacenter was knocked out due to a fairly violent storm, affecting the3,000 servers hosted there. Whenever this happens, an automatic transfer switch (ATS) is supposed to recognize that power is no longer flowing to the datacenter and to immediately start and switch over to generator power. That didn't happen. These 3,000 servers all lost power and required manual intervention to recover almost 60% of the machines. That meant fscks, chkdsk and many other different types of recovery methods were required almost all night. Some servers simply never came back online and needed full restores. Hours of work was spent on getting the servers that belonged to our customers back and running.

The postmortem of this outage was absolutely brutal. We learned that no one tested this the power transfer process prior to the datacenter going online. Because of that, no one considered what the potential impact to the datacenter would have been if the ATS was not functional. Thousands of dollars in customer credits were lost as well as the faith our customers had in providing them with the uptime they depended on. This was really my first introduction to what "Chaos Engineering" is now, the idea destroying portions of your infrastructure and determining the result.

When building distributed systems, we must always consider that failure is almost certain. One of the better speakers on cloud computing, Werner Vogels likes to say, "Everything fails all the time," and he's right. The constant potential for failure is something that is almost built into the cloud now. You may be building apps in multiple datacenters across several regions. You may be putting faith in a third party that you've picked the right provider and proper configuration that no matter what, your app will survive an outage.

Introducing Chaos Engineering

The idea of "Chaos Engineering" isn't just about putting faith in a provider to stay online, it's finding ways to simulate failure in order to determine that you'll withstand an outage of any kind within your application. This means that if a number of your app servers take on a large portion of traffic and are highly CPU taxes, you'll know how to properly scale your application to withstand it. If portions of your application infrastructure were to take on a massive amount of packet loss, how does your team respond?

Chaos engineering helps answer some of these questions by allowing you to simulate the possibilities of what a failure may look like in your production environment.

For some, using tools like Chaos Monkey has helps produce load and service failures to help create attack simulations. Lately I have been working with Gremlin, which acts as a "Chaos-as-a-Service" through a simple client-server model.

You can see a full tutorial on implementing Gremlin with Azure VM's here.

It happens to us all, but the nightmare ends.

You put in that change, you create a fix, you think you have the deploy right, then things just come crashing down. These things occur, it's how you learn from them that makes the most sense. The nightmare will end, you will learn more from it and hopefully have far more pleasant dreams in the future.

You will at some point run into a failed system, a bad deploy or whatever the circumstance may be. The point of all of this is to be certain you can document your failure, communicate your failure and eventually find ways to avoid it from occurring again in the future. Like Ben, sometimes our failures happen by our own doing. All we can do is take time, reflect and prevent future incidents by not repeating our mistakes.

Some Azure Docs

Some docs and tips that that I really think can help you in thinking about reliability and failure in the cloud are:

Azure Fundamentals on Microsoft Learn – learn how to use the Azure cloud to build applications using highly available and distributed resources.

Introduction to Site Reliability Engineering (SRE) on Microsoft Learn – get the skills from Learn on SRE and why it's important to your business.

Learning from Failure – Jeremiah Dooley – Microsoft Ignite - Incidents will happen—there's no doubt about that. The key question is whether you treat them as a learning opportunity to make your operations practice better or just as a loss of time, money, and reputation.

Set up staging environments in Azure App Service – don't deploy right to prod, use a deployment slot to create a staging environment for your PaaS deployments.

Migrate hundreds of terabytes of data into Azure Cosmos DB – avoid using unmanaged services for your databases. Allow a Database as a service to provide you with greater redundancy and global availability to shrink your expected rate of failure due to a database outage.

Top comments (2)

You might want to go over the transcript of the interview with a fine-toothed comb. It's clearly machine generated, and very very wrong in a few places. ;)

thanks - did some edits!