Since Java 9, a number of ambitious projects have set out to modernize the Java language and improve JVM performance. One of them is Project Loom, which tackles the multithreading model offered by the JDK. According to the project's page:

The goal of this Project is to explore and incubate Java VM features and APIs built on top of them for the implementation of lightweight user-mode threads (fibers), delimited continuations (of some form), and related features, such as explicit tail-call.

This statement is hardly clear to anyone who relies on the project page as their only introduction to this promising, still-incubating feature. The project was, however, presented at well-known tech conferences, notably Devoxx Belgium in 2018 and, before that, an Oracle Java event in 2017. The purpose of this article is to clarify the underlying concepts and the enhancements expected from this important project, which is still under development. Please note that the code excerpts are taken as is from the project pages and are not in a final state.

Motivation Behind

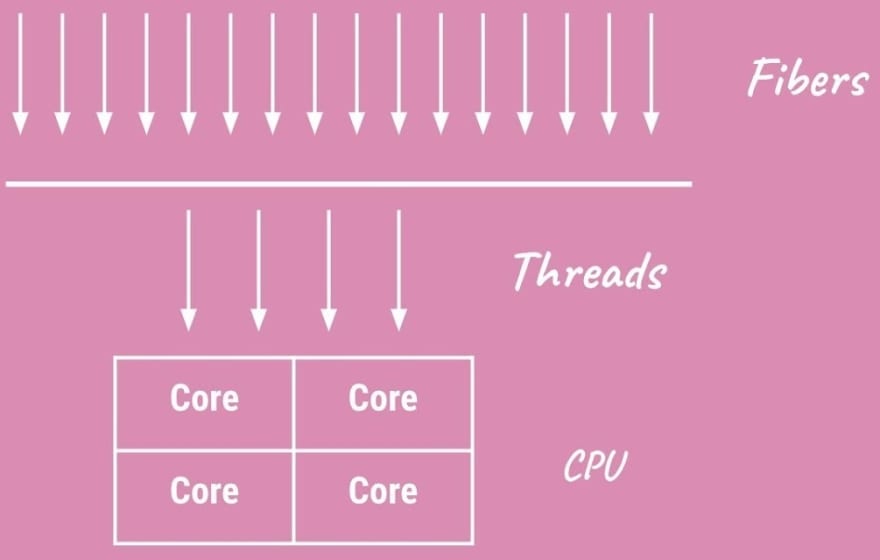

For any serious, production-ready Java code, concurrency is a prominent concern. Failing at concurrency and parallelism dooms the usability of the final product, even if its functional side is near perfect. In other words, real-life Java applications must deal with multiple users, multiple resources, concurrent access, and sporadic calls. For that reason, Java offers a threading model that conforms to the basic low-level concurrency patterns and primitives (locks, concurrent data structures, join and yield calls, etc.). Given this, writing correct asynchronous and concurrent code is an art in itself and a burden for maintenance. On top of this, we have to rely on thread pools, and often on external libraries, to manage threads in Java. Hence the need to make the Java threading API more accessible through user-mode threads (called fibers), as opposed to the low-level standard threads.

fiber = continuation + scheduler

The main idea is to use lightweight, easily managed user-level threads called fibers. Fibers are user-mode threads, meaning they are created and scheduled by the JVM, unlike regular threads, which the JVM obtains from the operating system through system calls. Before going further into fibers, let's look at the concept of a continuation. After all, Project Loom introduces three concepts:

- Fibers

- Continuation

- Tail-calls

Set aside the last concept (tail calls) for the moment, as it has not been developed yet. At the time of writing, the first two concepts are almost ready.

Fibers are built on continuations. A continuation delimits a flow of execution so that it can be played and paused. In other words, a continuation lets us design a piece of code that can be started, paused, and then resumed from the point where it previously stopped. The prototype for this class is:

```java
class _Continuation {
    public _Continuation(_Scope scope, Runnable target)
    public boolean run()
    public static _Continuation suspend(_Scope scope, Consumer<_Continuation> ccc)
    public ? getStackTrace()
}
```
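
To make the run/suspend contract more concrete, here is a minimal sketch that imitates it. The names `ToyContinuation` and `ContinuationDemo` are hypothetical and are not part of Project Loom. The sketch also cheats: it parks a hidden helper thread instead of saving and restoring stack frames, which is precisely the cost real continuations are meant to avoid. It also ignores the scope parameter from the prototype. It only shows the observable behavior: `run()` returns `false` each time the body suspends and `true` once the body has finished.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Semaphore;

// Hypothetical stand-in for a continuation, emulated with a helper thread.
final class ToyContinuation {
    private static final ThreadLocal<ToyContinuation> CURRENT = new ThreadLocal<>();

    private final Semaphore resume = new Semaphore(0);  // caller tells the body to go on
    private final Semaphore yielded = new Semaphore(0); // body tells the caller it stopped
    private final Thread worker;
    private volatile boolean done;
    private boolean started;

    ToyContinuation(Runnable target) {
        worker = new Thread(() -> {
            resume.acquireUninterruptibly(); // wait for the first run()
            CURRENT.set(this);
            try {
                target.run();
            } finally {
                done = true;
                yielded.release();           // hand control back one last time
            }
        });
        worker.setDaemon(true);
    }

    // Runs the body until it suspends or finishes; returns true once it has finished.
    boolean run() {
        if (done) throw new IllegalStateException("continuation already finished");
        if (!started) {
            started = true;
            worker.start();
        }
        resume.release();
        yielded.acquireUninterruptibly();
        return done;
    }

    // Pauses the body that is currently running and returns control to run()'s caller.
    static void suspend() {
        ToyContinuation c = CURRENT.get();
        if (c == null) throw new IllegalStateException("not inside a continuation");
        c.yielded.release();
        c.resume.acquireUninterruptibly();
    }
}

final class ContinuationDemo {
    static List<String> trace() {
        // The semaphore hand-offs order every access to the list, so a plain list is enough.
        List<String> log = new ArrayList<>();
        ToyContinuation c = new ToyContinuation(() -> {
            log.add("step 1");
            ToyContinuation.suspend();
            log.add("step 2");
            ToyContinuation.suspend();
            log.add("step 3");
        });
        while (!c.run()) {
            log.add("paused"); // the caller is free to do other work here
        }
        log.add("done");
        return log;
    }

    public static void main(String[] args) {
        System.out.println(trace()); // prints [step 1, paused, step 2, paused, step 3, done]
    }
}
```

The loop `while (!c.run())` mirrors the way the fiber prototype in the proposal drives its continuation.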

So how are continuations related to fibers? Fibers are meant to be mounted on a thread according to this logic:

- A flow of execution that is meant to run concurrently is wrapped in a continuation.

- When the concurrent work starts, the JVM instantiates as many copies of this delimited continuation as needed.

- A thread is then spawned to carry the execution of one continuation instance.

- When the continuation is preempted (by a blocking call or by an interruption), its call stack is removed from the thread's stack and saved aside to mark the paused execution. This is called parking, and the thread is then free to execute other code.

- When needed, execution resumes by pushing the saved continuation call stack back onto the thread's stack (un-parking) and continuing from there.
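
The park and un-park cycle described above can be imitated with a deliberately simplified model. In this sketch, all names (`CarrierDemo`, `ToyFiber`, `runAll`) are hypothetical. An iterator over named steps stands in for the saved call stack, and the calling thread plays the role of the single carrier thread. Real fibers save actual stack frames, so this only illustrates how one thread interleaves several paused tasks.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Deque;
import java.util.Iterator;
import java.util.List;

final class CarrierDemo {
    // A task whose progress lives in an iterator, standing in for a saved call stack.
    static final class ToyFiber {
        final String name;
        final Iterator<String> steps;

        ToyFiber(String name, String... steps) {
            this.name = name;
            this.steps = Arrays.asList(steps).iterator();
        }
    }

    // Runs all fibers to completion on the calling (carrier) thread, one step at a time.
    static List<String> runAll(ToyFiber... fibers) {
        Deque<ToyFiber> runQueue = new ArrayDeque<>(Arrays.asList(fibers));
        List<String> log = new ArrayList<>();
        while (!runQueue.isEmpty()) {
            ToyFiber f = runQueue.poll();           // un-park: mount the fiber on the carrier
            if (!f.steps.hasNext()) {
                continue;                           // finished: drop it from the queue
            }
            log.add(f.name + ":" + f.steps.next()); // run until the next pause point
            runQueue.add(f);                        // park: keep its state, free the carrier
        }
        return log;
    }

    static List<String> demo() {
        return runAll(
                new ToyFiber("A", "read", "parse", "reply"),
                new ToyFiber("B", "connect", "send"));
    }

    public static void main(String[] args) {
        System.out.println(demo()); // prints [A:read, B:connect, A:parse, B:send, A:reply]
    }
}
```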

From the above, we can see that a single thread can carry the execution of multiple fibers. According to the authors, the existing ForkJoinPool scheduler is well suited to this execution scheme.
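
As a rough illustration of why a ForkJoinPool fits this role, the sketch below submits many small tasks to a pool with only a few worker threads and counts how many distinct threads actually ran them. The class and method names (`PoolDemo`, `distinctCarriers`) are hypothetical, and plain `Runnable` tasks are used in place of fibers, so this shows only the scheduling side of the picture.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.TimeUnit;

final class PoolDemo {
    // Runs `tasks` small jobs on a pool of `carriers` threads and
    // returns the number of distinct threads that executed them.
    static int distinctCarriers(int tasks, int carriers) {
        ForkJoinPool scheduler = new ForkJoinPool(carriers);
        Set<String> threadsSeen = ConcurrentHashMap.newKeySet();
        CountDownLatch finished = new CountDownLatch(tasks);
        try {
            for (int i = 0; i < tasks; i++) {
                scheduler.execute(() -> {
                    threadsSeen.add(Thread.currentThread().getName());
                    finished.countDown();
                });
            }
            if (!finished.await(10, TimeUnit.SECONDS)) {
                throw new IllegalStateException("tasks did not finish in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting", e);
        } finally {
            scheduler.shutdown();
        }
        return threadsSeen.size();
    }

    public static void main(String[] args) {
        int seen = distinctCarriers(1_000, 2);
        System.out.println("1000 tasks ran on " + seen + " thread(s)");
    }
}
```

Because the tasks never block, the pool has no reason to grow beyond its configured parallelism, so a thousand tasks end up sharing at most two threads.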

```java
class _Fiber {
    private final _Continuation cont;
    private final Executor scheduler;
    private volatile State state;
    private final Runnable task;

    private enum State { NEW, LEASED, RUNNABLE, PAUSED, DONE; }

    public _Fiber(Runnable target, Executor scheduler) {
        this.scheduler = scheduler;
        this.cont = new _Continuation(_FIBER_SCOPE, target);
        this.state = State.NEW;
        // The task submitted to the scheduler: run the continuation until it completes
        this.task = () -> {
            while (!cont.run()) {
                if (park0())
                    return; // parking; otherwise, had lease -- continue
            }
            state = State.DONE;
        };
    }

    public void start() {
        if (!casState(State.NEW, State.RUNNABLE))
            throw new IllegalStateException();
        scheduler.execute(task);
    }

    // Called from inside the fiber: suspends the current continuation
    public static void park() {
        _Continuation.suspend(_FIBER_SCOPE, null);
    }

    // Returns true if the fiber really parks, false if a pending lease was consumed
    private boolean park0() {
        State st, nst;
        do {
            st = state;
            switch (st) {
                case LEASED:   nst = State.RUNNABLE; break;
                case RUNNABLE: nst = State.PAUSED;   break;
                default:       throw new IllegalStateException();
            }
        } while (!casState(st, nst));
        return nst == State.PAUSED;
    }

    public void unpark() {
        State st, nst;
        do {
            st = state; // the original excerpt redeclared st here, which does not compile
            switch (st) {
                case LEASED:
                case RUNNABLE: nst = State.LEASED;   break;
                case PAUSED:   nst = State.RUNNABLE; break;
                default:       throw new IllegalStateException();
            }
        } while (!casState(st, nst));
        // Only a fiber that was actually paused needs to be rescheduled
        if (nst == State.RUNNABLE)
            scheduler.execute(task);
    }

    private boolean casState(State oldState, State newState) { ... }
}
```
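
The state transitions in `park0()` and `unpark()` are easier to follow in isolation. The sketch below reproduces only that state machine. It assumes, purely for illustration, that `casState` is backed by an `AtomicReference`; the excerpt above leaves that method elided, so this is one plausible choice and not necessarily the project's. The class name `FiberStateDemo` and the `current()` accessor are hypothetical, and `unpark()` returns a boolean here instead of calling a scheduler.

```java
import java.util.concurrent.atomic.AtomicReference;

final class FiberStateDemo {
    enum State { NEW, LEASED, RUNNABLE, PAUSED, DONE }

    // Assumption: an AtomicReference provides the compare-and-set.
    private final AtomicReference<State> state = new AtomicReference<>(State.NEW);

    private boolean casState(State oldState, State newState) {
        return state.compareAndSet(oldState, newState);
    }

    State current() {
        return state.get();
    }

    void start() {
        if (!casState(State.NEW, State.RUNNABLE))
            throw new IllegalStateException();
    }

    // Returns true if the fiber really pauses, false if a pending lease was consumed.
    boolean park0() {
        State st, nst;
        do {
            st = state.get();
            switch (st) {
                case LEASED:   nst = State.RUNNABLE; break;
                case RUNNABLE: nst = State.PAUSED;   break;
                default:       throw new IllegalStateException();
            }
        } while (!casState(st, nst));
        return nst == State.PAUSED;
    }

    // Returns true if the fiber was paused and must be handed back to the scheduler.
    boolean unpark() {
        State st, nst;
        do {
            st = state.get();
            switch (st) {
                case LEASED:
                case RUNNABLE: nst = State.LEASED;   break;
                case PAUSED:   nst = State.RUNNABLE; break;
                default:       throw new IllegalStateException();
            }
        } while (!casState(st, nst));
        return nst == State.RUNNABLE;
    }

    public static void main(String[] args) {
        FiberStateDemo f = new FiberStateDemo();
        f.start();
        System.out.println(f.park0() + " " + f.current());  // prints true PAUSED
        System.out.println(f.unpark() + " " + f.current()); // prints true RUNNABLE
        System.out.println(f.unpark() + " " + f.current()); // prints false LEASED
        System.out.println(f.park0() + " " + f.current());  // prints false RUNNABLE
    }
}
```

The last two lines show what the LEASED state is for: an `unpark()` that arrives while the fiber is still running is remembered, so the next park attempt returns immediately instead of pausing the fiber.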

Advantages and Limitations

The main advantages of this approach are a lower memory footprint (roughly 100 KB per fiber versus 1 MB per thread), reduced context-switching time between tasks, and a simpler threading model.

The main limitations lie in handling thread stacks that contain native method calls and in the impact on the existing concurrency APIs.

References

- The project main page: https://openjdk.java.net/projects/loom

- The Proposal: http://cr.openjdk.java.net/~rpressler/loom/Loom-Proposal.html

- Belgium 2018 Devoxx Talk: https://www.youtube.com/watch?v=vbGbXUjlRyQ

- Oracle Talk: https://www.youtube.com/watch?v=J31o0ZMQEnI
