Recursive loops in AWS Lambda are the root of all evil because they can run up a large bill very quickly.

You might think the danger is over thanks to the new AWS Lambda recursive loop detection. But what about S3 buckets and Lambda? It's time for a closer look:

A word of caution: be very careful when you test this. You could run up a large AWS bill.

TL;DR: Never ever use the same bucket to trigger a Lambda function and to write its output.

Some testing infrastructure:

1) An S3 bucket (CDK, Go)

```go
bucky := awss3.NewBucket(stack, aws.String("incoming-ring"), &awss3.BucketProps{
	BlockPublicAccess: awss3.BlockPublicAccess_BLOCK_ALL(),
	RemovalPolicy:     awscdk.RemovalPolicy_DESTROY,
})
```

2) A Lambda function resource (CDK, Go)

```go
// dlq (the dead-letter queue) and lambdaPath are defined elsewhere in the stack.
ringFunction := awslambda.NewFunction(stack, aws.String("ring"),
	&awslambda.FunctionProps{
		Description:            aws.String("ring - test recursive loop stop"),
		FunctionName:           aws.String("ring"),
		LogRetention:           awslogs.RetentionDays_THREE_MONTHS,
		MemorySize:             aws.Float64(1024),
		Timeout:                awscdk.Duration_Seconds(aws.Float64(10)),
		Code:                   awslambda.Code_FromAsset(&lambdaPath, &awss3assets.AssetOptions{}),
		Handler:                aws.String("main"),
		Runtime:                awslambda.Runtime_GO_1_X(),
		DeadLetterQueueEnabled: aws.Bool(true),
		DeadLetterQueue:        dlq,
	})
```

3) A Lambda function that receives the PutObject event and writes to the same bucket:

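```go
// bucket is the bucket that triggered this invocation and s3input is the
// object key; both, like client (an *s3.Client), are set up elsewhere.
someString := "hello world\nand hello go and more"
myReader := strings.NewReader(someString)

resp, err := client.PutObject(context.TODO(), &s3.PutObjectInput{
	Bucket: aws.String(bucket),
	Key:    aws.String(s3input),
	Body:   myReader,
})
```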

4) An event source that triggers the Lambda function each time an object is put into the bucket:

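```go
// Invoke the function from step 2 for every object created in the bucket,
// including the objects the function writes itself.
ringFunction.AddEventSource(event.NewS3EventSource(bucky, &event.S3EventSourceProps{
	Events: &[]awss3.EventType{awss3.EventType_OBJECT_CREATED},
}))
```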
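These four pieces close a cycle. The upload fires the event, the function writes an object, and that write fires the event again. The Go sketch below models the cycle in memory and makes no AWS calls. The `bucket` type, `runLoop`, and the `output-N.md` keys are hypothetical. Synchronous calls stand in for S3's asynchronous notifications, and `maxCalls` plays the role of a counter-based stopper.

```go
package main

import "fmt"

// bucket is a hypothetical in-memory stand-in for an S3 bucket. Every put
// calls the registered handler, just as the OBJECT_CREATED event source
// invokes the Lambda function for every new object.
type bucket struct {
	objects map[string]string
	onPut   func(b *bucket, key string) error
}

func (b *bucket) put(key, body string) error {
	b.objects[key] = body
	if b.onPut != nil {
		return b.onPut(b, key)
	}
	return nil
}

// runLoop wires a handler that writes its output back into the triggering
// bucket and returns how many times the handler ran. maxCalls acts as the
// loop stopper; without it the recursion would never end.
func runLoop(maxCalls int) int {
	calls := 0
	b := &bucket{objects: map[string]string{}}
	b.onPut = func(b *bucket, key string) error {
		calls++
		if calls > maxCalls {
			return fmt.Errorf("counter exceeded after %d calls", maxCalls)
		}
		// The handler's own output lands in the same bucket and fires again.
		return b.put(fmt.Sprintf("output-%d.md", calls), "hello world")
	}
	_ = b.put("readme1.md", "# README") // the initial upload
	return calls
}

func main() {
	fmt.Println("handler invocations:", runLoop(20))
}
```

With a limit of 20, the handler runs 21 times: 20 writes, plus the invocation that trips the stopper.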

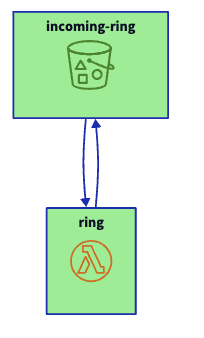

Now we have:

Because I am very careful, I also build a recursive loop stopper into the Lambda function:

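```go
// GlobalCounter is a package-level variable, so it keeps counting across
// warm invocations of the same execution environment.
GlobalCounter++
if GlobalCounter > 20 {
	// log.Fatal prints the message and then calls os.Exit(1) itself,
	// so a separate os.Exit call would never be reached.
	log.Fatal("Counter exceeded")
}
```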
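The stopper only works because `GlobalCounter` is a package-level variable. In a Go Lambda function, such variables keep their value across warm invocations in the same execution environment. When concurrency is limited to 1, the invocations are likely served by a single environment, and the logs below do show a single log stream. The sketch below is a minimal model. The `environment` type is hypothetical and stands in for one execution environment. It also assumes that after `log.Fatal` ends the process, the next invocation starts in a fresh environment.

```go
package main

import "fmt"

// environment is a hypothetical model of one Lambda execution environment.
// Its field plays the role of the package-level GlobalCounter: it survives
// warm invocations in the same environment and starts at zero in a new one.
type environment struct {
	globalCounter int
}

// invoke mimics one handler call: bump the counter and report whether the
// loop stopper would fire for the given limit.
func (e *environment) invoke(limit int) (counter int, stop bool) {
	e.globalCounter++
	return e.globalCounter, e.globalCounter > limit
}

func main() {
	warm := &environment{}
	for i := 0; i < 3; i++ {
		c, _ := warm.invoke(20)
		fmt.Println("warm environment, counter:", c)
	}
	// Assumption: once log.Fatal has ended the process, the next invocation
	// is served by a fresh environment whose counter starts over.
	fresh := &environment{}
	c, _ := fresh.invoke(20)
	fmt.Println("fresh environment, counter:", c)
}
```

Keeping the counter in memory makes it cheap, but it counts per environment, not per loop.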

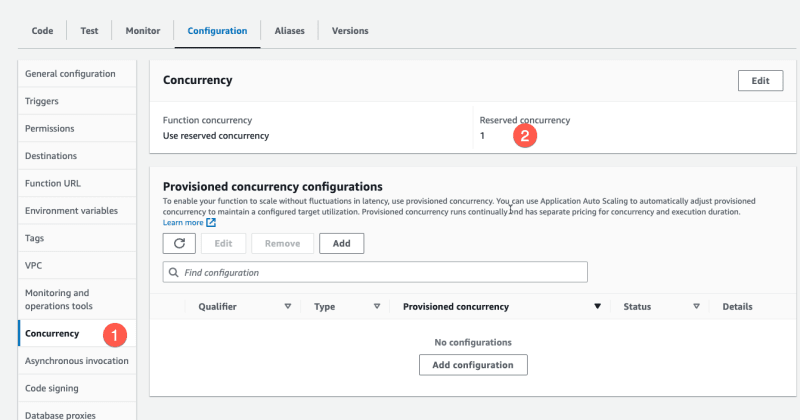

And in the Console, we set the concurrency to 1:

When we test the recursive loop, we always have a browser window with the concurrency open. Because: Setting the concurrency to 0 is the fastest way to stop an endless loop.

The test

Let's test whether S3-Lambda-S3 recursive loops are still possible:

We use saw to tail the Lambda log:

```shell
saw watch /aws/lambda/ring
```

Then we start the recursive loop:

```
$ aws s3 cp README.md s3://ring-incomingring3eb9a9b9-1a8q8cp8qm2ch/readme1.md
upload: ./README.md to s3://ring-incomingring3eb9a9b9-1a8q8cp8qm2ch/readme1.md
```

The logs arrive very quickly:

```text
[2023-07-17T17:34:39+02:00] (2023/07/17/[$LATEST]e27ec4706f1a4fb0a6d3ca1fdb23bbc0) INIT_START Runtime Version: go:1.v18 Runtime Version ARN: arn:aws:lambda:eu-central-1::runtime:ccb68acb59818f9df9b10924cc6c83ca6eaf4067f70ba861c0e211b59e8af729
[2023-07-17T17:34:39+02:00] (2023/07/17/[$LATEST]e27ec4706f1a4fb0a6d3ca1fdb23bbc0) START RequestId: 10fba020-0e93-4430-a9d7-728d34b146c9 Version: $LATEST
[2023-07-17T17:34:39+02:00] (2023/07/17/[$LATEST]e27ec4706f1a4fb0a6d3ca1fdb23bbc0) Counter: 1
[2023-07-17T17:34:39+02:00] (2023/07/17/[$LATEST]e27ec4706f1a4fb0a6d3ca1fdb23bbc0) Etag: 446cce24a7c945503232494e881150ec
[2023-07-17T17:34:39+02:00] (2023/07/17/[$LATEST]e27ec4706f1a4fb0a6d3ca1fdb23bbc0) Seq: 0064B55F8E25E34383
[2023-07-17T17:34:39+02:00] (2023/07/17/[$LATEST]e27ec4706f1a4fb0a6d3ca1fdb23bbc0) END RequestId: 10fba020-0e93-4430-a9d7-728d34b146c9
[2023-07-17T17:34:39+02:00] (2023/07/17/[$LATEST]e27ec4706f1a4fb0a6d3ca1fdb23bbc0) REPORT RequestId: 10fba020-0e93-4430-a9d7-728d34b146c9 Duration: 92.67 ms Billed Duration: 93 ms Memory Size: 1024 MB Max Memory Used: 39 MB Init Duration: 110.22 ms
[2023-07-17T17:34:40+02:00] (2023/07/17/[$LATEST]e27ec4706f1a4fb0a6d3ca1fdb23bbc0) START RequestId: 97cf261e-b272-437f-9637-b7573cfb0fa8 Version: $LATEST
[2023-07-17T17:34:40+02:00] (2023/07/17/[$LATEST]e27ec4706f1a4fb0a6d3ca1fdb23bbc0) Counter: 2
[2023-07-17T17:34:40+02:00] (2023/07/17/[$LATEST]e27ec4706f1a4fb0a6d3ca1fdb23bbc0) Etag: a9c4c5f405a3e4cc6ba3b6b355da0e71
[2023-07-17T17:34:40+02:00] (2023/07/17/[$LATEST]e27ec4706f1a4fb0a6d3ca1fdb23bbc0) Seq: 0064B55F8FD24FBE14
[2023-07-17T17:34:41+02:00] (2023/07/17/[$LATEST]e27ec4706f1a4fb0a6d3ca1fdb23bbc0) END RequestId: 97cf261e-b272-437f-9637-b7573cfb0fa8
[2023-07-17T17:34:41+02:00] (2023/07/17/[$LATEST]e27ec4706f1a4fb0a6d3ca1fdb23bbc0) REPORT RequestId: 97cf261e-b272-437f-9637-b7573cfb0fa8 Duration: 27.20 ms Billed Duration: 28 ms Memory Size: 1024 MB Max Memory Used: 40 MB
```
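Each REPORT line records what you pay for. The rough sketch below adds up the billed compute of the two invocations above in GB-seconds: billed seconds multiplied by configured memory in GB. The regular expressions assume the REPORT format shown here. The sketch leaves out the price per GB-second and the per-request charge, because both depend on region and architecture.

```go
package main

import (
	"fmt"
	"regexp"
	"strconv"
)

var (
	billedRe = regexp.MustCompile(`Billed Duration: (\d+) ms`)
	memoryRe = regexp.MustCompile(`Memory Size: (\d+) MB`)
)

// gbSeconds adds up billed compute from Lambda REPORT lines in GB-seconds:
// billed seconds multiplied by the configured memory in GB.
func gbSeconds(lines []string) float64 {
	total := 0.0
	for _, line := range lines {
		b := billedRe.FindStringSubmatch(line)
		m := memoryRe.FindStringSubmatch(line)
		if b == nil || m == nil {
			continue // not a REPORT line
		}
		ms, _ := strconv.Atoi(b[1])
		mb, _ := strconv.Atoi(m[1])
		total += float64(ms) / 1000 * float64(mb) / 1024
	}
	return total
}

func main() {
	reports := []string{
		"REPORT RequestId: 10fba020-0e93-4430-a9d7-728d34b146c9 Duration: 92.67 ms Billed Duration: 93 ms Memory Size: 1024 MB Max Memory Used: 39 MB Init Duration: 110.22 ms",
		"REPORT RequestId: 97cf261e-b272-437f-9637-b7573cfb0fa8 Duration: 27.20 ms Billed Duration: 28 ms Memory Size: 1024 MB Max Memory Used: 40 MB",
	}
	fmt.Printf("%.3f GB-seconds\n", gbSeconds(reports))
}
```

Two invocations come to only 0.121 GB-seconds. The danger is that an unstopped loop keeps appending lines like these.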

The interesting part is:

```text
Counter: 1
Counter: 2
...
Counter: 19
Counter: 20
Counter exceeded
```

And the AWS blog post about recursive loop detection says:

"Lambda now detects the function running in a recursive loop between supported services after exceeding 16 invocations. It returns a RecursiveInvocationException to the caller."

Because our counter reached 20, well past 16, we can be sure that loop detection does not stop this S3 loop!
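You can automate the same check against the logs. The sketch below scans log lines for the highest `Counter: N` value and compares it with the 16-invocation threshold from the quote. `maxCounter` and `detectionThreshold` are hypothetical names. Because the counter lives in one execution environment, the value is a lower bound on the total number of invocations.

```go
package main

import (
	"fmt"
	"regexp"
	"strconv"
)

// detectionThreshold is the invocation count after which, per the AWS blog
// quote, Lambda stops a loop between supported services.
const detectionThreshold = 16

var counterRe = regexp.MustCompile(`Counter: (\d+)`)

// maxCounter returns the highest "Counter: N" value found in the log lines.
func maxCounter(lines []string) int {
	highest := 0
	for _, line := range lines {
		if m := counterRe.FindStringSubmatch(line); m != nil {
			if n, err := strconv.Atoi(m[1]); err == nil && n > highest {
				highest = n
			}
		}
	}
	return highest
}

func main() {
	logs := []string{"Counter: 1", "Counter: 2", "Counter: 19", "Counter: 20", "Counter exceeded"}
	n := maxCounter(logs)
	fmt.Printf("max counter %d, ran past the detection threshold: %v\n", n, n > detectionThreshold)
}
```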

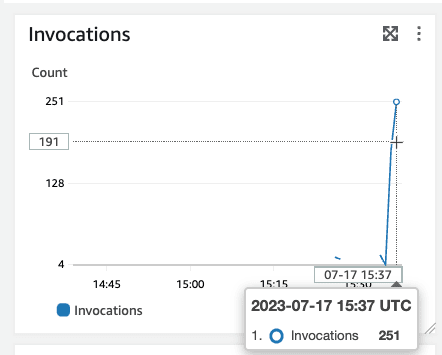

And although I implemented a loop stopper very fast, I started a recursive loop:

So now I set the concurrency to 0 again and delete the Lambda.

Summary

It's proven:

Recursive loop detection does not stop S3 recursive loops, so use different buckets for the event trigger and the Lambda output!

So be careful out there.

And check out my website go-on-aws or, even better :), my new Udemy course GO on AWS - Coding, Serverless and Infrastructure as Code.

Enjoy building!

Gernot
