You have probably noticed the privacy policies listed on the apps or websites you use. They detail how the app collects, stores, and uses the data you provide. This could include your name, date of birth, email address, phone number, health information, and credit card details, depending on the app's business case.

Nowadays, a lot of data is stored in Amazon S3, as it offers great scalability, availability, performance and security. Still, a considerable number of S3 data exposures have come to light in the past, affecting the data of millions of users. The problem is not S3 itself but the misconfiguration of individual S3 buckets. A quick Google search returns a list of these data exposures if you're curious to know more.

This article goes over protecting your S3 data using an AWS service called Amazon Macie. That means more than just securing access to your bucket; it's also about protecting data privacy. To make it a bit fun, let's imagine we're a healthcare provider that offers general medical consultations and treatments. Because we're very good at what we do, we decided to name our practice "The pain-killer".

Disclaimer: Keep in mind that there might be a cost associated with using Amazon Macie - for more details have a look at: https://aws.amazon.com/macie/pricing/

Even though our medical knowledge and practices are excellent, our tech is still primitive. So we decided to bring on board one of the most experienced engineers in the neighbourhood to help us out. We first wanted to move all the prescriptions, stored as txt files, from the local computers to the cloud. Hence, our first S3 bucket was created, using Infrastructure as Code and all.

Set up the S3 bucket

With Terraform, the code looks as follows:

    provider "aws" {
      region = "ap-southeast-2"
    }

    variable "bucketName" {
      default = "the-pain-killer-practice"
      type    = string
    }

    resource "aws_s3_bucket" "thePainKiller" {
      bucket = var.bucketName
    }

We were lucky that no other S3 bucket had the same name, as bucket names need to be globally unique.

Upload the data

Once the bucket was deployed using the terraform apply command, the engineer collected the data and uploaded it to S3, grouping the files in folders. (Side note: there is no real folder concept in S3. The folders you see in the AWS console are mainly a neat way of displaying objects based on their names, e.g. folder/subfolder/file.)

As a medical practice, our text files contain PHI (Protected Health Information). This includes information about the patients, their conditions, treatments, insurance information, test results and other data. It is critical to keep this data secure and private, not just because of our high ethical values but also because a leak could cause us legal and reputational problems, which no one wants.

Create a text file containing data similar to the following:

    name: Jane Doe
    address: Campbell Road (anything smaller than a state)
    date of birth: 12/12/2012
    email address: jane.doe@test.com
    medical record number: 4432134
    ...
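If you would rather keep the upload in Terraform as well, a minimal sketch could look like the following. It assumes the aws_s3_bucket.thePainKiller resource from earlier in this post; the resource label, the object key and the inline content are made up for illustration. Note how the "folder" is nothing more than a prefix in the object key.

```hcl
# Hypothetical sample object. The "prescriptions/" prefix is what the
# AWS console displays as a folder.
resource "aws_s3_object" "sample_prescription" {
  bucket       = aws_s3_bucket.thePainKiller.id
  key          = "prescriptions/jane-doe.txt"
  content_type = "text/plain"

  # Inline sample data (fake values, for testing only).
  content = <<-EOT
    name: Jane Doe
    address: Campbell Road
    date of birth: 12/12/2012
    email address: jane.doe@test.com
    medical record number: 4432134
  EOT
}
```

For real files you would more likely point the source argument at a local path instead of inlining the content.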

Protect the data with Amazon Macie

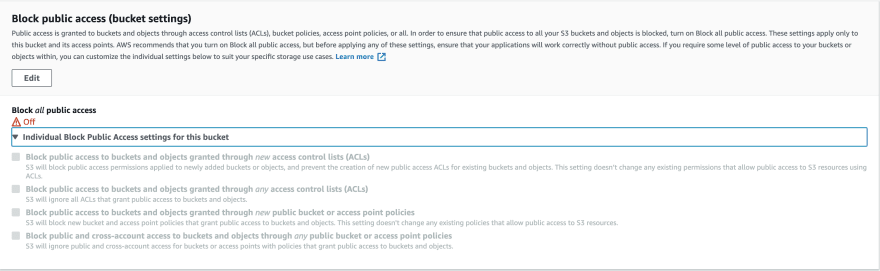

As our hypothetical engineer explored the S3 bucket in the AWS console, they figured out that the Block all public access was turned off:

Even though this doesn't directly make all of the objects publicly accessible, it is still risky to keep it off: it's another layer of security that ensures our data doesn't leak. Apparently, this setting needs to be enabled explicitly when using Terraform (this is not the case when using tools such as CloudFormation).
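One way to turn the setting on from Terraform is the aws_s3_bucket_public_access_block resource. The snippet below is a minimal sketch that assumes the aws_s3_bucket.thePainKiller resource defined earlier; the resource label is arbitrary.

```hcl
# Enables all four "Block public access" switches for the bucket.
resource "aws_s3_bucket_public_access_block" "thePainKiller" {
  bucket = aws_s3_bucket.thePainKiller.id

  block_public_acls       = true # reject new public ACLs
  block_public_policy     = true # reject new public bucket policies
  ignore_public_acls      = true # ignore any existing public ACLs
  restrict_public_buckets = true # restrict access if a public policy exists
}
```

If you want to follow along and see Macie raise a policy finding later in this post, hold off on applying this until after the classification job has run.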

Our engineer did some additional research and decided to use Amazon Macie, a data security and privacy service that monitors and protects data in S3.

Here's how to enable it with Terraform:

    resource "aws_macie2_account" "test" {
      finding_publishing_frequency = "FIFTEEN_MINUTES"
      status                       = "ENABLED"
    }

This resource enables Macie and allows you to specify the settings for your Macie account. The finding_publishing_frequency value can be set to FIFTEEN_MINUTES, ONE_HOUR or SIX_HOURS.

Run terraform apply to deploy your changes. On the Macie page in the AWS console, you should see a dashboard with some metrics about your S3 buckets.

Afterwards, add a Macie classification job for the bucket we created:

    data "aws_caller_identity" "current" {}

    resource "aws_macie2_classification_job" "test" {
      job_type = "ONE_TIME"
      name     = "PAIN_KILLER"

      s3_job_definition {
        bucket_definitions {
          account_id = data.aws_caller_identity.current.account_id
          buckets    = [var.bucketName]
        }
      }

      depends_on = [aws_macie2_account.test]
    }

A classification job is a set of settings that Macie uses to analyse the specified S3 bucket(s) for sensitive data. Instead of the ONE_TIME type used in the example above, you can set job_type to SCHEDULED and have the job run daily, weekly, or monthly.

Run terraform apply to deploy your changes. Navigate to the Macie page in the AWS console, then select Jobs. You should see the job you created running.
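As a rough sketch of the recurring variant, the job below runs every Monday. It reuses the data source, variable and Macie account resource from the snippets above; the resource label, the job name and the chosen day are just examples.

```hcl
resource "aws_macie2_classification_job" "weekly" {
  job_type = "SCHEDULED"
  name     = "PAIN_KILLER_WEEKLY" # hypothetical name

  # Pick exactly one of daily_schedule, weekly_schedule or monthly_schedule.
  schedule_frequency {
    weekly_schedule = "MONDAY"
  }

  s3_job_definition {
    bucket_definitions {
      account_id = data.aws_caller_identity.current.account_id
      buckets    = [var.bucketName]
    }
  }

  depends_on = [aws_macie2_account.test]
}
```

A scheduled job keeps incurring Macie charges on every run, so check the pricing page before leaving one enabled.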

Macie reports its results as findings, which are either policy findings or sensitive data findings.

- A policy finding relates to a configuration that reduces the security of your S3 bucket. Can you guess which setting we haven't configured securely?

- A sensitive data finding relates to objects that contain credentials, customer identifiers, financial records, health records and similar data. Do we have some of these in our files?

Navigate to the "Findings" tab in the left panel of the AWS console. Notice that both a policy finding and a sensitive data finding were reported (one related to "Block public access" being disabled on the bucket and the other related to the date of birth).

What's next?

You can set up an integration with Amazon EventBridge, where the findings are delivered and can then be routed to another AWS service such as Lambda or SNS. This enables you to notify the team so they can take action when something unexpected happens regarding data privacy.
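A possible starting point for the SNS route is sketched below. All resource labels and names are hypothetical, and the topic still needs a subscription (an email address, for example) before anyone gets notified.

```hcl
# Hypothetical SNS topic that receives Macie findings.
resource "aws_sns_topic" "macie_findings" {
  name = "macie-findings"
}

# Match the finding events that Macie publishes to the default event bus.
resource "aws_cloudwatch_event_rule" "macie_findings" {
  name        = "macie-findings"
  description = "Forward Amazon Macie findings to SNS"

  event_pattern = jsonencode({
    source        = ["aws.macie"]
    "detail-type" = ["Macie Finding"]
  })
}

# Route matched events to the topic.
resource "aws_cloudwatch_event_target" "macie_to_sns" {
  rule = aws_cloudwatch_event_rule.macie_findings.name
  arn  = aws_sns_topic.macie_findings.arn
}

# Allow EventBridge to publish to the topic.
data "aws_iam_policy_document" "allow_eventbridge" {
  statement {
    effect    = "Allow"
    actions   = ["sns:Publish"]
    resources = [aws_sns_topic.macie_findings.arn]

    principals {
      type        = "Service"
      identifiers = ["events.amazonaws.com"]
    }
  }
}

resource "aws_sns_topic_policy" "macie_findings" {
  arn    = aws_sns_topic.macie_findings.arn
  policy = data.aws_iam_policy_document.allow_eventbridge.json
}
```

Swapping the target ARN for a Lambda function would work along the same lines, with a Lambda permission in place of the topic policy.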

This post covers "Managed Data Identifiers", which return common findings similar to those we mentioned earlier. Another excellent feature that Macie offers is "Custom Data Identifiers", where you can set up rules to detect cases specific to your business, using a regular expression that defines the text pattern to match.
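For our practice, a custom data identifier for medical record numbers might look like the sketch below. The seven-digit format is an assumption based on the sample file, and the resource label and name are made up.

```hcl
resource "aws_macie2_custom_data_identifier" "medical_record_number" {
  name        = "medical-record-number"
  description = "Seven-digit medical record numbers (assumed format)"

  # Text pattern to match; adjust it to your real record number format.
  regex = "[0-9]{7}"

  # Only report a match when one of these keywords appears nearby.
  keywords               = ["medical record number"]
  maximum_match_distance = 50

  depends_on = [aws_macie2_account.test]
}
```

To have a classification job use it, add custom_data_identifier_ids = [aws_macie2_custom_data_identifier.medical_record_number.id] to the job resource.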

How do you protect the data privacy of your customers?
