Zero trust security is gaining a lot of prominence due to the dynamic nature of cloud-based, microservices-driven applications, but implementing it is much easier said than done, especially when the microservices sit behind proxies, load balancers and service meshes. Zero trust security is a model in which application microservices are treated as discrete from one another and no microservice trusts any other. In practice, this means a security posture that treats input from any source as potentially malicious: every resource must be subject to strict identity verification, regardless of whether it sits inside or outside the network perimeter.

Given the dynamic nature of microservices, you'll need a service mesh such as Istio to handle the heavy lifting associated with zero trust security. Istio offers additional layer 4–7 firewall controls, encryption over the wire, and advanced service and end-user authentication and authorization options for services in the mesh, along with detailed connection logging and metrics. As with most of Istio's capabilities, these are all powered under the hood by the Envoy proxy, which runs as a sidecar container beside each application instance in the mesh.

End-to-end in-transit encryption is one of the key enablers of zero trust security. In this article, we'll look at some of the options for implementing end-to-end in-transit encryption for microservices running on AWS EKS with Istio as the service mesh. End-to-end in-transit encryption is particularly important when you handle sensitive information, and for PCI DSS in-scope workloads it is a must-have capability.

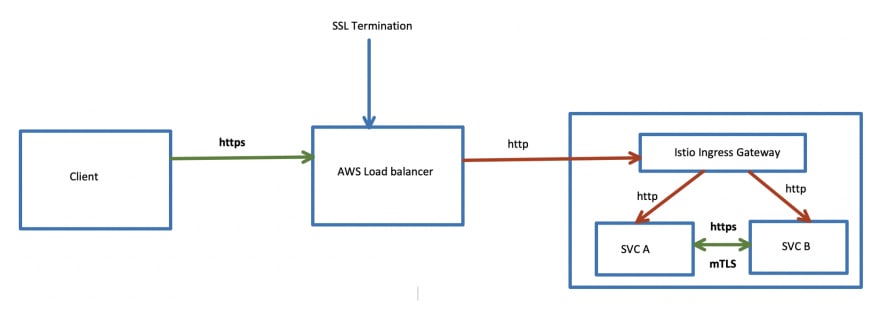

The Problem — Unencrypted traffic between ELB and the backend microservice

HTTPS traffic originates from the client and terminates at the ELB, which uses an SSL/TLS certificate issued by AWS Certificate Manager (ACM). As a result, all traffic from the client to the ELB is encrypted.

Microservices should be configured to accept both HTTP and HTTPS traffic. Communication between microservices happens over HTTPS, on top of the mTLS provided by the Istio sidecars.

After SSL termination at the ELB, the traffic is relayed to the backend microservices via the Istio Ingress Gateway in plain HTTP. This is not ideal, because traffic on that unencrypted hop is exposed to both internal and external attacks.

The problem of unencrypted traffic between the ELB and the backend microservice can be addressed by either of the solutions outlined below.

Solution 1: SSL Termination at the backend Microservices via AWS NLB and Istio

HTTPS traffic originates from the client and is relayed to the backend microservice via the AWS NLB and the Istio Ingress Gateway. SSL termination happens at the backend microservice.

Both the AWS NLB and the Istio Ingress Gateway are configured for SSL passthrough, so that HTTPS traffic terminates on the backend microservice.

All microservices that serve external, internet-bound traffic should be configured with a public SSL/TLS certificate issued by an external CA, so that clients can trust their identity.
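For the NLB half of solution 1, here is a minimal sketch that assumes the in-tree AWS cloud provider that was current around Istio 1.5. In that provider, the `service.beta.kubernetes.io/aws-load-balancer-type: nlb` annotation requests an NLB. Without an SSL certificate annotation, the NLB's listeners stay plain TCP, so the client's TLS stream passes through untouched. The helper name is hypothetical. The load balancer type is generally fixed when the Service is created, so these annotations belong in the gateway Service's definition rather than in a later patch.

```python
import json

NLB_TYPE_ANNOTATION = "service.beta.kubernetes.io/aws-load-balancer-type"
SSL_CERT_ANNOTATION = "service.beta.kubernetes.io/aws-load-balancer-ssl-cert"


def nlb_passthrough_metadata(name: str = "istio-ingressgateway",
                             namespace: str = "istio-system") -> dict:
    """Service metadata requesting an NLB that does not terminate TLS.

    Hypothetical helper: only the annotation key and value come from the
    AWS cloud provider; the rest is illustrative.
    """
    return {
        "name": name,
        "namespace": namespace,
        "annotations": {
            # Provision a Network Load Balancer instead of a Classic ELB.
            NLB_TYPE_ANNOTATION: "nlb",
            # Deliberately no SSL_CERT_ANNOTATION: without a certificate the
            # NLB listener is plain TCP, so TLS reaches the gateway intact.
        },
    }


if __name__ == "__main__":
    print(json.dumps(nlb_passthrough_metadata(), indent=2))
```

The Istio side of the passthrough is the same PASSTHROUGH Gateway used in solution 2.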

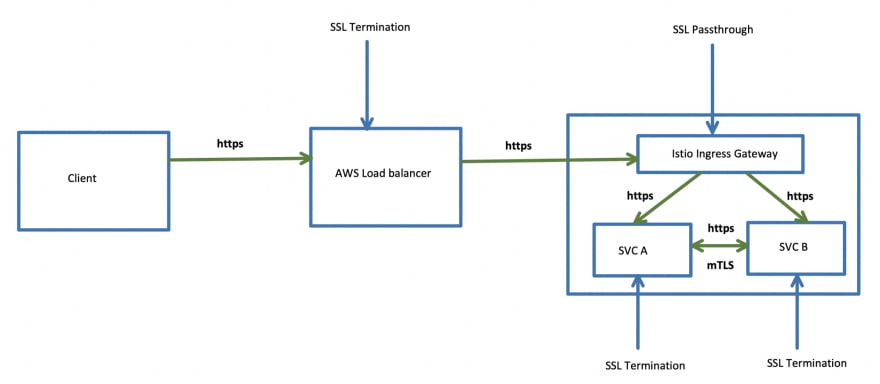

Solution 2: SSL Termination at the backend Microservices via AWS ELB and Istio

HTTPS traffic originates from the client and terminates at the ELB, which uses an SSL/TLS certificate issued by AWS Certificate Manager.

The ELB then opens a second HTTPS connection to the backend microservice, as defined by its listener configuration.

All the microservices including the Istio Ingress Gateway use SSL/TLS certificates generated by an internal CA.

The HTTPS traffic from the ELB is relayed to the backend microservice via the Istio Ingress Gateway. To achieve this, you have to configure the Istio Ingress Gateway to perform SSL passthrough.

Solution 2 in action

In this section, we'll look at how to implement solution 2 for end-to-end in-transit encryption. The setup looks like this:

I have an EKS cluster with three worker nodes (spot instances) running in the AWS Singapore region (ap-southeast-1).

Istio has been deployed in the cluster using the istioctl utility.

I have a domain named rahulnatarajan.co.in, and I created a CNAME record in my DNS that points to the Istio Ingress Gateway's ELB.

I have a public SSL/TLS certificate issued by AWS ACM for the *.rahulnatarajan.co.in domain. ACM supports wildcard SSL/TLS certificates.

The Istio Ingress Gateway has been exposed via an AWS ELB with the following listener configuration for HTTPS traffic. The SSL/TLS certificate issued by AWS ACM has been associated with the Istio Ingress Gateway's ELB.

In this listener configuration, both the Load Balancer Protocol and the Instance Protocol are HTTPS. This is the key setting for end-to-end encryption: the ELB decrypts the client's traffic and re-encrypts it before forwarding it to the instances.

Port 31390, the instance port, is the Istio Ingress Gateway's secure port for HTTPS communication. This node port is allocated by Kubernetes to the Istio Ingress Gateway service.
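If you prefer to declare this listener on the Kubernetes side rather than in the EC2 console, a sketch along these lines may work. It assumes the in-tree AWS cloud provider's Classic ELB annotations. The certificate ARN below is a hypothetical placeholder, not a real value. After applying it, confirm in the console that the listener really is HTTPS on the load balancer side and HTTPS to node port 31390 on the instance side.

```python
import json

# Placeholder ARN (hypothetical): substitute the ARN of your ACM certificate.
ACM_CERT_ARN = "arn:aws:acm:ap-southeast-1:123456789012:certificate/EXAMPLE"


def https_reencrypt_annotations(cert_arn: str) -> dict:
    """Classic ELB annotations: terminate HTTPS, then re-encrypt to the backend."""
    return {
        # Certificate the ELB presents to clients (Load Balancer Protocol HTTPS).
        "service.beta.kubernetes.io/aws-load-balancer-ssl-cert": cert_arn,
        # Apply the certificate only to the Service port named "https".
        "service.beta.kubernetes.io/aws-load-balancer-ssl-ports": "https",
        # Instance Protocol HTTPS: the ELB opens a new TLS connection to the node port.
        "service.beta.kubernetes.io/aws-load-balancer-backend-protocol": "https",
    }


if __name__ == "__main__":
    # Merge patch for the istio-ingressgateway Service.
    patch = {"metadata": {"annotations": https_reencrypt_annotations(ACM_CERT_ARN)}}
    print(json.dumps(patch))
```

One way to apply it would be `kubectl -n istio-system patch svc istio-ingressgateway --type merge -p "$(python3 elb_listener.py)"`, where elb_listener.py is a hypothetical file name for the script above.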

With this base configuration in place, let's deploy a couple of services: Nginx and Sleep (a small curl client we'll use for testing). First, generate a self-signed SSL/TLS certificate for the Nginx service. I used my domain name igw.rahulnatarajan.co.in as the CN in the certificate request:

```shell
# Self-signed certificate plus an unencrypted private key, valid for 365 days
openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout /tmp/nginx.key -out /tmp/nginx.crt -subj "/CN=igw.rahulnatarajan.co.in/O=acn"
```

Create a Kubernetes TLS secret holding the Nginx certificate and key:

```shell
kubectl create secret tls nginxsecret --key /tmp/nginx.key --cert /tmp/nginx.crt
```
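The minimal Python sketch below builds by hand the equivalent of the object `kubectl create secret tls` produces. The helper names are hypothetical. The `kubernetes.io/tls` type and the fixed `tls.crt` / `tls.key` data keys are what kubectl uses. Those key names are why the Nginx configuration refers to files called tls.crt and tls.key.

```python
import base64
import json
from pathlib import Path


def _b64(data: bytes) -> str:
    """Secret data values are base64-encoded strings."""
    return base64.b64encode(data).decode("ascii")


def tls_secret_manifest(name: str, cert_pem: bytes, key_pem: bytes) -> dict:
    """Build a kubernetes.io/tls Secret like `kubectl create secret tls` does."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "kubernetes.io/tls",
        # Mounted as a volume, each data key becomes a file of the same name.
        "data": {"tls.crt": _b64(cert_pem), "tls.key": _b64(key_pem)},
    }


def manifest_from_files(name: str, cert_path: str, key_path: str) -> str:
    """Read the PEM files produced by openssl and return the manifest as JSON."""
    manifest = tls_secret_manifest(
        name, Path(cert_path).read_bytes(), Path(key_path).read_bytes()
    )
    return json.dumps(manifest, indent=2)
```

For example, `print(manifest_from_files("nginxsecret", "/tmp/nginx.crt", "/tmp/nginx.key"))` prints JSON that can be piped to `kubectl apply -f -`.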

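Create an Nginx configuration file named default.conf with the following contents:

```nginx
server {
    # Terminate TLS inside the pod on port 443.
    listen 443 ssl;

    root /usr/share/nginx/html;
    index index.html;
    server_name localhost;

    # Paths where the tls.crt and tls.key entries of nginxsecret are expected to be mounted.
    ssl_certificate /etc/nginx/ssl/tls.crt;
    ssl_certificate_key /etc/nginx/ssl/tls.key;
}
```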

Create a ConfigMap used for the Nginx HTTPS service:

```shell
kubectl create configmap nginxconfigmap --from-file=default.conf
```

Deploy the Nginx HTTPS service. I'm using the sample Nginx deployment that ships with Istio 1.5.2. You can download the Istio release from here. After downloading it, navigate to the Istio folder and deploy the Nginx HTTPS service with the sidecar injected:

```shell
kubectl apply -f <(istioctl kube-inject -f samples/https/nginx-app.yaml)
```
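The Nginx configuration only works if the secret and the ConfigMap end up at the paths it references. The sketch below is a hypothetical pod spec fragment showing that wiring, not a copy of samples/https/nginx-app.yaml. The volume names and the image tag are assumptions. The TLS secret is mounted at /etc/nginx/ssl, so tls.crt and tls.key land where `ssl_certificate` and `ssl_certificate_key` expect them. The ConfigMap is mounted at /etc/nginx/conf.d, the directory from which the official nginx image includes `*.conf` files.

```python
import json


def nginx_https_pod_spec(secret_name: str = "nginxsecret",
                         configmap_name: str = "nginxconfigmap") -> dict:
    """Hypothetical pod spec fragment wiring the TLS secret and config into Nginx."""
    return {
        "volumes": [
            {"name": "secret-volume", "secret": {"secretName": secret_name}},
            {"name": "configmap-volume", "configMap": {"name": configmap_name}},
        ],
        "containers": [
            {
                "name": "nginx",
                "image": "nginx",
                "ports": [{"containerPort": 443}],
                "volumeMounts": [
                    # Exposes tls.crt and tls.key as files under /etc/nginx/ssl.
                    {"name": "secret-volume", "mountPath": "/etc/nginx/ssl"},
                    # Exposes default.conf, replacing the image's default server block.
                    {"name": "configmap-volume", "mountPath": "/etc/nginx/conf.d"},
                ],
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(nginx_https_pod_spec(), indent=2))
```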

Deploy the Sleep service from the Istio samples folder. We'll use it to test HTTPS connectivity to Nginx:

```shell
kubectl apply -f <(istioctl kube-inject -f samples/sleep/sleep.yaml)
```

Create an Istio Gateway and a VirtualService definition to allow external traffic into the cluster.
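The sketch below expresses a Gateway and VirtualService pair of this kind as Python dicts. It prints them as a JSON `List`, which `kubectl apply -f -` accepts. It follows the shape of Istio's TLS-passthrough examples for the `networking.istio.io/v1alpha3` API used by Istio 1.5. The resource names and the `my-nginx` Service name are assumptions, not necessarily what was deployed here.

```python
import json

HOST = "igw.rahulnatarajan.co.in"
GATEWAY_NAME = "nginx-gateway"  # hypothetical name
NGINX_SERVICE = "my-nginx"      # assumed name of the Nginx HTTPS Service


def gateway(host: str) -> dict:
    return {
        "apiVersion": "networking.istio.io/v1alpha3",
        "kind": "Gateway",
        "metadata": {"name": GATEWAY_NAME},
        "spec": {
            "selector": {"istio": "ingressgateway"},
            "servers": [{
                "port": {"number": 443, "name": "https", "protocol": "HTTPS"},
                # PASSTHROUGH: forward the TLS stream as-is so Nginx terminates it.
                "tls": {"mode": "PASSTHROUGH"},
                "hosts": [host],
            }],
        },
    }


def virtual_service(host: str) -> dict:
    return {
        "apiVersion": "networking.istio.io/v1alpha3",
        "kind": "VirtualService",
        "metadata": {"name": "nginx"},
        "spec": {
            "hosts": [host],
            "gateways": [GATEWAY_NAME],
            # A tls route, not an http route: the gateway never sees HTTP
            # headers, so it matches on the SNI host in the ClientHello.
            "tls": [{
                "match": [{"port": 443, "sniHosts": [host]}],
                "route": [{"destination": {"host": NGINX_SERVICE,
                                           "port": {"number": 443}}}],
            }],
        },
    }


if __name__ == "__main__":
    items = [gateway(HOST), virtual_service(HOST)]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

Usage would be `python3 nginx_gateway.py | kubectl apply -f -` (the file name is hypothetical). Because the route matches on SNI, it is worth confirming that the connections reaching the gateway carry the igw.rahulnatarajan.co.in server name. If they do not, the match would need to be loosened.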

The Istio Gateway definition uses TLS in PASSTHROUGH mode, so SSL is terminated by the Nginx HTTPS service rather than by the gateway.

The VirtualService definition then routes this traffic to the Nginx HTTPS service, so the connection stays encrypted with HTTPS all the way to the pod.

Now that we have secured the entire communication channel with SSL encryption, let’s go ahead and test it from our browser.

The browser shows that the connection is secure and uses a valid public SSL/TLS certificate issued by AWS ACM.
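The same check can be scripted. Here is a minimal Python sketch using only the standard library; both helper names are hypothetical. `fetch_peer_cert` performs a fully verified TLS handshake, checking the chain and hostname as the browser does, and returns the decoded certificate. `issuer_organization` reads the issuer's organizationName from that certificate. For an ACM-issued certificate we would expect an Amazon organization, but treat that as something to confirm, not a guarantee.

```python
import socket
import ssl
from typing import Optional


def fetch_peer_cert(host: str, port: int = 443) -> dict:
    """Connect with full certificate verification and return the peer cert."""
    context = ssl.create_default_context()  # verifies chain and hostname
    with socket.create_connection((host, port), timeout=10) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()


def issuer_organization(cert: dict) -> Optional[str]:
    """Return the issuer's organizationName from a getpeercert() dict."""
    # The issuer is a tuple of RDNs; each RDN is a tuple of (name, value) pairs.
    for rdn in cert.get("issuer", ()):
        for name, value in rdn:
            if name == "organizationName":
                return value
    return None
```

For example, call `issuer_organization(fetch_peer_cert("igw.rahulnatarajan.co.in"))`. If verification fails, `wrap_socket` raises `ssl.SSLCertVerificationError`, which is itself a useful signal.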

You can also verify that the connection is secure inside the cluster. I'm running the curl command from the Istio Ingress Gateway pod. Please note that the ELB is not configured to validate the Nginx HTTPS service's SSL/TLS certificate. That is acceptable here because ELBs run exclusively on the AWS VPC network, a software-defined network that encapsulates and authenticates traffic at the packet level. In short, traffic cannot be man-in-the-middled or spoofed on the VPC network; this is one of AWS's core security guarantees.
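A request that behaves like `curl -k`, encrypting the connection but skipping certificate validation, can be sketched in Python as below. It assumes a pod with Python available inside the cluster. It uses `my-nginx` as an assumed Service name, and `unverified_context` and `get_status` are hypothetical helpers. Skipping validation only makes sense for a self-signed certificate like the one generated for Nginx here.

```python
import http.client
import ssl


def unverified_context() -> ssl.SSLContext:
    """TLS context that encrypts but skips certificate checks (like curl -k)."""
    context = ssl.create_default_context()
    # check_hostname must be switched off before verify_mode can be CERT_NONE.
    context.check_hostname = False
    context.verify_mode = ssl.CERT_NONE
    return context


def get_status(host: str, port: int = 443) -> int:
    """Issue GET / over HTTPS without validating the server certificate."""
    conn = http.client.HTTPSConnection(host, port, timeout=10,
                                       context=unverified_context())
    try:
        conn.request("GET", "/")
        return conn.getresponse().status
    finally:
        conn.close()
```

From a pod in the mesh, `get_status("my-nginx")` should return the HTTP status code of the Nginx index page.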

If I try to connect to the Nginx HTTPS service over HTTP, the connection fails, as shown in the following screenshot.

Next, test HTTPS connectivity from the Sleep pod. The connection should succeed.

When the Istio sidecar is deployed alongside an HTTPS service, the proxy automatically downgrades from L7 to L4 handling (whether or not mutual TLS is enabled), which means it does not terminate the original HTTPS traffic. This is why Istio works with HTTPS services.
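Staying at L4 still leaves a proxy something to route on. The TLS ClientHello is sent in the clear, and its Server Name Indication (SNI) field names the target host. In Envoy this job falls to the TLS Inspector listener filter. The Python sketch below is not Envoy's or Istio's code, just a simplified illustration of the idea. It parses the SNI out of a ClientHello without decrypting anything, assuming the whole ClientHello arrives in the first TLS record. `client_hello_for` generates a real ClientHello with the standard library's `ssl.MemoryBIO`, so no network is needed.

```python
import ssl
import struct
from typing import Optional

TLS_HANDSHAKE_RECORD = 0x16  # content type of a TLS handshake record
CLIENT_HELLO = 0x01          # handshake message type
SERVER_NAME_EXT = 0x0000     # extension type for SNI (RFC 6066)


def extract_sni(data: bytes) -> Optional[str]:
    """Return the SNI host name in a TLS ClientHello, or None.

    Reads only plaintext handshake fields; nothing is decrypted.
    Assumes the ClientHello fits in the first record.
    """
    try:
        if data[0] != TLS_HANDSHAKE_RECORD or data[5] != CLIENT_HELLO:
            return None  # e.g. a plain-HTTP request starts with b"GET"
        pos = 5 + 4 + 2 + 32  # record hdr, handshake hdr, version, random
        pos += 1 + data[pos]  # session id
        (cipher_len,) = struct.unpack_from("!H", data, pos)
        pos += 2 + cipher_len
        pos += 1 + data[pos]  # compression methods
        (ext_len,) = struct.unpack_from("!H", data, pos)
        pos += 2
        end = pos + ext_len
        while pos + 4 <= end:
            ext_type, length = struct.unpack_from("!HH", data, pos)
            pos += 4
            if ext_type == SERVER_NAME_EXT:
                # list length (2), name type (1, 0 = host_name), name length (2)
                if data[pos + 2] != 0:
                    return None
                (name_len,) = struct.unpack_from("!H", data, pos + 3)
                return data[pos + 5 : pos + 5 + name_len].decode("ascii")
            pos += length
    except (IndexError, struct.error, UnicodeDecodeError):
        pass  # truncated or malformed input
    return None


def client_hello_for(host: str) -> bytes:
    """Produce a real ClientHello by starting a handshake into memory buffers."""
    incoming, outgoing = ssl.MemoryBIO(), ssl.MemoryBIO()
    tls = ssl.create_default_context().wrap_bio(incoming, outgoing,
                                                server_hostname=host)
    try:
        tls.do_handshake()
    except ssl.SSLWantReadError:
        pass  # expected: the handshake waits for a ServerHello that never comes
    return outgoing.read()


if __name__ == "__main__":
    print(extract_sni(client_hello_for("igw.rahulnatarajan.co.in")))
    print(extract_sni(b"GET / HTTP/1.1\r\nHost: my-nginx\r\n\r\n"))
```

Running it prints `igw.rahulnatarajan.co.in` for the generated ClientHello. For the plain-HTTP request it prints `None`, because that request starts with `G` (0x47) rather than the handshake record type 0x16. The same mismatch is why a TLS-only port rejects plain HTTP.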

Security experts largely support end-to-end encryption because it better protects your data from hackers and other malicious actors. When information is protected end to end, there is no point in intercepting it partway along the path, because it remains encrypted. Given the advantages of end-to-end security, and how easily you can set it up with AWS ACM and Istio, you should consider it for your microservices-based applications to head off potential security nightmares.
