Have you ever tried to handle a ton of requests at once with AWS Lambda? What if you had a latency-critical operation where response times need to come down to single-digit milliseconds?

In a previous article, I discussed how to speed up your Lambda function, touching on the concept of a 'cold start' and how to reduce its impact by moving one-off setup code, such as establishing a DB connection, outside the Lambda handler.

When we send a request to execute a Lambda function, the service initialises an execution environment: a micro virtual machine (microVM) dedicated to each concurrent invocation of the function. Within this VM, there's a kernel, a runtime (e.g. Node.js), the function code, and any extensions code. The cold start period includes creating this environment, downloading the code, and initialising the runtime and extensions. It also includes running the 'Init' code (the code you write outside the handler function).

With multiple consecutive executions, the Lambda service reuses a previously created execution environment and only executes the code within the handler function (that's what we call a warm start). That's fascinating and is sufficient in lots of cases. However, in some instances we need to execute a single Lambda function concurrently. Each simultaneous invocation needs its own execution environment, so when no idle environment is available Lambda creates a new one. Those invocations cannot benefit from a warm environment, and each of them pays the cold start.
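To make the difference concrete, here is a minimal local simulation of the behaviour described above. It does not call AWS at all: `createEnvironment` and the `coldStart` flag are hypothetical names used only for illustration, and the model assumes that every concurrent request lands on a brand-new environment.

```javascript
// Sketch: model one execution environment as a factory function.
// The "Init" code runs once when the environment is created;
// the handler runs on every invocation routed to that environment.
const createEnvironment = () => {
  // Init phase: in a real Lambda file this is the code outside the handler.
  let invocations = 0;

  // Handler: the only code that runs again on a warm start.
  const handler = async (event) => {
    invocations += 1;
    return {
      coldStart: invocations === 1, // true only for the first call in this environment
      invocations,
      event,
    };
  };

  return { handler };
};

const main = async () => {
  // Consecutive invocations reuse one environment: cold first, warm afterwards.
  const env = createEnvironment();
  const first = await env.handler({ id: 1 });
  const second = await env.handler({ id: 2 });

  // Concurrent invocations each get their own environment: all of them are cold.
  const concurrent = await Promise.all(
    [3, 4, 5].map((id) => createEnvironment().handler({ id }))
  );

  return {
    consecutive: [first.coldStart, second.coldStart],
    concurrent: concurrent.map((result) => result.coldStart),
  };
};

main().then((result) => console.log(result));
```

In this simplified model, only the second of the two consecutive calls is warm, while all three concurrent calls report a cold start.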

The solution in this case is to set up provisioned concurrency. Let's test it.

Set up the project

We will build a simple project consisting of 2 Lambda functions, one orchestrator and one worker that will be invoked concurrently from the orchestrator Lambda.

Let's create the infrastructure in Terraform:

- Create the IAM policies and roles that will be used by the Lambda functions

locals {
  policy_document_cloudwatch = {
    Version = "2012-10-17"
    Statement = [
      {
        Effect = "Allow"
        Action = [
          "logs:CreateLogGroup",
          "logs:CreateLogStream",
          "logs:PutLogEvents"
        ]
        Resource = "arn:aws:logs:*:*:*"
      }
    ]
  }

  policy_document_lambda_invoke = {
    Version = "2012-10-17"
    Statement = [
      {
        Effect   = "Allow"
        Action   = ["lambda:InvokeFunction"]
        Resource = ["arn:aws:lambda:${data.aws_region.current.name}:${data.aws_caller_identity.current.account_id}:function:*"]
      }
    ]
  }
}

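# Data sources referenced by policy_document_lambda_invoke.
# Skip these two declarations if your configuration already defines them elsewhere.
data "aws_region" "current" {}

data "aws_caller_identity" "current" {}

data "aws_iam_policy_document" "lambda_assume_role" {
  statement {
    actions = ["sts:AssumeRole"]

    principals {
      type        = "Service"
      identifiers = ["lambda.amazonaws.com"]
    }
  }
}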

resource "aws_iam_role" "worker_lambda_execution_role" {
  name               = "worker_lambda-exec-role"
  assume_role_policy = data.aws_iam_policy_document.lambda_assume_role.json
}

resource "aws_iam_role_policy_attachment" "lambda_policies_attachment_cloudwatch" {
  role       = aws_iam_role.worker_lambda_execution_role.name
  policy_arn = aws_iam_policy.lambda_policies_cloudwatch.arn
}

resource "aws_iam_policy" "lambda_policies_cloudwatch" {
  name        = "lambda_logging_cloudwatch_access"
  description = "lambda logs in CloudWatch"
  policy = jsonencode({
    Version   = "2012-10-17"
    Statement = local.policy_document_cloudwatch.Statement
  })
}

resource "aws_iam_role" "orchestrator_lambda_execution_role" {
  name               = "orchestrator-lambda-exec-role"
  assume_role_policy = data.aws_iam_policy_document.lambda_assume_role.json
}

resource "aws_iam_role_policy_attachment" "lambda_policies_attachment_cloudwatch_and_lambda_invocation" {
  role       = aws_iam_role.orchestrator_lambda_execution_role.name
  policy_arn = aws_iam_policy.lambda_policies_cloudwatch_and_lambda_invocation.arn
}

resource "aws_iam_policy" "lambda_policies_cloudwatch_and_lambda_invocation" {
  name        = "lambda_logging_cloudwatch_access_and_lambda_invocation"
  description = "lambda logs in CloudWatch and worker lambda invocation"
  policy = jsonencode({
    Version = "2012-10-17"
    Statement = concat(
      local.policy_document_cloudwatch.Statement,
      local.policy_document_lambda_invoke.Statement
    )
  })
}

- Create a lambda.tf file configuring the Lambda functions

resource "aws_lambda_function" "orchestrator_lambda" {
  function_name    = "orchestrator_lambda"
  handler          = "orchestrator_lambda.handler"
  runtime          = "nodejs18.x"
  filename         = "orchestrator_lambda_function.zip"
  source_code_hash = filebase64sha256("orchestrator_lambda_function.zip")
  role             = aws_iam_role.orchestrator_lambda_execution_role.arn

  depends_on = [
    aws_iam_role_policy_attachment.lambda_policies_attachment_cloudwatch_and_lambda_invocation
  ]

  environment {
    variables = {
      FUNCTION_NAME = aws_lambda_function.worker_lambda.function_name
    }
  }
}

resource "aws_lambda_function" "worker_lambda" {
  function_name    = "worker_lambda"
  handler          = "worker_lambda.handler"
  runtime          = "nodejs18.x"
  filename         = "worker_lambda_function.zip"
  source_code_hash = filebase64sha256("worker_lambda_function.zip")
  role             = aws_iam_role.worker_lambda_execution_role.arn

  depends_on = [
    aws_iam_role_policy_attachment.lambda_policies_attachment_cloudwatch
  ]
}

We have yet to define any concurrency. We will get to this later.

Now let's implement our Lambda functions:

- Create a new orchestrator_lambda.js file

import { InvokeCommand, LambdaClient, LogType } from "@aws-sdk/client-lambda";

const invokeLambda = async (payload) => {
  const client = new LambdaClient();
  const command = new InvokeCommand({
    FunctionName: process.env.FUNCTION_NAME,
    LogType: LogType.Tail,
    Payload: JSON.stringify(payload),
  });

  await client.send(command);
  console.log("Lambda function invoked!");
};

export const handler = async (event, context) => {
  const promises = [];

  // Start 10 invocations without awaiting each one, so they run concurrently.
  for (let i = 0; i < 10; i++) {
    const payload = { invocation: i + 1 };
    promises.push(invokeLambda(payload));
  }

  await Promise.all(promises);
};

- Create a new worker_lambda.js file

export const handler = async (event, context) => {
  console.log("here is the event received: ", event);
};
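The orchestrator fans out with `Promise.all`, which is fine for this demo but rejects as soon as any single invocation fails. As a possible variation (not what the article's code does), the sketch below uses `Promise.allSettled` to collect every outcome. `fanOut` and `fakeInvoke` are hypothetical names; `fakeInvoke` stands in for `invokeLambda` so the pattern can be tried locally without AWS credentials.

```javascript
// Sketch: fan out `count` invocations and report successes and failures.
// `invoke` is injected; in the orchestrator it would be invokeLambda(payload).
const fanOut = async (invoke, count) => {
  const payloads = Array.from({ length: count }, (_, i) => ({ invocation: i + 1 }));

  // allSettled waits for every promise instead of rejecting on the first failure.
  const results = await Promise.allSettled(payloads.map((payload) => invoke(payload)));

  const failed = results
    .map((result, i) => ({ result, payload: payloads[i] }))
    .filter(({ result }) => result.status === "rejected")
    .map(({ result, payload }) => ({ payload, reason: String(result.reason) }));

  return { succeeded: count - failed.length, failed };
};

// Hypothetical fake invoker that fails on the third payload only.
const fakeInvoke = async (payload) => {
  if (payload.invocation === 3) throw new Error("simulated failure");
  return { StatusCode: 200 };
};

fanOut(fakeInvoke, 10).then((summary) => console.log(summary));
```

Whether a failed worker invocation should fail the whole orchestrator run is a design choice; for a quick experiment like this one, either approach works.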

Test the concurrent Lambda execution before and after setting up the provisioned concurrency

Before Provisioned Concurrency

In the AWS Console, navigate to the "Orchestrator Lambda" and click the "Test" button. This will invoke the "Worker Lambda" 10 times.

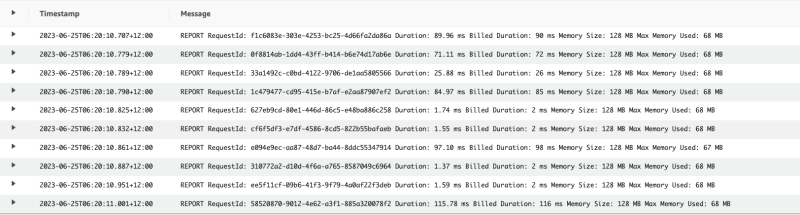

Have a look at the CloudWatch logs of the "Worker Lambda":

Notice that the "Worker Lambda" was invoked 10 times concurrently, each time starting and initialising a new execution environment. That "cold start" duration shows up as the "Init Duration" in the previous screenshot: roughly 160 ms is spent on "Init" in every invocation. What if we could save this time?
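If you would rather not read the "Init Duration" values by eye, a small helper can pull them out of the REPORT lines you copy from CloudWatch. This is only a sketch: `initDurationMs` and `summariseInit` are hypothetical helpers, not part of any AWS SDK, and the sample lines below use made-up request IDs and timings rather than the article's actual logs.

```javascript
// Sketch: extract "Init Duration" (in ms) from a Lambda REPORT log line.
// Returns null when the line has no Init Duration, i.e. a warm invocation.
const initDurationMs = (reportLine) => {
  const match = reportLine.match(/Init Duration: ([\d.]+) ms/);
  return match ? Number(match[1]) : null;
};

// Count cold starts and average their Init Duration across several lines.
const summariseInit = (reportLines) => {
  const durations = reportLines.map(initDurationMs).filter((d) => d !== null);
  const total = durations.reduce((sum, d) => sum + d, 0);
  return {
    coldStarts: durations.length,
    averageInitMs: durations.length ? total / durations.length : 0,
  };
};

// Illustrative input only: the IDs and numbers are invented for this example.
const sampleLines = [
  "REPORT RequestId: 00000000-0000-0000-0000-000000000001 Duration: 2.10 ms Billed Duration: 3 ms Memory Size: 128 MB Max Memory Used: 65 MB Init Duration: 150.50 ms",
  "REPORT RequestId: 00000000-0000-0000-0000-000000000002 Duration: 1.90 ms Billed Duration: 2 ms Memory Size: 128 MB Max Memory Used: 65 MB Init Duration: 169.50 ms",
  "REPORT RequestId: 00000000-0000-0000-0000-000000000003 Duration: 1.50 ms Billed Duration: 2 ms Memory Size: 128 MB Max Memory Used: 65 MB",
];

console.log(summariseInit(sampleLines));
```

Running the same helper over the logs before and after enabling provisioned concurrency gives a quick way to compare the number of cold starts.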

After Provisioned Concurrency

Let's set up provisioned concurrency for the "Worker Lambda". This pre-initialises a specified number of execution environments for the function so that they are ready to respond immediately, which takes the "Init" phase out of the request path. Provisioned concurrency can only be configured on a published Lambda version or on a Lambda alias (a pointer to a specific version), not on $LATEST, so we'll set these up as well.

- In your lambda.tf file, under the worker_lambda resource, add the publish property, which will create a Lambda version:

publish = true

- Add the Lambda alias, which will point to the created version of the Lambda

resource "aws_lambda_alias" "worker_lambda_alias" {
  name             = "worker_lambda_alias"
  function_name    = aws_lambda_function.worker_lambda.function_name
  function_version = aws_lambda_function.worker_lambda.version // This is the latest published version
}

- Add the provisioned concurrency configuration

resource "aws_lambda_provisioned_concurrency_config" "worker_lambda_provisioned_concurrency" {
  function_name                     = aws_lambda_alias.worker_lambda_alias.function_name
  provisioned_concurrent_executions = 10
  qualifier                         = aws_lambda_alias.worker_lambda_alias.name
}

- On the "Orchestrator Lambda" config, pass the worker function name and its alias as environment variables. The environment block would look as follows:

environment {
  variables = {
    WORKER_FUNCTION_NAME = aws_lambda_function.worker_lambda.function_name
    WORKER_ALIAS         = aws_lambda_alias.worker_lambda_alias.name
  }
}

- Update the "Orchestrator Lambda" code in orchestrator_lambda.js to invoke the alias:

const command = new InvokeCommand({
  FunctionName: `${process.env.WORKER_FUNCTION_NAME}:${process.env.WORKER_ALIAS}`,
  LogType: LogType.Tail,
  Payload: JSON.stringify(payload),
});
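The code above targets the alias by appending it to the function name. The Invoke API also accepts a separate `Qualifier` field for a version or alias, so the same target can be expressed either way. The sketch below only builds the input object and does not call AWS; `buildInvokeInput` is a hypothetical helper introduced for illustration.

```javascript
// Sketch: two ways to point an Invoke request at an alias.
// FunctionName, Qualifier and Payload are the Invoke API's field names;
// buildInvokeInput itself is a made-up helper.
const buildInvokeInput = ({ functionName, alias, payload, useQualifier = false }) => {
  const base = { Payload: JSON.stringify(payload) };
  return useQualifier
    ? { ...base, FunctionName: functionName, Qualifier: alias }
    : { ...base, FunctionName: `${functionName}:${alias}` };
};

// Usage with the names from this article's Terraform configuration.
const target = { functionName: "worker_lambda", alias: "worker_lambda_alias" };

console.log(buildInvokeInput({ ...target, payload: { invocation: 1 } }));
console.log(buildInvokeInput({ ...target, payload: { invocation: 1 }, useQualifier: true }));
```

Either object could then be passed to `new InvokeCommand(...)`. Invoking the bare function name without an alias or version would hit the unpublished $LATEST code, which has no provisioned concurrency attached.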

After you deploy these changes, navigate to the AWS Console and click the "Test" button in the "Orchestrator" Lambda. This will invoke the "Worker Lambda" 10 times.

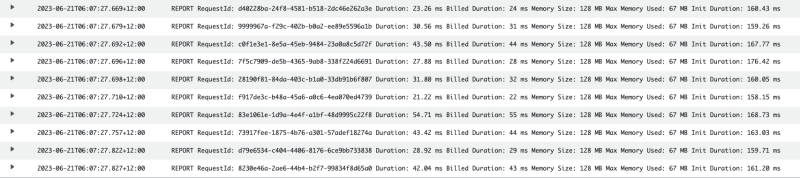

Have a look at the CloudWatch logs of the "Worker Lambda":

Notice that we no longer have an "Init" duration, as we have 10 provisioned execution environments for the Worker Lambda. If we submitted 11 concurrent requests, one of the worker invocations would be served outside the provisioned pool and would show an "Init" duration.
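The arithmetic behind that statement can be written down as a tiny helper. This is a deliberately simplified model: it assumes that every provisioned environment is ready and idle when the burst arrives, and that any overflow invocation gets a brand-new on-demand environment. `splitInvocations` is a hypothetical name.

```javascript
// Sketch: how a burst of concurrent requests is split between
// provisioned environments and on-demand (cold) environments.
const splitInvocations = (concurrentRequests, provisioned) => {
  const servedByProvisioned = Math.min(concurrentRequests, provisioned);
  return {
    provisioned: servedByProvisioned,
    onDemand: concurrentRequests - servedByProvisioned,
  };
};

console.log(splitInvocations(10, 10)); // all requests fit in the provisioned pool
console.log(splitInvocations(11, 10)); // one request spills over to on-demand
```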

It's worth noting that, even with provisioned concurrency, you might still see some invocations with an "Init" duration if you invoke the function immediately after deployment, before the provisioned environments are ready.

Provisioned concurrency comes at a cost, as the provisioned environments are kept initialised all the time, whether or not they are serving requests. One thing to think about is how you would balance the cost and performance benefits of using provisioned concurrency. I'd love to hear your thoughts.
