In traditional database management systems, such as MySQL, there is a separation between the "INSERT" and the "UPDATE" operations. The "INSERT" statement is used to add records to a table, and the "UPDATE" statement is used to update existing records. If, for example, you try to insert a new record with an existing primary key, you would get an error.

On the other hand, DynamoDB uses a single operation PutItem to both insert or update existing records. When the PutItem operation is used with an existing primary key on the DynamoDB table, it will overwrite the data on that record.

If you're not familiar with it, DynamoDB is a NoSQL database service provided by AWS, known for its seamless scalability, high performance, and simplified data model.

Is PutItem a risky operation?

The PutItem behaviour, where it overwrites existing data, might be considered risky in some instances if the developers aren't careful about how to update existing records. That might be simply a bug or an oversight of a certain scenario, but could as well be a result of an incorrect DynamoDB table design to start with.

Of course, DynamoDB offers conditional expression to help mitigate the data overwrite risk. Without conditional expression, we'd need to add the logic in our code to check whether an item exists or not, and that's costly. This also comes with the risk of race conditions if other requests are trying to update the same item.

Example where data is overwritten

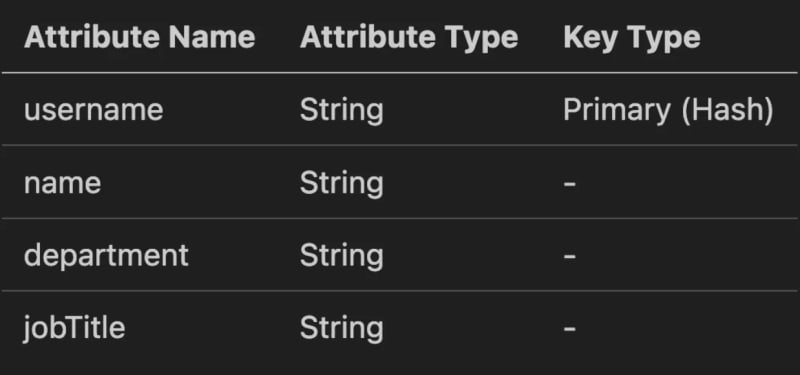

Let's see this as an example. Assume we have an "Employees" table designed as follows:

When creating a new employee, we'd want to ensure we don't overwrite an existing user. Below we write the code using Terraform and JavaScript.

For simplicity, I'll post the Terraform code in a single file main.tf:

terraform {

required_providers {

aws = {

source = "hashicorp/aws"

version = "~> 5.0"

}

}

}

variable "aws_region" {

type = string

default = "ap-southeast-2"

}

provider "aws" {

region = var.aws_region

}

resource "aws_dynamodb_table" "employees_table" {

name = "employees"

billing_mode = "PAY_PER_REQUEST"

hash_key = "username"

attribute {

name = "username"

type = "S"

}

}

# IAM Role

resource "aws_iam_role" "add_employee_lambda_role" {

name = "add_employee_lambda_role"

assume_role_policy = <<EOF

{

"Version": "2012-10-17",

"Statement": [

{

"Action": "sts:AssumeRole",

"Principal": {

"Service": "lambda.amazonaws.com"

},

"Effect": "Allow",

"Sid": ""

}

]

}

EOF

}

# IAM Policy that allows interaction with DynamoDB

resource "aws_iam_policy" "add_employee_lambda_policy" {

name = "add_employee_lambda_policy"

description = "Allow lambda to access dynamodb"

policy = <<EOF

{

"Version": "2012-10-17",

"Statement": [

{

"Action": [

"dynamodb:PutItem",

"dynamodb:DeleteItem",

"dynamodb:GetItem",

"dynamodb:Scan",

"dynamodb:Query",

"dynamodb:UpdateItem"

],

"Effect": "Allow",

"Resource": "*"

}

]

}

EOF

}

# Attach IAM Policy to IAM Role

resource "aws_iam_role_policy_attachment" "add_employee_lambda_role_policy_attachment" {

role = aws_iam_role.add_employee_lambda_role.name

policy_arn = aws_iam_policy.add_employee_lambda_policy.arn

}

# data archive for lambda function

data "archive_file" "add_employee_lambda_zip" {

type = "zip"

source_dir = "${path.module}/../src"

output_path = "${path.module}/add_employee.zip"

}

# Add employee lambda function

resource "aws_lambda_function" "add_employee_lambda" {

filename = data.archive_file.add_employee_lambda_zip.output_path

function_name = "add_employee_lambda"

role = aws_iam_role.add_employee_lambda_role.arn

handler = "add_employee.handler"

source_code_hash = data.archive_file.add_employee_lambda_zip.output_base64sha256

runtime = "nodejs18.x"

publish = true

environment {

variables = {

DYNAMODB_TABLE = aws_dynamodb_table.employees_table.name

}

}

}

Next, we'll write a simple JS Lambda function to put items into DynamoDB:

import {

DynamoDBClient,

PutItemCommand,

} from '@aws-sdk/client-dynamodb';

import { marshall } from '@aws-sdk/util-dynamodb';

const tableName = process.env.DYNAMODB_TABLE;

const client = new DynamoDBClient({});

export const handler = async (event) => {

const { username, name, department, jobTitle } = event;

const params = {

TableName: tableName,

Item: marshall({

username,

name,

department,

jobTitle,

}),

};

const command = new PutItemCommand(params);

const response = await client.send(command);

console.log(response);

return response;

};

Deploy this infrastructure (terraform apply), then navigate to the Lambda function in AWS Console and test the lambda with the following event object:

{

"username": "ali",

"name": "Ali Haydar",

"department": "Engineering",

"jobTitle": "Platform Lead"

}

Verify the item got added to DynamoDB.

Assume a new employee with the same first name joined the company, and the admin just went ahead with the following employee data (let's use the Lambda test functionality to add the new employee - of course, in a real-life scenario there would be a UI for that):

{

"username": "ali",

"name": "Ali Wong",

"department": "Acting",

"jobTitle": "Stand-up comedian and acress"

}

A few days later, the first employee "ali" got promoted to Chief Finance Officer, so it's time to update this info in the system. When searching for "ali", only "Ali Wong" was found. Where's our intended user?

That was an example where an oversight and a poor design of the table caused a bug leading to data loss.

example with conditional expression

To protect against this kind of mistake, we modify our code slightly, adding a conditional expression to the params:

ConditionExpression: 'attribute_not_exists(username)',

Deploy the change.

Try to update the existing "Ali Wong" record with the following data:

{

"username": "ali",

"name": "Ali Haydar",

"department": "Engineering",

"jobTitle": "Platform Lead"

}

Notice the error:

"errorType": "ConditionalCheckFailedException",

"errorMessage": "The conditional request failed",

The conditional expression checks for the non-existence of the username before allowing the update.

The conditional expressions can be useful in multiple other use cases. Have a look at the docs.

I've seen patterns in relational databases where an operation behaves similarly to PutItem in DynamoDB. Mostly, these were code-built functions that handle the logic of "Insert" or "Update". In some cases, we referred to them as "upsert" operations. The concept is often implemented using statements like INSERT ON DUPLICATE KEY UPDATE in MySQL.

In the example above, even though there is no direct impact of the table design; using a username as a primary key makes it more prone to data overwrite, especially if that's a manually entered username. In addition, it does limit the access patterns to the table, but that's a discussion for another day. One way to improve this might be to use a composite key of username and department for example. What do you think?

Top comments (0)