If you have ever worked on an app that uses bleeding-edge APIs, you have probably had to load polyfills, ponyfills, and the like. Many content delivery network (CDN) providers, such as cdnjs.com, unpkg.com, and jsdelivr.com, can be used to (lazy) load polyfills based on feature detection. polyfill.io works differently: it sniffs the user agent *(UA)* and works out the minimal amount of polyfill code required for that specific browser version. To me, that is quite novel: rather than blindly loading all the polyfill code, it loads only the necessary bits, which improves the performance of the app.

When the Service Worker API was introduced, it helped developers optimize the performance of their websites. Taking inspiration from it, Cloudflare introduced Workers, which let you deploy serverless apps on its global CDN. This opened up a lot of new possibilities, and the best thing about it is that it has the same API and mental model as service workers. I always wanted to build something moderately complex and useful with Cloudflare Workers, so I thought of replicating the functionality of polyfill.io using workers.

How does Polyfill.io work?

To replicate the application, it is important to first know how it works. polyfill.io has a Node.js server built on top of Express.js, and its only core functionality is to produce the polyfill code for a given set of query params. Yes, the query params act as the API for loading the polyfill code. To know more about it, visit this link. The polyfill-library is used to generate the code and is actively maintained by the Financial Times.

The major part of their setup is the CDN. They use Fastly as their CDN provider for caching the content and delivering it faster to end users. To achieve a high cache hit ratio, they have fine-tuned their CDN configuration with a bunch of VCL scripts. VCL stands for Varnish Configuration Language; Varnish is a caching reverse proxy used by many famous sites like Facebook, Spotify, Twitter, etc. VCL is a domain-specific language for Varnish, and it is not as readable as JavaScript. Here is the list of VCL scripts responsible for achieving a high cache hit ratio. I advise you, readers, to take a glance at the scripts.

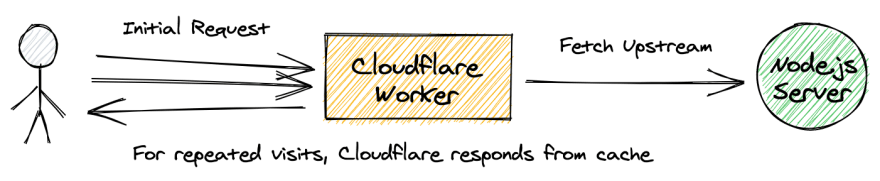

So, what do you think? Isn't it quite hard to follow compared to other languages? This got me thinking about what it would take to achieve the functionality of these VCL files in a Cloudflare worker script written in JavaScript, while also taking advantage of the ecosystem (npm, TypeScript, etc.). I find the JavaScript code much easier to understand and maintain, and it requires less code: the VCL files have almost 50% more code than the Cloudflare worker script. The setup can be described as,

Original Polyfill.io = Node.js Server + Fastly CDN

Replicating Polyfill.io using Cloudflare Workers

To start, we will use the same methodology of having a Node.js server for generating the polyfill code, but replace the Fastly CDN with a Cloudflare worker script. For the server, I cloned the code repo and hosted it on Heroku. So in our case, it would be like,

Replicated Polyfill.io = Node.js Server + Cloudflare Worker

So, let's dip our toes and get started with the Cloudflare script. For this project, we will be using TypeScript for type safety and Webpack for bundling the files.

Let's implement the fetch listener for handling the request and it is plain and simple. It just responds with the content given by the handlePolyfill function.

addEventListener('fetch', (event: FetchEvent) => {

// Omitted code for brevity

event.respondWith(handlePolyfill(event.request, event))

})

Now for the handlePolyfill function,

async function handlePolyfill(

request: Request,

event: FetchEvent,

): Promise<Response> {

let url = new URL(request.url)

// Store the query params as key-value pairs in an object

let queryParams = getQueryParamsHash(url);

// TODO : Handle the logic here

}

So before implementing the handlePolyfill function, it is really important to know a few things about achieving a high cache hit ratio. As I said, the query params act as an API, which leads to quite a lot of combinations, so using the URL as the cache key will hurt the cache hit ratio. For example, here are 5 different URLs,

1. https://polyfill.asvny.workers.dev/polyfill.min.js?features=IntersectionObserver,fetch,Intl&callback=main

2. https://polyfill.asvny.workers.dev/polyfill.min.js?features=fetch,IntersectionObserver,Intl&callback=main

3. https://polyfill.asvny.workers.dev/polyfill.min.js?features=Intl,fetch,IntersectionObserver&callback=main

4. https://polyfill.asvny.workers.dev/polyfill.min.js?callback=main&features=Intl,fetch,IntersectionObserver

5. https://polyfill.asvny.workers.dev/polyfill.min.js?callback=main&features=Intl,fetch,IntersectionObserver&lang=en

Though these are five different URLs from the browser's perspective, they all load the same polyfill code. In the first three, the features are the same but in a different order, and in the 4th, the positions of the features and callback query params are swapped. The 5th one is the same as the other four because lang is NOT a part of the API (query params); it is just another query param of no use. So the above list of URLs can be represented as JSON, with the features array values sorted.

// All 5 URLs result in the same JSON object (features in JavaScript's default sort order)

{ "features": [ "IntersectionObserver", "Intl", "fetch" ], "callback": "main" }

{ "features": [ "IntersectionObserver", "Intl", "fetch" ], "callback": "main" }

{ "features": [ "IntersectionObserver", "Intl", "fetch" ], "callback": "main" }

{ "features": [ "IntersectionObserver", "Intl", "fetch" ], "callback": "main" }

{ "features": [ "IntersectionObserver", "Intl", "fetch" ], "callback": "main" }
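As a rough sketch of that normalization step (not the code used in this project), the idea can be written as a small pure function. The name `canonicalizeQuery` and the `KNOWN_PARAMS` allow-list are assumptions made for illustration; the sample URLs are shaped like the ones listed above.

```typescript
// Hypothetical allow-list: the query params treated as part of the API here.
const KNOWN_PARAMS = ["features", "callback", "excludes", "flags", "unknown"] as const;

type CanonicalQuery = Record<string, string | string[]>;

// Reduce a request URL to a canonical object: unknown params are dropped,
// keys are emitted in a fixed order, and comma-separated lists are sorted.
function canonicalizeQuery(rawUrl: string): CanonicalQuery {
  const params = new URL(rawUrl).searchParams;
  const canonical: CanonicalQuery = {};
  for (const key of KNOWN_PARAMS) {
    const value = params.get(key);
    if (value === null) continue;
    canonical[key] =
      key === "features" || key === "excludes" || key === "flags"
        ? value.split(",").filter((item) => item.length > 0).sort()
        : value;
  }
  return canonical;
}

const urls = [
  "https://polyfill.asvny.workers.dev/polyfill.min.js?features=IntersectionObserver,fetch,Intl&callback=main",
  "https://polyfill.asvny.workers.dev/polyfill.min.js?features=fetch,IntersectionObserver,Intl&callback=main",
  "https://polyfill.asvny.workers.dev/polyfill.min.js?callback=main&features=Intl,fetch,IntersectionObserver&lang=en",
];

// Every URL serializes to the same string, so they can share one cache entry.
const serialized = urls.map((u) => JSON.stringify(canonicalizeQuery(u)));
console.log(new Set(serialized).size); // 1
```

Iterating over a fixed allow-list, rather than over the incoming params, is what makes both the param order and stray params like lang irrelevant.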

So now we know how to represent URLs as a meaningful JavaScript object (we will discuss how we use it later on). Now coming back to the function implementation,

async function handlePolyfill(

request: Request,

event: FetchEvent

): Promise<Response> {

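let url = new URL(request.url);

// @ts-ignore

let cache = caches.default;

// Construct the URL that is fetched from the upstream origin

let fetchURL = new URL(request.url);

fetchURL.host = "polyfill-io.herokuapp.com";

// url.pathname already starts with "/", so avoid a double slash

fetchURL.pathname = "/v3" + url.pathname;

// Get query params from the URL object and store as key-value object

let queryParams = getQueryParamsHash(url);

let { features = "default", callback } = queryParams;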

let uaString = request.headers.get("User-Agent") || "";

// Sort the keys and also the values and have it in same shape

let json: PolyFillOptions = {

excludes: queryParams.excludes ? queryParams.excludes.split(",") : [],

features: featuresfromQueryParam(features, queryParams.flags),

callback: /^[\w\.]+$/.test(callback || "") ? callback : false,

unknown: queryParams.unknown || "polyfill",

minify: url.pathname.endsWith(".min.js"),

compression: true,

uaString

};

// Get SHA-1 of json object

let KEY = await generateCacheKey(json);

// Since cache API expects first argument to be either Request or URL string,

// so prepend a dummy host

let cacheKey = HOST + KEY;

// Get the cached response for given key

let response = await cache.match(cacheKey);

if (!response) {

/**

* If there is no cached response, fetch the polyfill string

* from the upstream Node.js Server and tell cloudflare to cache

* the response with hash as the cache key

*/

response = await fetch(fetchURL, {

headers: {

"User-Agent": uaString

},

cf: { cacheKey, cacheEverything: true }

});

// Store the response in the cache without blocking execution

event.waitUntil(cache.put(cacheKey, response.clone()));

}

/**

* Check if cached response's ETag value and current request's

* If-None-Match value are equal or not, if true respond

* with a 304 Not Modified response

*/

let ifNoneMatch = request.headers.get('if-none-match')

let cachedEtag = response.headers.get('etag')

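// Guard against both headers being absent (null == null would wrongly yield a 304)

if (ifNoneMatch && ifNoneMatch === cachedEtag) {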

return new Response(null, { status: 304 });

}

// Finally, send the response

return response;

}
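The conditional-request check at the end of the handler can also be pulled out into a small helper. The sketch below is one possible shape, not the code from this project: `isNotModified` and `stripWeakPrefix` are hypothetical names. It uses the weak comparison that HTTP specifies for If-None-Match, and it assumes ETag values contain no commas, which keeps the list parsing simple.

```typescript
// Strip the weak-validator prefix so that W/"abc" and "abc" compare equal.
function stripWeakPrefix(tag: string): string {
  return tag.startsWith("W/") ? tag.slice(2) : tag;
}

// Decide whether a 304 Not Modified is appropriate for a GET request.
function isNotModified(ifNoneMatch: string | null, etag: string | null): boolean {
  // Without both headers there is nothing to compare, so send the full body.
  if (!ifNoneMatch || !etag) return false;
  // "*" matches any current representation.
  if (ifNoneMatch.trim() === "*") return true;
  const current = stripWeakPrefix(etag.trim());
  // If-None-Match may carry a comma-separated list of entity tags.
  return ifNoneMatch
    .split(",")
    .map((candidate) => stripWeakPrefix(candidate.trim()))
    .some((candidate) => candidate === current);
}

console.log(isNotModified(null, null)); // false
console.log(isNotModified('W/"4b8f"', 'W/"4b8f"')); // true
console.log(isNotModified('"aaaa", W/"4b8f"', '"4b8f"')); // true
```

The first case is the important one: a request without If-None-Match must never be answered with a 304, even when the upstream response has no ETag either.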

I hope the above code is clear from the comments, but I want to explain the cacheKey part. Remember how we represented a URL as JSON? We will now use that along with the User-Agent string (because the UA string determines the code generated for that specific browser version). So, for the above URLs, the JSON would be something like,

let json = {

"excludes": [],

"features": ['Intl', 'fetch', 'IntersectionObserver'],

"callback": 'main',

"unknown": 'polyfill',

"uaString": { browser: 'chrome', major: '76', minor: '0' },

}

Hashing this stringified JSON gives the same value for all 5 URLs. When the first request comes in, the cache is empty, so the worker calls the upstream Node.js server and caches the response under the generated hash. When the second request kicks in, its generated hash matches that of the first URL, so we can return the cached response from the worker. This minimizes upstream network requests and achieves a high cache hit ratio. For all 5 URLs, the generated hash is shown below,

4b8fe085e7c3d935b7b85b619bbb1e5acf3de60d
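The hashing helper itself is not shown in this post, so here is a minimal sketch of what it could look like, assuming the Web Crypto API (`crypto.subtle`) is available as a global, as it is in workers. The names `stableStringify` and `sha1Hex` are hypothetical, and this sketch is not expected to reproduce the exact hash value above, since that depends on the precise serialization used.

```typescript
// Serialize with object keys sorted so that property order cannot change the hash.
function stableStringify(value: unknown): string {
  if (Array.isArray(value)) {
    return "[" + value.map(stableStringify).join(",") + "]";
  }
  if (value !== null && typeof value === "object") {
    const record = value as Record<string, unknown>;
    const body = Object.keys(record)
      .sort()
      .map((key) => JSON.stringify(key) + ":" + stableStringify(record[key]));
    return "{" + body.join(",") + "}";
  }
  // JSON.stringify returns undefined for undefined; fall back to "null".
  return JSON.stringify(value) ?? "null";
}

// SHA-1 digest as a lowercase hex string, using the Web Crypto API.
async function sha1Hex(input: string): Promise<string> {
  const bytes = new TextEncoder().encode(input);
  const digest = await crypto.subtle.digest("SHA-1", bytes);
  return Array.from(new Uint8Array(digest))
    .map((byte) => byte.toString(16).padStart(2, "0"))
    .join("");
}

// Stand-in for a `hash(seed)` helper: stable serialization, then SHA-1.
async function hash(seed: unknown): Promise<string> {
  return sha1Hex(stableStringify(seed));
}

// A SHA-1 digest is 20 bytes, so the hex string is always 40 characters long.
hash({ callback: "main", features: ["Intl", "fetch"] }).then((h) => console.log(h.length)); // 40
```

SHA-1 is fine here because the hash is only a cache key and ETag value, not a security boundary.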

Finally, we were able to achieve the same functionality as polyfill.io with minimal code: a Cloudflare worker script and a simple Node.js server. But it got me thinking about whether the Node.js server could be removed, depending solely on the Cloudflare script for generating the polyfill code. So it would look like,

Polyfill.io = Node.js server + Cloudflare worker

Polyfill.io = Cloudflare worker

I knew it was possible and I actually started this project to achieve this as my primary goal.

Why this madness?

Think of it like,

- No need to maintain a Node.js server.

- No server bills, only pay for Cloudflare Workers.

- Minimal lines of code.

So before discussing my successful attempt, let's discuss my failed attempts and how I overcame them.

Failed Attempt 1

My first attempt was to bundle the Cloudflare worker script and the polyfill code required for every feature (fetch, Array.of, Web Animations, etc.) into one giant JavaScript file. So, when a request kicks in, I can generate the code in the worker itself and respond immediately with it.

Something like,

// polyfillObject is a giant JSON of around 20mb+ in size

let polyfillJSON = JSON.parse(polyfillObject)

// Here feature name can be fetch, atob, Intl etc.,

let polyfillString = polyfillJSON[featureName]

response = new Response(polyfillString)

But this didn't work because a Cloudflare worker script can be at most 1mb (after compression), and the file size in our case was 25mb. It was a real bummer, and I had to think of some other method.

Failed Attempt 2

For this attempt, I compiled the polyfill code required for every feature in a single giant JSON file as key-value pairs. It would look something like,

{

"fetch": "....", // fetch polyfill code

"Object.is" : "...", // Object.is polyfill code

"Array.of" : "..." // Array.of polyfill code

}

The file was roughly 20mb in size, and I hosted it on my domain. So, the idea was to,

- On the first visit - cache the polyfill.json by fetching from the URL and then use that as a data source for generating the polyfill code.

- On repeated visit - Since the polyfill.json is already in the cache, we can directly work on generating the polyfill code.

It would look something like this, say for getting the polyfill code for fetch,

let cachedPolyfill = await cache.match("https://DOMAIN/path-to-the-gaint-hosted-json-file")

let polyfillString = '';

let polyfillJSON = '';

if(!cachedPolyfill) {

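let polyFillResponse = await fetch("https://DOMAIN/path-to-the-gaint-hosted-json-file")

// Cache a copy so that repeated visits can skip the network round trip

event.waitUntil(cache.put("https://DOMAIN/path-to-the-gaint-hosted-json-file", polyFillResponse.clone()))

// polyfillJSON is again a giant JSON of around 20mb+ in size

polyfillJSON = await polyFillResponse.json()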

} else {

polyfillJSON = await cachedPolyfill.json()

}

// Here feature name can be fetch, atob, Intl etc.,

polyfillString = polyfillJSON[featureName]

response = new Response(polyfillString)

It kinda works, but the performance is really bad. For example, the fetch module has its own polyfill code and also depends on the Array.prototype.forEach, Object.getOwnPropertyNames, Promise, XMLHttpRequest, and Symbol.iterator files.

For each dependency, we have to go back and forth to get the polyfill code from the JSON. As the number of dependencies increases, the cost of JSON serialization/deserialization becomes very high, and it takes at least 5-10s to generate the response. So, it was strictly a deal-breaker and I had to move on.
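To make the back-and-forth concrete, here is a small sketch of resolving a feature together with its transitive dependencies. The `DEPENDENCIES` table is hypothetical: the fetch entry mirrors the list above, while the Promise entry is invented purely to show a nested dependency. `resolveDependencies` is an illustrative name, not a polyfill-library function.

```typescript
// Hypothetical dependency table; the real data lives in polyfill-library's metadata.
const DEPENDENCIES: Record<string, string[]> = {
  fetch: [
    "Array.prototype.forEach",
    "Object.getOwnPropertyNames",
    "Promise",
    "XMLHttpRequest",
    "Symbol.iterator",
  ],
  // Invented for illustration only.
  Promise: ["Symbol.iterator"],
};

// Return the feature plus all transitive dependencies, dependencies first.
function resolveDependencies(feature: string, table: Record<string, string[]>): string[] {
  const ordered: string[] = [];
  const seen = new Set<string>();
  const visit = (name: string): void => {
    if (seen.has(name)) return; // already handled, also guards against cycles
    seen.add(name);
    for (const dependency of table[name] ?? []) visit(dependency);
    ordered.push(name); // emit a feature only after everything it needs
  };
  visit(feature);
  return ordered;
}

console.log(resolveDependencies("fetch", DEPENDENCIES));
```

Each name in the resulting list is one more lookup against the data source, which is why the choice of data source matters so much for response time.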

Note: I tried these two attempts because I wanted to accomplish this on the free-tier plan.

Successful Attempt

For this attempt, I planned to use Cloudflare Workers KV for storing the polyfill code and use that as a data source. You can think of Cloudflare KV as a key-value store along the lines of Redis, though it is a globally distributed, eventually consistent store rather than an in-memory database. It is optimized for read-heavy workloads and is a perfect candidate for our needs. I created two KV namespaces, TOML and POLYFILL. TOML stores the dependencies, browser support, and polyfill code path of each feature, and POLYFILL stores the polyfill source for each feature. I used their cloud APIs for populating the data and here is the script link for reference.
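As a sketch of how the two namespaces might be read together (the actual lookups live in the forked library, which is not shown here), consider the following. It relies only on the fact that a KV namespace's `get(key)` resolves to the stored string or to null for a missing key. `KVLike`, `loadFeature`, and `memoryKV` are hypothetical names, the metadata is returned unparsed, and the in-memory stand-in exists only so the sketch can run outside a worker.

```typescript
// Minimal slice of the KV namespace API used here: get(key) resolves to the
// stored string, or to null when the key is missing.
interface KVLike {
  get(key: string): Promise<string | null>;
}

// Fetch a feature's metadata and source in parallel from the two namespaces.
async function loadFeature(
  name: string,
  meta: KVLike,
  sources: KVLike,
): Promise<{ meta: string; source: string } | null> {
  const [rawMeta, source] = await Promise.all([meta.get(name), sources.get(name)]);
  if (rawMeta === null || source === null) return null;
  // The metadata is left as raw text; parsing it is out of scope for this sketch.
  return { meta: rawMeta, source };
}

// In-memory stand-in for a KV namespace (an assumption for local runs only).
function memoryKV(entries: Record<string, string>): KVLike {
  return { get: async (key) => (key in entries ? entries[key] : null) };
}

const TOML = memoryKV({ fetch: 'dependencies = ["Promise"]' });
const POLYFILL = memoryKV({ fetch: "/* fetch polyfill source */" });

loadFeature("fetch", TOML, POLYFILL).then((feature) => console.log(feature?.source));
```

Issuing the two reads with Promise.all keeps the per-feature latency close to that of a single KV read.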

The polyfill-library is used for generating the code bundle. The library uses streams and other Node.js APIs for producing the code; it is optimized for the Node.js environment and doesn't work in other environments like the browser or web/service workers. So to make it work, I forked the library and made the changes needed for those environments. Some of the notable changes are replacing file-based access with Cloudflare KV storage and mocking certain dependencies to adapt to the worker environment. To see the changes, you can click this link (https://polyfill-fork-repo-url). So here is the code for replicating polyfill.io using workers.

import {} from '@cloudflare/workers-types'

import * as pkg from '../package.json'

import * as polyfillLibrary from 'polyfill-library'

import * as UAParser from 'ua-parser-js'

type Flag = Record<string, Array<string>>

interface PolyFillOptions {

excludes: string[]

features: Record<string, Flag>

callback: string | boolean

unknown: string

uaString: string

minify: boolean

}

/**

* The cache key should either be a request object or URL string,

* so we prepend this HOST with the hash of PolyFillOptions object

*

* Eg: https://polyfill-clouldfare-workers.io/85882a4fec517308a70185bd12766577bef3e42b

*/

const HOST = 'https://polyfill-clouldfare-workers.io/'

addEventListener('fetch', (event: FetchEvent) => {

event.respondWith(handlePolyfill(event.request, event))

})

async function handlePolyfill(

request: Request,

event: FetchEvent,

): Promise<Response> {

let url = new URL(request.url)

// @ts-ignore

let cache = caches.default

// Get query params from the URL object and store as key-value object

let queryParams = getQueryParamsHash(url)

let {

excludes = '',

features = 'default',

unknown = 'polyfill',

callback,

} = queryParams

// Serve the minified bundle when the path ends with .min.js

const minify = url.pathname.endsWith('.min.js')

const uaString = request.headers.get('User-Agent') || ''

// Sort the keys and also the values and have it in same shape

let json: PolyFillOptions = {

excludes: excludes

? excludes.split(',').sort((a, b) => a.localeCompare(b))

: [],

features: featuresfromQueryParam(features, queryParams.flags),

callback: /^[\w\.]+$/.test(callback || '') ? callback : false,

unknown,

uaString,

minify,

}

// Default headers for serving the polyfill js file

const headers = {

'cache-control':

'public, s-maxage=31536000, max-age=604800, stale-while-revalidate=604800, stale-if-error=604800', // shared caches: one year; browsers: one week

'content-type': 'application/javascript',

}

// Get SHA-1 of json object

let KEY = await generateCacheKey(json)

// Since cache API expects first argument to be either Request or URL string,

// so prepend a dummy host

let cacheKey = HOST + KEY

// Get the cached response for given key

let response = await cache.match(cacheKey)

try {

if (!response) {

/**

* If there is no cached response, generate the polyfill string

* for the input object; the json object params are described in the

* polyfill-library README file.

*/

let polyfillString = await getPolyfillBundle(json)

/**

* Construct a response object with default headers and append a

* ETag header, so that for repeated requests, it can be checked

* with If-None-Match header to respond immediately with a 304

* Not Modified response

*/

response = new Response(polyfillString, {

headers: {

...headers,

ETag: `W/"${KEY}"`,

},

})

// Store the response in the cache without blocking execution

event.waitUntil(cache.put(cacheKey, response.clone()))

}

/**

* Check if cached response's ETag value and current request's

* If-None-Match value are equal or not, if true respond

* with a 304 Not Modified response

*/

let ifNoneMatch = request.headers.get('if-none-match')

let cachedEtag = response.headers.get('etag')

if (ifNoneMatch && ifNoneMatch === cachedEtag) {

return new Response(null, { status: 304 })

}

// Finally, send the response

return response

} catch (err) {

// If in case of error, respond the error message with error status code

const stack = JSON.stringify(err.stack) || err

return new Response(stack, { status: 500 })

}

}

// Sort features along with the flags (always, gated) as a JavaScript object

function featuresfromQueryParam(

features: string,

flags: string,

): Record<string, Flag> {

let flagsArray = flags ? flags.split(',') : []

let featuresArray = features

.split(',')

.filter(x => x.length)

.map(x => x.replace(/[\*\/]/g, ''))

return featuresArray.sort().reduce((obj, feature) => {

const [name, ...featureSpecificFlags] = feature.split('|')

obj[name] = {

flags: uniqueArray([...featureSpecificFlags, ...flagsArray]),

}

return obj

}, {} as Record<string, Flag>)

}

// Generate the polyfill source

async function getPolyfillBundle(json: object): Promise<string> {

return polyfillLibrary.getPolyfillString(json)

}

// Make key-value pairs object from the URL object

function getQueryParamsHash(url: URL) {

let queryParams: Record<string, string> = {}

// @ts-ignore

for (let [key, value] of url.searchParams.entries()) {

queryParams[key] = value

}

return queryParams

}

// Generate hash string which is used to store in cache and also as ETag header value

async function generateCacheKey(opts: PolyFillOptions): Promise<string> {

let seed = {

excludes: opts.excludes,

features: opts.features,

callback: opts.callback,

unknown: opts.unknown,

uaString: normalizeUA(opts.uaString),

}

let hashString = await hash(seed)

return hashString

}

// Normalize the user agent string, we consider only the browser family, major and minor version

function normalizeUA(uaString: string): string {

const ua = new UAParser(uaString).getResult()

const family = ua.browser.name || 'Unknown'

const [major = 0, minor = 0] = ua.browser.version

? ua.browser.version.split('.')

: [0, 0]

return `${family}#${major}#${minor}`

}
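The listing calls a `uniqueArray` helper that is not shown. A minimal stand-in, assuming it simply removes duplicates while keeping first-seen order, could look like this; `parseFeature` is a hypothetical helper added only to show how per-feature flags such as `fetch|always|gated` merge with the global flags.

```typescript
// Stand-in for the `uniqueArray` helper: drop duplicates, keep first-seen order.
function uniqueArray<T>(items: T[]): T[] {
  return Array.from(new Set(items));
}

// Split "fetch|always|gated" into a name and its flags, then merge global flags.
function parseFeature(feature: string, globalFlags: string[]) {
  const [name, ...featureFlags] = feature.split("|");
  return { name, flags: uniqueArray([...featureFlags, ...globalFlags]) };
}

// "gated" appears both as a feature flag and a global flag, but is kept once:
// the result has name "fetch" and flags ["always", "gated"].
console.log(parseFeature("fetch|always|gated", ["gated"]));
```

Deduplicating the flags matters for the cache key as well, since otherwise `fetch|gated` with a global gated flag would hash differently from plain `fetch|gated`.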

Conclusion

Replicating the functionality of polyfill.io was not as easy as I initially thought. Handling the request and caching the response in the worker was the easy part; the main problem I faced was making the polyfill-library adapt to the worker environment.

Speaking of the performance of the original polyfill.io vs this worker script, I noticed about 500ms-1.5s of lag for the very first request, while for repeated visits the worker was able to respond faster in my project. For my use case, I did feel that the Varnish VCL configuration performed slightly better than the Cloudflare worker script. I might be wrong, because the polyfill-library is not optimized for the worker environment; maybe we can do a detailed comparison after optimizing it.

As for Cloudflare Workers, my impression is that they are not yet mature in certain aspects. I found it hard to profile the performance of the script, set up tracing (to DataDog), and measure the execution time of individual function calls. I would conclude by saying that it is still relatively new, but I am confident it will catch up, and the best part is that I can code in JavaScript and take advantage of its tooling and package ecosystem.

Future

I am planning to optimize the polyfill-library so that it can adapt and perform much faster in a worker environment. Some of the optimization techniques I already have in mind are moving to the native streams API, changing TOML to JSON to remove the TOML parser dependency, and using less async-await while depending more on streams. If you have any ideas on how to optimize, please file an issue in the repo and maybe we can experiment with that idea.

Acknowledgments

The ideas and techniques mentioned here originated from the polyfill.io repo. Thanks to @jakedchampion for his blog posts on this topic; here are the links for a follow-up read: part-1 and part-2.

To see the full code, here is the github repo link.

Thank you, reader!
