This past summer I had the incredible opportunity to work under Olin professor Alexander Morrow on a very exciting project: developing easy-to-use systems that help blind sailors sail more effectively.

What is blind sailing?

Blind sailing is exactly what it sounds like: visually impaired sailors sailing, guided primarily by their senses of hearing and touch, and often relying on technology to assist them. It is an internationally recognized sport, with highly competitive regattas hosted by Blind Sailing International that attract sailors, guides, spectators, and engineers from around the world. Conventionally, blind sailing is done via one of two methods:

Homerus System - Consists of a set of sirens, one at each of the waypoints around which the sailors must sail, as well as one on each boat (to allow the sailors to recognize opposing boats and avoid collisions). Each siren has a different tone to allow for differentiation, but all sirens are enabled all the time. This is the system currently used for competitive regattas. It can be difficult to use, however, because the sirens blend together. The sirens are also extremely loud (they can be heard several hundred yards away), which poses a risk to sailors' hearing, as parts of the course pass extremely close to them.

Onboard Sighted Guide - Recreational sailing usually relies on sighted human guides who sail on the boat along with the blind sailors, and provide them with navigation guidance.

Project Goal

Olin's research work on blind sailing was initially requested by the Carroll Center for the Blind, but has since grown to include collaborations with Blind Sailing International, Blind Sail SF Bay, Community Boating, Boston Blind Sailing, and other organizations. The goal was to develop a safer and easier system to replace the antiquated and dangerous Homerus system. In particular, my project last summer focused on developing a low-power, GPS-based guidance system that lets blind sailors sail more easily, both recreationally and competitively.

First Steps

Initially, I developed an Android app that provides guidance very similar to what a sailor would receive from a sighted guide, while allowing them to sail independently. Although this was a stark change from the Homerus system with its sirens and buoys, it was designed to be easy to use for recreational and competitive sailors alike.

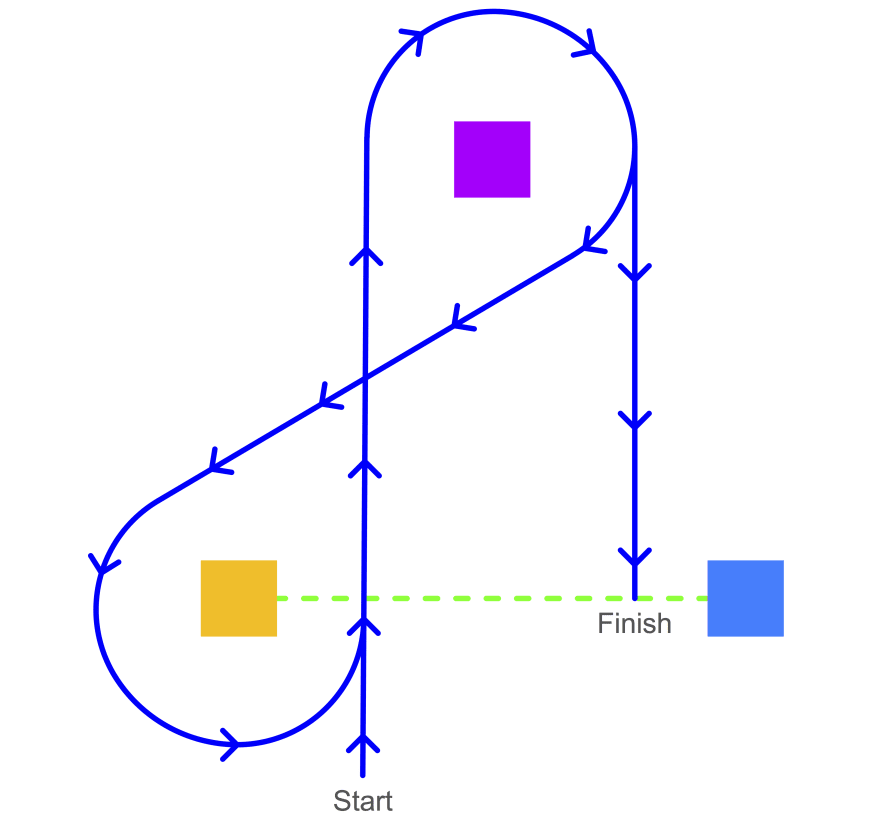

I developed the app using Kotlin on Android, taking advantage of GPS to precisely track the user's phone and notify them verbally (using text-to-speech) of the bearing and distance to their next waypoint ("40 degrees left, 20 meters"). The app also streamed the user's location in real time to a web server, which allowed each user's app to notify them if another sailor was approaching (to avoid collisions). Finally, I developed a web frontend for spectator viewing and post-race analysis of the sailors' performance.
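To make the "40 degrees left, 20 meters" guidance concrete, here is a minimal sketch of how such a phrase could be computed from two GPS fixes and the boat's compass heading. It is written in Python for brevity and is not the app's actual Kotlin code: the function names, the spherical-Earth radius, and the exact phrase format are all assumptions for illustration. It uses the standard haversine formula for distance and the standard initial-bearing formula for direction.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; a spherical-Earth assumption


def distance_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two fixes (haversine formula)."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def bearing_deg(lat1, lon1, lat2, lon2):
    """Initial compass bearing from fix 1 to fix 2, in [0, 360) degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dl = math.radians(lon2 - lon1)
    y = math.sin(dl) * math.cos(p2)
    x = math.cos(p1) * math.sin(p2) - math.sin(p1) * math.cos(p2) * math.cos(dl)
    return math.degrees(math.atan2(y, x)) % 360.0


def relative_turn_deg(heading_deg, target_bearing_deg):
    """Signed turn toward the target in [-180, 180); negative means left."""
    return (target_bearing_deg - heading_deg + 180.0) % 360.0 - 180.0


def guidance_phrase(heading_deg, lat, lon, wp_lat, wp_lon):
    """Hypothetical phrase builder; the result would be handed to text-to-speech."""
    turn = relative_turn_deg(heading_deg, bearing_deg(lat, lon, wp_lat, wp_lon))
    side = "left" if turn < 0 else "right"
    dist = distance_m(lat, lon, wp_lat, wp_lon)
    return f"{abs(round(turn))} degrees {side}, {round(dist)} meters"
```

The wrap-around in `relative_turn_deg` matters near north: a boat heading 350 degrees with a waypoint at 10 degrees should be told to turn 20 degrees right, not 340 degrees left.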

Working with Blind Sail SF Bay

After completing this initial milestone and testing in a large open field (on land), I finally had the opportunity to test my app with blind sailors at Blind Sail SF Bay, who were kind enough to host me and try it out. The GPS tracking and guidance worked great on open water. The sailors and guides all loved it, and one of the newer sailors quickly learned to navigate completely independently using just the app's text-to-speech guidance. I had a great time demoing the app, hearing firsthand about the sailors' experiences, and even practicing sailing a bit myself.

This is where things got interesting. Although the text-to-speech worked very effectively, the more experienced sailors wanted something closer to the older Homerus system because it felt more natural. This was partly a result of their prior experience, and partly because locating the direction of a sound is a natural human ability that all blind people rely on.

Spatial Audio Synthesis

I started exploring options that would feel more natural than an "X degrees heading, Y meters distance" readout. I discovered that it is possible to synthesize audio such that a user listening through stereo headphones "feels" like the sound is coming from a certain direction. The human brain can locate the direction and (to a lesser extent) the distance of a sound source just by listening closely, and it is possible to recreate this effect artificially, in real time, for blind sailors. While the specific details of the implementation are beyond the scope of this post, they are available in my ACM paper, which is linked at the end of this post.

I threw together a prototype that ran on a desktop PC and immediately noticed the first issue: rotating my head, even slightly, would completely break the illusion. This was easy to fix by putting together a custom mount for a BNO055 9-axis IMU (gyro, accelerometer, and magnetometer), which could precisely track the orientation of the user's head and, through a microcontroller, stream this information to the PC. There, the audio source locations were transformed so that they always appeared to come from the correct direction. This direction was determined using GPS to calculate the bearing between the user and the target waypoint, the same way it was done in the mobile app.
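The head-tracking correction described above boils down to subtracting the head's yaw from the GPS bearing to the waypoint. The Python sketch below illustrates that step under stated assumptions: the head yaw is taken to be a compass-referenced angle in degrees, and the HRTF set is assumed to be stored at a fixed angular spacing (5 degrees here, chosen only for illustration). Neither the function names nor the table layout come from the actual prototype.

```python
def wrap_deg(angle_deg):
    """Wrap any angle into [-180, 180) degrees."""
    return (angle_deg + 180.0) % 360.0 - 180.0


def source_azimuth_deg(target_bearing_deg, head_yaw_deg):
    """Direction of the waypoint relative to where the head is facing.

    0 means straight ahead, negative means to the listener's left.
    """
    return wrap_deg(target_bearing_deg - head_yaw_deg)


def nearest_hrtf_index(azimuth_deg, step_deg=5):
    """Index of the closest filter in a hypothetical table with one entry
    every `step_deg` degrees, starting at 0 (straight ahead)."""
    count = 360 // step_deg
    return round((azimuth_deg % 360.0) / step_deg) % count
```

For example, if the waypoint lies at a bearing of 90 degrees and the sailor turns their head to face 120 degrees, `source_azimuth_deg(90, 120)` gives -30, so the sound should now appear 30 degrees to their left.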

The next issue that I faced was latency: although the PC-based system worked, if the user's head moved quickly there was a noticeable latency which made the system inaccurate at best, disorienting at worst. However, the concept had been proven: by replicating the Homerus system artificially, the volume, tone, and sound sources could be controlled by the user, in real-time, meaning that they could avoid the major issues (hearing damage and difficulty of distinguishing the different sirens) of the Homerus system.

FPGA Acceleration

After proving the concept came the interesting part, and definitely the most involved part of the entire project: making it run with low latency and low power consumption in a small embedded form factor. After comparing CPU and GPU performance against theoretical estimates for FPGA DSP performance, I decided to build a custom FPGA accelerator for 3D audio synthesis. It uses a Xilinx Zynq-7020 SoC, a fairly capable chip with relatively low power consumption and cost. The ARM cores on the Zynq take in audio and location data from a mobile device, pull the HRTF data (the audio filters used to convert mono audio to stereo audio) from RAM, and place it into the FPGA's cache. The FPGA runs a set of custom DSP logic that I developed. This logic takes the sound sources, runs the HRTF algorithm (which converts each one from mono audio to stereo audio that appears to come from a certain location), and crossfades the results together. Because multiple audio sources are supported, the user can choose to listen to all waypoints and opposing boats simultaneously, or flip between them at will. The result is output directly to the user's headphones, with minimal latency between head movements (tracked using a BNO055 IMU connected directly to the FPGA fabric) and audio updates.
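As a software illustration of what the DSP logic computes (not the Verilog design itself), the Python sketch below filters a mono signal through a left-ear and a right-ear impulse response and then mixes several spatialized sources with per-source gains. It assumes the HRTFs are applied as time-domain FIR filters, which is one common approach; the paper describes the real implementation. The function names are hypothetical, and the weighted mix is only one way to read "crossfading" between sources.

```python
def fir_filter(samples, taps):
    """Direct-form FIR convolution, truncated to the input length."""
    out = []
    for n in range(len(samples)):
        acc = 0.0
        for k, h in enumerate(taps):
            if n - k >= 0:
                acc += h * samples[n - k]
        out.append(acc)
    return out


def spatialize(mono, hrir_left, hrir_right):
    """Turn one mono source into (left, right) channels using a pair of
    head-related impulse responses for the desired direction."""
    return fir_filter(mono, hrir_left), fir_filter(mono, hrir_right)


def mix_stereo(sources, gains):
    """Weighted sum of several (left, right) sources of equal length.

    Ramping the gains over time fades between waypoints or boats."""
    length = len(sources[0][0])
    left = [0.0] * length
    right = [0.0] * length
    for (src_left, src_right), gain in zip(sources, gains):
        for i in range(length):
            left[i] += gain * src_left[i]
            right[i] += gain * src_right[i]
    return left, right


# Made-up filters, NOT measured HRTF data: the right ear hears the click
# two samples later and at half the level, as if the source were to the left.
click = [1.0, 0.0, 0.0, 0.0]
left, right = spatialize(click, [1.0], [0.0, 0.0, 0.5])
```

The delay and level difference between the two ears in this toy example are the same kinds of cues that real measured filters encode, along with direction-dependent changes in frequency response.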

After I implemented the FPGA accelerator in Verilog and debugged and verified it, both in simulation and on the FPGA itself, the system worked successfully. The audio from the stereo headphones was nearly indistinguishable from real life, and even the quickest head movements did not cause any noticeable jitter in the audio source location. Test results showed that users were able to locate the audio sources quickly and precisely (full results are available in my paper). Furthermore, the power consumption was low enough for the system to run for many hours from a small battery that the sailor could wear on their person, along with the FPGA itself.

Examples of HRTF Algorithm

The following video shows a live demonstration of the audio synthesis in action. The HRTFs (Head-Related Transfer Functions) for each ear are shown at the bottom (the graph represents the frequency response for each side). For the full experience, wear stereo headphones (it may help to close your eyes) so that you can feel the spatial audio effect. (YouTube has slightly botched the audio quality, and the FPGA itself provides a much better experience, but the video still gives a general feel for how it works.)

Publication and Presentation at FPGA'2020 Conference

After completing my project, I submitted it for publication at the FPGA'2020 conference, and it was accepted. I attended the conference in February 2020 and gave a short talk on my paper. I received plenty of feedback and was able to network with many other researchers working on FPGA-based accelerators.

My paper was published in the ACM conference proceedings and is available at https://dl.acm.org/doi/10.1145/3373087.3375323 (as of this writing it is available open-access).
