We recently launched record.a.video, a new web app that can record your camera and screen right from the browser! When you are done recording, it uploads the video to api.video to create a link for easy sharing of your video.

In the process of building this app, I learned a lot about a few web APIs, so I thought I would write in a bit more detail about how I used these APIs and how they work.

In this post, we'll use the getUserMedia API to record the user's camera and microphone.

In post 2, I discuss recording the screen using the Screen Capture API.

Using the video streams created in posts 1 & 2, I draw the combined video on a canvas.

In post 3, I'll discuss the MediaRecorder API, where I create a video stream from the canvas to create the output video. This output stream feeds into the video upload (for video on demand playback) or the live stream (for immediate and live playback).

In post 4, I'll discuss the Web Speech API. This API converts audio into text in near real time, allowing us to create 'instant' captions for any video being created in the app. This is an experimental API that only works in Chrome, but it was so neat that I included it in record.a.video anyway.

MediaDevices.getUserMedia()

Let's start at the beginning. With record.a.video, we grab the camera and microphone inputs from the device to record the video. The getUserMedia API allows the browser to interact with external media devices and read their output.

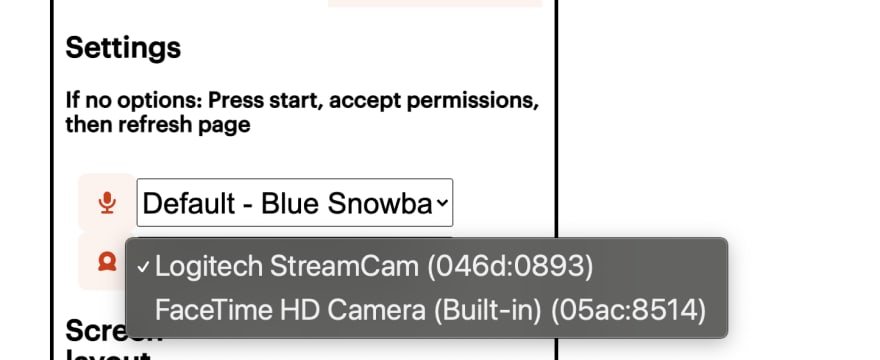

In record.a.video, we enumerate the video and audio inputs to display them as options for the recording:

Once the capture begins, we display the chosen camera, and record from the chosen microphone. So, how does this work?

Enumerating the devices

When you call enumerateDevices(), you get the details of every device:

    // Arrays that collect the camera IDs and labels for the dropdown.
    var deviceIds = [];
    var deviceNames = [];
    var counter = 0;

    navigator.mediaDevices.enumerateDevices()
      .then(function(devices) {
        devices.forEach(function(device) {
          console.log(device.kind + ": " + device.label + " id = " + device.deviceId);
          if (device.kind == "videoinput") {
            // add a select to the camera dropdown list
            // var option = document.createElement("option");
            console.log(device);
            deviceIds[counter] = device.deviceId;
            deviceNames[counter] = device.label;
            counter++;
          }
        });
      });

If we look at the console log, we get a list of all the inputs and outputs:

    audioinput: Default - Blue Snowball (046d:0ab9) id = default
    audioinput: Blue Snowball (046d:0ab9) id = 693957e7a6c63d00cf9068338ec0108bfdfad2f108182bc988f7ed79430d5024
    audioinput: Logitech StreamCam (046d:0893) id = ec257829b4d910400bdff0fe8639e3e2c5b9bd761c1cd4454b8f272672e4d482
    audioinput: MacBook Pro Microphone (Built-in) id = be26a24fb1ca054193d51c80a6c875a091a285153959ddae8ab24f55c939bcd5
    audioinput: Iriun Webcam Audio (Virtual) id = 5e911d92f330fb9333fee989407c5c84c3b99af29046d8182e582545e772687f
    videoinput: Logitech StreamCam (046d:0893) id = 8d59c8e7bc02076c4230ba70125c03491020950b008769bd593bdb13e33c1ce7
    videoinput: FaceTime HD Camera (Built-in) (05ac:8514) id = e3fce20226c193876c5ff206407fd4815ad5b1e6329e67a8e82c9636d8e75c8d
    audiooutput: Default - External Headphones (Built-in) id = default
    audiooutput: U32J59x (DisplayPort) id = b342ee4661c78101936e50a2b5a3e5080d5ed748e031547e492c7ab0eeddd9df
    audiooutput: External Headphones (Built-in) id = 4a3980d8579a418193b1e8ff46771f204e87b2997b90d0d4e7e60a4acaae1235
    audiooutput: MacBook Pro Speakers (Built-in) id = b7045191ebec43d797348478004f25472c868bcae61ad04a7de73f70878e27b2

Here we learn that, yes, I have a lot of audio/video devices hooked into my computer. (mental note: why is Iriun webcam only presenting as audio, and not as video?) Each device has a:

- kind: the device type, one of audioinput, audiooutput, or videoinput

- label: A text description for human consumption

- deviceId: a random-looking string that uniquely identifies the device.

We'll use the deviceId to decide which device to broadcast, but in record.a.video we show the labels in the form, since they are better descriptions for our users.
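To make the split between labels and IDs concrete, here is a minimal sketch of how a camera dropdown could be filled from the enumeration result. The helper name `toCameraOptions` and the `cameraSelect` element id are assumptions for illustration, not the code record.a.video actually uses. One thing to be aware of: browsers generally return empty labels until the user has granted camera or microphone permission, so the sketch falls back to a generic name.

```javascript
// Hypothetical helper: turn the enumerateDevices() result into
// { value, text } pairs for a camera <select>.
function toCameraOptions(devices) {
  return devices
    .filter(function (device) { return device.kind === "videoinput"; })
    .map(function (device, index) {
      return {
        value: device.deviceId,                         // used in the request
        text: device.label || "Camera " + (index + 1)   // shown to the user
      };
    });
}

// Browser-only part: skipped when there is no DOM or no mediaDevices.
if (typeof document !== "undefined" &&
    typeof navigator !== "undefined" && navigator.mediaDevices) {
  navigator.mediaDevices.enumerateDevices().then(function (devices) {
    var select = document.getElementById("cameraSelect"); // assumed element id
    if (!select) { return; }
    toCameraOptions(devices).forEach(function (item) {
      var option = document.createElement("option");
      option.value = item.value;
      option.text = item.text;
      select.appendChild(option);
    });
  });
}
```

The selected option's value is then the deviceId to pass along in the camera request.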

Picking a broadcast

Once a user has chosen their video input, we can make a request to obtain this video. (The same approach works for audio as well, so I'm only describing video here):

    // Use the first of these names that the browser defines.
    navigator.getUserMedia = (
      navigator.mediaDevices.getUserMedia ||
      navigator.mediaDevices.mozGetUserMedia ||
      navigator.mediaDevices.msGetUserMedia ||
      navigator.mediaDevices.webkitGetUserMedia
    );

When requesting a camera, you can apply constraints to the request to enforce exactly what you would like, for example:

    var cameraW = 1280;
    var cameraH = 720;
    var cameraFR = 25;

    var camera1Options = {
      audio: false,
      video: {
        deviceId: deviceIds[0],
        width: { min: 100, ideal: cameraW, max: 1920 },
        height: { min: 100, ideal: cameraH, max: 1080 },
        frameRate: { ideal: cameraFR }
      }
    };

Here I set the ideal video size to 1280x720, but allow for differences with the min and max parameters. If the camera cannot provide 1280x720, the browser picks the closest size the camera does support within that range.
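One detail worth knowing: a bare value such as `deviceId: deviceIds[0]` is treated as a preference, in the same way as `ideal`, so the browser may fall back to a different camera. If the request should fail rather than silently switch cameras, the `exact` keyword can be used. The sketch below wraps this in a helper; `buildCameraConstraints` and its parameters are hypothetical names, not part of record.a.video.

```javascript
// Hypothetical helper that builds a getUserMedia constraints object.
// requireDevice = true asks for exactly this camera (the request is
// rejected if it is unavailable); false only expresses a preference.
function buildCameraConstraints(deviceId, width, height, frameRate, requireDevice) {
  return {
    audio: false,
    video: {
      deviceId: requireDevice ? { exact: deviceId } : { ideal: deviceId },
      width: { min: 100, ideal: width, max: 1920 },
      height: { min: 100, ideal: height, max: 1080 },
      frameRate: { ideal: frameRate }
    }
  };
}

// "some-device-id" is a placeholder for an ID from enumerateDevices().
var strictOptions = buildCameraConstraints("some-device-id", 1280, 720, 25, true);
console.log(JSON.stringify(strictOptions.video.deviceId)); // prints {"exact":"some-device-id"}
```

With `exact`, a camera that cannot be matched causes the getUserMedia promise to reject with an `OverconstrainedError` instead of quietly using another device.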

Note: I have set the audio to false. In this demo, I am applying the video onto the screen with a video tag. If the browser were to play audio from the camera, the mic would pick it up, and you'd get an awful feedback loop. I want to avoid this. I could just mute the video, but I thought it would be cool to take another Media Stream for the audio, and apply that track to my stream during recording (after it appears on the screen).
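A minimal sketch of that idea: request a second, audio-only stream and add its track to the stream that will be recorded. `addMicrophoneTracks`, `startRecordingAudio`, and `micDeviceId` are hypothetical names, and the actual record.a.video code may differ. The target should be the stream handed to the recorder, not the one playing in the video tag, otherwise the feedback problem returns.

```javascript
// Hypothetical helper: copy every audio track from micStream onto
// recordingStream and return how many tracks were added.
function addMicrophoneTracks(recordingStream, micStream) {
  var tracks = micStream.getAudioTracks();
  tracks.forEach(function (track) {
    recordingStream.addTrack(track);
  });
  return tracks.length;
}

// Browser-only usage: ask for the chosen microphone without video,
// then attach its audio to the stream that is about to be recorded.
function startRecordingAudio(recordingStream, micDeviceId) {
  return navigator.mediaDevices
    .getUserMedia({ audio: { deviceId: micDeviceId }, video: false })
    .then(function (micStream) {
      addMicrophoneTracks(recordingStream, micStream);
      return recordingStream;
    });
}
```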

Getting the stream and placing it on the page

I have a video element called 'video1'. I assign the stream returned by getUserMedia to it:

    var video1 = document.getElementById("video1");
    navigator.mediaDevices.getUserMedia(camera1Options).then(function(stream1) {
      // Show the live camera feed in the video element.
      video1.srcObject = stream1;
    });

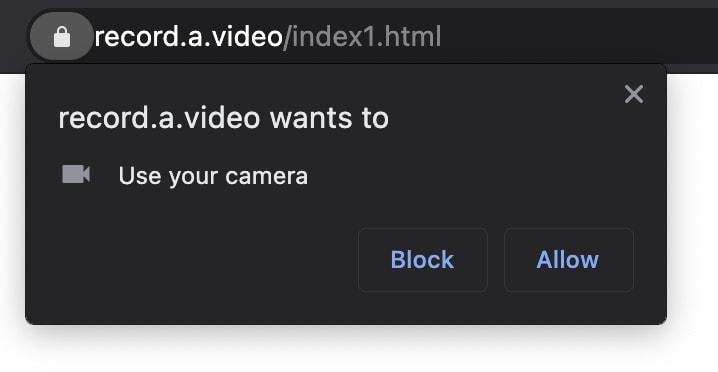

When this call is made, the browser asks the user for permission to use the camera. If the user accepts the sharing request, the browser grabs the camera feed and applies it to the video1 element.

That's all there is to it!

With the getUserMedia API, pulling the video feed from your camera to the browser just takes a few lines of code.
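The permission prompt can also be declined, and the camera may be missing or busy. In those cases the getUserMedia promise rejects, so a `.catch` is worth adding. The following is a sketch only: `describeCameraError` and `showCamera` are hypothetical helpers, and the messages are placeholders.

```javascript
// Map the error names getUserMedia can reject with to short messages.
function describeCameraError(error) {
  switch (error.name) {
    case "NotAllowedError":
      return "Camera access was denied.";
    case "NotFoundError":
      return "No camera matching the request was found.";
    case "NotReadableError":
      return "The camera is already in use or could not be started.";
    case "OverconstrainedError":
      return "No camera can satisfy the requested constraints.";
    default:
      return "Could not start the camera: " + error.name;
  }
}

// Browser-only usage; "video1" and the options object follow this post.
function showCamera(camera1Options) {
  var video1 = document.getElementById("video1");
  return navigator.mediaDevices.getUserMedia(camera1Options)
    .then(function (stream1) {
      video1.srcObject = stream1;
    })
    .catch(function (error) {
      console.error(describeCameraError(error));
    });
}
```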

If one camera is great, what about two?

While most desktops do not have multiple cameras, most smartphones do. I thought it would be very cool to extract a video feed from 2 cameras on one device.

(Imagine a real estate walkthrough where you can see the surroundings, and the face of the person presenting.) So I built a demo application. You can see this application in action at record.a.video/index1.html.

I basically repeat the camera1 and video1 code above with camera2 and video2, and use the first two cameras reported by the enumeration query.
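As a rough sketch of that repetition, the two requests can be generated in a loop instead of being written out twice. `firstTwoCameraIds` and `startBothCameras` are hypothetical names, and the element ids `video1` and `video2` are assumed to exist on the page; the demo itself may be structured differently.

```javascript
// Hypothetical helper: device IDs of the first two cameras reported.
function firstTwoCameraIds(devices) {
  return devices
    .filter(function (device) { return device.kind === "videoinput"; })
    .slice(0, 2)
    .map(function (device) { return device.deviceId; });
}

// Browser-only usage: one stream per camera, shown in video1 and video2.
function startBothCameras() {
  return navigator.mediaDevices.enumerateDevices().then(function (devices) {
    var requests = firstTwoCameraIds(devices).map(function (id, index) {
      var options = { audio: false, video: { deviceId: id } };
      return navigator.mediaDevices.getUserMedia(options).then(function (stream) {
        document.getElementById("video" + (index + 1)).srcObject = stream;
        return stream;
      });
    });
    // Resolves with both streams once each camera has started.
    return Promise.all(requests);
  });
}
```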

When I test the page on desktop, I get both cameras to stream (which is super cool!). Unfortunately, on Android & iOS only one camera can stream at a time. The first camera grabs a still image from the start of capture and then moves to an inactive state as the second camera becomes active. (On my phone, the front camera has the still image, and the rear camera continues to show video.)

I imagine that this is a battery/CPU saving measure, as running 2 cameras full time on a phone would quickly drain the battery.

Conclusion

The MediaDevices.getUserMedia() API allows the browser to use external cameras and microphones to display (and record) content. See it in action at record.a.video and build your own version of a video recording system today!
