SDKs win developers. The clear DX advantage of an SDK over raw HTTP is undeniable–type safety, autocomplete, and idiomatic language patterns all combine to reduce the overhead of API integration.

But building SDKs is also a loss for developers: your developers. Maintaining multiple language-specific SDKs means juggling different build systems, package managers, and testing frameworks. Each language requires knowledge of its ecosystem and deployment pipelines. It's resource-intensive work that pulls focus from core API development.

This is why there is a growing list of SDK generators. These tools handle the heavy lifting of translating OpenAPI definitions into native code, managing cross-language type mappings, handling authentication flows, and implementing error-handling patterns. The goal is to produce SDKs native to each target language while maintaining consistency with your API's design.

However, not all generators are created equal–the quality of the generated code, the developer experience they provide, and their ability to handle complex API patterns vary significantly. SDK generators need to consider two sets of users:

- The developers building the SDK need tooling that streamlines the generation process and maintains consistency across multiple language targets.

- The developers using the SDK require intuitive, well-documented libraries that feel native to their preferred programming language.

Here, we’re testing two SDK generators: Stainless and Speakeasy. We’ll follow a realistic workflow for a developer trying to create their own SDK:

- We’ll initially try to generate the SDK from the specification as it is currently written.

- Once the SDK is generated, we’ll look into the structure of the SDK and project.

- We’ll finally build an AI application on top of the SDK.

We’ll use an API that most developers are now familiar with: the OpenAI API. This API already has an SDK, so we know it works. We’ll create two Node.js SDKs based on the OpenAI OpenAPI specification, trying to understand what it takes for each SDK generator to go from spec to alpha to 1.0.0.

Generating the Initial SDK

We want to work as each product intends, so we will follow the suggested workflow from the generator. This is one of the core differences between Stainless and Speakeasy:

- The Stainless workflow prioritizes getting you an up-and-running SDK as soon as possible.

- Speakeasy prioritizes linting and validation of the SDK, then the build.

Let’s start with Stainless.

Generating an SDK with Stainless

Stainless uses a UI approach. You upload your OpenAPI spec, and it immediately generates an SDK.

This process took 28 seconds for the OpenAI API spec (~24k LOC). Using the chat.ts file that Stainless generated for the /chat/completions endpoint as an example, let's examine what happened during those 28 seconds.

You can find the OpenAPI spec for this endpoint here. First, there is a core class implementation:

import { APIResource } from '../resource';

export class Chat extends APIResource {

/**

* Creates a chat completion for the provided messages

*/

create(

params: ChatCreateParams,

options?: Core.RequestOptions

): Core.APIPromise<ChatCreateResponse> {

return this._request('POST', '/chat/completions', params, options);

}

}

This class shows several decisions by the generator:

- It extends APIResource to inherit standard API functionality like authentication and request handling

- The method name 'create' is derived from the OpenAPI operationId

- The return type uses a generic APIPromise to handle asynchronous operations while maintaining type safety

- The generator identifies that this is a POST endpoint and encodes that in the _request call

The generator then creates a type hierarchy for the response:

export namespace ChatCreateResponse {

export interface Choice {

// The generator converts the OpenAPI enum into a union type

finish_reason: 'stop' | 'length' | 'tool_calls' | 'content_filter' | 'function_call';

message: Choice.Message;

logprobs: Choice.Logprobs | null;

}

// Nested namespace to handle deep structures

export namespace Choice {

export interface Message {

role: 'assistant';

content: string | null;

tool_calls?: Array<Message.ToolCall>;

// Note how the generator handles deprecated fields

function_call?: Message.FunctionCall; // deprecated

}

// Further nesting for complex types

export namespace Message {

export interface ToolCall {

id: string;

type: 'function';

function: ToolCall.Function;

}

}

}

export interface Usage {

prompt_tokens: number;

completion_tokens: number;

total_tokens: number;

// The generator preserves detailed token breakdowns

completion_tokens_details?: Usage.CompletionTokensDetails;

prompt_tokens_details?: Usage.PromptTokensDetails;

}

}

Nested namespaces maintain the organization of complex types. Nullable fields are properly typed with | null, and optional fields use the ? operator. Deprecated fields are preserved but marked appropriately. Enums are converted to TypeScript union types for better type safety.
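To make the enum-to-union and nullable-versus-optional points concrete, here is a minimal sketch. It is not generated output: the FinishReason union is copied from the snippet above, while describeFinishReason, MessageLike, textOf, and the message strings are hypothetical names made up for illustration.

```typescript
// The union below is copied from the generated Choice.finish_reason field.
type FinishReason = 'stop' | 'length' | 'tool_calls' | 'content_filter' | 'function_call';

// Hypothetical helper: maps each finish reason to a human-readable note.
function describeFinishReason(reason: FinishReason): string {
  switch (reason) {
    case 'stop':
      return 'model finished naturally';
    case 'length':
      return 'hit the token limit';
    case 'tool_calls':
    case 'function_call':
      return 'model requested a tool or function call';
    case 'content_filter':
      return 'output was filtered';
    default: {
      // If a new member is added to the union, this assignment stops compiling.
      const unreachable: never = reason;
      return unreachable;
    }
  }
}

// Nullable vs optional, mirroring the shape of the generated Message interface.
interface MessageLike {
  content: string | null; // always present, but may be null
  tool_calls?: Array<{ id: string }>; // may be absent entirely
}

// Hypothetical helper: treats null content as an empty string.
function textOf(message: MessageLike): string {
  return message.content ?? '';
}
```

Because the union is closed, a typo such as 'lenght' is rejected by the compiler rather than surfacing at runtime, which is the practical benefit of converting enums to union types.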

The generator also creates types for the request parameters:

export interface ChatCreateParams {

// Required parameters are not optional

messages: Array<

| ChatCompletionRequestSystemMessage

| ChatCompletionRequestUserMessage

| ChatCompletionRequestAssistantMessage

| ChatCompletionRequestToolMessage

>;

model: string | ModelEnum;

// Optional parameters

frequency_penalty?: number | null;

logprobs?: boolean | null;

max_tokens?: number | null;

n?: number | null;

presence_penalty?: number | null;

response_format?: ChatCreateParams.ResponseFormat;

seed?: number | null;

stop?: string | Array<string> | null;

stream?: boolean | null;

temperature?: number | null;

tool_choice?: 'none' | 'auto' | ChatCreateParams.ChatCompletionNamedToolChoice | null;

tools?: Array<ChatCreateParams.Tool> | null;

top_p?: number | null;

user?: string | null;

}

// Nested types for request parameters

export namespace ChatCreateParams {

export interface ResponseFormat {

type: 'text' | 'json_object';

// The generator adds a schema property only for json_object type

schema?: JsonSchema;

}

export interface Tool {

type: 'function';

function: Tool.Function;

}

// Notice how it handles function parameters

export namespace Tool {

export interface Function {

name: string;

description?: string;

parameters: Record<string, unknown>;

}

}

}

Again, we have union types for messages that allow different message types and a nested structure using namespaces to maintain organization. Required vs optional parameters are correctly distinguished, and the generator handles complex types like Record<string, unknown> for flexible JSON schemas. Tool definitions are properly typed for function calling.
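As a rough illustration of why a union of message types is useful to SDK users, the sketch below narrows on the role field to reach role-specific properties. The three message interfaces are simplified, hypothetical stand-ins for the generated ChatCompletionRequest*Message types, and ChatParamsSketch and toolCallIds are invented names.

```typescript
// Simplified, hypothetical versions of the request message types.
interface SystemMessage { role: 'system'; content: string }
interface UserMessage { role: 'user'; content: string }
interface ToolMessage { role: 'tool'; content: string; tool_call_id: string }
type RequestMessage = SystemMessage | UserMessage | ToolMessage;

interface ChatParamsSketch {
  messages: RequestMessage[]; // required
  model: string; // required
  temperature?: number | null; // optional and nullable
}

// Narrowing on the `role` discriminant gives access to role-specific fields.
function toolCallIds(params: ChatParamsSketch): string[] {
  const ids: string[] = [];
  for (const message of params.messages) {
    if (message.role === 'tool') {
      ids.push(message.tool_call_id);
    }
  }
  return ids;
}

const exampleParams: ChatParamsSketch = {
  model: 'gpt-4o',
  messages: [
    { role: 'user', content: 'What is the weather?' },
    { role: 'tool', content: '22C and sunny', tool_call_id: 'call_1' },
  ],
};
```

Leaving out model or messages in exampleParams is a compile-time error, while omitting temperature is fine, which matches the required-versus-optional split in the generated interface.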

A key part of SDKs is documentation. The generator preserves the OpenAPI documentation for use with the SDK:

/**

* Creates a chat completion for the provided messages

* @param messages - A list of messages comprising the conversation so far

* @param model - ID of the model to use

* @returns A chat completion response

*/

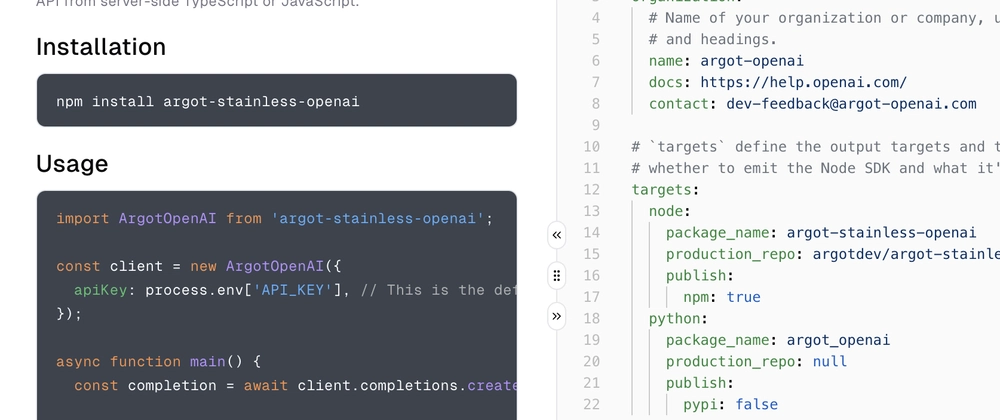

After those 28 seconds, the user has an SDK stored in a private repo on the Stainless GitHub. You request access to start using it. You also have:

- Documentation for each resource/method

- A Stainless configuration file that allows you to change aspects of the SDK

The Stainless configuration file is an essential intermediary between the OpenAPI specification and the generated SDK.

The generated README has the temporary npm install option directly from the repo, which you can use before publishing your package. So, at this point, with Stainless, you have an SDK you can start using. However, it's made clear that it won’t work as intended. The UI also has a diagnostics pane, where, in this case, there were 139 warnings and notes. There were no errors, though, allowing the SDK to be generated and used.

Generating an SDK With Speakeasy

Speakeasy takes a different approach, using a CLI to guide the SDK generation:

brew install speakeasy-api/homebrew-tap/speakeasy

speakeasy quickstart

The quickstart asks a few questions before starting the generation process: which specs to use, the name of your SDK, the SDK language, and where to save the generated SDK. In contrast to Stainless, where the SDK generation is performed within the Stainless product, everything is local here.

Validation and linting come first, and our OpenAI spec failed the validation:

ERROR validation error: [line 7813] path-params - POST must define parameter user_id as expected by path /organization/users/{user_id}

ERROR validation error: [line 8038] path-params - POST must define parameter project_id as expected by path /organization/projects/{project_id}

ERROR validation error: [line 8297] path-params - POST must define parameter project_id as expected by path /organization/projects/{project_id}/users/{user_id}

ERROR validation error: [line 8297] path-params - POST must define parameter user_id as expected by path /organization/projects/{project_id}/users/{user_id}

OpenAPI document linting complete. 4 errors, 12 warnings, 105 hints

Thus, the SDK generation failed. Stainless also flagged the missing parameter definitions, but it categorized them as warnings and didn’t stop the SDK generation.
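The rule behind these errors is simple: every {placeholder} in a path template needs a matching parameter with in: path. The sketch below shows one way such a check could work. It is not Speakeasy's or Stainless's implementation, it operates on a deliberately reduced, hypothetical slice of an OpenAPI document, and it ignores parameters declared at the path-item level, which the real specification also allows.

```typescript
// Minimal, hypothetical slice of an OpenAPI document: path -> method -> operation.
interface ParameterSketch { name: string; in: 'path' | 'query' | 'header' | 'cookie' }
interface OperationSketch { parameters?: ParameterSketch[] }
type PathsSketch = Record<string, Record<string, OperationSketch>>;

// Returns one message per `{placeholder}` that an operation fails to declare.
function findMissingPathParams(paths: PathsSketch): string[] {
  const problems: string[] = [];
  for (const [path, operations] of Object.entries(paths)) {
    // Extract placeholder names such as "user_id" from "/users/{user_id}".
    const placeholders = (path.match(/\{[^}]+\}/g) ?? []).map((p) => p.slice(1, -1));
    for (const [method, operation] of Object.entries(operations)) {
      const declared = new Set(
        (operation.parameters ?? []).filter((p) => p.in === 'path').map((p) => p.name),
      );
      for (const placeholder of placeholders) {
        if (!declared.has(placeholder)) {
          problems.push(
            `${method.toUpperCase()} must define parameter ${placeholder} as expected by path ${path}`,
          );
        }
      }
    }
  }
  return problems;
}
```

Whether a finding like this is reported as an error or as a warning is a policy decision made by each tool, which is exactly where the two generators diverged here.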

Speakeasy also offers a separate linting operation, which we can run for more diagnostics:

speakeasy lint openapi -s openai-openapi.yaml

This linting returned eight errors, 62 warnings, and 641 hints. We then added the four missing path parameter definitions to the spec and restarted the generation process, which this time produced the SDK.

The SDK is saved to a local directory. To use the SDK, you have to create your own repo, install the Speakeasy GitHub app, set up a Speakeasy secret, and then run the Speakeasy action. The SDK is now in your own repo, with all documentation generated (though the README has the npm install wrong [npm install <UNSET>]).

Overall Thoughts on Generation

Stainless makes the SDK generation process much easier and quicker. If you want to start an SDK immediately and then refine it over time, Stainless is a better option.

Speakeasy prioritizes two things. First, validation before generation is great for creating a robust SDK. However, as we’ll see, the validation step didn’t catch an issue with the SDK. Second, local ownership is good because you have complete control over your SDK; it is bad because you have less information about your SDK during generation.

You might prefer one over the other; in this case, the more difficult but supposedly robust Speakeasy generation seems to have been for naught.

Understanding Our SDK Structure

SDK structure is an integral part of SDK use. An SDK with an unintuitive structure can hinder developer adoption and increase integration time, regardless of how well it implements the underlying API functionality.

The Stainless and Speakeasy SDKs have similar high-level organizations but different implementation details. Both have the core SDK functionality, tests, documentation and build tooling, with Stainless being more test-focused and Speakeasy being more documentation-heavy.

Testing Philosophy and Implementation

Stainless demonstrates a test-first architecture, with tests mirroring the API structure in /tests/api-resources/. Each API feature has dedicated test coverage–for instance, separate test files for audio transcription (transcriptions.test.ts) and translation (translations.test.ts) rather than a single audio test file. The test suite includes comprehensive coverage for edge cases, such as empty keys and different retry scenarios, enabling precise testing of individual API behaviors and easier debugging when specific features fail.

While Speakeasy also includes unit tests, they're organized at the root of the /tests directory with less granular separation. Its approach emphasizes documentation and type definitions, with extensive model documentation in /docs/models/. The SDK uses runtime validation through zod, which requires developers to carefully handle error objects when the API returns invalid data. This creates a different balance between compile-time and runtime type safety.

Cross-Platform Architecture and Runtime Support

Stainless's implementation includes a sophisticated cross-platform compatibility layer through its _shims directory. This handles runtime-specific implementations for:

- Node.js environments (node-runtime.ts)

- Deno (runtime-deno.ts)

- Bun (bun-runtime.ts)

- Web browsers (web-runtime.ts)

The shim architecture is particularly notable for its automated runtime detection. The _shims/index.ts file handles runtime selection, while package.json uses conditional exports to automatically choose the correct implementation. This allows the SDK to maintain consistent behavior across platforms while optimizing for platform-specific features–for example, using native fetch in browsers while employing more efficient HTTP clients in Node.js.
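The generated _shims code is not reproduced in this article, so the following is only a sketch of what runtime detection can look like in general. The detection order, the RuntimeName type, and the detectRuntime function are assumptions; only the four shim file names come from the list above.

```typescript
type RuntimeName = 'deno' | 'bun' | 'node' | 'web' | 'unknown';

// Hypothetical detection order. Deno and Bun are checked first because both
// can also expose a Node-compatible `process` global.
function detectRuntime(globals: Record<string, unknown>): RuntimeName {
  if ('Deno' in globals) return 'deno';
  if ('Bun' in globals) return 'bun';
  const proc = globals['process'] as { versions?: { node?: string } } | undefined;
  if (proc?.versions?.node !== undefined) return 'node';
  if ('window' in globals || 'self' in globals) return 'web';
  return 'unknown';
}

// Each known runtime maps to the shim file named in the list above.
const shimFiles: Record<Exclude<RuntimeName, 'unknown'>, string> = {
  node: 'node-runtime.ts',
  deno: 'runtime-deno.ts',
  bun: 'bun-runtime.ts',
  web: 'web-runtime.ts',
};
```

In real code the argument would be the global object; taking it as a parameter simply makes the function easy to exercise with fake environments. Conditional exports in package.json move the same decision to install or bundle time, so no detection code has to run at all.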

In contrast, Speakeasy takes a more manual approach to cross-platform support. It relies on developers explicitly importing runtime-specific packages (e.g., node-fetch, node:fs) and requires manual importing of shims files. While this provides more explicit control, it also introduces the possibility of runtime errors if the correct shims aren't imported.

Resource Organization

The repos take contrasting approaches to resource organization that reflect different philosophies about API consumption. Stainless uses a hierarchical resource structure that closely mirrors the API's own organization:

/resources/

/audio/

audio.ts

translations.ts

transcriptions.ts

/images/

variations.ts

edits.ts

generations.ts

This tight coupling between related functionality improves cohesion and clarifies the relationship between different API features. However, locating individual methods can be slightly more complicated if you aren't already familiar with the overall structure.

Speakeasy opts for a flatter organization with clearer separation between interfaces and implementations:

/sdk/

audio.ts

images.ts

/funcs/

audioCreateTranscription.ts

imagesCreateVariation.ts

Splitting SDK definitions from function implementations makes individual endpoints more discoverable and potentially easier to maintain in isolation. The tradeoff is that related functionality is more dispersed throughout the codebase.

Type System Implementation

Both SDKs extensively leverage TypeScript's type system but with fundamentally different approaches to type organization. Stainless generates deeply nested namespaces for complex types. This approach creates a clear hierarchy of types that matches the JSON structure of API responses, making it easier to understand the relationship between different parts of the API's data model. The namespacing also helps prevent naming collisions in complex API responses.

Speakeasy favors flatter type hierarchies with more explicit naming. This approach prioritizes immediate comprehension of type relationships through naming conventions rather than structural organization. While this can make types more straightforward to find and reference, it requires more careful attention to naming to prevent confusion in complex APIs.

Build System and Tooling

The build systems reflect their different philosophies about SDK maintenance and evolution. Stainless provides granular build scripts that give developers direct control over the development process:

/scripts/

bootstrap

lint

build

format

test

Each script handles a specific development aspect, allowing developers to run individual tasks or modify build behavior as needed. While this requires more active maintenance of the build scripts, it provides maximum flexibility for customizing the build process.

Speakeasy relies more on generated configurations and SDK-specific tooling:

/.speakeasy/

workflow.yaml

gen.yaml

workflow.lock

This approach emphasizes consistency through automation, using a single configuration file (gen.yaml) and GitHub workflows for build and deployment processes. While this reduces the maintenance burden, it also means that customizations must work within the constraints of the generated configuration system.

These architectural choices create different tradeoffs for SDK maintenance and evolution:

- Stainless's approach provides more immediate developer control but requires more active maintenance of individual components.

- Speakeasy's generated approach offers stronger guarantees about consistency but potentially less flexibility for custom build requirements.

As with generation, the choice between them depends on your specific requirements for SDK customization, available resources, and the importance of standardization across multiple SDKs.

The Developer Experience

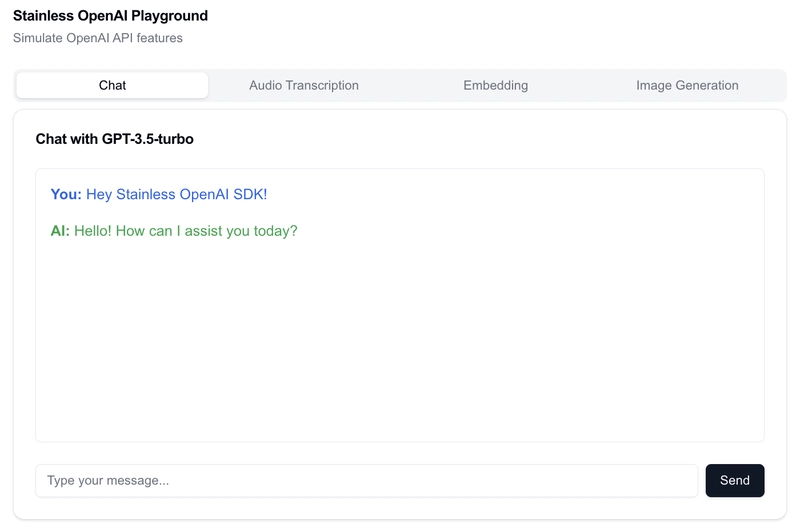

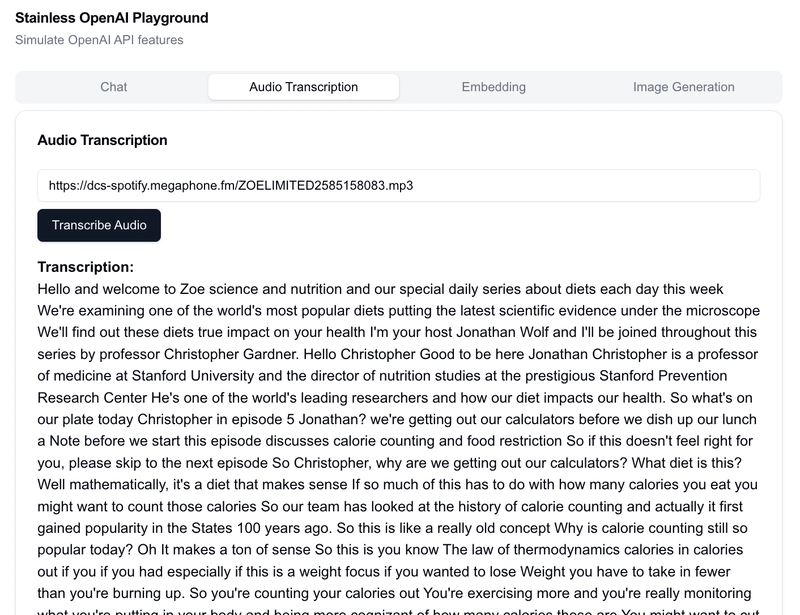

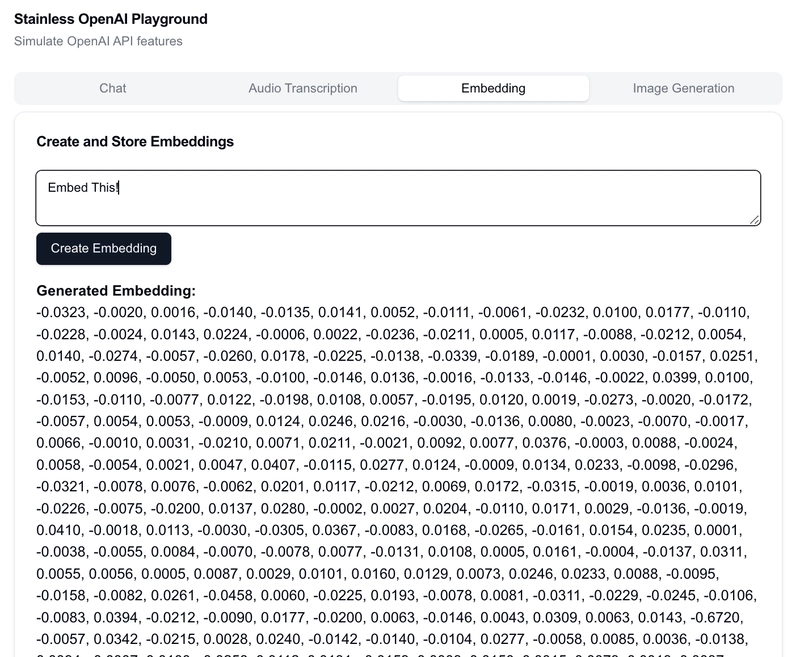

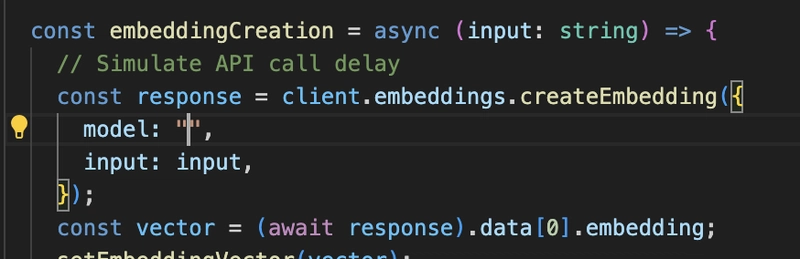

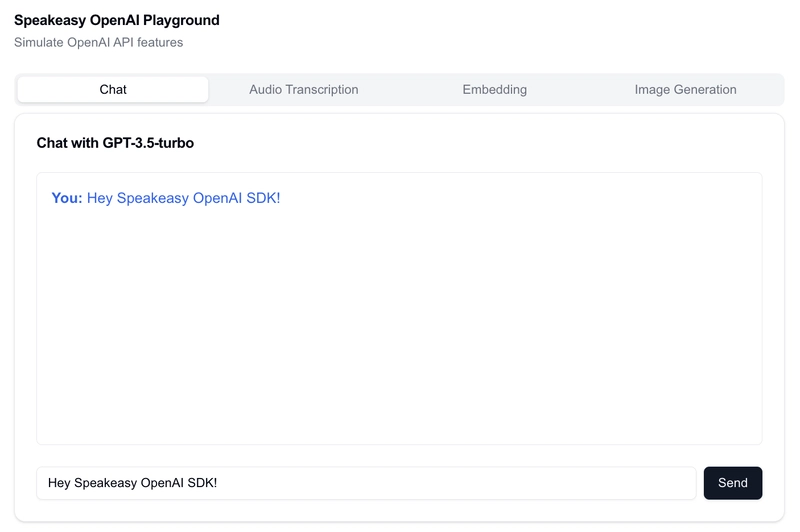

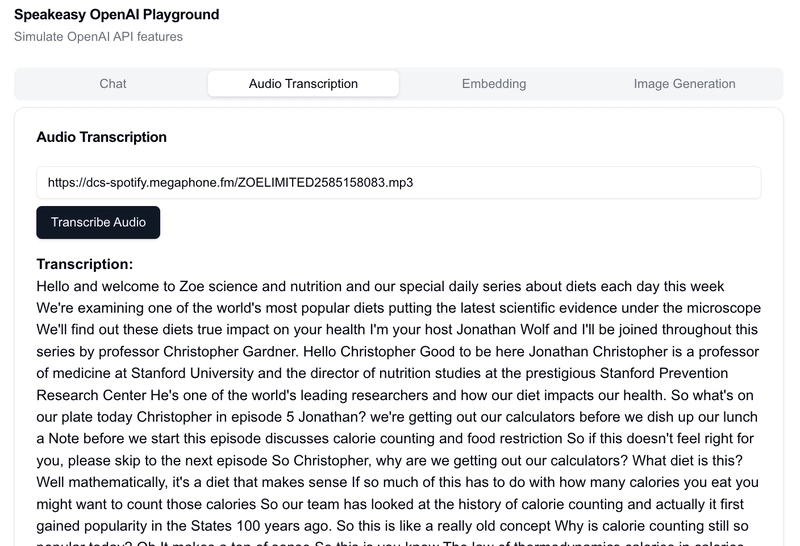

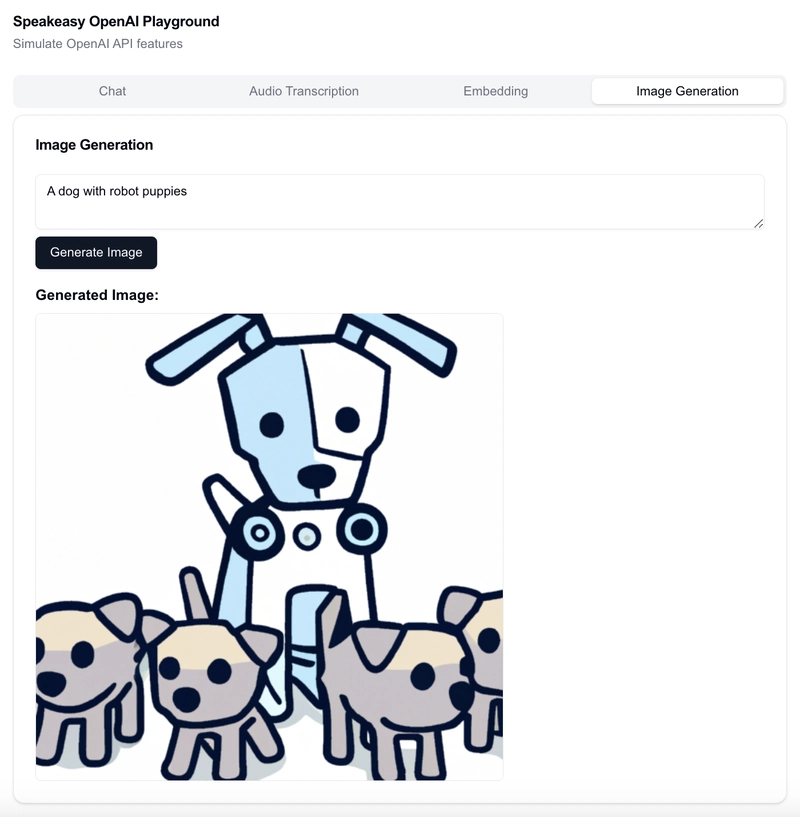

Let’s build an AI app with our SDKs. We’ll build an app with:

- A chatbot

- A transcription service

- An embeddings generator

- An image generator

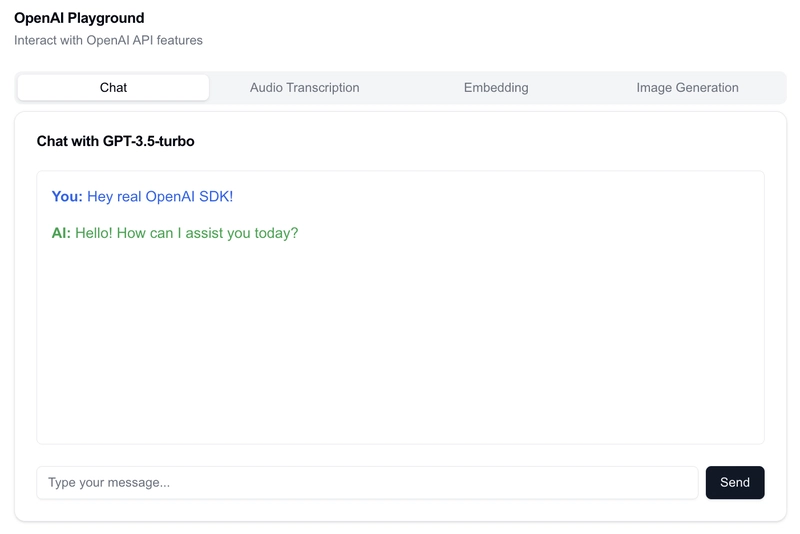

First, we’ll build this with the actual OpenAI Node SDK. This way, we’ll know exactly how it is supposed to work:

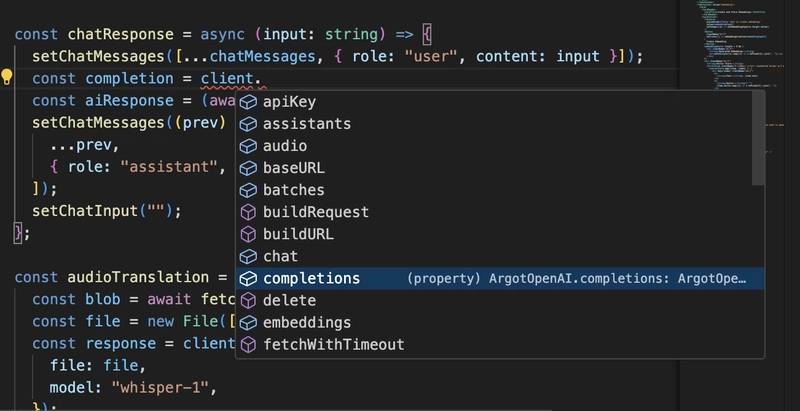

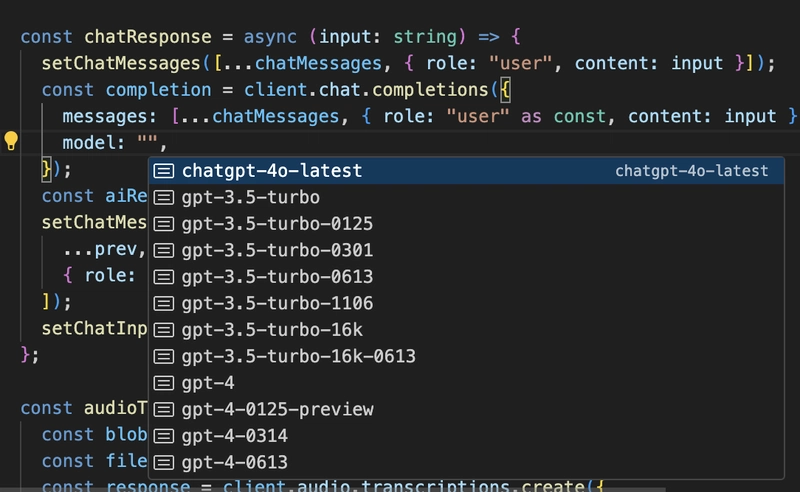

Now, let’s build with the Stainless SDK. We’ll turn off AI in VS Code to use only the SDKs' helpers. The Stainless SDK provides excellent type-ahead support, powered by TypeScript definitions in each package. For example, when you type client, VS Code will immediately display a list of available SDK methods, such as chat, completions, images, etc.

Only methods belonging to the completions resource will be offered as you continue typing (e.g., client.completions.). Finally, when typing something like client.completions.create(, the editor's tooltip shows you all parameters for that method, along with their types (e.g., prompt: string, model: string), helping you understand precisely what data the API expects.

All four methods worked as expected:

The Speakeasy SDK had some issues with type-ahead, showing methods/properties but not enums:

That wasn’t the biggest issue with the SDK, though. For the chat API, we tried the example from the extensive Speakeasy documentation:

import { ArgotOpenAi } from "argot-open-ai";

const argotOpenAi = new ArgotOpenAi({

apiKeyAuth: process.env["ARGOTOPENAI_API_KEY_AUTH"] ?? "",

});

async function run() {

const result = await argotOpenAi.chat.createChatCompletion({

messages: [

{

content: "<value>",

role: "user",

},

{

content: [

{

type: "text",

text: "<value>",

},

{

type: "image_url",

imageUrl: {

url: "https://fixed-circumference.com",

},

},

],

role: "user",

},

{

role: "tool",

content: "<value>",

toolCallId: "<id>",

},

],

model: "gpt-4o",

n: 1,

temperature: 1,

topP: 1,

user: "user-1234",

});

// Handle the result

console.log(result);

}

run();

But this didn’t work. Unfortunately, somewhere, the SDK had been generated differently from what the documentation suggested:

error": {\n' +

' "message": "This is a chat model and not supported in the v1/completions endpoint. Did you mean to use v1/chat/completions?",\n' +

' "type": "invalid_request_error",\n' +

' "param": "model",\n' +

' "code": null\n' +

' }\n' +

We were able to fix this issue, but then a second issue surfaced:

{

"error": {

"message": "Unsupported parameter: 'parallel_tool_calls' is not supported with this model.",

"type": "invalid_request_error",

"param": "parallel_tool_calls",

"code": "unsupported_parameter"

}

}

This wasn’t as easy to overcome. We tried removing parallel_tool_calls directly from the OpenAPI spec, but the Speakeasy SDK then threw an SDKValidationError during use. This made the chat functionality unusable.

The other methods, though, worked as advertised:

What other DevEx considerations are there with these SDKs?

Strong Type Safety

Both SDKs use a robust type system to provide safety at compile time by describing the shape and structure of the data being processed. They also generate all types from an OpenAPI schema, ensuring API consistency and reducing the need to inspect types manually.

The SDKs will also catch various errors before the code is even run. If incorrect types are given to functions or classes, or if any parameters are missing, your editor will notify you of an error. This includes properties explicitly named as optional and fields where union types are enforced (such as API responses). This level of compile-time safety also helps make the code much easier to maintain over time, as changes to these types will throw errors on the parts of your application that now have mismatches to their data inputs.

For instance, in the Stainless SDK, if you try to pass a number where a string is expected, you’ll immediately get a TypeScript error. This contrasts strongly with plain JavaScript, where the developer must wait until runtime to understand where an incorrect data type might be.

Similarly, when using the Speakeasy SDK, providing incorrect type information will fail at compile-time, and the SDK will also catch issues at runtime by inspecting the error object returned from the API. This way, SDK users are exposed to strongly typed error objects from the API.

Error Handling

Both SDKs provide a mechanism for handling errors returned from the API but use different techniques.

Stainless uses specific, named exception classes, which are all subclasses of a base APIError. These error classes are type-safe and make matching on errors much more ergonomic. Instead of treating every caught error as a generic error that could have been thrown from anywhere, developers can check it inside a try/catch block against a particular error type, such as AuthenticationError or BadRequestError. This makes it possible to handle errors at a fine-grained level with type-safe exception handling.

Speakeasy also provides type safety around errors, but instead of using exception throwing, it encapsulates errors and successful values in an explicit Result<Value, Error> type. The developer must inspect the result type to handle successful and error responses. This approach is commonly used in functional programming. It is handy for browser environments and for components in state management, which may prefer to return a value rather than throw an exception. By providing an explicit error type, developers can avoid catching generic exceptions and handle specific errors using pattern matching and type-safe switch statements.

Both approaches also support catching HTTP-related errors, such as ConnectionError, RequestAbortedError, and RequestTimeoutError, if an HTTP connection can’t be established or a network timeout has occurred.
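The two styles can be compared side by side with a small sketch. None of the classes or functions below come from either generated SDK: the *Sketch error classes, the Result shape, and parsePositive are hypothetical stand-ins chosen only to show the control flow each style leads to.

```typescript
// Hypothetical error hierarchy in the style described for Stainless.
class APIErrorSketch extends Error {
  readonly status: number;
  constructor(status: number, message: string) {
    super(message);
    this.status = status;
  }
}
class AuthenticationErrorSketch extends APIErrorSketch {}
class BadRequestErrorSketch extends APIErrorSketch {}

// Exception style: catch, then narrow with instanceof.
function describeThrown(fn: () => void): string {
  try {
    fn();
    return 'ok';
  } catch (err) {
    if (err instanceof AuthenticationErrorSketch) return 'check your API key';
    if (err instanceof BadRequestErrorSketch) return `bad request: ${err.message}`;
    throw err; // unknown errors keep propagating
  }
}

// Result style: success and failure are both ordinary return values.
type Result<V, E> = { ok: true; value: V } | { ok: false; error: E };
type ParseError = 'not_a_number' | 'not_positive';

function parsePositive(input: string): Result<number, ParseError> {
  const n = Number(input);
  if (Number.isNaN(n)) return { ok: false, error: 'not_a_number' };
  if (n <= 0) return { ok: false, error: 'not_positive' };
  return { ok: true, value: n };
}

// The compiler checks that every error case is handled in the switch.
function describeResult(result: Result<number, ParseError>): string {
  if (result.ok) return `value ${result.value}`;
  switch (result.error) {
    case 'not_a_number':
      return 'input was not numeric';
    case 'not_positive':
      return 'input must be positive';
  }
}
```

The exception style keeps the happy path uncluttered, while the Result style forces the caller to acknowledge failure before touching the value. Which one reads better tends to depend on the surrounding codebase.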

Retries

Both SDKs are designed to automatically retry requests when a server has a transient issue, for example, by respecting retry-after headers when rate-limiting occurs. This improves the user experience, as applications are more resilient to transient failures and network errors.

Both SDKs use an exponential backoff algorithm to space out retries. When an API request results in a retryable code (429, 500, 502, 503, 504, 408, or 409) or the server sends an x-should-retry header, the SDK will attempt a retry following an increasing delay using a random jitter value to avoid request collision.

By providing the ability to configure the retry algorithm, either through an optional SDK setting or on a per-method basis, developers can tune retry behavior based on the type of application or operating environment.
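For readers who have not implemented it before, exponential backoff with jitter can be sketched in a few lines. The status codes are the ones listed above; the option names, the 25% jitter range, and the function names are assumptions for illustration and are not taken from either SDK.

```typescript
// Status codes listed above as retryable.
const RETRYABLE_STATUS = new Set([408, 409, 429, 500, 502, 503, 504]);

interface BackoffOptions {
  initialDelayMs: number; // hypothetical option names, not taken from either SDK
  maxDelayMs: number;
  maxRetries: number;
}

// `attempt` is zero-based: 0 means the first retry.
function shouldRetry(status: number, attempt: number, options: BackoffOptions): boolean {
  return attempt < options.maxRetries && RETRYABLE_STATUS.has(status);
}

// The delay doubles per attempt, is capped, then reduced by up to 25% random jitter.
function backoffDelayMs(
  attempt: number,
  options: BackoffOptions,
  random: () => number = Math.random,
): number {
  const exponential = options.initialDelayMs * 2 ** attempt;
  const capped = Math.min(exponential, options.maxDelayMs);
  const jitter = 1 - random() * 0.25;
  return capped * jitter;
}

// A Retry-After value (in seconds), when present, takes priority over the computed delay.
function nextDelayMs(
  attempt: number,
  retryAfterSeconds: number | undefined,
  options: BackoffOptions,
  random: () => number = Math.random,
): number {
  if (retryAfterSeconds !== undefined) return retryAfterSeconds * 1000;
  return backoffDelayMs(attempt, options, random);
}
```

Passing the random source as a parameter keeps the delay calculation deterministic under test. The jitter itself matters because it stops many clients that failed at the same moment from retrying at the same moment.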

Custom HTTP Client

Both SDKs allow developers to plug in a custom client responsible for handling requests. The Stainless SDK specifies this mechanism particularly well, using an HTTPClient object with lifecycle hooks. This architecture is useful when adding custom request or response interceptors.

Examples include adding custom headers for observability, post-processing responses to return a value that fits your requirements, or adding custom request timeouts. Both SDKs can also have a custom fetch function or other mechanism injected, though the Speakeasy SDK lacks the concept of lifecycle hooks on an injected client.
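The idea of injecting a custom fetch can be shown without tying it to either SDK's actual client options, which are not reproduced here. In this sketch, FetchLike and RequestInitSketch are deliberately minimal, hypothetical types, and withHeader is an invented wrapper that adds one header to every outgoing request.

```typescript
// Minimal, hypothetical fetch-like signature so the sketch does not depend on
// DOM or Node typings.
interface RequestInitSketch {
  headers?: Record<string, string>;
}
type FetchLike<R> = (url: string, init?: RequestInitSketch) => Promise<R>;

// Wraps any fetch-like function and adds a header to every outgoing request,
// preserving whatever headers the caller already set.
function withHeader<R>(
  inner: FetchLike<R>,
  headerName: string,
  headerValue: string,
): FetchLike<R> {
  return (url, init) =>
    inner(url, {
      ...init,
      headers: { ...(init?.headers ?? {}), [headerName]: headerValue },
    });
}
```

A wrapper like this would be passed wherever the SDK accepts a custom fetch implementation. Lifecycle hooks cover the same ground but let you register several independent interceptors instead of composing wrappers by hand.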

Modularity and Tree Shaking

How both SDKs are organized highlights a trade-off between SDK discoverability and bundle size.

In Stainless, resources are grouped (for example, /src/resources/chat.ts, /src/resources/completions.ts, etc.). This makes the files self-contained and improves discoverability of related types and API methods in the editor. However, bundlers cannot remove code on a per-function basis.

In Speakeasy, functions are created as standalone exports (for example, /src/funcs/chatCreateChatCompletion.ts, /src/funcs/completionsCreateCompletion.ts, etc.), which enables a much greater degree of tree-shaking. This means that bundlers can remove code if the user does not use the functions or related types and schemas.

Customizing Your SDK

While both Stainless and Speakeasy offer customization options, their approaches reflect their different SDK maintenance and evolution philosophies. Let's look at how you might customize the OpenAI SDK, focusing on Stainless's more structured approach.

Understanding the Stainless Configuration File

The heart of Stainless's customization approach is the stainless.yaml file. This configuration file bridges your OpenAPI specification and the generated SDK, allowing you to control various aspects of the SDK's structure and behavior. Let's break down its key sections using the OpenAI SDK as an example:

Organization Settings

organization:

name: openai-sdk

docs: https://help.openai.com/

contact: dev-feedback@openai-sdk.com

These settings define basic metadata about your SDK. The name field is critical as it influences package naming across different language targets.

Target Languages

targets:

node:

package_name: openai-sdk

production_repo: null

publish:

npm: false

python:

package_name: openai_sdk

production_repo: null

publish:

pypi: false

The targets section specifies which languages you want to generate SDKs for and their configuration. Each target can have its own package name and publishing settings. In the OpenAI example, both Node.js and Python SDKs are configured but not set up for automatic publishing.

Client Settings

client_settings:

opts:

bearer_token:

type: string

description: Bearer token for authentication

nullable: false

read_env: OPENAI_API_KEY

auth:

security_scheme: ApiKeyAuth

role: value

This section defines how the SDK client should be configured. For OpenAI, it specifies that authentication requires a bearer token, which can be read from the OPENAI_API_KEY environment variable.

Environments

environments:

production: https://api.openai.com/v1

The environments section maps different environment names to their base URLs. This allows the SDK to support multiple environments (like staging and production) while maintaining a single configuration file.

Resources

resources:

audio:

methods:

speech: post /audio/speech

subresources:

transcriptions:

methods:

create: post /audio/transcriptions

translations:

methods:

create: post /audio/translations

The resources section is where you define the structure of your SDK's API. It maps OpenAPI endpoints to SDK methods and organizes them into logical groups. The configuration supports:

- Top-level resources (like audio)

- Methods within resources (like speech)

- Subresources (like transcriptions)

- Nested methods within subresources

- Model definitions that map to OpenAPI schemas

This hierarchical structure allows you to create intuitive, well-organized SDKs that match your API's domain model.

Settings and Documentation

settings:

license: Apache-2.0

readme:

example_requests:

default:

type: request

endpoint: post /completions

params: {}

The configuration file also controls SDK metadata, such as licensing and documentation. The readme section allows you to specify which examples should appear in your SDK's documentation.

Configuration-Driven Customization

Stainless emphasizes configuration-driven customization through this file, allowing you to modify SDK behavior without touching the generated code.

Resource Organization

The configuration file also gives you fine-grained control over your SDK's organization of API resources. The OpenAI SDK's current configuration groups related endpoints into logical resources:

resources:

audio:

methods:

speech: post /audio/speech

subresources:

transcriptions:

methods:

create: post /audio/transcriptions

translations:

methods:

create: post /audio/translations

You could reorganize this structure to better suit your needs. For example, if you wanted to flatten the audio endpoints for more straightforward access, you could modify the configuration:

resources:

audio_speech:

methods:

create: post /audio/speech

audio_transcription:

methods:

create: post /audio/transcriptions

audio_translation:

methods:

create: post /audio/translations

This would change how developers access these methods in your SDK, from client.audio.transcriptions.create() to client.audioTranscription.create().

Custom Code Integration

While Stainless encourages configuration-based customization, sometimes you need to add custom code. For example, let's say you wanted to add a helper method to the OpenAI SDK that combines chat completion with embeddings for semantic search. Stainless provides a dedicated location for such additions:

// src/lib/semantic-search.ts

export async function semanticSearch(

client: OpenAIClient,

query: string,

documents: string[]

): Promise<string[]> {

// Generate embeddings for the query

const queryEmbedding = await client.embeddings.create({

model: "text-embedding-3-small",

input: query

});

// Generate embeddings for all documents

const documentEmbeddings = await client.embeddings.create({

model: "text-embedding-3-small",

input: documents

});

// Implement cosine similarity and ranking...

return rankedResults;

}
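The ranking step left as a comment above could be sketched as follows. This is one straightforward way to do it, not code from the SDK: cosineSimilarity and rankBySimilarity are hypothetical helpers that work on plain number arrays, so you would first pull the embedding vectors out of the API responses.

```typescript
// Cosine similarity: dot product divided by the product of the vector lengths.
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) throw new Error('vectors must have the same length');
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0; // avoid dividing by zero
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Returns the documents sorted from most to least similar to the query vector.
// `vectors[i]` is assumed to be the embedding of `documents[i]`.
function rankBySimilarity(query: number[], documents: string[], vectors: number[][]): string[] {
  return documents
    .map((text, i) => ({ text, score: cosineSimilarity(query, vectors[i]) }))
    .sort((x, y) => y.score - x.score)
    .map((entry) => entry.text);
}
```

For a handful of documents a linear scan like this is fine; larger collections usually call for a vector index instead.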

This custom code lives in the lib directory, which Stainless never modifies during regeneration. You can then document its usage in examples:

// examples/semantic-search.ts

import { OpenAIClient } from 'openai-sdk';

import { semanticSearch } from '../lib/semantic-search';

const client = new OpenAIClient({

apiKey: process.env.OPENAI_API_KEY

});

const documents = [

"The quick brown fox jumps over the lazy dog",

"The lazy dog sleeps while the quick brown fox jumps"

];

const results = await semanticSearch(

client,

"What is the fox doing?",

documents

);

Manual Patches

As a last resort, Stainless allows direct modification of the generated code through manual patches. These changes are preserved across regenerations, though they require ongoing maintenance. For example, you might want to add rate limiting to all API calls:

// Custom patch to _request method in src/core.ts

protected async _request<T>(

method: string,

path: string,

params?: Record<string, unknown>,

options?: RequestOptions

): Promise<T> {

// Add rate limiting logic

await this.rateLimiter.acquire();

try {

const result = await super._request<T>(method, path, params, options);

return result;

} finally {

this.rateLimiter.release();

}

}

While this works, it's important to note that such patches create technical debt. Each time the generator updates the core request handling, you must manually resolve any conflicts between your custom code and the generator's changes.
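The patch above assumes a rateLimiter object with acquire and release methods but does not show one. An acquire/release pair behaves more like a concurrency limit (a semaphore) than a requests-per-second limit, so that is what the hypothetical ConcurrencyLimiter below implements; the class and its member names are invented for this sketch.

```typescript
// Hypothetical limiter: allows at most `limit` holders at once and queues the rest.
class ConcurrencyLimiter {
  private active = 0;
  private readonly waiters: Array<() => void> = [];
  private readonly limit: number;

  constructor(limit: number) {
    this.limit = limit;
  }

  acquire(): Promise<void> {
    if (this.active < this.limit) {
      this.active += 1;
      return Promise.resolve();
    }
    // Over the limit: park the caller until release() hands over a slot.
    return new Promise<void>((resolve) => {
      this.waiters.push(resolve);
    });
  }

  release(): void {
    const next = this.waiters.shift();
    if (next) {
      next(); // the slot passes straight to the next waiter, so `active` is unchanged
    } else {
      this.active -= 1;
    }
  }

  get inFlight(): number {
    return this.active;
  }

  get queued(): number {
    return this.waiters.length;
  }
}
```

Keeping the limiter in its own file, outside the generated code, means the patch itself stays down to the two calls shown earlier, which reduces the surface area for merge conflicts when the generator updates core request handling.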

Customization Best Practices

The examples above illustrate a recommended approach to SDK customization:

- Start with Configuration: Use the stainless.yaml file for structural changes whenever possible. This provides the most maintainable way to customize your SDK.

- Use the Lib Directory: Prefer the lib directory over direct patches when adding new functionality. This keeps custom code separate from generated code while still allowing tight integration.

- Document with Examples: Create example files showing how to use your custom functionality. This helps developers understand your additions without diving into the implementation.

- Patch as Last Resort: Only modify generated code directly when necessary, and document these changes thoroughly to aid future maintenance.

This layered approach to customization allows you to adapt the SDK to your needs while minimizing maintenance overhead. It's particularly valuable for complex APIs like OpenAI's, where you might want to add higher-level abstractions without compromising the underlying SDK's reliability.

Wrapping Up

Choosing between Stainless and Speakeasy depends on your SDK development priorities and workflow preferences. Stainless excels in rapid iteration and developer experience, with its quick generation time, robust testing infrastructure, and extensive customization options through its configuration system. Its hierarchical resource organization and cross-platform architecture make it particularly suitable for teams looking to quickly prototype and evolve their SDKs while maintaining high quality.

Speakeasy's emphasis on validation and documentation provides a different advantage. Its strict OpenAPI validation and comprehensive documentation generation create a solid foundation for SDK development. However, as we discovered with the OpenAI SDK implementation, even thorough validation doesn't guarantee perfect runtime behavior—the chat completion issues highlight that robust validation must be balanced with practical testing.

For teams prioritizing quick iteration cycles, extensive testing capabilities, and granular customization control, Stainless offers a more streamlined path forward. Its configuration-driven approach and support for custom code integration make it particularly well-suited for complex APIs that require ongoing refinement.

Meanwhile, Speakeasy's focus on documentation and validation makes it attractive for teams that need to ensure API specification correctness from the outset and maintain extensive documentation. Its local-first approach also appeals to organizations with strict code ownership requirements.

As with many developer tools, the “best” choice depends on your specific needs—whether you value rapid iteration and customization over upfront validation, or prefer comprehensive documentation over quick deployment. Both generators produce functional SDKs, but their different approaches to generation, validation, and maintenance create distinct development experiences that cater to different team preferences and priorities.
