In your load-balanced cluster of nice, performant application servers, you may occasionally find that there is an outside resource that requires access be synchronised between each server in the cluster (ideally not too often, because it’s a pain, but there you go).

We encountered this recently when dealing with multiple clients trying to access and update a reporting component we interface with via our application. Each client issued HTTP API requests to the application that would:

- Read a resource from the reporting system (by the ID)

- Do something

- Update the resource

The problem is that no two tasks should be allowed to get past step 1 at the same time for a given named resource. This is starting to look pretty familiar, right?

We see this in a single-server multi-threaded model sometimes (although not too often hopefully; I find synchronous locks are generally bad for performance if used too liberally in web requests).

The problem with the above code is that it only locks a resource in the current process; a different HTTP request, routed to a different server by the load balancer, would happily acquire it's own lock.

Establishing a distributed lock

What we need now is to lock the resource across our entire cluster, not just on the one server.

We use MongoDB for general shared state between servers in our cluster, so I looked into how to use MongoDB to also synchronise access to our resource between the application servers.

Luckily, it turns out that by using existing MongoDB functionality, this is pretty straightforward to create a short-lived resource lock.

Note

I've written this solution in C#, using the official MongoDB C# client,

but there's no reason this wouldn't apply to a different MongoDB client

implementation in any language.

Want to jump to the end?

https://github.com/alistairjevans/mongodb-locks

Create the Collection

First up, I want to create a new MongoDB collection to hold my locks. I'll create a model and then get an instance of that collection.

That's pretty basic stuff, you'd have to do that generally to access any MongoDB collection.

Next we're going to add the function used to acquire a lock, AcquireLock. This method is responsible for the 'spin' or retry on the lock, waiting to acquire it.

The AcquireLock method:

- Creates a 'lock id' from the resource id.

- Creates a 'DistributedLock' object, which is where the locking mechanism happens (more on this in a moment).

- Attempts to get the lock in a while loop.

- Waits up to a 10 second timeout to acquire the lock, attempting again every 100ms.

- Returns the DistributedLock once the lock is acquired (but only as an IDisposable).

Next let's look at what is going on inside the DistributedLock class.

DistributedLock

The DistributedLock class is responsible for the actual MongoDB commands, and attempting the lock.

Let's break down what happens here. The AttemptGetLock method issues a FindOneAndUpdate MongoDB command that does the following:

- Looks for a record with an ID the same as the provided lock ID.

- If it finds it, it returns it without doing anything (because our update is only a SetOnInsert, not a Set).

- If it doesn't find it, it creates a new document (because IsUpsert is true), with the expected ID.

We've set the ReturnDocument option to 'Before', because that means the result of the FindOneAndUpdateAsync is null if there was no existing lock document. If there was no existing document, there will be one now, and we have acquired the lock!

When you dispose of the lock, we just delete the lock document from the collection, and hey presto, the lock has been released, and the next thread to try to get the lock will do so.

Using It

Because the AcquireLock method returns an IDisposable (via a Task), you can just use a 'using' statement in a similar manner to how you would use the 'lock' statement.

Do you see that await inside the using definition? Right, do not forget to use it. If you do forget, all hell will break loose, because Task also implements IDisposable! So you'll end up instantly entering the using block, and then disposing of the task afterwards at some point while the lock attempts are happening. This causes many bad things.

Make sense? Good. Unfortunately, we're not quite done...

Handing Concurrent Lock Attempts

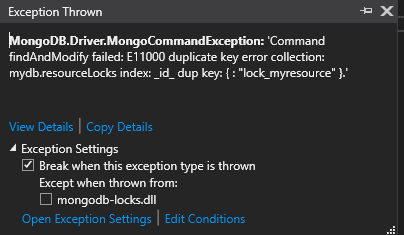

So, while the above version of DistributedLock works pretty well most of the time, at some point it will inevitably throw an exception when handling multiple concurrent lock attempts:

Why does this happen? Well, the upsert functionality in findAndModify is not technically atomic in the way you might expect; the find and the insert are different operations internally to MongoDB, so two lock attempts might both attempt to insert a record.

When that happens, the default ID index on the MongoDB collection will throw an E11000 duplicate key error.

This is actually OK; one of the threads that attempted to acquire a lock will get it (the first one to complete the insert), and the second one will get an exception, so we just need to amend our code to say that the thread with the exception failed to get the lock.

Handling a Crash

The last problem we have to solve is what happens if one of the application servers crashes part-way through a piece of work?

If a thread has a lock, but the server crashes or is otherwise disconnected from MongoDB, it can't release the resource, meaning no-one else will ever be able to take a lock on the resource.

We need to put in some safeguard against this that allows the lock to eventually be released even if the application isn't able to do it correctly.

To do this, we can use one of my favourite MongoDB features, TTL Indexes, which allows MongoDB to 'age out' records automatically, based on a column that contains an 'expiry' time.

Let's update the original LockModel with an expiry property, and add a TTL index to our collection.

In the above index creation instruction, I'm specifying an ExpireAfter of TimeSpan.Zero, meaning that as soon as the DateTime specified in ExpireAt of the lock document passes, the document will be deleted.

Finally, we'll update the MongoDB FindOneAndUpdate instruction in DistributedLock to set the ExpireAt property to the current time plus 1 minute.

Now, if a lock isn't released after 1 minute, it will automatically be cleaned up by MongoDB, and another thread will be able to acquire the lock.

Notes on TTL Indexes and Timeouts

The TTL index is not a fast way of releasing these locks; the accuracy on it is low, because by default the MongoDB thread that checks for expired documents only runs once every 60 seconds.

We're using it as a safety net for an edge case, rather than a predictable mechanism. If you need a faster recovery than that 60 second window, then you may need to look for an alternative solution.You may notice that I'm using DateTime.UtcNow for the ExpireAt value, rather than DateTime.Now; this is because I have had a variety of problems storing C# DateTimes with a timezone in MongoDB in a reliable way, so I tend to prefer storing UTC values whenever possible (especially when it's not a user-entered value).

Sample Project

I've created a github repo with an ASP.NET Core project at https://github.com/alistairjevans/mongodb-locks that has a complete implementation of the above, with an example API controller that outputs timing information for the lock acquisition.

This article was originally posted on my blog.

Top comments (0)