Kubernetes For Beginners: 4

Application Lifecycle Management (ALM) — Part 1

Hello Everyone,

Let’s continue the “Kubernetes For Beginners” series.

This is the fourth article in the Kubernetes series, and it covers concepts related to Application Lifecycle Management (ALM) in Kubernetes. This is Part 1 of ALM.

In this blog, we will explore Application Lifecycle Management (ALM) in Kubernetes and understand its various components. We will also walk through examples on AWS Elastic Kubernetes Service (EKS), along with real-world examples, to make the concepts easier to follow.

Rolling Updates & Rollbacks -

Imagine you have an application running on Kubernetes, and you want to update it to a new version.

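Kubernetes Deployments support rolling updates, which let you update the application without downtime, provided it runs multiple replicas and new Pods only receive traffic once they are ready.

A Deployment does this by incrementally replacing Pods running the old version with Pods running the new version, a few at a time (as controlled by its `maxSurge` and `maxUnavailable` settings), while the remaining Pods continue to serve traffic.

If issues arise during the update, you can roll back to the previous revision with `kubectl rollout undo`, because the Deployment keeps a history of its earlier revisions.

This ensures a smooth transition between different versions of your application.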
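As a minimal sketch, the rolling update behavior is configured through the Deployment's `strategy` field. The name `web-app`, the image, and the `/healthz` endpoint are hypothetical placeholders. With `maxSurge: 1` and `maxUnavailable: 0`, Kubernetes starts one new Pod at a time and removes an old Pod only after a new one passes its readiness probe.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-app                  # hypothetical name
spec:
  replicas: 3
  revisionHistoryLimit: 5        # old revisions kept for rollbacks (default is 10)
  selector:
    matchLabels:
      app: web-app
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1                # at most 1 Pod above the desired replica count
      maxUnavailable: 0          # never drop below 3 available Pods during the update
  template:
    metadata:
      labels:
        app: web-app
    spec:
      containers:
        - name: web-app
          image: example.com/web-app:1.0   # hypothetical image
          ports:
            - containerPort: 8080
          readinessProbe:                  # a new Pod gets traffic only once this succeeds
            httpGet:
              path: /healthz               # hypothetical health endpoint
              port: 8080
```

Because `maxSurge: 1` allows one Pod above the desired count, this Deployment briefly runs four Pods during an update. That is the temporary resource overhead listed under the cons.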

Pros:

Seamless Updates: Rolling updates allow applications to be updated without downtime, ensuring continuous availability for users.

Quick Rollbacks: In case an issue arises during the update, Kubernetes facilitates easy rollbacks to the previous version, minimizing the impact on users.

Cons:

Complexity: Managing rolling updates requires careful planning and coordination to ensure smooth transitions and avoid any potential disruptions.

Resource Utilization: During the update, the previous and new versions of the application run simultaneously, which can temporarily increase resource consumption by up to `maxSurge` extra Pods.

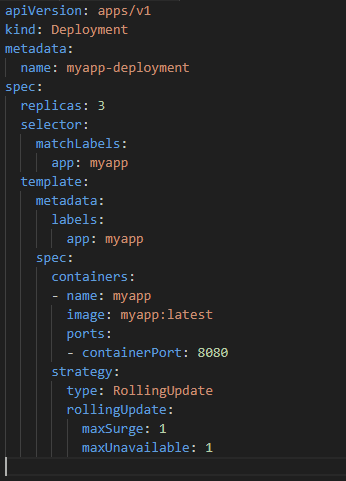

AWS EKS Example:

We have a web application deployed on AWS EKS.

To perform a rolling update, we can use the `kubectl set image` command to update the container image used by our Deployment.

Kubernetes then manages the rollout and keeps the application available throughout the process. `kubectl rollout status` shows its progress.

Alternatively, we can change the image tag in the Deployment manifest and apply it with `kubectl apply -f app-deployment.yaml`. In both cases EKS runs the standard Kubernetes rolling update.
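Here is a possible `app-deployment.yaml` for this example, as a sketch. The Deployment name, container name, ECR account ID, and region are hypothetical. Re-applying the file after changing only the image tag starts a rolling update. The same change could be made imperatively with `kubectl set image deployment/web-app web-app=<image>:1.1`. The `kubernetes.io/change-cause` annotation records why each revision was created.

```yaml
# app-deployment.yaml (hypothetical names, account ID and region)
# Apply with:        kubectl apply -f app-deployment.yaml
# Watch the rollout: kubectl rollout status deployment/web-app
# Roll back:         kubectl rollout undo deployment/web-app
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-app
  annotations:
    # Shown in the CHANGE-CAUSE column of `kubectl rollout history deployment/web-app`
    kubernetes.io/change-cause: "Update web-app image to 1.1"
spec:
  replicas: 3
  selector:
    matchLabels:
      app: web-app
  # No strategy block: Deployments default to RollingUpdate
  # with maxSurge 25% and maxUnavailable 25%.
  template:
    metadata:
      labels:
        app: web-app
    spec:
      containers:
        - name: web-app
          # Changing only this tag (1.0 -> 1.1) and re-applying triggers a rolling update.
          image: 123456789012.dkr.ecr.us-east-1.amazonaws.com/web-app:1.1
```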

Real-world example: Netflix

Netflix, a popular streaming service, uses Kubernetes for managing their vast infrastructure.

They leverage rolling updates to ensure seamless updates of their microservices without disrupting the user experience.

Commands, Arguments & Environment Variables -

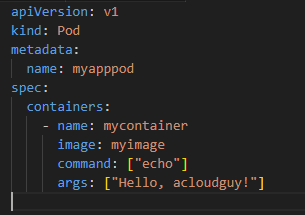

When running containers in Kubernetes, we often need to specify the command and arguments to be executed inside the container.

The command is the container's primary executable (in a Pod spec, `command` overrides the image's ENTRYPOINT), while arguments provide additional parameters (`args` overrides the image's CMD).

By configuring these, we can control how the container behaves and what actions it performs.
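Here is a minimal Pod sketch that follows the pattern used in the Kubernetes documentation. The Pod name is hypothetical. Because `command` replaces the image's ENTRYPOINT and `args` replaces its CMD, the container runs `printenv HOSTNAME KUBERNETES_PORT`, prints those variables, and exits.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: command-demo             # hypothetical name
spec:
  restartPolicy: Never           # run once, do not restart after exit
  containers:
    - name: demo
      image: debian:bookworm-slim
      command: ["printenv"]                      # overrides the image's ENTRYPOINT
      args: ["HOSTNAME", "KUBERNETES_PORT"]      # overrides the image's CMD
```

After the Pod completes, `kubectl logs command-demo` shows the printed values.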

Environment variables are dynamic values passed to containers at runtime. They allow us to configure our application without modifying the container image.

Environment variables are useful for providing configuration settings and, when populated from Kubernetes Secrets, sensitive information such as passwords or API keys.
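This sketch shows how to set environment variables in a Pod spec. The Pod name and values are hypothetical. `APP_ENV` and `LOG_LEVEL` are literal values. `POD_NAME` is filled in from the Pod's own metadata through the Downward API. The container runs a shell so that `$APP_ENV` and the other variables are expanded at runtime.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: env-demo                 # hypothetical name
spec:
  restartPolicy: Never
  containers:
    - name: app
      image: debian:bookworm-slim
      command: ["sh", "-c"]
      args:
        # the shell expands $APP_ENV, $LOG_LEVEL and $POD_NAME at runtime
        - echo "env=$APP_ENV level=$LOG_LEVEL pod=$POD_NAME"
      env:
        - name: APP_ENV          # plain literal value
          value: "staging"
        - name: LOG_LEVEL
          value: "debug"
        - name: POD_NAME         # injected from Pod metadata (Downward API)
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
```

`kubectl logs env-demo` should print `env=staging level=debug pod=env-demo`.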

Pros:

Flexibility: By specifying commands and arguments, you have granular control over the behavior and actions of your containers, allowing you to tailor them to your application’s specific requirements.

Reusability: Containers with well-defined commands and arguments can be easily reused across different environments, simplifying deployment and maintenance.

Cons:

Dependency Awareness: Defining commands and arguments requires a good understanding of the dependencies and interrelationships between containers within the application.

Potential Data Leakage: Improper handling of environment variables can lead to accidental exposure of sensitive information, making it crucial to handle them securely.

AWS EKS Example:

Our application needs a database connection string.

Instead of hardcoding it in the code or configuration files, we can store it as an environment variable.

Kubernetes lets us define environment variables in the Pod specification and populate sensitive ones from Secrets. On AWS EKS, those Secrets can also be encrypted at rest using envelope encryption with an AWS KMS key.
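This sketch uses hypothetical names throughout (`db-credentials`, `orders-api`, the connection string and the image). The connection string lives in a Secret and reaches the container as the `DATABASE_URL` environment variable through `secretKeyRef`. That way it never appears in the image or in the Pod definition.

```yaml
# Hypothetical Secret holding the database connection string.
# In practice, create it with kubectl or a secrets-management tool
# rather than committing the value to Git.
apiVersion: v1
kind: Secret
metadata:
  name: db-credentials
type: Opaque
stringData:                      # written as plain text here, stored base64-encoded
  DATABASE_URL: "postgres://app_user:change-me@orders-db.example.internal:5432/orders"
---
apiVersion: v1
kind: Pod
metadata:
  name: orders-api
spec:
  containers:
    - name: orders-api
      image: example.com/orders-api:1.0   # hypothetical image
      env:
        - name: DATABASE_URL     # read by the application at startup
          valueFrom:
            secretKeyRef:
              name: db-credentials
              key: DATABASE_URL
```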

Real-world example: Twitter

Twitter, the social media platform, utilizes Kubernetes for managing its infrastructure.

They make extensive use of environment variables to configure their microservices and handle sensitive information securely.

Twitter sets environment variables to store API keys, database connection strings, and other configuration values.

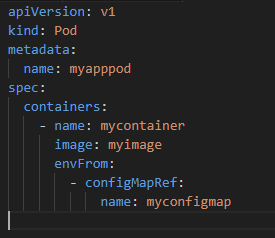

ConfigMaps -

ConfigMaps are a Kubernetes resource that allows us to decouple configuration from the application code.

They provide a way to store key-value pairs or configuration files and make them available to containers as environment variables or mounted volumes.

ConfigMaps simplify the management of application configuration and promote portability.

A ConfigMap is not designed to hold large chunks of data. The data stored in a ConfigMap cannot exceed 1 MiB.
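This is a minimal sketch of a ConfigMap and a Pod that consumes it in both ways. Every name and value is hypothetical. `LOG_LEVEL` is exposed as a single environment variable. Mounting the whole ConfigMap as a volume turns each key into a file under `/etc/app`.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-config               # hypothetical name
data:
  # Simple key-value pairs
  LOG_LEVEL: "info"
  FEATURE_NEW_CHECKOUT: "true"
  # A whole configuration file stored under one key
  app.properties: |
    cache.ttl.seconds=300
    retry.max=3
---
apiVersion: v1
kind: Pod
metadata:
  name: configmap-demo
spec:
  containers:
    - name: app
      image: example.com/web-app:1.0   # hypothetical image
      env:
        - name: LOG_LEVEL              # one key exposed as an environment variable
          valueFrom:
            configMapKeyRef:
              name: app-config
              key: LOG_LEVEL
      volumeMounts:
        - name: config-volume
          mountPath: /etc/app          # each key becomes a file, e.g. /etc/app/app.properties
          readOnly: true
  volumes:
    - name: config-volume
      configMap:
        name: app-config
```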

Pros:

Decoupling Configuration: ConfigMaps separate configuration from the application code, allowing for more flexibility and portability, as the same container image can be used with different configuration values.

Easy Updates: Modifying the configuration in a ConfigMap does not require rebuilding the container image, enabling quick updates and reducing deployment overhead.

Cons:

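Synchronization Challenges: Pods that read a ConfigMap through environment variables do not see changes until they are restarted. ConfigMaps mounted as volumes are updated only after a delay, and not at all when mounted with `subPath`. If configuration changes frequently or many Pods rely on the same ConfigMap, keeping the application consistent can be challenging.

Limited Scalability: A ConfigMap is capped at 1 MiB, so it is not suited to large or complex configurations. For bigger data, a mounted volume or a separate database or file service is a better fit.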

AWS EKS Example:

Assume we have a microservice-based application running on AWS EKS, and we want to configure certain properties of our application using ConfigMaps.

Our application requires the database connection string and an external API URL as configuration values.

We can separate the configuration values from our application code, making it easier to manage and update configurations without modifying the application itself.
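This sketch uses hypothetical names, hostnames and URLs. It does not store a single connection string. It stores only the non-secret parts of the database connection (host, port, database name) in the ConfigMap, on the assumption that a connection string embedding a password belongs in a Secret. `envFrom` exposes every key as an environment variable.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: orders-config            # hypothetical name
data:
  # Non-secret connection details; any password stays in a Secret
  DB_HOST: "orders-db.example.internal"
  DB_PORT: "5432"
  DB_NAME: "orders"
  EXTERNAL_API_URL: "https://api.example.com/v1"
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: orders-service
spec:
  replicas: 2
  selector:
    matchLabels:
      app: orders-service
  template:
    metadata:
      labels:
        app: orders-service
    spec:
      containers:
        - name: orders-service
          image: example.com/orders-service:1.0   # hypothetical image
          envFrom:
            # every key in the ConfigMap becomes an env var with the same name
            - configMapRef:
                name: orders-config
```

Environment variables are read when a container starts. After editing the ConfigMap, the Pods must be restarted to pick up the new values, for example with `kubectl rollout restart deployment/orders-service`.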

Real-world example: Spotify

Spotify, the popular music streaming platform, runs on Kubernetes and employs ConfigMaps to manage configuration settings for their services.

They store configuration values, such as feature flags, service URLs, and timeout settings, in ConfigMaps.

By separating configuration from the application code, Spotify achieves more flexibility and easier management of their services across different environments and deployment scenarios.

-------------------------------------*******----------------------------------------

I am Kunal Shah, AWS Certified Solutions Architect, helping clients to achieve optimal solutions on the Cloud. Cloud Enabler by choice, DevOps Practitioner with 7+ years of overall experience in the IT industry.

I love to talk about Cloud Technology, DevOps, Digital Transformation, Analytics, Infrastructure, Dev Tools, Operational efficiency, Serverless, Cost Optimization, Cloud Networking & Security.

#aws #community #builders #devops #kubernetes #application #management #lifecycle #nodes #pods #deployments #eks #infrastructure #webapplication #acloudguy

You can reach out to me @ acloudguy.in
