A batch job is a scheduled block of code that process messages without user interaction. Typically a batch job will split a message into individual records, performs some actions on each record, and pushes the processed the output to other downstream systems.

Batch processing are useful when working with scenarios such as

Synchronising data between different systems with a “near real-time” data integration.

For ETL into a target system, such as uploading data from a flat file (CSV) to a BigData system.

For regular file backup and processing

In this article we are going to build a batch file processing following a serverless architecture using Kumologica.

Kumologica is a free low-code development tool to build serverless integrations. You can learn more about Kumologica in this medium article or subscribe to our YouTube channel for the latest videos.

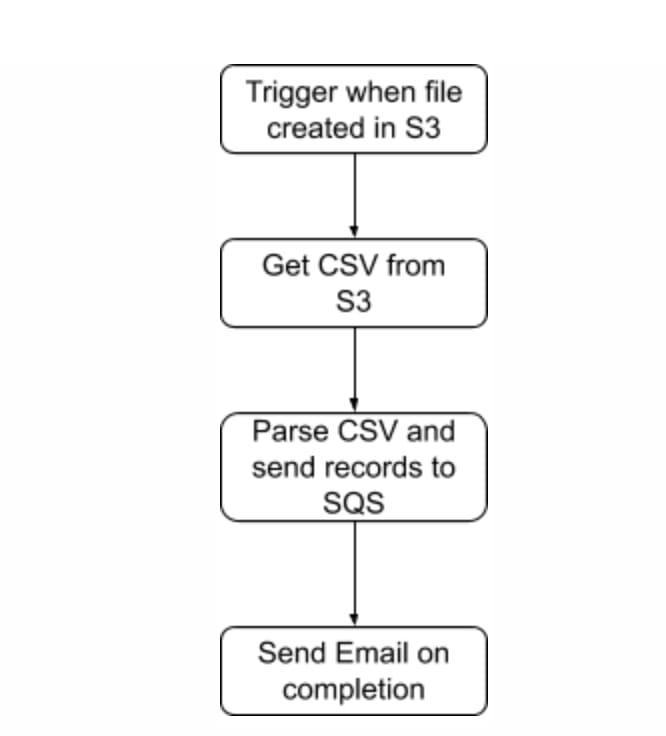

Use case

We are going to implement a batch process flow that will pick up a CSV file as when the file is created in the S3 folder.. The file will be parsed and converted to a specific JSON structure before being copied to a SQS queue. The flow will finish by sending an email reporting on the completion of the job.

Prerequisite

Kumologica designer installed in your machine. https://kumologica.com/download.html

Create an AWS S3 bucket with the name — kumocsvstore. Also download the following sample csv file used in this use case to store in your AWS S3 bucket.

Create an Amazon SQS queue with the name — kumocsvqueue.

Create an AWS SES entry with a verified email id

Implementation

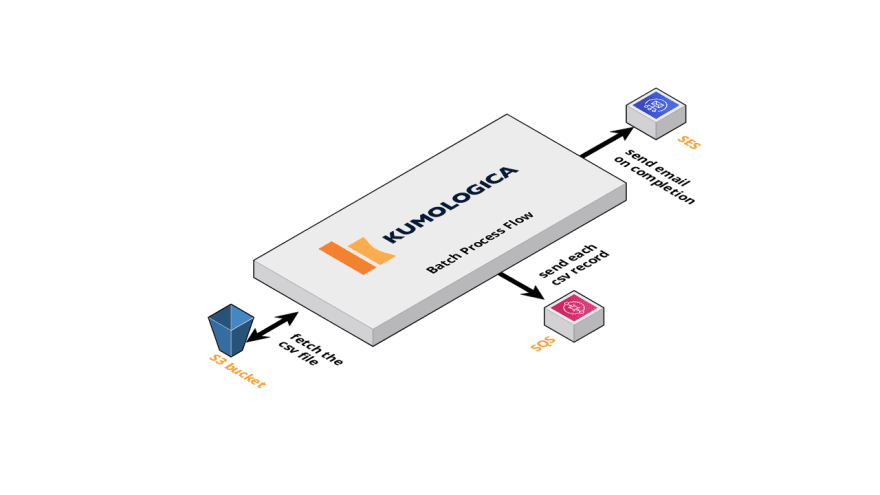

The diagram below shows the different systems that our flow will be responsible to orchestrate. Given that most of our dependencies are in AWS, we are going to target AWS Lambda as our deployment target to run our flow.

Steps:

Open Kumologica Designer, click the Home button and choose Create New Kumologica Project.

Enter name (for example BatchProcessFlow), select directory for project and switch Source into From Existing Flow …

Copy and Paste the following flow

press Create Button.

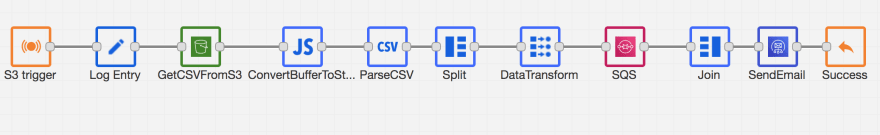

You should be seeing flow as given below on the designer canvas.

Understanding the flow

S3 trigger is the EventListener node is configured to have the EventSource as “Amazon S3”. This is to have the Kumologica flow to accept Amzon S3 trigger events when file created in S3 folder.

Log Entry is the logger node to print the entry of this flow.

GetCSVFromS3 is the AWS S3 node to get the CSV file content from S3 bucket.

ConvertBufferToString is the function node to convert Buffer object to String in UTF-8. As the AWS S3 node returns a buffer object we need to make it as UTF-8 string before giving to CSV node to parse.

ParseCSVis the CSV node to parser CSV string to javascript object.

Split will split the parsed csv object to individual record objects which are passed to a Datamapper node.

The Datamapper node maps the object to a JSON structure expected to be published on to Amazon SQS queue.

SendToSQS node published the JSON message to SQS queue.

Join node ensure to close the iteration done by the split node.

SendEmail node is the Amazon SES node for sending the completion email.

Event listener End node to stop the flow.

Deployment

Select CLOUD tab on the right panel of Kumologica designer, select your AWS Profile.

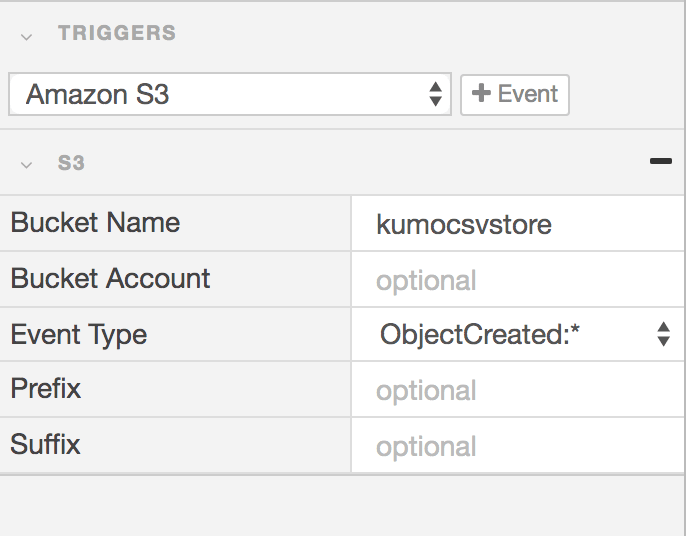

Go to “Trigger” section under cloud tab and select the S3 bucket where CSV file is expected.

- Press Deploy button.

Conclusion

This article presented how easy Kumologica Designer flow orchestrates different AWS service to create an Serverless Batch Process which run on demand when the file is created.

Remember Kumologica is totally free to download and use. Go ahead and give it a try, we would love to hear your feedback.

Top comments (0)