Okay, so I’ve never been to Michigan. I don’t know much about Michigan besides the fact that it’s pretty much a pennisula and it looks pretty big. Searching for a featured image for this post though landed me upon the pictures of Munising and that place is GORGEOUS. Good job, Michigan.

Today, I’m going to show what I did to scrape the Michigan Secretary of State. It’s another entry in the Secretary of State scraping series.

Investigation

A quick check of Michigan’s secretary of state page shows pretty standard searches. It doesn’t have the extremely helpful date range but it does allow you to search with “contains” for the entity name and it doesn’t limit the results.

I try to look for the most recently registered businesses. They are the businesses that very likely are trying to get setup with new services and products and probably don’t have existing relationships. I think typically these are going to be the more valuable leads.

If the state doesn’t offer a date range with which to search, I’ve discovered a trick that works pretty okay. I just search for “2020”. 2020 is kind of a catchy number and because we are currently in that year people tend to start businesses that have that name in it.

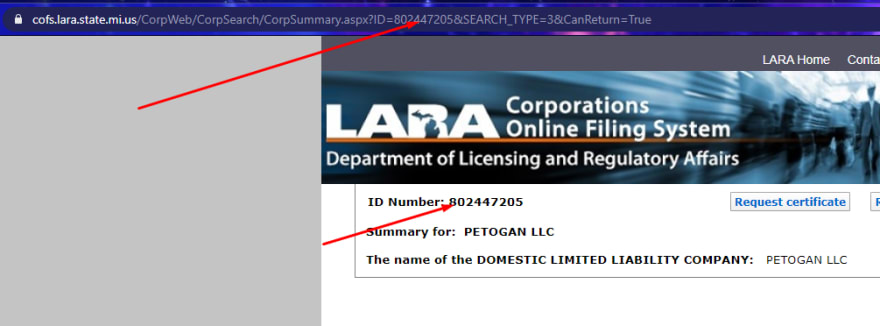

Once I find one of these that is registered recently, I look for a business id somewhere. It’s typically a query parameter in the url or form data in the POST request. Either way, if I can increment that id by one number and still get a company that is recently registered, I know I can find recently registered business simply by increasing the id with which I search.

Michigan is no exception to this and the above worked like a charm. If I’m able to find two businesses with consecutive business ids within the same filing date range, then I’m in business (see what I did there?).

Oddity

One odd thing about Michigan was that while generally bigger id numbers meant more recently registered, it wasn’t the rule. There were still higher numbers that sometimes were a registered a few days before the previous number.

There were also packs of numbers that were missing. For example, I started at 802447095 and kept going until I hit 20 numbers in a row that didn’t have a registered business. I ended at 802447466 and only had 109 businesses registered. That means I went through about 370 numbers and only found businesses at less than a third of them.

I’ve seen things kind of similar to this in other states but never this many numbers missing. I really don’t have any guesses as to why this would happen. With other states I’ve assumed that a business began to register but then never ended up finishing. So the id got reserved but never was actualized. But these Michigan ids are up to 800 million. No way that many businesses have attempted to register but never finished.

The code

The code was very simple for this. The basic scraping and css selecting cut and dry. Here’s my function for getting the business details:

async function getDetails(id: number) {

const url = `https://cofs.lara.state.mi.us/CorpWeb/CorpSearch/CorpSummary.aspx?ID=${id}&SEARCH_TYPE=3&CanReturn=True`;

const axiosResponse = await axios.get(url);

const $ = cheerio.load(axiosResponse.data);

const title = $('#MainContent_lblEntityNameHeader').text();

const filingDate = $('#MainContent_lblOrganisationDate').text();

const agentName = $('#MainContent_lblResidentAgentName').text();

const agentStreetAddress = $('#MainContent_lblResidentStreet').text();

const agentCity = $('#MainContent_lblResidentCity').text();

const agentState = $('#MainContent_lblResidentState').text();

const agentZip = $('#MainContent_lblResidentZip').text();

const business = {

title: title,

filingDate: filingDate,

agentName: agentName,

agentStreetAddress: agentStreetAddress,

agentCity: agentCity,

agentState: agentState,

agentZip: agentZip,

sosId: id

};

return business;

}

This is based on the fact that when navigating to the details of a business, you can see that it’s a GET request with the business id in the url. So, I just ended up looping through ids, incrementing them as I went.

With this scrape I built in some checking to automatically discover when we were at the end of the numbers. If I found a number that returned without a title, then I’d increment a notFoundCount by 1 and stop the loop when it hit 20. Every time I found a valid business, however, I’d reset the count back down to 0.

(async () => {

let startingId = 802447095;

const businesses: any[] = [];

let notFoundCount = 0;

while (notFoundCount < 20) {

try {

const business = await getDetails(startingId);

if (business.title) {

businesses.push(business);

notFoundCount = 0;

console.log('business', business);

}

else {

notFoundCount++;

}

startingId++;

console.log('notFoundCount', notFoundCount, 'businesses.length', businesses.length);

}

catch (e) {

notFoundCount++;

console.log('Error making request for', startingId);

}

await timeout(1000);

}

})();

It was a pretty good and simple scrape. I like the smart way of letting the scrape run to make sure we really hit the end. Though…with Michigan, I’m not sure we be totally sure even after 20 in a row without finding a business.

Looking for business leads?

Using the techniques talked about here at javascriptwebscrapingguy.com, we’ve been able to launch a way to access awesome business leads. Learn more at Cobalt Intelligence!

The post Jordan Scrapes Secretary of States: Michigan appeared first on JavaScript Web Scraping Guy.

Top comments (0)