How to avoid being blocked while web scraping is something I think about often. I even wrote a post about doing it with Puppeteer. This time I dug a little deeper into how web servers see requests and how they could identify potential web scrapers based on the request alone.

The scene

I was scraping a website locally without any problems. It was a plain axios call with no custom headers, cookies, or anything else: just a normal GET request. It looked something like this:

```javascript
const axiosResponse = await axios.get(url);
```

And it worked, no problem, on my local machine. Then I pushed it up to my web server on DigitalOcean (which I still love and use regularly) and… it didn't work. The request either timed out or returned an error. I've had this happen before.
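When a request fails like this, it helps to know whether the server never answered or answered with an error status. Here is a minimal sketch, assuming axios's documented error shape (`error.response` when the server replied with a non-2xx status, `error.request` when no reply arrived). The timeout codes `ECONNABORTED`/`ETIMEDOUT` are an assumption based on the axios versions I know of, and `describeAxiosFailure` is a hypothetical helper name:

```javascript
// Hypothetical helper: turn an axios error into a short diagnosis.
// Assumes axios's error shape: `response` when the server replied with a
// non-2xx status, `request` when the request went out but nothing came back.
function describeAxiosFailure(error) {
  if (error.response) {
    // The server answered, but with an error status (e.g. 403 or 503).
    return `HTTP ${error.response.status}`;
  }
  if (error.code === "ECONNABORTED" || error.code === "ETIMEDOUT") {
    // axios gave up waiting (the `timeout` option was exceeded).
    return "timeout";
  }
  if (error.request) {
    // Sent, but no reply: connection reset, refused, dropped, etc.
    return `no response (${error.code || "unknown"})`;
  }
  // The request was never sent, e.g. a bad URL or config.
  return `setup error: ${error.message}`;
}
```

In use, wrap the call, for example `axios.get(url, { timeout: 10000 }).catch((err) => console.log(describeAxiosFailure(err)))`, so a cloud-only failure shows up as either a status code or a silent timeout.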

This is what led me to the investigation in this post: what did the target site see in the request from my web server that differed from the one sent from my local machine?

Investigation with headers

So I set up a web server to look for those differences. It simply returns the headers of each incoming request, so I could compare a request from my local machine with one from my cloud server.
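A minimal sketch of such a header-echo server, assuming plain Node with the built-in `http` module. This is an illustration, not the actual tester endpoint's code; `echoHeadersBody` and the `--serve` flag are hypothetical names:

```javascript
const http = require("http");

// Build the JSON body the echo route returns: just the request headers,
// wrapped in a `headers` key like the output shown in this post.
// Node lowercases incoming header names, e.g. "user-agent".
function echoHeadersBody(req) {
  return JSON.stringify({ headers: req.headers });
}

const server = http.createServer((req, res) => {
  res.writeHead(200, { "Content-Type": "application/json" });
  res.end(echoHeadersBody(req));
});

// Only start listening when run as `node echo-headers.js --serve`
// (hypothetical file name), so the helper can be tested on its own.
if (process.argv.includes("--serve")) {
  const port = Number(process.env.PORT) || 3000;
  server.listen(port, () => console.log(`Echoing headers on port ${port}`));
}
```

Headers such as `x-forwarded-for` and `x-forwarded-proto` in the output come from the proxy in front of the app (nginx, in this setup), not from axios, and `x-forwarded-for` is where the client's IP address ends up.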

Here is what the client code looked like:

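```javascript
const axios = require('axios');

(async () => {
  const url = `https://backend.cobaltintelligence.com/tester`;
  const axiosResponse = await axios.get(url);
  // Log the headers the server saw, plus the HTTP status code.
  console.log('axiosResponse', axiosResponse.data, axiosResponse.status);
})();
```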

And the response from my local machine:

```
axiosResponse {
  headers: {
    connection: 'close',
    host: '<redacted-host-name>',
    'x-forwarded-proto': 'https',
    'x-forwarded-for': '<redacted-ip-address>',
    'x-forwarded-port': '443',
    'x-request-start': '1590340584.518',
    accept: 'application/json, text/plain, */*',
    'user-agent': 'axios/0.19.2'
  }
} 200
```

Here’s the response from my cloud server:

```
axiosResponse {
  headers: {
    connection: 'close',
    host: '<redacted-host-name>',
    'x-forwarded-proto': 'https',
    'x-forwarded-for': '<redacted-ip-address>',
    'x-forwarded-port': '443',
    'x-request-start': '1590340667.867',
    accept: 'application/json, text/plain, */*',
    'user-agent': 'axios/0.19.2'
  }
} 200
```

Apart from the request timestamp and the (redacted) client IP in `x-forwarded-for`, they are identical. The user-agent is clearly a tell that this isn't a normal browser, but that is easy to spoof. Beyond that, there is nothing here that I could use to block a potential web scraper.
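Spoofing the user-agent with axios only takes a `headers` entry in the request config. A minimal sketch; the Chrome string is an example value (copy a current one from a real browser), and `browserLikeConfig` is a hypothetical helper name:

```javascript
// Example Chrome-like User-Agent string, not a recommendation of this exact
// version; copy a current one from a real browser's request headers.
const BROWSER_USER_AGENT =
  "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 " +
  "(KHTML, like Gecko) Chrome/83.0.4103.61 Safari/537.36";

// Hypothetical helper: an axios request config that replaces the default
// "axios/x.y.z" User-Agent with a browser-like one, plus typical browser
// Accept headers. Extra headers override the defaults.
function browserLikeConfig(extraHeaders = {}) {
  return {
    headers: {
      "User-Agent": BROWSER_USER_AGENT,
      Accept: "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
      "Accept-Language": "en-US,en;q=0.9",
      ...extraHeaders,
    },
  };
}
```

Usage: `const axiosResponse = await axios.get(url, browserLikeConfig());`. As the two header dumps show, though, this only changes what the server sees in the headers, which were already the same from both machines.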

Investigation with web server access logs

I knew web servers keep access logs, and I had even looked at them before, but it had been a long while and I remember not fully understanding them. This time I dug into them a little more.

I host the backend application I'm testing with Dokku, which runs nginx in front of it. Viewing the access logs takes a single command (`-t` keeps following the log as new requests arrive):

```shell
dokku nginx:access-logs <app-name> -t
```

Here's a screenshot of some of the tests I ran:

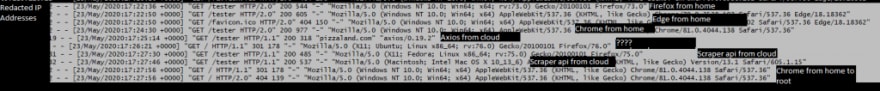

The text in the screenshot is small, so here are the columns, which follow nginx's standard `combined` log format:

```
$remote_addr - $remote_user [$time_local] "$request" $status $body_bytes_sent "$http_referer" "$http_user_agent"
```
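Reading these lines by eye gets tedious quickly. A minimal parser sketch, assuming the log uses exactly the `combined` format above (a custom `log_format` will not match); `parseCombinedLogLine` is a hypothetical name:

```javascript
// Matches one line in nginx's "combined" format:
// $remote_addr - $remote_user [$time_local] "$request" $status
// $body_bytes_sent "$http_referer" "$http_user_agent"
const COMBINED_LOG =
  /^(\S+) - (\S+) \[([^\]]+)\] "([^"]*)" (\d{3}) (\d+|-) "([^"]*)" "([^"]*)"/;

// Parse one access-log line into named fields, or return null if it doesn't match.
function parseCombinedLogLine(line) {
  const m = COMBINED_LOG.exec(line);
  if (!m) return null;
  const [, remoteAddr, remoteUser, timeLocal, request, status, bytes, referer, userAgent] = m;
  // "$request" is normally "METHOD PATH PROTOCOL", e.g. "GET /tester HTTP/1.1".
  const [method = "", path = "", protocol = ""] = request.split(" ");
  return {
    remoteAddr,
    remoteUser,
    timeLocal,
    method,
    path,
    protocol,
    status: Number(status),
    bodyBytesSent: bytes === "-" ? 0 : Number(bytes),
    referer,
    userAgent,
  };
}
```

Feeding the Dokku command's output through this (for example line by line with Node's `readline` module over `process.stdin`) gives objects you can filter by IP address, protocol, or user agent.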

I tried to label where each request came from. I also started trying a proxy service, Scraper Api, to see how its requests would look. The most notable difference is that pretty much every browser request uses HTTP/2.0, whereas my requests, both with and without the proxy, use HTTP/1.1. There was a single instance where I navigated Chrome to a route that didn't exist: the initial request was HTTP/1.1, but the next one was HTTP/2.0. So blocking every HTTP/1.1 request outright is probably a bad idea at this point. It also doesn't explain why I get blocked when calling from the cloud but not from my local machine, since both send HTTP/1.1.
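The same comparison can be done over raw log lines instead of a screenshot. A sketch, assuming the `combined` format above, where the first quoted field is `$request` and the last is `$http_user_agent`; `tallyProtocols` is a hypothetical name:

```javascript
// Count HTTP protocol versions per user agent in nginx "combined" log lines.
// Returns a Map of user agent -> { "HTTP/1.1": n, "HTTP/2.0": m, ... }.
function tallyProtocols(lines) {
  const counts = new Map();
  for (const line of lines) {
    // Quoted fields: "$request", "$http_referer", "$http_user_agent".
    const quoted = line.match(/"[^"]*"/g);
    if (!quoted || quoted.length < 3) continue; // skip lines that don't fit
    const request = quoted[0].slice(1, -1);
    const userAgent = quoted[quoted.length - 1].slice(1, -1);
    // The protocol is the third word of "$request", e.g. "GET / HTTP/2.0".
    const protocol = request.split(" ")[2] || "unknown";
    const perAgent = counts.get(userAgent) || {};
    perAgent[protocol] = (perAgent[protocol] || 0) + 1;
    counts.set(userAgent, perAgent);
  }
  return counts;
}
```

A user agent claiming to be Chrome that only ever shows HTTP/1.1 would stand out, but, as the Chrome 404 case shows, a single HTTP/1.1 line from a real browser proves nothing.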

Beyond that, you can't really see anything different. The user agents are visible, and axios is an easy one to pick out, but again, that can be spoofed incredibly easily. Scraper Api does that automatically, and you can't tell the difference.
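For illustration, this is roughly what a user-agent check on the server side could look like. A sketch only: the pattern list is illustrative, not exhaustive, and `looksLikeLibraryClient` is a hypothetical name:

```javascript
// Hypothetical server-side check: flag user agents that name a common
// HTTP client library. Illustrative list, not exhaustive.
const LIBRARY_AGENTS = /\b(axios|curl|wget|python-requests|node-fetch|go-http-client)\b/i;

function looksLikeLibraryClient(userAgent) {
  // A missing user agent ("-" in nginx logs) often points to a script too.
  if (!userAgent || userAgent === "-") return true;
  return LIBRARY_AGENTS.test(userAgent);
}
```

A spoofed browser user-agent walks straight past a check like this, which is why it can't explain the cloud-only blocking.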

Conclusion

After all of this research, the only difference I can find is the IP address. I started using the proxy service from the cloud server and suddenly everything worked.

The site that was blocking me when I scraped it from the cloud was not a modern or technically sophisticated one, so I thought a list of cloud IP addresses might be readily available. I googled, hard, and couldn't find anything helpful. I even found plenty of articles saying that blocking cloud IP addresses isn't a very effective strategy. So it doesn't quite make sense to me that this is what the target site is doing, but I can't think of any other way it could tell when I'm scraping from the cloud rather than from my local machine.
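If the site is blocking by IP, the server side of it could be as simple as checking the client address (for example the `x-forwarded-for` value from the header dump above) against a list of CIDR ranges. A minimal IPv4-only sketch; the blocklist is hypothetical and uses IETF documentation ranges as stand-ins, not real cloud addresses:

```javascript
// Convert a dotted-quad IPv4 address to an unsigned 32-bit integer.
function ipv4ToInt(ip) {
  const parts = ip.split(".").map(Number);
  if (parts.length !== 4 || parts.some((p) => !Number.isInteger(p) || p < 0 || p > 255)) {
    throw new Error(`Not an IPv4 address: ${ip}`);
  }
  // `>>> 0` turns JavaScript's signed 32-bit bitwise result back into unsigned.
  return ((parts[0] << 24) | (parts[1] << 16) | (parts[2] << 8) | parts[3]) >>> 0;
}

// True if `ip` falls inside the CIDR block, e.g. "203.0.113.0/24".
function inCidr(ip, cidr) {
  const [base, bitsText] = cidr.split("/");
  const bits = Number(bitsText);
  // JS shift counts are taken mod 32, so a /0 mask must be special-cased.
  const mask = bits === 0 ? 0 : (0xffffffff << (32 - bits)) >>> 0;
  return ((ipv4ToInt(ip) & mask) >>> 0) === ((ipv4ToInt(base) & mask) >>> 0);
}

// Hypothetical blocklist: documentation ranges standing in for a hosting
// provider's address ranges.
const BLOCKED_RANGES = ["203.0.113.0/24", "198.51.100.0/24"];

function isBlocked(ip) {
  return BLOCKED_RANGES.some((cidr) => inCidr(ip, cidr));
}
```

Some cloud providers, AWS for example, publish their own address ranges as JSON, so a site owner could assemble such a list without much effort. A proxy sidesteps any check like this because the target only ever sees the proxy's address.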

I am an affiliate for Scraper Api, but it honestly is so easy and just works so well. I used it in this example and had no problems at all. It's a really great product. If you are getting blocked while scraping from the cloud but not from your local machine, I'd recommend trying a proxy service or some other way of getting a different IP address.
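For a generic HTTP proxy, axios accepts a `proxy` object (`host`, `port`, optional `auth`) in the request config. A minimal sketch, assuming hypothetical `PROXY_*` environment variable names and a placeholder host; substitute your provider's real values, which this post doesn't specify:

```javascript
// Hypothetical proxy settings read from environment variables, so
// credentials stay out of the code. "proxy.example.com" is a placeholder.
function proxyConfig(env = process.env) {
  const proxy = {
    host: env.PROXY_HOST || "proxy.example.com",
    port: Number(env.PROXY_PORT || 8080),
  };
  // Only send credentials when the provider requires them.
  if (env.PROXY_USER) {
    proxy.auth = { username: env.PROXY_USER, password: env.PROXY_PASS || "" };
  }
  // Proxied requests can be slower; 60s is an arbitrary, generous timeout.
  return { proxy, timeout: 60000 };
}
```

Usage: `const axiosResponse = await axios.get(url, proxyConfig());`. The target then sees the proxy's IP address instead of the cloud server's.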

Looking for business leads?

Using the techniques talked about here at javascriptwebscrapingguy.com, we’ve been able to launch a way to access awesome business leads. Learn more at Cobalt Intelligence!

The post Avoid Being Blocked with Axios appeared first on JavaScript Web Scraping Guy.
