Do developers care about code security? This question, I believe, is still open to debate. I wrote this article to solicit feedback from both developers and security experts. Would you help me with that?

I'll explain why this topic interests me.

I'm working on PVS-Studio. Our tool detects both coding errors and security flaws. Thus, PVS-Studio combines the features of a "typical" static analyzer and a SAST solution.

I'm particularly interested in PVS-Studio's role as a SAST solution. So, I'd like to know more about what teams expect from such tools. What problems do they face when introducing SAST into the development process? What matters to them and what does not? How do they organize the workflow for handling analysis results?

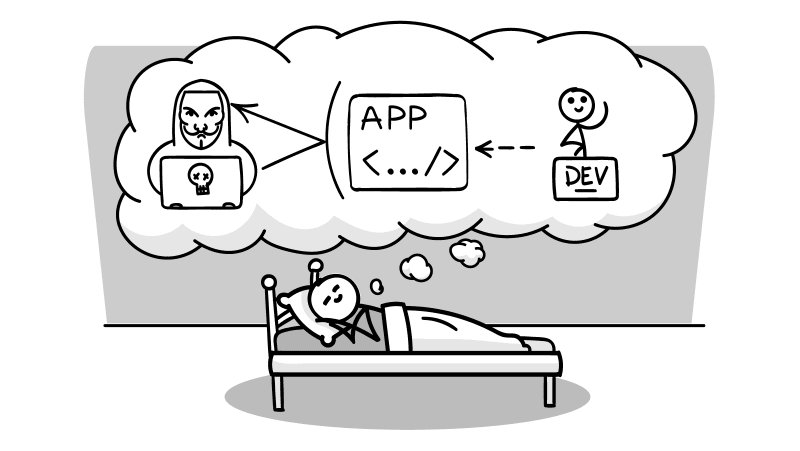

Since the experience of integrating SAST is often discussed at security conferences, I listened to the talks looking for answers to these questions. After watching a dozen of them, I came away with the impression that... developers aren't truly interested in SAST.

Take it from me: developers have a few choice words to say when they find out that SAST has been brought into the development process.

A common thread ran through the talks: developers are unhappy about SAST integration. It's not easy to set up a workflow in which developers review and handle all the warnings.

Still, SAST does help find some security problems. That's why teams building a secure SDLC integrate SAST into the development process. However, companies take different approaches:

- some companies take a firm line and impose a blocking security gate on SAST warnings;

- other companies take a more lenient approach and do not block development processes with warnings.

In the first case, developers game the system (for example, by suppressing all warnings, whether they are false positives or not). In the second case, developers may simply ignore the analysis results.

Changing people's minds and getting them comfortable with fixing their code is another matter.

This raises the question: do developers care about code security?

After all, SAST solutions are meant to help development teams improve code security. Yes, they have drawbacks, such as false positives, but still. So why do disputes arise? Is the problem in the tools, in the workflows, or both?

This is where I need your help: could you share your experience and help me find answers?

Do the development and security teams have any conflicts of interest? How does SAST integrate into the development process? What matters in a SAST tool and what doesn't? Who handles the analysis results, and how is it done?

You are welcome to share your opinions and experiences: tell me how these workflows function in your company, what you like and dislike about them, and what could be improved.

Top comments (2)

In my experience, yes. I've encountered resistance and friction when implementing security changes to plug control gaps. The friction correlates with how much the security request intrudes on the engineer's current work.

So I'm the purchaser for an enterprise-grade SaaS company with SOC 2 and NIST 800-53 compliance obligations. What I look for when I'm pitched SAST products:

There would be different actors in our particular use case:

Additionally, a premium-value SAST platform will work where you work, meaning it integrates with your other tooling, like Slack, JIRA, etc., to reduce the human interaction needed to complete a workflow.

Thank you for the detailed feedback! I read your comment with great interest.