This post was originally published on the yoursunny.com blog: https://yoursunny.com/t/2021/das-file-worker/

I was bored over the 4th of July holiday, so I made a wacky webpage: Deep Atlantic Storage.

It is described as a free file storage service, where you can upload any file to be stored deep in the Atlantic Ocean, with no size limit and no content restrictions whatsoever.

How does it work, and how can I afford to provide it?

This article is the second of a 3-part series that reveals the secrets behind Deep Atlantic Storage.

The previous part introduced the algorithm I use to sort all the bits in a Uint8Array.

Now I'll continue from there and explain how the webpage accepts and processes file uploads.

File Upload

File upload has been part of the HTML standard for as long as I can remember:

    <form action="upload.php" method="POST" enctype="multipart/form-data">
      <input type="file" name="file">
      <input type="submit" value="upload">
    </form>

This would create a Browse button that allows the user to select a local file.

When the form is submitted, the file name and content are sent to the server, and a server-side script can process the upload.

It's straightforward, but not ideal for Deep Atlantic Storage.

As explained in the last article, regardless of how large a file is, the result of sorting all the bits could be represented by just two numbers: how many 0 bits and 1 bits are in the file.
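To make the two-number claim concrete, the following TypeScript sketch rebuilds the sorted bit sequence from the two counts alone: cnt0 zero bits followed by cnt1 one bits. It assumes bits are laid out most-significant first within each byte and that the counts add up to a whole number of bytes; the function name sortedBytesFromCounts is hypothetical and not part of Deep Atlantic Storage.

```typescript
// Rebuilds the "sorted" file from its bit counts: cnt0 zero bits followed by
// cnt1 one bits. Assumes bits are ordered most-significant first within each
// byte and that cnt0 + cnt1 is a multiple of 8 (a whole number of bytes).
function sortedBytesFromCounts(cnt0: number, cnt1: number): Uint8Array {
  const totalBits = cnt0 + cnt1;
  if (totalBits % 8 !== 0) {
    throw new RangeError("cnt0 + cnt1 must be a multiple of 8");
  }
  const out = new Uint8Array(totalBits / 8); // starts as all zero bits
  // Fill the trailing cnt1 bits with ones, walking backwards from the last byte.
  let remaining = cnt1;
  for (let i = out.length - 1; i >= 0 && remaining > 0; i--) {
    const n = Math.min(8, remaining);
    out[i] = (1 << n) - 1; // low n bits set = last n bits of the byte (MSB-first)
    remaining -= n;
  }
  return out;
}
```

For example, sortedBytesFromCounts(12, 12) produces the three bytes 0x00, 0x0f, 0xff, which is what any 3-byte file with twelve 1 bits looks like once its bits are sorted.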

It is unnecessary to send the whole file to the server; counting the bits in the browser is a lot faster.
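The countBits function from the previous article is not repeated here. As a stand-in, this is a minimal sketch with the same shape as its later usage (a Uint8Array in, [cnt0, cnt1] out), built on a 256-entry lookup table named ONES to match the name used in countBitsBlob below; the previous article's actual implementation may differ.

```typescript
// ONES[b] = number of 1 bits in byte value b (0..255).
const ONES: Uint8Array = (() => {
  const table = new Uint8Array(256);
  for (let b = 1; b < 256; b++) {
    // b >> 1 has the same bits as b minus the lowest one, which is (b & 1).
    table[b] = table[b >> 1] + (b & 1);
  }
  return table;
})();

// Counts zero and one bits in a byte array using the lookup table.
function countBits(payload: Uint8Array): [cnt0: number, cnt1: number] {
  let cnt1 = 0;
  for (let i = 0; i < payload.length; i++) {
    cnt1 += ONES[payload[i]];
  }
  // Every byte contributes 8 bits; whatever is not a 1 bit is a 0 bit.
  return [8 * payload.length - cnt1, cnt1];
}
```

With this sketch, countBits(new Uint8Array([0xff, 0x00, 0x0f])) returns [12, 12]: 8 + 0 + 4 one bits out of 24 bits in total.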

File and Blob

Fast forward to 2021: JavaScript can do everything.

In JavaScript, given the DOM object corresponding to the <input type="file"> element, I can access the (first) selected file via its .files[0] property.

The MDN article "Using files from web applications" explains these APIs in more detail.

.files[0] returns a File object, which is a subclass of Blob.

Then, Blob.prototype.arrayBuffer() function asynchronously reads the entire file into an ArrayBuffer, providing access to its content.

    <form id="demo_form">
      <input id="demo_upload" type="file" required>
      <input type="submit">
    </form>
    <script>
    document.querySelector("#demo_form").addEventListener("submit", async (evt) => {
      evt.preventDefault();
      const file = document.querySelector("#demo_upload").files[0];
      console.log(`file size ${file.size} bytes`);
      const payload = new Uint8Array(await file.arrayBuffer());
      const [cnt0, cnt1] = countBits(payload); // from the previous article
      console.log(`file has ${cnt0} zeros and ${cnt1} ones`);
    });
    </script>

This code adds an event listener to the <form>.

When the form is submitted, the callback function reads the file into an ArrayBuffer and passes it as a Uint8Array to the bit counting function (countBits from the previous article).

ReadableStream

file.arrayBuffer() works, but there's a problem: if the user selects a huge file, the entire file has to be read into memory at once, putting considerable pressure on memory.

To solve this problem, I can use the Streams API to read the file in smaller chunks, processing each chunk before reading the next.

From a Blob object (such as the file in the snippet above), I can call .stream().getReader() to create a ReadableStreamDefaultReader.

Then, I can repeatedly call reader.read(), which returns a Promise that resolves to either a chunk of data or an end-of-file (EOF) indication.
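As a generic illustration of the reader.read() contract, independent of bit counting, the read loop can be wrapped in an async generator. This is a sketch; readChunks is a hypothetical helper, and its try/finally releases the reader's lock whether the loop reaches EOF or the caller stops early.

```typescript
// Yields each chunk produced by the stream until read() reports EOF.
// The finally block releases the reader's lock both on normal completion and
// when the caller stops early (e.g. with break); it does not cancel the stream.
async function* readChunks(stream: ReadableStream<Uint8Array>): AsyncGenerator<Uint8Array> {
  const reader = stream.getReader();
  try {
    while (true) {
      const result = await reader.read();
      if (result.done) {
        return; // EOF
      }
      yield result.value; // narrowed to Uint8Array because done is false
    }
  } finally {
    reader.releaseLock();
  }
}
```

Callers can then write `for await (const chunk of readChunks(stream))`. Breaking out of that loop runs the finally block, so the stream is unlocked, though it is not cancelled.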

To process the file chunk by chunk and count how many 1 bits there are, my strategy is:

- Call reader.read() in a loop to obtain the next chunk.
- If done is true, indicating EOF has been reached, break the loop.
- Add the number of 1 bits in each byte of the chunk to the overall counter.
- Finally, calculate how many 0 bits there are from the file size, accessible via the blob.size property: a file of blob.size bytes has 8 * blob.size bits in total.

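    async function countBitsBlob(blob: Blob): Promise<[cnt0: number, cnt1: number]> {
      const reader = (blob.stream() as ReadableStream<Uint8Array>).getReader();
      let cnt = 0; // number of 1 bits seen so far
      while (true) {
        const { done, value: chunk } = await reader.read();
        if (done) {
          break; // EOF reached
        }
        for (const b of chunk!) {
          // ONES[b] is the number of 1 bits in byte value b (a 256-entry lookup table, not shown here)
          cnt += ONES[b];
        }
      }
      // a Blob of blob.size bytes holds 8 * blob.size bits in total
      return [8 * blob.size - cnt, cnt];
    }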
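blob.stream() leaves the chunk size up to the browser. If explicit control over how much data is read at a time is wanted, Blob.prototype.slice() combined with arrayBuffer() is an alternative technique; this is not what Deep Atlantic Storage uses, just a sketch. The function name countBitsSliced, its 1 MiB default slice size, and the inline ONES table are assumptions.

```typescript
// ONES[b] = number of 1 bits in byte value b (256-entry lookup table).
const ONES = new Uint8Array(256);
for (let b = 1; b < 256; b++) {
  ONES[b] = ONES[b >> 1] + (b & 1);
}

// Counts bits by reading fixed-size slices of the Blob one after another,
// so only one slice's worth of file content needs to be in memory at a time.
async function countBitsSliced(
  blob: Blob,
  chunkSize = 1 << 20, // hypothetical default: 1 MiB per slice
): Promise<[cnt0: number, cnt1: number]> {
  let cnt1 = 0;
  for (let offset = 0; offset < blob.size; offset += chunkSize) {
    // slice() creates a Blob for this byte range; arrayBuffer() reads just that range.
    const chunk = new Uint8Array(await blob.slice(offset, offset + chunkSize).arrayBuffer());
    for (let i = 0; i < chunk.length; i++) {
      cnt1 += ONES[chunk[i]];
    }
  }
  return [8 * blob.size - cnt1, cnt1];
}
```

For instance, counting a Blob of the bytes 0xff, 0x00, 0x0f with a slice size of 2 yields [12, 12], the same as reading it in one piece.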

Web Worker

In a web application, it's best to execute complex computations on a background thread, so that the main thread can react to user interactions swiftly.

Web Workers are a simple means for web content to run scripts in background threads.

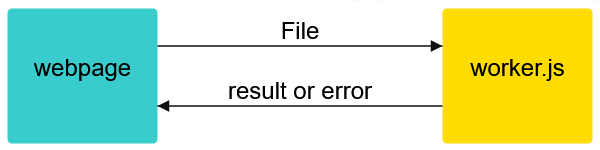

In Deep Atlantic Storage, I delegated the task of sorting or counting bits in the file to a web worker.

When the user selects a file and submits the form, the form event handler creates a Worker (if it has not done so already) and calls Worker.prototype.postMessage() to pass the File object to the background thread.

    let worker;
    document.querySelector("#demo_form").addEventListener("submit", async (evt) => {
      evt.preventDefault();
      const file = document.querySelector("#demo_upload").files[0];
      worker ??= new Worker("worker.js");
      worker.onmessage = handleWorkerMessage; // described later
      worker.postMessage(file);
    });

The worker.js script runs in the background.

It receives the message (a MessageEvent enclosing the File object) in a function assigned to the global onmessage property.

This function calls countBitsBlob to count how many zeros and ones are in the file, and then calls the global postMessage function to pass the result back to the main thread of the webpage.

It also catches any error that might be thrown and passes it to the main thread as well.

I've included type: "result" and type: "error" in these two kinds of messages, so that the main thread can tell them apart.

    onmessage = async (evt) => {
      const file = evt.data;
      try {
        const result = await countBitsBlob(file);
        postMessage({ type: "result", result });
      } catch (err) {
        postMessage({ type: "error", error: `${err}` });
      }
    };

Notice that in the catch clause, the Error object is converted to a string before being passed to postMessage.

This is necessary because only types supported by the structured clone algorithm can pass through postMessage, and Error objects were not reliably among them across browsers.
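Converting the error with `${err}` keeps only its text. If the main thread should get an Error object back, one option is to send the error's name, message and stack as plain strings, which postMessage can always clone, and rebuild the Error on the receiving side. This is a sketch; SerializedError, serializeError and deserializeError are hypothetical names.

```typescript
// Plain-object form of an error: only strings, so it survives postMessage.
interface SerializedError {
  name: string;
  message: string;
  stack?: string;
}

// Worker side: turn whatever was thrown into a plain object.
function serializeError(err: unknown): SerializedError {
  if (err instanceof Error) {
    return { name: err.name, message: err.message, stack: err.stack };
  }
  return { name: "Error", message: String(err) };
}

// Main-thread side: rebuild an Error carrying the original name and message.
function deserializeError(e: SerializedError): Error {
  const err = new Error(e.message);
  err.name = e.name;
  if (e.stack !== undefined) {
    err.stack = e.stack;
  }
  return err;
}
```

In the worker, the catch clause would then post `{ type: "error", error: serializeError(err) }`, and the main thread would call `deserializeError(response.error)`.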

Back in the main thread, the handleWorkerMessage function, which was assigned to worker.onmessage property, receives messages from the worker thread.

    function handleWorkerMessage(evt) {
      const response = evt.data;
      switch (response.type) {
        case "result": {
          const [cnt0, cnt1] = response.result;
          console.log(`file has ${cnt0} zeros and ${cnt1} ones`);
          break;
        }
        case "error": {
          console.error("worker error", response.error);
          break;
        }
      }
    }
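If the page were written in TypeScript, like countBitsBlob above, the two message shapes could be declared as a discriminated union, so the compiler checks that every case in the switch is handled. The type name WorkerResponse and the helper describeResponse are hypothetical; this is a sketch of the idea, not the webpage's code.

```typescript
// The two message shapes the worker posts back, told apart by "type".
type WorkerResponse =
  | { type: "result"; result: [cnt0: number, cnt1: number] }
  | { type: "error"; error: string };

// Turns a worker response into a human-readable line.
function describeResponse(response: WorkerResponse): string {
  switch (response.type) {
    case "result": {
      const [cnt0, cnt1] = response.result;
      return `file has ${cnt0} zeros and ${cnt1} ones`;
    }
    case "error":
      return `worker error: ${response.error}`;
    default: {
      // Compile-time check: adding a new message type without a case fails here.
      const unreachable: never = response;
      throw new Error(`unexpected message ${JSON.stringify(unreachable)}`);
    }
  }
}
```

On the main thread, handleWorkerMessage could then type its parameter as MessageEvent<WorkerResponse> and log describeResponse(evt.data).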

Combined with some user interface magic (not described in this article, but you can look at the webpage source code), this makes up the Deep Atlantic Storage webpage.

Summary

This article is the second of a 3-part series that reveals the secrets behind Deep Atlantic Storage.

Building upon the bit counting algorithm designed in the previous article, I turned it into a web application that reads an uploaded file chunk by chunk via Streams API, and moved the heavy lifting to a background thread via Web Workers.

The next part in this series will explain how I made a server to reconstruct the file from the bit counts.
