Note: this post was written by my colleague Keyur at Moesif, where we all blog.

In a quest to break free of the inflexibility of monolithic application architecture, developers are turning to microservices in large numbers. Microservices are small, independent, loosely coupled modules within a larger piece of software that communicate with each other via APIs. A microservice architecture provides fault tolerance, enables continuous delivery, and makes it possible to scale across multiple regions for high availability.

In this article, we will show an example of how Kong, a popular open-source API gateway built on NGINX (one of the most popular HTTP servers) at its core, can be used for load balancing. Compared with a typical load balancer, Kong gives you more control: you can handle authentication, rate limiting, data transformation, and more from a centralized location, even when you run multiple microservices.

Why load balancing?

Load balancing is a key component of highly available infrastructure. It distributes users' requests across multiple instances of a service running on healthy hosts, so that no single host gets overloaded.

A single service instance is a single point of failure; introducing a load balancer and a replica of the service mitigates it. Every replica returns an identical response, so users receive a consistent response regardless of which instance handled the request.

Kong provides different ways of load balancing requests across multiple services: a DNS-based method, and a ring-balancer that supports a round-robin method and a hash-based method. The DNS-based method configures a domain in DNS so that user requests to the domain are distributed among a group of services. The round-robin method distributes the load across hosts evenly and in order, while the hash-based method picks a host from a hash of a request attribute (such as the client IP), so requests with the same value keep reaching the same host. With the ring-balancer, Kong acts as a service registry: adding or removing a service takes a single HTTP request.
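The two ring-balancer algorithms can be contrasted in a few lines of Python. This is a minimal sketch under stated assumptions, not Kong's implementation: the target list mirrors the two services set up below, the client key is a made-up IP address, and `hash_pick` uses plain SHA-256 modulo the number of targets instead of the consistent-hashing ring Kong uses.

```python
import hashlib
from itertools import cycle

# The two services started later in this post.
TARGETS = ["localhost:9001", "localhost:9002"]


def round_robin(targets):
    """Return an iterator over targets, evenly and in order: 9001, 9002, 9001, ..."""
    return cycle(targets)


def hash_pick(targets, key):
    """Pick a target from a stable hash of a request attribute.

    Simplified modulo hashing, not Kong's consistent-hashing ring: the same
    key maps to the same target as long as the target list is unchanged.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return targets[int.from_bytes(digest[:4], "big") % len(targets)]


if __name__ == "__main__":
    rr = round_robin(TARGETS)
    print([next(rr) for _ in range(4)])
    # ['localhost:9001', 'localhost:9002', 'localhost:9001', 'localhost:9002']
    client = "203.0.113.7"  # hypothetical client IP
    print(hash_pick(TARGETS, client) == hash_pick(TARGETS, client))  # True
```

Round-robin spreads consecutive requests across targets, while hashing trades even spreading for stickiness: every request carrying the same key reaches the same target.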

Setting up Kong locally

Kong can be installed in multiple operating environments; set it up for the environment you're currently using. For more details, see the Kong installation documentation.

Setting up Services

We are going to start two services, each of them an NGINX instance. We will pull the official OpenResty Docker image, which is based on NGINX and LuaJIT.

```shell
docker pull openresty/openresty
```

Let's start the two services and map them to different ports:

```shell
docker run -p 9001:9001 -d openresty/openresty
docker run -p 9002:9002 -d openresty/openresty
```

Before configuring the services, we will install a few packages inside each container:

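```shell
# Open a shell in the container (repeat for each container)
docker exec -it <container> /bin/bash

# Inside the container
apt-get update
apt-get install -y vim
apt-get install -y libnginx-mod-http-ndk nginx-common libnginx-mod-http-lua
```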

Now we will configure each service with two endpoints, / and /profile (the one we will later protect with authentication), listening on port 9001 and 9002 respectively. Update the config file /etc/nginx/conf.d/default.conf so that it contains the following server block:

```nginx
server {
    listen <port_number>;  # 9001 in the first container, 9002 in the second

    location / {
        return 200 "Hello from my service";
    }

    location /profile {
        content_by_lua_block {
            local cjson = require "cjson"

            -- Kong's key-auth plugin adds X-Consumer-ID for authenticated requests
            local consumer_id = ngx.req.get_headers()["X-Consumer-ID"]
            if not consumer_id then
                ngx.status = 401
                ngx.say("Unauthorized")
                return
            end

            ngx.header["Content-Type"] = "application/json"
            ngx.say(cjson.encode{
                id = consumer_id,
                username = "moesif",
                description = "Advanced API Analytics platform"
            })
        }
    }
}
```

We will restart the services so that the latest config changes take effect:

```shell
docker restart <container>
```

Let's quickly check what each service returns with this config:

```shell
# Service A
curl -XGET 'http://localhost:9001'

# Service B
curl -XGET 'http://localhost:9002'
```

The idea is for both services to be replicas of each other, so that we can load balance our traffic between them.
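Before putting Kong in front of the replicas, it can be worth checking that they really are interchangeable. The Python sketch below assumes both containers are running on ports 9001 and 9002; `fetch` and `replicas_match` are hypothetical helpers, and the `fetcher` parameter lets you swap in a stub so the comparison can run without the containers.

```python
import urllib.request

# The two replicas started above.
REPLICAS = ["http://localhost:9001/", "http://localhost:9002/"]


def fetch(url, timeout=2.0):
    """GET url and return (status, body)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.status, resp.read().decode("utf-8")


def replicas_match(urls, fetcher=fetch):
    """Return True if every replica answers with the same status and body."""
    responses = [fetcher(url) for url in urls]
    return all(response == responses[0] for response in responses)
```

With both containers up, `replicas_match(REPLICAS)` should return True, since both serve "Hello from my service" on /.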

Start Kong

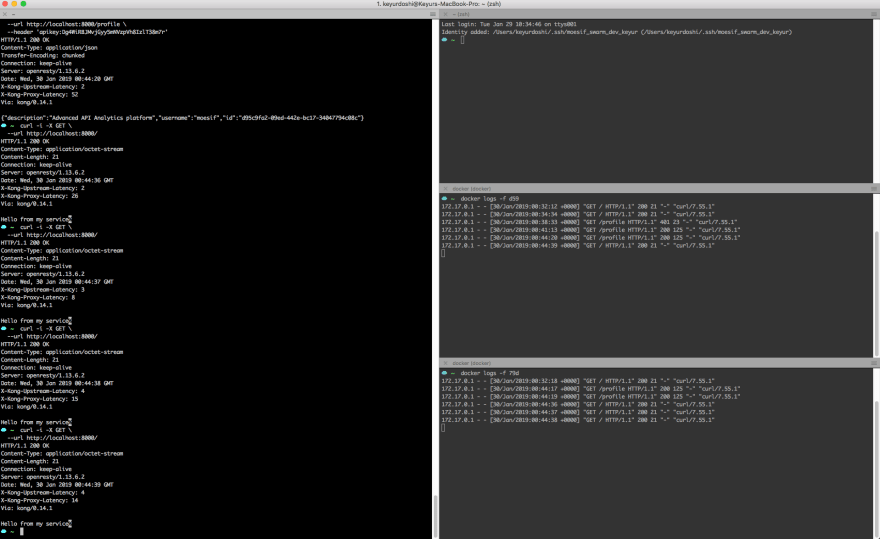

We will start Kong so that it acts as a reverse proxy: traffic goes through Kong instead of hitting the NGINX instances directly.

To achieve this, we will make requests to two ports: the proxy port 8000, which handles client traffic, and the Admin API port 8001, which we use to configure Kong.
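If you would rather script the Admin API than type curl commands, the same form-encoded requests can be built with Python's standard library. This is a minimal sketch: `admin_request` and `send` are hypothetical helper names, and `send` only works against a Kong instance listening on the default Admin port.

```python
import urllib.parse
import urllib.request

# Default Kong ports used in this post.
PROXY_URL = "http://localhost:8000"  # client traffic goes here
ADMIN_URL = "http://localhost:8001"  # configuration goes here


def admin_request(method, path, fields=None):
    """Build a form-encoded Admin API request, like `curl --data` does."""
    data = urllib.parse.urlencode(fields).encode("ascii") if fields else None
    # With a body present, urllib sends Content-Type: application/x-www-form-urlencoded.
    return urllib.request.Request(ADMIN_URL + path, data=data, method=method)


def send(request):
    """Send the request and return (status, body); needs a running Kong."""
    with urllib.request.urlopen(request) as resp:
        return resp.status, resp.read().decode("utf-8")
```

For example, `send(admin_request("POST", "/services/", {"name": "moesif-service", "url": "http://localhost:9001"}))` mirrors the curl command for creating a service.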

Create a service

We will create a service named moesif-service.

```shell
curl -i -X POST \
  --url http://localhost:8001/services/ \
  --data 'name=moesif-service' \
  --data 'url=http://localhost:9001'
```

Create a route

We will create a route that accepts traffic and forwards everything it receives to the service created above (use the service id returned by the previous request):

```shell
curl -i -X POST \
  --url http://localhost:8001/routes \
  --data 'paths[]=/' \
  --data 'service.id=<service-id>' \
  -f
```

At this point we can proxy traffic through Kong to the service on port 9001:

```shell
curl -i -X GET \
  --url http://localhost:8000/
```

Awesome, we are actually proxying traffic through Kong to the upstream: the response now carries a few headers added by Kong, such as Via and X-Kong-Proxy-Latency.

We can also proxy traffic to any upstream endpoint, which makes Kong fully transparent: it doesn't care which request we send, and it proxies the request to the upstream as long as it matches the rule defined in the route. In our case the rule is /, which matches every path as a prefix, so every request going through Kong is proxied to the service on port 9001.
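To see why a route on / catches every request, and why a more specific route such as /profile can sit next to it, here is a simplified Python model of prefix matching. It assumes plain string-prefix matching where the longest matching path wins, which matches the priority rule Kong documents for prefix paths; hosts, methods, headers, and regex paths are ignored, and the route table is hypothetical.

```python
# Hypothetical route table mirroring this post; both routes point at moesif-service.
ROUTES = {"/": "root route", "/profile": "profile route"}


def match_route(path, routes=ROUTES):
    """Return the route whose path is the longest prefix of the request path."""
    candidates = [prefix for prefix in routes if path.startswith(prefix)]
    if not candidates:
        return None  # Kong would answer that no route matched
    return routes[max(candidates, key=len)]
```

Because "/" is a prefix of every path, the root route is the fallback, while any request starting with /profile goes to the more specific route.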

Now let's talk about the other endpoint, /profile, which we defined in the upstream.

If we want to enforce that every request to the /profile upstream endpoint is authenticated, we can create a dedicated route and apply the key-auth plugin on top of that route.

Create a route

```shell
curl -i -X POST \
  --url http://localhost:8001/routes \
  --data 'paths[]=/profile' \
  --data 'service.id=<service-id>' \
  -f
```

To be as flexible as possible, Kong has a route configuration parameter, strip_path, that defines whether the matched path prefix is stripped from the request before proxying; it defaults to true. This means /profile would be proxied upstream as /. So we have to disable it with a simple PATCH request, using the route id returned when creating the /profile route:

```shell
curl -i -X PATCH \
  --url http://localhost:8001/routes/<route-id> \
  --data 'strip_path=false'
```

Now if we make a request to /profile, it will correctly hit /profile on the upstream:

```shell
curl -i -X GET \
  --url http://localhost:8000/profile
```
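The effect of strip_path can be modelled as a small path rewrite. The Python sketch below is a simplification: it assumes the service has no path of its own (as with moesif-service) unless `service_path` is given, and it ignores Kong's path_handling variants and trailing-slash edge cases. `upstream_path` is a hypothetical name.

```python
def upstream_path(request_path, route_prefix, strip_path=True, service_path=""):
    """Return the path forwarded upstream for a request matched by route_prefix."""
    if strip_path and request_path.startswith(route_prefix):
        # Drop the matched prefix, keep whatever follows it.
        remainder = request_path[len(route_prefix):]
    else:
        remainder = request_path
    # Append the remainder to the service's own path, with exactly one "/" between.
    return service_path.rstrip("/") + "/" + remainder.lstrip("/")
```

With the default `strip_path=True`, `upstream_path("/profile", "/profile")` gives "/", which is why the upstream's / handler answered; with `strip_path=False` it gives "/profile".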

Now let’s apply the key-auth plugin to the /profile route.

```shell
curl -i -X POST \
  --url http://localhost:8001/routes/<route-id>/plugins \
  --data 'name=key-auth'
```

We need to pass an API key when calling the /profile endpoint, and for that we first have to create a Consumer:

```shell
curl -i -X POST \
  --url http://localhost:8001/consumers \
  --data 'username=moesif'
```

To get an API key, we make a POST request to the consumer's key-auth endpoint; Kong generates a key and returns it in the response:

```shell
curl -i -X POST \
  --url http://localhost:8001/consumers/<username>/key-auth
```

We make a request to /profile with the API key in the apikey header:

```shell
curl -i -X GET \
  --url http://localhost:8000/profile \
  --header 'apikey:<apikey>'
```

We can see that /profile is authenticated and returns the response configured in the upstream.
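Conceptually, the key-auth plugin sits between the client and the upstream: it looks up the apikey header, rejects unknown keys, and tells the upstream who the caller is through the X-Consumer-ID header that our Lua handler checks. The Python sketch below models only that flow; the consumer id and key are made up, and real Kong behavior (status codes, error bodies, extra headers) depends on the version and plugin configuration.

```python
from typing import Dict, Optional

# Made-up stand-ins for what POST /consumers and
# POST /consumers/<username>/key-auth create inside Kong.
CONSUMERS = {"c0ffee-consumer-id": "moesif"}                  # consumer id -> username
CREDENTIALS = {"my-generated-api-key": "c0ffee-consumer-id"}  # API key -> consumer id


def key_auth(request_headers: Dict[str, str]) -> Optional[Dict[str, str]]:
    """Return the headers forwarded upstream, or None if the request is rejected.

    Header names are case-insensitive, so they are normalized to lowercase.
    """
    headers = {name.lower(): value for name, value in request_headers.items()}
    consumer_id = CREDENTIALS.get(headers.get("apikey", ""))
    if consumer_id is None:
        # Missing or unknown key: the gateway rejects the request itself,
        # so the upstream is never called.
        return None
    # Identify the caller to the upstream; this sketch overwrites any client-supplied value.
    headers["x-consumer-id"] = consumer_id
    headers["x-consumer-username"] = CONSUMERS[consumer_id]
    return headers
```

This is why the Lua handler can treat a missing X-Consumer-ID as unauthorized: requests that reach it through the /profile route have already passed the key check.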

You may have noticed that the other service is sitting idle and not receiving any traffic. The goal here is to load balance the traffic between both services.

We are not going to use DNS-based load balancing; instead, we will set it up manually using an upstream, which is similar to the upstream module in NGINX. The only parameter we have to provide is the name, which in our case is localhost. Every service whose host is localhost will then be proxied through that upstream, and the upstream makes the load-balancing decision.

```shell
curl -i -X POST \
  --url http://localhost:8001/upstreams \
  --data 'name=localhost'
```
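The way Kong chooses between an upstream and the service's own host can be modelled as a lookup on the host name. This simplified sketch assumes the upstream named localhost already has the two targets added in the next step; `UPSTREAMS` and `resolve_service_host` are hypothetical names.

```python
# Hypothetical registry: upstream name -> targets ("host:port").
UPSTREAMS = {"localhost": ["localhost:9001", "localhost:9002"]}


def resolve_service_host(host, port, upstreams=UPSTREAMS):
    """Return the candidate targets for a service's host and port (simplified).

    If an upstream is named after the host, traffic is balanced across its
    targets (whose ports replace the service port); otherwise the service's
    own host and port are used directly.
    """
    if host in upstreams:
        return list(upstreams[host])
    return [f"{host}:{port}"]
```

This is why moesif-service, whose URL is http://localhost:9001, starts being balanced as soon as an upstream named localhost has targets.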

We will add a target for each port and start load balancing traffic between the services:

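```shell
curl -i -X POST \
  --url http://localhost:8001/upstreams/<upstream-id>/targets \
  --data 'target=localhost:9001'

curl -i -X POST \
  --url http://localhost:8001/upstreams/<upstream-id>/targets \
  --data 'target=localhost:9002'
```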

From this point on, requests made through Kong are load balanced between the two services.
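How are requests spread across the two targets? The sketch below models the slot-based ring-balancer that the next paragraph alludes to: each target gets a share of slots proportional to its weight (Kong's default target weight is 100), the slots are shuffled, and requests walk the ring in order. It is a simplification; `build_ring` is a hypothetical name, and the small slot count and fixed seed are arbitrary choices for illustration, whereas a default Kong upstream has 10000 slots.

```python
import random
from itertools import cycle


def build_ring(targets, slots=10, seed=0):
    """Spread `slots` over targets in proportion to weight, then shuffle.

    `targets` maps "host:port" -> weight. With uneven weights, rounding can
    make the ring slightly longer or shorter than `slots`.
    """
    total = sum(targets.values())
    ring = []
    for target, weight in targets.items():
        ring.extend([target] * round(slots * weight / total))
    random.Random(seed).shuffle(ring)  # fixed seed keeps the demo repeatable
    return ring


if __name__ == "__main__":
    ring = build_ring({"localhost:9001": 100, "localhost:9002": 100})
    walk = cycle(ring)  # each request takes the next slot
    print([next(walk) for _ in range(10)])
```

Each full pass over this ring sends five requests to each service, but because the slots are shuffled, consecutive requests can land on the same service.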

If we take one of the services down, Kong falls back on its retry mechanism. In some cases, though, Kong can exhaust all of its retries and still return an error. By monitoring the Kong logs, you can see the decisions Kong makes: it tries to reach the second target, fails, and retries the request against the first one. Because the number of load-balancing slots is quite large, it may take a while before a request lands on the other service, so you may have to keep sending requests to observe this.
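The failure mode described above can be reproduced with a small Python model of retrying across targets. It is a sketch, not Kong's retry logic: `proxy_with_retries` is a hypothetical name, `is_up` stands in for a connection attempt, and the only Kong detail borrowed is that a service's retries setting defaults to 5, so a request gets at most six attempts.

```python
def proxy_with_retries(order, is_up, retries=5):
    """Try targets in balancer order: one first attempt plus up to `retries` more.

    Returns (target, attempts) on success, or (None, attempts) if every try failed.
    """
    attempts = 0
    for target in order:
        attempts += 1
        if is_up(target):
            return target, attempts
        if attempts > retries:
            break  # first attempt plus all retries used up
    return None, attempts
```

If the balancer's order happens to keep yielding the stopped target, every attempt fails and the client sees an error even though the other service is healthy; if the next slot points at the healthy target, the second attempt succeeds.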

To understand how your APIs are used, you can capture API requests and responses and log them to Moesif for easy inspection and real-time debugging of your API traffic via Kong. For more detail on how to install the Moesif plugin, check out this approach.

Meanwhile, if you have any questions, reach out to the Moesif Team.

Moesif is the most advanced API Analytics platform, supporting REST, GraphQL, Web3 JSON-RPC and more. Thousands of API developers process billions of API calls through Moesif for debugging, monitoring and discovering insights.
