TL;DR

A Cloud Guru released a #CloudGuruChallenge on January 4th called Multi-Cloud Madness. The goal is to use three cloud service providers to build a serverless image processing pipeline.

- Serverless Website and Compute hosted on AWS

- NoSQL Database hosted on Azure with Table Storage

- ML Image Processing service hosted on GCP with Cloud Vision

Check it out!

Getting Started

I immediately knew that I would use AWS, Azure, and GCP for this challenge because they are the three cloud providers I am most familiar with.

I love how this challenge finally gave me a chance to work outside of AWS, which I use every day. AWS is great, yet I wanted to get hands-on with something else.

I decided on Azure Table Storage as my NoSQL solution because the service is straightforward, low-cost, and easy to use.

Then I chose GCP Cloud Vision as my machine learning image processing service. I had researched Cloud Vision before and already knew how to use it.

Architecture

- Serverless Website hosted on CloudFront backed by S3

- Lambda Function to generate signed URLs for accessing S3 Buckets

- Lambda Function to access Azure Table Storage

- Lambda Function, invoked by an S3 trigger, to process uploaded images
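
To make the last bullet concrete, here is a minimal sketch of how a function invoked by an S3 trigger can find the image that was just uploaded. The project's Lambda functions are written in C#; JavaScript is used here only to show the shape of the event. The helper name uploadedObjects and the sample bucket and key are hypothetical.

```javascript
// Extract bucket/key pairs from an S3 event notification.
// Object keys arrive URL-encoded, with spaces encoded as "+".
function uploadedObjects(event) {
  return (event.Records || []).map(function (record) {
    return {
      bucket: record.s3.bucket.name,
      key: decodeURIComponent(record.s3.object.key.replace(/\+/g, " ")),
    };
  });
}

// Hypothetical sample event, trimmed to the fields used above.
const sampleEvent = {
  Records: [
    {
      s3: {
        bucket: { name: "example-uploads" },
        object: { key: "selfies/my+photo.png" },
      },
    },
  ],
};

console.log(uploadedObjects(sampleEvent));
```

Decoding the key matters in practice: a file uploaded as "my photo.png" would otherwise be looked up under the wrong name.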

Image Analysis

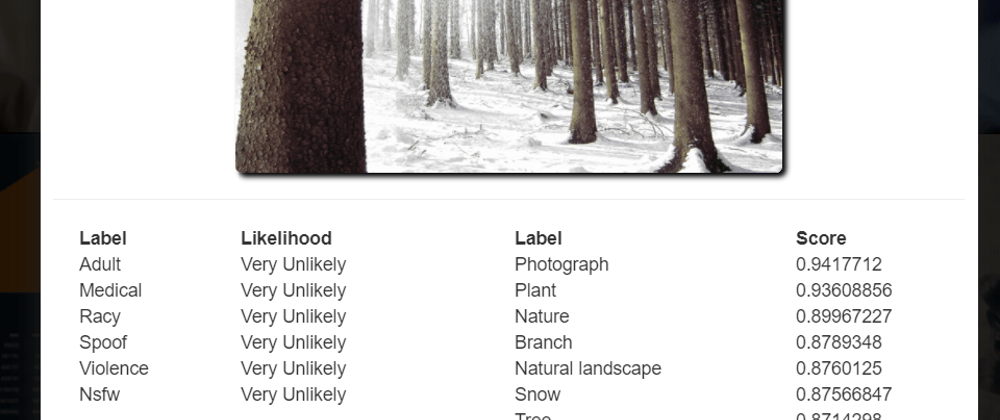

Similar to Amazon Rekognition, GCP Cloud Vision returns labels for the uploaded image. Another feature that I thought was really neat was Safe Search.

I was hesitant about accepting images from the public since I know how people can be, so I used Safe Search to filter out images that the service deemed possibly "unsafe".

Safe Search returns a likelihood for each of several categories: Adult, Medical, Racy, Spoof, and Violence. To keep the frontend logic simple, I took the maximum likelihood across these categories and applied it to a single "Nsfw" label.

This meant the frontend only has to check the "Nsfw" label to decide whether content should be shown or hidden.

    using System.Collections.Generic;
    using System.Linq;
    using Google.Cloud.Vision.V1; // SafeSearchAnnotation, Likelihood

    public VisionAnalysis(SafeSearchAnnotation safeSearch)
    {
        // add safe search labels
        Labels = new List<Label>
        {
            new Label()
            {
                Likelihood = safeSearch.Adult,
                Name = "Adult",
                Score = safeSearch.AdultConfidence
            },
            new Label()
            {
                Likelihood = safeSearch.Medical,
                Name = "Medical",
                Score = safeSearch.MedicalConfidence
            },
            new Label()
            {
                Likelihood = safeSearch.Racy,
                Name = "Racy",
                Score = safeSearch.RacyConfidence
            },
            new Label()
            {
                Likelihood = safeSearch.Spoof,
                Name = "Spoof",
                Score = safeSearch.SpoofConfidence
            },
            new Label()
            {
                Likelihood = safeSearch.Violence,
                Name = "Violence",
                Score = safeSearch.ViolenceConfidence
            }
        };

        // add nsfw label: the highest likelihood across all categories
        Likelihood nsfw = Labels.Select(i => i.Likelihood).Max();
        Labels.Add(new Label()
        {
            Likelihood = nsfw,
            Name = "Nsfw",
            Score = safeSearch.NsfwConfidence
        });
    }

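On the frontend, records flagged as unsafe are filtered out before they are displayed:

    // keep only the records that are not flagged as unsafe
    var records = $.grep(data['Data'], function(r){
        var labels = JSON.parse(r.SerializedVisionAnalysis).Labels;
        return !isUnsafe(labels);
    });

    // Likelihood is serialized as a number (0 = Unknown ... 5 = VeryLikely),
    // so "> 2" means Possible or higher
    function isUnsafe(labels){
        var unsafe = false;
        $.each(labels, function(idx, r){
            if("Nsfw" === r.Name && r.Likelihood > 2){
                console.log("Potentially Unsafe Content");
                unsafe = true;
                return false; // break
            }
        });
        return unsafe;
    }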

https://github.com/wheelerswebservices/cgc-multicloud-madness/blob/main/web/js/browse.js
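
The numeric threshold is easier to follow with the enum spelled out. The sketch below is a jQuery-free illustration rather than the site's code: it assumes Likelihood values are serialized as the numbers 0 (Unknown) through 5 (VeryLikely), and the names LIKELIHOOD and nsfwLikelihood, along with the sample labels, are hypothetical.

```javascript
// Numeric order of the Cloud Vision Likelihood enum.
const LIKELIHOOD = ["Unknown", "VeryUnlikely", "Unlikely", "Possible", "Likely", "VeryLikely"];

// The "Nsfw" likelihood is the maximum across the category labels.
function nsfwLikelihood(labels) {
  return labels.reduce((max, label) => Math.max(max, label.Likelihood), 0);
}

// Content is hidden when the Nsfw likelihood is Possible or higher.
function isUnsafe(labels) {
  const threshold = LIKELIHOOD.indexOf("Unlikely"); // 2
  return labels.some((label) => label.Name === "Nsfw" && label.Likelihood > threshold);
}

// Hypothetical Safe Search result for one image.
const labels = [
  { Name: "Adult", Likelihood: 1 },
  { Name: "Medical", Likelihood: 1 },
  { Name: "Racy", Likelihood: 3 },
  { Name: "Spoof", Likelihood: 2 },
  { Name: "Violence", Likelihood: 1 },
];
labels.push({ Name: "Nsfw", Likelihood: nsfwLikelihood(labels) });

console.log(LIKELIHOOD[nsfwLikelihood(labels)], isUnsafe(labels)); // prints: Possible true
```

Taking the maximum is deliberately conservative: a single category rated Possible is enough to hide the image, even if every other category is rated VeryUnlikely.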

Database

Similar to DynamoDB, Azure Tables uses a PartitionKey to distribute the data. I knew queries would need to specify this PartitionKey to avoid full table scans, so I used a randomly generated number between 1 and 9 as the PartitionKey. The website then loads data from one of the nine partitions, chosen at random.
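
A minimal sketch of that partitioning scheme, written in JavaScript to match the frontend. The function names and the sample RowKey are hypothetical, and the actual write path in this project is in C#. The filter string follows the OData syntax that Azure Table queries accept.

```javascript
const PARTITION_COUNT = 9;

// Pick a partition between 1 and 9 (inclusive), returned as a string
// because PartitionKey is a string property.
function randomPartitionKey(random = Math.random) {
  return String(1 + Math.floor(random() * PARTITION_COUNT));
}

// Build a query filter that targets a single partition
// instead of scanning the whole table.
function partitionFilter(partitionKey) {
  return `PartitionKey eq '${partitionKey}'`;
}

// On write: assign the new record to a random partition.
const entity = { PartitionKey: randomPartitionKey(), RowKey: "example-row" };

// On read: load one randomly chosen partition.
console.log(entity.PartitionKey, partitionFilter(randomPartitionKey()));
```

The trade-off of this design is that a single page load only shows the records from one partition, which is acceptable for a gallery that is meant to surface a random sample.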

I wrote the implementation in C# only because I've been learning C# in my spare time and wanted a real-world application of what I've learned. The NuGet package manager made dependency management a breeze, and the APIs for interacting with the Azure Table service were intuitive.

At first, I saw that Azure Tables was not persisting my nested fields, e.g. public VisionAnalysis VisionAnalysis { get; set; }. This happened because complex types are not stored. It was easy enough to resolve by serializing the object before inserting the data.

    using System.Text.Json;

    response.SerializedVisionAnalysis = JsonSerializer.Serialize(analysis);
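
The same idea can be seen from the JavaScript side: the nested object travels as a JSON string in a single column and is parsed back when read, as the frontend does with SerializedVisionAnalysis. This is an illustrative sketch only; the helper names and the sample record are hypothetical.

```javascript
// Flatten a record so the nested object becomes a plain string column.
function toTableEntity(record) {
  const { VisionAnalysis, ...flat } = record;
  return { ...flat, SerializedVisionAnalysis: JSON.stringify(VisionAnalysis) };
}

// Restore the nested object after reading the entity back.
function fromTableEntity(entity) {
  const { SerializedVisionAnalysis, ...flat } = entity;
  return { ...flat, VisionAnalysis: JSON.parse(SerializedVisionAnalysis) };
}

// Hypothetical record with one nested field.
const record = {
  PartitionKey: "3",
  RowKey: "example-row",
  VisionAnalysis: { Labels: [{ Name: "Nsfw", Likelihood: 1 }] },
};

const stored = toTableEntity(record);
console.log(typeof stored.SerializedVisionAnalysis); // prints: string
console.log(fromTableEntity(stored).VisionAnalysis.Labels[0].Name); // prints: Nsfw
```

The cost of this approach is that the serialized column is opaque to the table service, so it cannot be used in query filters.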

Serverless Application Model (SAM)

Additionally, I decided to use SAM to help with some of the deployments this time around. In the past I've used the Serverless Framework (sls). I wanted to gain some practical experience with SAM so that I could better choose between the two serverless deployment offerings in the future.

Unfortunately, I don't have a favorite yet... they're both so convenient!

Conclusion

Finally, another awesome cloud challenge in the bag! Bonus: I am still learning so much. I can't wait to see what the next #CloudGuruChallenge will entail.

If you have any thoughts I'd love to hear them. Feel free to reach out to me on LinkedIn. Cheers!

GitHub

https://github.com/wheelerswebservices/cgc-multicloud-madness

LinkedIn

https://www.linkedin.com/in/justin-wheeler-the-cloud-architect/

Website

https://selfieanalyzer.com/
