Table of contents

1. Collect Data
2. Data Preprocessing
3. Visualizing Data
4. Build Model
5. Train Model
6. Evaluating Trained Model
7. Save a Trained Model
8. Predict on Custom Data
9. Real-time Detection

1. Collect data

In every machine learning problem, data is the most important part.

Here, the data is collected from Kaggle.

The data is a subset of this dataset; you can download it from here.

2. Data Preprocessing

Before processing the data, we first need to organize it into the right folder structure.

For this, we have two options:

- Use TensorFlow's image_dataset_from_directory.

- Load a CSV file.

Here, we choose to load from a CSV file.

For that, we rename the images so that each filename starts with its label: withmask or withoutmask.

For the withmask images:

# importing os module
import os

# Function to rename multiple files
def main():
    for count, filename in enumerate(os.listdir("DATASET/")):
        dst = "withmask." + str(count) + ".jpeg"
        src = 'DATASET/' + filename
        dst = 'DATASET/' + dst
        # rename() renames the current file to its new, labelled name
        os.rename(src, dst)

# Driver Code
if __name__ == '__main__':
    # Calling main() function
    main()

For the withoutmask images:

# importing os module
import os

# Function to rename multiple files
def main():
    for count, filename in enumerate(os.listdir("New folder/")):
        dst = "withoutmask." + str(count) + ".jpeg"
        src = 'New folder/' + filename
        dst = 'New folder/' + dst
        # rename() renames the current file to its new, labelled name
        os.rename(src, dst)

# Driver Code
if __name__ == '__main__':
    # Calling main() function
    main()

Now move all the images into one folder (FULL_DATA/) and create a pandas DataFrame.

Generate DataFrame

import os
import pandas as pd

# Each filename starts with its label, e.g. withmask.0.jpeg
filenames = os.listdir("FULL_DATA/")
categories = []
for f_name in filenames:
    category = f_name.split('.')[0]
    if category == 'withmask':
        categories.append('withmask')
    else:
        categories.append('withoutmask')

df = pd.DataFrame({
    'filename': filenames,
    'labels': categories
})

Save the DataFrame to a CSV file.
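Saving is a one-liner with pandas' DataFrame.to_csv. Below is a minimal sketch; the helper name save_labels_csv and the file name labels.csv are hypothetical, since the post does not name the CSV file. Passing index=False keeps pandas from writing an extra unnamed index column, so the file contains only the filename and labels columns.

```python
import pandas as pd

def save_labels_csv(df, csv_path="labels.csv"):
    """Write the filename/labels DataFrame to CSV (hypothetical helper and path)."""
    # index=False: do not write the DataFrame's row index as an extra column
    df.to_csv(csv_path, index=False)
    return csv_path
```

For example, save_labels_csv(df) writes labels.csv next to the notebook.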

Now that the data is in the right structure, we can load it:

- Read the CSV file

- Shuffle the DataFrame with sample(frac=1)

- Turn the labels into arrays of booleans

- Create a validation set with train_test_split

- Turn images into Tensors

import tensorflow as tf

# Define image size
IMG_SIZE = 224

# Function to turn an image file into a Tensor
def process_image(image_path, image_size=IMG_SIZE):
    """
    Takes an image file path and turns the image into a Tensor.
    """
    # Read in an image file
    image = tf.io.read_file(image_path)
    # Turn the jpg image into a numerical Tensor with 3 colour channels (RGB)
    image = tf.image.decode_jpeg(image, channels=3)
    # Convert the colour channel values from 0-255 to 0-1
    image = tf.image.convert_image_dtype(image, tf.float32)
    # Resize the image to (224, 224)
    image = tf.image.resize(image, size=[image_size, image_size])
    return image

- Turn data into batches

# Create a function to return a tuple (image, label)
def get_image_label(image_path, label):
    """
    Takes an image file path name and the label,
    processes the image and returns a tuple (image, label).
    """
    image = process_image(image_path)
    return image, label

# Define the batch size
BATCH_SIZE = 32

# Function to convert data into batches
def create_data_batches(X, y=None, batch_size=BATCH_SIZE, valid_data=False):
    """
    Creates batches of data of image (X) and label (y) pairs.
    Shuffles the data if it's training data but doesn't shuffle if it's validation data.
    """
    # Turn filepaths and labels into Tensors
    data = tf.data.Dataset.from_tensor_slices((tf.constant(X), tf.constant(y)))
    if valid_data:
        # Validation data: NO SHUFFLE
        print("Creating valid data batches.........")
    else:
        print("Creating train data batches.........")
        # Shuffle pathnames and labels before mapping the image processing function
        data = data.shuffle(buffer_size=len(X))
    data_batch = data.map(get_image_label).batch(batch_size)
    return data_batch

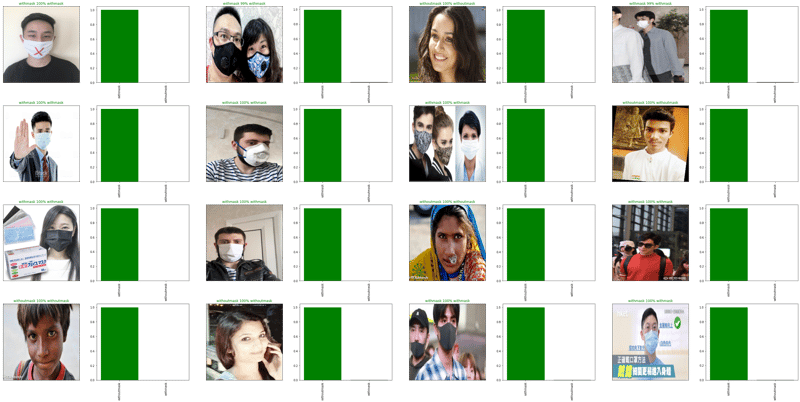
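The post has no code for the first four loading steps listed earlier (read the CSV, shuffle, turn labels into booleans, split off a validation set). Below is a minimal sketch. It assumes the CSV has the filename and labels columns built above and that the images live in FULL_DATA/. The helper name load_and_split, the 20% validation size and the random seed are hypothetical choices, not values from the post. Each label becomes a boolean array by comparing it against the sorted class names, so withmask maps to [True, False].

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split

def load_and_split(csv_path, image_dir="FULL_DATA/", val_size=0.2, seed=42):
    """Read the labels CSV, shuffle it, make boolean labels, split off a validation set.

    Hypothetical helper: val_size and seed are assumed values.
    """
    # 1. Read the CSV (columns: filename, labels)
    df = pd.read_csv(csv_path)
    # 2. Shuffle: frac=1 keeps every row, only the order changes
    df = df.sample(frac=1, random_state=seed).reset_index(drop=True)
    # Full paths, as process_image() needs a readable file path
    filepaths = [image_dir + fname for fname in df["filename"]]
    # 3. Sorted class names, e.g. ['withmask', 'withoutmask']
    unique_category = np.unique(df["labels"].to_numpy())
    # Each label becomes a boolean array, e.g. 'withmask' -> [True, False]
    boolean_labels = [label == unique_category for label in df["labels"]]
    # 4. Split off a validation set
    X_train, X_val, y_train, y_val = train_test_split(
        filepaths, boolean_labels, test_size=val_size, random_state=seed)
    return X_train, X_val, y_train, y_val, unique_category
```

The outputs feed create_data_batches directly. Use X_train and y_train for the training batches, and X_val and y_val with valid_data=True for the validation batches.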

3. Visualizing Data

import matplotlib.pyplot as plt

# Function for viewing images in a data batch
# (unique_category holds the class names, e.g. withmask and withoutmask)
def show_images(images, labels):
    """
    Displays a plot of 25 images and their labels from a data batch.
    """
    plt.figure(figsize=(20, 20))
    for i in range(25):
        # Create a 5x5 grid of subplots
        plt.subplot(5, 5, i + 1)
        plt.imshow(images[i])
        # The position of True in the boolean label gives the class index
        plt.title(unique_category[labels[i].argmax()])
        plt.axis("off")

Call this function.

For the training data:

train_images, train_labels = next(train_data.as_numpy_iterator())
show_images(train_images, train_labels)

For the validation data:

val_images, val_labels = next(val_data.as_numpy_iterator())
show_images(val_images, val_labels)

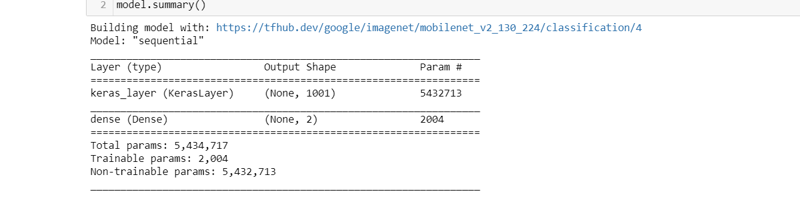

4. Building a model

Here, we can use TensorFlow Hub for pre-trained models.

For this task, we use MobileNet V2, which is a small model.

- Set INPUT_SHAPE = [None, 224, 224, 3] (batch size, height, width, colour channels)

- Set OUTPUT_SHAPE = 2 (one output per class: withmask and withoutmask)

- Use the Sequential model from tf.keras

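import tensorflow as tf
import tensorflow_hub as hub

# Batches of 224x224 RGB images in, 2 class probabilities out
INPUT_SHAPE = [None, 224, 224, 3]
OUTPUT_SHAPE = 2
# MODEL_URL must be set to the TensorFlow Hub URL of MobileNet V2 before this runs

# Create a function to build a Keras model
def create_model(input_shape=INPUT_SHAPE, output_shape=OUTPUT_SHAPE, model_url=MODEL_URL):
    print("Building model with:", model_url)
    # Set up the model: pre-trained feature extractor + softmax output layer
    model = tf.keras.Sequential([
        hub.KerasLayer(model_url),
        tf.keras.layers.Dense(units=output_shape, activation="softmax")
    ])
    # Compile the model
    model.compile(
        loss=tf.keras.losses.BinaryCrossentropy(),
        optimizer=tf.keras.optimizers.Adam(),
        metrics=["accuracy"]
    )
    # Build the model
    model.build(input_shape)
    return model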

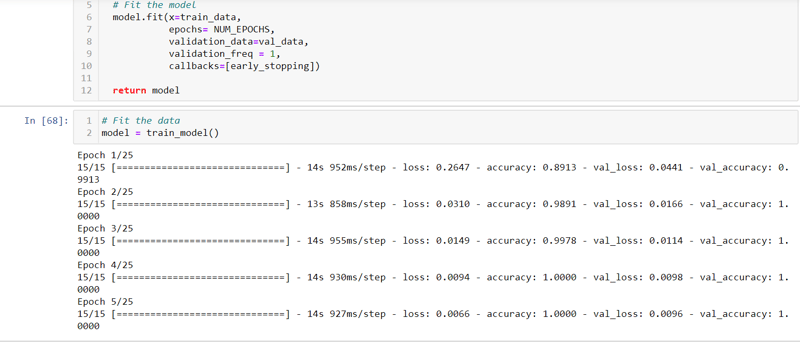

5. Train a model

Train the model on train_data, validating on val_data, for 25 epochs.

Also, add an EarlyStopping callback.

model = create_model()

model.summary()
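The training call itself is not shown in the post. Below is a minimal sketch, assuming train_data and val_data are the batched datasets created earlier. The helper name train_model and the choice to monitor val_accuracy with patience=3 are assumptions, not settings taken from the post. EarlyStopping ends training once the monitored metric has stopped improving for patience epochs, so a run may use fewer than the 25 epochs.

```python
import tensorflow as tf

NUM_EPOCHS = 25  # number of epochs given in the text

def train_model(model, train_data, val_data, epochs=NUM_EPOCHS, patience=3):
    """Fit model on train_data, validate on val_data, stop early (hypothetical helper)."""
    # Stop when validation accuracy has not improved for `patience` epochs (assumed settings)
    early_stopping = tf.keras.callbacks.EarlyStopping(monitor="val_accuracy",
                                                      patience=patience)
    history = model.fit(x=train_data,
                        epochs=epochs,
                        validation_data=val_data,
                        callbacks=[early_stopping])
    return history
```

For example, history = train_model(model, train_data, val_data) trains the model built by create_model(). history.history then holds the per-epoch loss and accuracy values.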

With this model, the validation loss (val_loss) is 0.0096 and the accuracy is almost 99.99%.

6. Evaluating predictions

Calling model.predict() on val_data returns a NumPy array of shape (number of validation images, 2). Each row holds the predicted probabilities of the two classes.
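The post only describes the output shape, so here is one way to read it. Below is a minimal sketch that turns the (n, 2) probability array into class names and an accuracy score, assuming the validation labels are the boolean arrays described earlier. The helper names get_pred_label and accuracy_from_predictions are hypothetical. np.argmax picks the most probable class per row, and on a boolean label it picks the position of True.

```python
import numpy as np

def get_pred_label(prediction_probabilities, class_names):
    """Return the class name with the highest predicted probability (hypothetical helper)."""
    return class_names[np.argmax(prediction_probabilities)]

def accuracy_from_predictions(predictions, true_labels):
    """Fraction of rows where the predicted and true class indices agree."""
    pred_idx = np.argmax(predictions, axis=1)   # most probable class per image
    true_idx = np.argmax(true_labels, axis=1)   # position of True in each boolean label
    return float(np.mean(pred_idx == true_idx))
```

For example, after predictions = model.predict(val_data), get_pred_label(predictions[0], unique_category) names the class predicted for the first validation image.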

7. Saving and reloading a trained model

Save the trained model using save_model from Keras.

Loading the model is a bit different from a regular load_model call:

import tensorflow_hub as hub
from tensorflow.keras.models import load_model

model = load_model(
    'model/model.h5', custom_objects={"KerasLayer": hub.KerasLayer})

Here, we have to pass custom_objects={"KerasLayer": hub.KerasLayer} to load_model alongside the model path. The saved model contains a TensorFlow Hub KerasLayer, which Keras cannot deserialize on its own.

8. Predict on custom data

Before predicting on new data, make sure it has the right shape (including a batch dimension) as well as the right size (224x224).

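import numpy as np
import tensorflow as tf
from matplotlib.pyplot import imread  # assumed image reader; returns an HxWx3 array

# Uses the reloaded `model` and the `unique_category` class names
def test_data(path):
    demo = imread(path)
    # Scale pixel values to 0-1, as during training
    demo = tf.image.convert_image_dtype(demo, tf.float32)
    # Resize to the model's 224x224 input size
    demo = tf.image.resize(demo, size=[224, 224])
    # Add a batch dimension: (224, 224, 3) -> (1, 224, 224, 3)
    demo = np.expand_dims(demo, axis=0)
    pred = model.predict(demo)
    result = unique_category[np.argmax(pred)]
    return result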

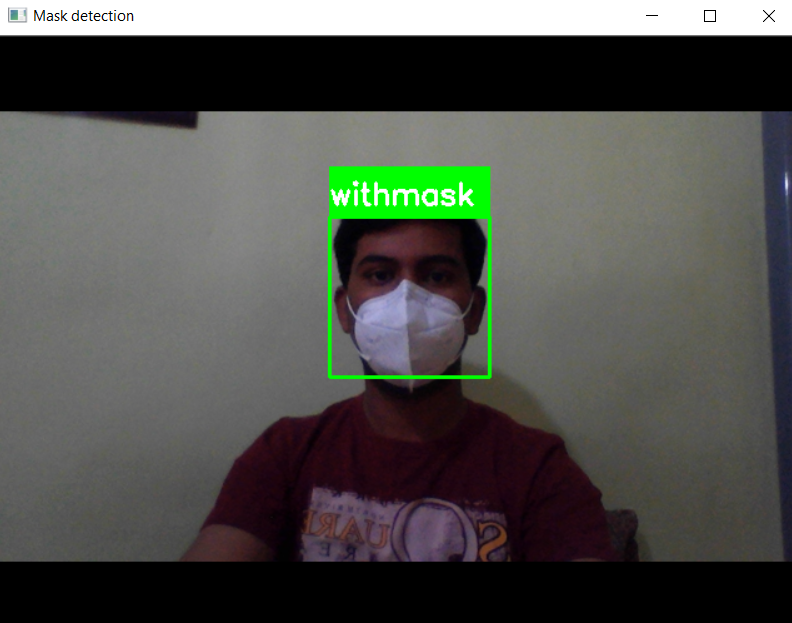

9. Real-time detection

In this step, we use our trained model together with OpenCV for real-time detection:

- Import the libraries.

- Capture webcam frames with cv2.VideoCapture().

- Use the Haar cascade frontal-face XML file for face detection.

- Predict with the loaded model.

- You can learn more about OpenCV projects here.

You can find the code repo here: patelvivekdev/End-to-end-mask-detector (build a deep learning model for mask detection).


Hey readers, thank you for your time. If you liked the blog, don't forget to appreciate it.

AI/ML Enthusiast | Blogger

If you have any queries or suggestions, feel free to contact me on
