Web scraping is the process of extracting data from websites. To refine my skills, I chose to do multiple projects using some of the awesome Python libraries. For this project, we are using requests-html, which its creators describe as "HTML Parsing for Humans".

The project idea is minimal, and the goal is to learn the basics of the requests-html library. We are going to go through four categories on the thehackernews.com website and get the titles and links of the latest articles in each. To keep things simple, we will print the story titles and links to the terminal.

So let's get started by creating a new project folder (I am naming the folder thn_scraper)

mkdir thn_scraper

Change into the folder and create a Python virtual environment

cd thn_scraper

python3 -m venv env

Activate the environment and install the package

source env/bin/activate

pip3 install requests-html

Let's start by creating a file named main.py

nvim main.py (I am using Neovim; you can use any editor of your choice)

from requests_html import HTMLSession

session = HTMLSession()

baseURL = 'https://thehackernews.com/search/label/'

categories = ['data%20breach', 'Cyber%20Attack', 'Vulnerability', 'Malware']

First, we import the HTMLSession class from the requests_html library we just installed and create a session by calling HTMLSession(). We store the base URL of the category pages in a variable named baseURL and the category names in a list. The category names are written exactly as they appear in the URL, so spaces are percent-encoded as %20.
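If you would rather write the category names in plain text, the standard library can do the percent-encoding. The following is a minimal sketch, not part of the original script: the helper name category_url and the constant BASE_URL are hypothetical, and it assumes the site accepts the same encoded labels used above.

```python
from urllib.parse import quote

# Same base URL as in main.py; the constant name is an assumption for this sketch.
BASE_URL = 'https://thehackernews.com/search/label/'


def category_url(label):
    """Build a category URL, percent-encoding spaces and other unsafe characters."""
    return BASE_URL + quote(label)


# Categories written in plain text instead of pre-encoded strings.
categories = ['data breach', 'Cyber Attack', 'Vulnerability', 'Malware']

for label in categories:
    print(category_url(label))
```

With this helper, the categories list stays readable and the encoding happens in one place.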

class CategoryScrape():
    catURL = ''
    r = ''

    def __init__(self, catURL, category):
        print(f'Scraping starting on Category : {category} \n')
        print(' ')
        self.catURL = catURL
        self.r = session.get(self.catURL)

Here, we create a class named CategoryScrape. Its initialization method, __init__, takes two parameters, catURL and category. It calls session.get with catURL and stores the response in the attribute r.
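The request in __init__ assumes the page always loads. As a hedged sketch of how a failed request could be handled, the hypothetical helper fetch_page below wraps session.get with a timeout and a status check. It is not part of the original script; it relies on the fact that the exceptions raised by requests (which requests-html builds on) are subclasses of OSError.

```python
def fetch_page(session, url, timeout=10):
    """Return the response for url, or None if the request fails.

    `session` is expected to behave like an HTMLSession: it needs a
    get(url, timeout=...) method whose result has raise_for_status().
    """
    try:
        response = session.get(url, timeout=timeout)
        # Raises an HTTPError for 4xx and 5xx status codes.
        response.raise_for_status()
    except OSError as exc:
        # requests.RequestException derives from OSError, so this also
        # covers connection errors, timeouts, and bad status codes.
        print(f'Could not fetch {url}: {exc}')
        return None
    return response
```

Inside the class, this could be used as self.r = fetch_page(session, self.catURL), with the scraping step skipped whenever the result is None.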

    def scrapeArticle(self):
        blog_posts = self.r.html.find('.body-post')
        for blog in blog_posts:
            storyLink = blog.find('.story-link', first=True).attrs['href']
            storyTitle = blog.find('.home-title', first=True).text
            print(storyTitle)
            print(storyLink)
            print("\n")

The class also has a method named scrapeArticle, which parses the HTML, finds the list of articles (elements with the class body-post), and then extracts the title and link of each one. Once the data is scraped for an article, its title and link are printed to the terminal.
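One thing to watch for: find(..., first=True) returns None when nothing matches the selector, so a post without a .story-link or .home-title element would raise an AttributeError. The sketch below shows one possible guard. The helper name extract_story is hypothetical and not part of the original script; it only assumes that each post offers the same find method used above.

```python
def extract_story(post):
    """Return (title, link) for one post element, or None if either part is missing."""
    link_element = post.find('.story-link', first=True)
    title_element = post.find('.home-title', first=True)

    # find(..., first=True) gives None when the selector matches nothing.
    if link_element is None or title_element is None:
        return None

    # attrs is a dict, so .get avoids a KeyError when href is absent.
    return title_element.text, link_element.attrs.get('href')
```

In the loop over blog_posts, you could call story = extract_story(blog) and print the title and link only when story is not None.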

for category in categories:
    category = CategoryScrape(f'{baseURL}{category}', category)
    category.scrapeArticle()

Now that the class and its methods are ready, we loop over every category in the list named categories. In each iteration, we create a new CategoryScrape object, passing in the full URL and the category name, and then call scrapeArticle on that object.

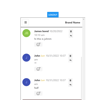

That is all the code we need for the project. Now we can go ahead and run it with the following command in the terminal

python3 main.py

Happy Coding, Keep Coding

