Apple's VideoToolbox framework has been around for many years. This year it was updated to handle low-latency encoding, among other things, so it is worth getting familiar with the framework, both in general and in order to use the newer features. Unfortunately, there are few examples of how to do this, and the official documentation is not much more than a collection of header files. Hopefully we can provide some useful examples in this and future articles.

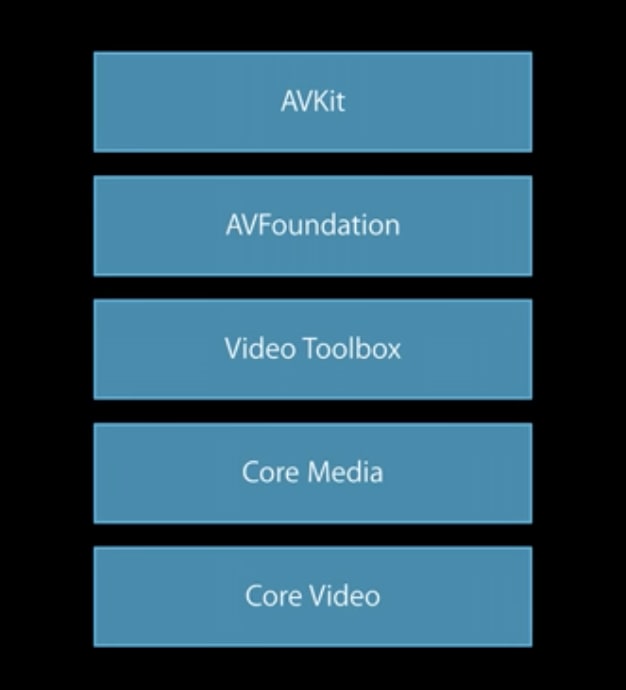

VideoToolbox is a fairly low-level framework, positioned as it is below AVKit and AVFoundation but on top of CoreMedia and CoreVideo.

You can do many things with both AVKit and AVFoundation. AVKit provides a lot of functionality at the view level. AVFoundation can also encode and decode streams of compressed video data (in the example accompanying this post, there is a display view based on AVFoundation's AVSampleBufferDisplayLayer). Sometimes, however, you will want greater control over the processes of compression and decompression. This is where VideoToolbox can be useful.

As an introduction to VideoToolbox, I will walk through the process of building a simple camera capture view that can display the captured data using either a Metal view layer or an AVSampleBufferDisplayLayer. The captured data is then also encoded with the help of VideoToolbox. Once a stream of data is being produced in this way, a next step would be to use that stream in some fashion - streaming it over a network, for example. And on the receiving side, VideoToolbox can then decode the data for display on that device. In fact, there is an example of the decoding process included in the code, which simply takes the encoded buffers and decodes them immediately. Not a realistic example, but useful as a reference.

Credit where it's due before we go further: I based my code on this repo, which I initially forked and adjusted to taste. I've since rebuilt the project from scratch, but the basic inspiration for the code remains. There is a useful WWDC video on VideoToolbox (seven years old now!), and the more recent one on low-latency encoding can be found here. I will keep the descriptions here mostly to the VideoToolbox-related code and just provide a high-level description of the other steps. The accompanying code should suffice as a more detailed example.

This project can be built using SwiftUI, so the first step is to create a new Xcode macOS app project selecting SwiftUI as the interface, but AppKit App Delegate for the Life cycle option. If you're taking the step-by-step approach, you can then delete the generated ContentView as we will be using our own VideoView.swift:

    import CoreMedia
    import SwiftUI

    struct VideoView: View {
        // Metal-backed view. Swap in AVDisplayViewRep to use AVSampleBufferDisplayLayer instead.
        let displayView = RenderViewRep()
        // let displayView = AVDisplayViewRep()

        var body: some View {
            displayView
                .frame(maxWidth: .infinity, maxHeight: .infinity)
        }

        // Forwards a captured sample buffer to the underlying display view.
        func render(_ sampleBuffer: CMSampleBuffer) {
            displayView.render(sampleBuffer)
        }
    }

    struct CameraView_Previews: PreviewProvider {
        static var previews: some View {
            VideoView()
        }
    }

Notice that we have the option to use either a Metal layer or an AVSampleBufferDisplayLayer; both are available in the sample code. We use this VideoView in our AppDelegate and create a window in which to use the view, like so:

    let cameraView = VideoView()
    var cameraWindow: NSWindow!

and

    // Create the window and set the content view.
    cameraWindow = NSWindow(
        contentRect: NSRect(x: 0, y: 0, width: 480, height: 300),
        styleMask: [.titled, .closable, .miniaturizable, .resizable, .fullSizeContentView],
        backing: .buffered, defer: false)
    cameraWindow.isReleasedWhenClosed = false
    cameraWindow.center()
    cameraWindow.setTitleWithRepresentedFilename("Camera view")
    cameraWindow.contentView = NSHostingView(rootView: cameraView)
    cameraWindow.makeKeyAndOrderFront(nil)

Here we set the contentView to an NSHostingView in order to use SwiftUI.

To handle the camera capture, we can build an AVManager class that implements AVCaptureVideoDataOutputSampleBufferDelegate:

    import AVFoundation

    protocol AVManagerDelegate: AnyObject {
        func onSampleBuffer(_ sampleBuffer: CMSampleBuffer)
    }

    class AVManager: NSObject, AVCaptureVideoDataOutputSampleBufferDelegate {
        // MARK: - Properties

        weak var delegate: AVManagerDelegate?

        var session: AVCaptureSession!
        private let sessionQueue = DispatchQueue(label: "se.eyevinn.sessionQueue")
        private let videoQueue = DispatchQueue(label: "videoQueue")
        private var connection: AVCaptureConnection!
        private var camera: AVCaptureDevice?

        func start() {
            requestCameraPermission { [weak self] granted in
                guard granted else {
                    print("no camera access")
                    return
                }
                self?.setupCaptureSession()
            }
        }

        private func setupCaptureSession() {
            // Session setup can block, so keep it off the main thread.
            sessionQueue.async {
                let deviceTypes: [AVCaptureDevice.DeviceType] = [
                    .builtInWideAngleCamera
                ]
                let discoverySession = AVCaptureDevice.DiscoverySession(
                    deviceTypes: deviceTypes,
                    mediaType: .video,
                    position: .front)

                let session = AVCaptureSession()
                session.sessionPreset = .vga640x480

                // Pick the first camera and a format whose pixel count matches 640x480.
                guard let camera = discoverySession.devices.first,
                      let format = camera.formats.first(where: {
                          let dimens = CMVideoFormatDescriptionGetDimensions($0.formatDescription)
                          return dimens.width * dimens.height == 640 * 480
                      }) else { fatalError("no camera or camera format") }

                do {
                    try camera.lockForConfiguration()
                    camera.activeFormat = format
                    camera.unlockForConfiguration()
                } catch {
                    print("failed to set up format")
                }

                self.camera = camera

                session.beginConfiguration()

                do {
                    let videoIn = try AVCaptureDeviceInput(device: camera)
                    if session.canAddInput(videoIn) {
                        session.addInput(videoIn)
                    } else {
                        print("failed to add video input")
                        return
                    }
                } catch {
                    print("failed to initialize video input")
                    return
                }

                let videoOut = AVCaptureVideoDataOutput()
                videoOut.videoSettings = [
                    kCVPixelBufferPixelFormatTypeKey as String: Int(kCVPixelFormatType_420YpCbCr8BiPlanarFullRange)
                ]
                videoOut.alwaysDiscardsLateVideoFrames = true
                // Sample buffers are delivered to captureOutput(_:didOutput:from:) on videoQueue.
                videoOut.setSampleBufferDelegate(self, queue: self.videoQueue)
                if session.canAddOutput(videoOut) {
                    session.addOutput(videoOut)
                } else {
                    print("failed to add video output")
                    return
                }

                session.commitConfiguration()
                session.startRunning()
                self.session = session
            }
        }

        private func requestCameraPermission(handler: @escaping (Bool) -> Void) {
            switch AVCaptureDevice.authorizationStatus(for: .video) {
            case .authorized: // The user has previously granted access to the camera.
                handler(true)
            case .notDetermined: // The user has not yet been asked for camera access.
                AVCaptureDevice.requestAccess(for: .video) { granted in
                    handler(granted)
                }
            case .denied, .restricted: // The user has denied access or can't grant it due to restrictions.
                handler(false)
            @unknown default:
                fatalError()
            }
        }

        // MARK: - AVCaptureVideoDataOutputSampleBufferDelegate

        func captureOutput(_ output: AVCaptureOutput,
                           didOutput sampleBuffer: CMSampleBuffer,
                           from connection: AVCaptureConnection) {
            delegate?.onSampleBuffer(sampleBuffer)
        }

        // MARK: - Types

        enum AVError: Error {
            case noCamera
            case cameraAccess
        }
    }

This means, by the way, that we need to enable the Camera entitlement (com.apple.security.device.camera set to YES) in our entitlements file. We also need to add the usual camera usage description (NSCameraUsageDescription) to the Info.plist file, stating why the app needs to use the camera.

Notice the delegate method onSampleBuffer. In the accompanying code, its implementation renders the current buffer to the camera view and then passes it on to the encoder, which compresses the data according to our needs. The encoder in this case is the H264Encoder class. The buffers we are working with here are, as you can see, CMSampleBuffers. A CMSampleBuffer can hold either compressed or uncompressed media samples. The buffers delivered by the camera capture output wrap uncompressed CoreVideo pixel buffers, while the buffers that come out of the H264Encoder hold compressed data. The H264Encoder class is where VideoToolbox is used. To encode, we need to create a "compression session" using VideoToolbox's VTCompressionSessionCreate:

    init(width: Int32, height: Int32, callback: @escaping (CMSampleBuffer) -> Void) {
        self.callback = callback

        // Require a hardware encoder; session creation fails if none is available.
        let encoderSpecification = [
            kVTVideoEncoderSpecification_RequireHardwareAcceleratedVideoEncoder: true as CFBoolean
        ] as CFDictionary

        let status = VTCompressionSessionCreate(
            allocator: kCFAllocatorDefault,
            width: width,
            height: height,
            codecType: kCMVideoCodecType_H264,
            encoderSpecification: encoderSpecification,
            imageBufferAttributes: nil,
            compressedDataAllocator: nil,
            outputCallback: outputCallback,
            refcon: Unmanaged.passUnretained(self).toOpaque(),
            compressionSessionOut: &session)

        print("H264Coder init \(status == noErr) \(status)")
    }

Here, the session, of type VTCompressionSession, is a member of the H264Encoder class. We can pass in an encoderSpecification on creation - this gives us control over hardware acceleration and, in the latest update, low-latency control. The init also assigns the necessary callback of type VTCompressionOutputCallback which provides the compressed result.
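The output callback and the call that actually submits frames are not shown in this post, so here is a minimal sketch of how the pieces can fit together. The class name SketchEncoder, its isReady property and the encode method are hypothetical names chosen for illustration; they are not taken from the accompanying repository. The sketch also passes nil as the encoder specification, which is an assumption made so that it runs on machines without a hardware encoder.

```swift
import CoreMedia
import VideoToolbox

// Hypothetical sketch: SketchEncoder and its members are illustrative names,
// not the H264Encoder class from the accompanying repository.
final class SketchEncoder {
    private var session: VTCompressionSession?
    private let callback: (CMSampleBuffer) -> Void

    // The callback is a C function pointer and cannot capture context, so the
    // encoder instance travels through the refcon given at session creation.
    private let outputCallback: VTCompressionOutputCallback = { refcon, _, status, _, sampleBuffer in
        guard status == noErr,
              let refcon = refcon,
              let sampleBuffer = sampleBuffer,
              CMSampleBufferDataIsReady(sampleBuffer) else { return }
        let encoder = Unmanaged<SketchEncoder>.fromOpaque(refcon).takeUnretainedValue()
        encoder.callback(sampleBuffer)
    }

    var isReady: Bool { session != nil }

    init(width: Int32, height: Int32, callback: @escaping (CMSampleBuffer) -> Void) {
        self.callback = callback
        let status = VTCompressionSessionCreate(
            allocator: kCFAllocatorDefault,
            width: width,
            height: height,
            codecType: kCMVideoCodecType_H264,
            encoderSpecification: nil, // assumption: let the system pick an encoder
            imageBufferAttributes: nil,
            compressedDataAllocator: nil,
            outputCallback: outputCallback,
            refcon: Unmanaged.passUnretained(self).toOpaque(),
            compressionSessionOut: &session)
        if status != noErr { print("session creation failed: \(status)") }
    }

    // Submits the uncompressed pixel buffer of a captured sample for encoding.
    // The compressed result arrives asynchronously in outputCallback.
    func encode(_ sampleBuffer: CMSampleBuffer) {
        guard let session = session,
              let imageBuffer = CMSampleBufferGetImageBuffer(sampleBuffer) else { return }
        let status = VTCompressionSessionEncodeFrame(
            session,
            imageBuffer: imageBuffer,
            presentationTimeStamp: CMSampleBufferGetPresentationTimeStamp(sampleBuffer),
            duration: CMSampleBufferGetDuration(sampleBuffer),
            frameProperties: nil,
            sourceFrameRefcon: nil,
            infoFlagsOut: nil)
        if status != noErr { print("encode failed: \(status)") }
    }

    deinit {
        // Tear the session down so no further callbacks are scheduled.
        if let session = session { VTCompressionSessionInvalidate(session) }
    }
}
```

Because the refcon is passed unretained, the encoder has to outlive any frames still in flight. In this sketch that is left to the caller, for example by keeping the encoder as a long-lived property.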

With these building blocks in place, we are able to capture camera output and encode the result. I have also included an example of setting properties on the compression session after it has been created. In the example, I indicate that the stream should be encoded in real time:

    guard let compressionSession = session else { return }
    // Ask the encoder to keep up with a live source.
    let status = VTSessionSetProperty(compressionSession, key: kVTCompressionPropertyKey_RealTime, value: kCFBooleanTrue)
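Other properties are set the same way. The following is a rough sketch of a helper that applies a handful of common ones and returns the status of each call. The function name applySketchSettings is hypothetical, and the bit rate and key frame interval are placeholder values picked for illustration, not recommendations from the accompanying project.

```swift
import Foundation
import VideoToolbox

// Hypothetical helper: applies a few common compression properties and
// returns the OSStatus of each call, in order. Values are placeholders.
func applySketchSettings(to session: VTCompressionSession) -> [OSStatus] {
    var results: [OSStatus] = []

    func set(_ key: CFString, _ value: CFTypeRef?) {
        results.append(VTSessionSetProperty(session, key: key, value: value))
    }

    // Encode at capture pace rather than optimising for offline quality.
    set(kVTCompressionPropertyKey_RealTime, kCFBooleanTrue)
    // H.264 Main profile, with the level chosen automatically.
    set(kVTCompressionPropertyKey_ProfileLevel, kVTProfileLevel_H264_Main_AutoLevel)
    // No B-frames, so frames come out in the order they went in.
    set(kVTCompressionPropertyKey_AllowFrameReordering, kCFBooleanFalse)
    // Target average bit rate in bits per second (assumed value).
    set(kVTCompressionPropertyKey_AverageBitRate, NSNumber(value: 1_000_000))
    // Maximum number of frames between key frames (assumed value).
    set(kVTCompressionPropertyKey_MaxKeyFrameInterval, NSNumber(value: 60))

    // Optional: lets the encoder allocate its resources before the first frame.
    results.append(VTCompressionSessionPrepareToEncodeFrames(session))
    return results
}
```

Not every encoder supports every property, so it is worth checking each returned status rather than assuming that all of the calls succeeded.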

Next steps

There are additional methods available in VideoToolbox for preparing CMSampleBuffers for network transmission as Network Abstraction Layer (NAL) units. This would be a good next step for this project, as would an investigation into the details of decoding sessions in a similar fashion. Watch this space...
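To give a flavour of what that preparation involves: the H.264 data in an encoded CMSampleBuffer typically stores each NAL unit with a big-endian length prefix, whereas many network transports expect Annex B framing, where each NAL unit is preceded by a start code. The sketch below shows only that byte-level conversion on a plain Data value. The function name annexB(fromLengthPrefixed:lengthSize:) is hypothetical, and the default 4-byte length prefix is an assumption. In practice the prefix size, along with the SPS and PPS parameter sets, would be read from the format description, for example with CMVideoFormatDescriptionGetH264ParameterSetAtIndex.

```swift
import Foundation

// Hypothetical helper: rewrites length-prefixed NAL units as Annex B by
// replacing each big-endian length prefix with a 00 00 00 01 start code.
// Assumes a 4-byte prefix unless told otherwise.
func annexB(fromLengthPrefixed data: Data, lengthSize: Int = 4) -> Data {
    let startCode: [UInt8] = [0, 0, 0, 1]
    let bytes = [UInt8](data)
    var output = Data()
    var offset = 0

    while offset + lengthSize <= bytes.count {
        // Read the big-endian NAL unit length.
        var nalLength = 0
        for i in 0..<lengthSize {
            nalLength = (nalLength << 8) | Int(bytes[offset + i])
        }
        offset += lengthSize

        // Stop on a zero-length or truncated unit instead of reading out of bounds.
        guard nalLength > 0, offset + nalLength <= bytes.count else { break }

        output.append(contentsOf: startCode)
        output.append(contentsOf: bytes[offset..<(offset + nalLength)])
        offset += nalLength
    }
    return output
}
```

Usage would look something like `let payload = annexB(fromLengthPrefixed: encodedBytes)`, where encodedBytes is a hypothetical Data value copied out of the sample buffer's block buffer.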

The repository accompanying this post is available here.

Alan Allard is a developer at Eyevinn Technology, the European leading independent consultancy firm specializing in video technology and media distribution.

If you need assistance in the development and implementation of this, our team of video developers are happy to help out. If you have any questions or comments just drop us a line in the comments section to this post.
