For a few months, my computer had been freezing for several minutes at a time, and the only way to get back to work was to reboot. It’s really annoying, so I decided to find out what was going on.

I have been working for three years on a Dell E7450 laptop. It has 8 GB of RAM and an Intel i5 CPU with 2 cores and 4 logical processors. That should be enough to work with, shouldn’t it?

I’m using Ubuntu 18.04 with i3 as my window manager. By the way, if you don’t know i3 yet, you should take a look at it.

I noticed that with only two applications open, Google Chrome and Slack, most of my memory was in use. Google Chrome is well known for using a lot of RAM, and the Slack application is built on Electron, which is bad news: Electron is a known resource hog.

Memory Usage

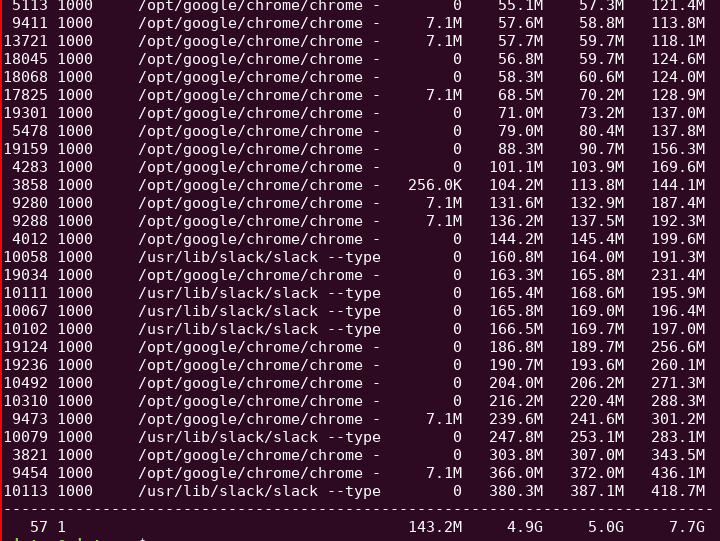

I ran some simple tests to check memory consumption. As you can see in this picture, just two open applications use nearly 100% of my memory.

This picture is taken from a screenshot of htop, a great alternative to top that can filter, sort, search, and show usage per core. What interested me here is memory, especially memory per process. To measure that, I’m using smem, a tool that reports the USS, PSS and RSS of each process.

USS stands for Unique Set Size. This is the amount of unshared memory unique to that process; it does not include shared memory. PSS, on the other hand, stands for Proportional Set Size. It adds to the unique memory (USS) a proportion of the shared memory: each shared region is divided by the number of processes sharing it. It shows how much physical memory each process actually uses, with shared memory truly represented as shared.

RSS stands for Resident Set Size. This is the amount of shared memory plus unshared memory used by each process. If any process shares memory, this will over-report the amount of memory actually used, because the same shared memory will be counted more than once - appearing again in each other process that shares the same memory. This metric can be unreliable, especially when processes have a lot of forks.
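To make the three metrics concrete, here is a minimal sketch that sums them from a file in the /proc/<pid>/smaps format. The function name `smaps_summary` is made up for this example, and USS is approximated as Private_Clean + Private_Dirty, which I assume is close to what smem reports; it is not smem's actual implementation.

```shell
#!/usr/bin/env bash
# Hypothetical helper: sum RSS, PSS and USS (in kB) from a file that
# follows the /proc/<pid>/smaps layout.
# Assumption: USS is approximated as Private_Clean + Private_Dirty.
smaps_summary() {
    awk '
        /^Rss:/           { rss += $2 }   # shared + unshared resident pages
        /^Pss:/           { pss += $2 }   # shared pages already divided by sharers
        /^Private_Clean:/ { uss += $2 }   # pages only this process maps
        /^Private_Dirty:/ { uss += $2 }
        END { printf "RSS=%d kB PSS=%d kB USS=%d kB\n", rss, pss, uss }
    ' "$1"
}
```

For the current shell, `smaps_summary /proc/$$/smaps` prints the three totals. Reading the smaps file of another user's process usually requires root.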

Here is the command I used:

smem -n -s pss -t -k -P chrome

If you're not familiar with the command line, you can use explainshell.com, a great website for dissecting commands.

To sum up, 57 processes match ‘chrome’ and use 4.9G of USS, 5.0G of PSS and 7.7G of RSS.

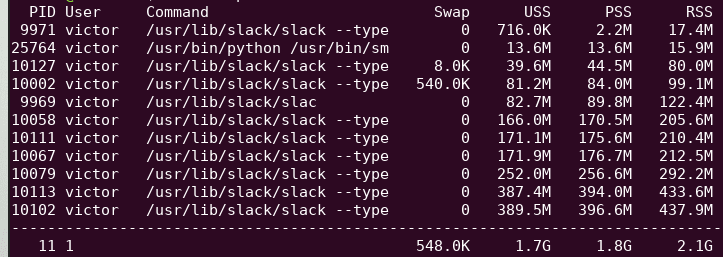

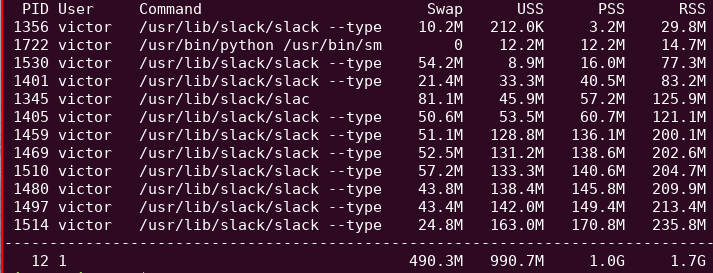

Let’s focus on Slack memory consumption:

Memory Limitation

Let’s say I want to limit the memory allocated to the Slack application. My first thought was to use Docker: by wrapping the application in a container, I can use Docker’s ability to limit a container’s resources.

For example:

docker run --memory=1G ….

This parameter sets the maximum amount of memory the container can use. It works, but it means I need to create a dedicated Docker image for every application whose memory I want to limit.

In fact, Docker uses a kernel technology called namespaces to isolate containers from other processes. To learn more about Docker architecture, you can take a look at the Docker overview page. Another kernel feature Docker relies on is the interesting one in my case: control groups, also known as cgroups. Strictly speaking, cgroups are not a namespace; they are the mechanism that limits the physical resources a group of processes can use.

Let’s use it:

# Create a group for memory named “slack_group”

cgcreate -g "memory:slack_group" -t victor:victor

# Specify memory limit to 1G for this group

cgset -r memory.limit_in_bytes=1G "slack_group"

To check that the memory limit is correctly applied, look in the directory /sys/fs/cgroup/memory/slack_group/, specifically at the file memory.limit_in_bytes.
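As a small sketch of that check, the helpers below convert a size such as 1G into bytes and compare it with the content of a limit file. Both function names are hypothetical, and I assume the kernel stores the suffixes K, M and G as binary multiples (1G = 1073741824 bytes).

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a size such as 512M or 1G into bytes.
# Assumption: suffixes are binary multiples, as in the cgroup v1 memory files.
to_bytes() {
    local value="${1%[KMGkmg]}"
    case "$1" in
        *[Kk]) echo $(( value * 1024 )) ;;
        *[Mm]) echo $(( value * 1024 * 1024 )) ;;
        *[Gg]) echo $(( value * 1024 * 1024 * 1024 )) ;;
        *)     echo "$value" ;;
    esac
}

# Hypothetical helper: compare a memory.limit_in_bytes file with an expected size.
check_limit() {
    local file="$1" expected actual
    expected=$(to_bytes "$2")
    actual=$(cat "$file")
    if [ "$actual" = "$expected" ]; then
        echo "limit OK: $expected bytes"
    else
        echo "limit mismatch: expected $expected, found $actual"
        return 1
    fi
}
```

With the group created above, the call would be `check_limit /sys/fs/cgroup/memory/slack_group/memory.limit_in_bytes 1G`.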

# Launch slack application in this group

cgexec -g "memory:slack_group" slack

# If needed, we can remove the group

cgdelete "memory:slack_group"

In my case, I use cgroups only to limit memory allocated but it’s also possible to limit CPU.
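For CPU, a rough sketch under cgroup v1 could rely on the cpu controller's cpu.cfs_period_us and cpu.cfs_quota_us files: the quota is the CPU time the group may use per period. The helper names below are made up, the commands are only printed rather than executed, and I have not tested this setup on my laptop.

```shell
#!/usr/bin/env bash
# Hypothetical helper: translate "percent of one CPU" into a CFS quota in
# microseconds for a given period (100000 us is assumed as the default period).
cpu_quota_us() {
    local percent="$1" period_us="${2:-100000}"
    echo $(( period_us * percent / 100 ))
}

# Print (not run) the libcgroup commands that would cap a group at that share.
print_cpu_limit_commands() {
    local group="$1" percent="$2" period_us="${3:-100000}"
    echo "cgcreate -g cpu:$group"
    echo "cgset -r cpu.cfs_period_us=$period_us $group"
    echo "cgset -r cpu.cfs_quota_us=$(cpu_quota_us "$percent" "$period_us") $group"
}
```

For example, `print_cpu_limit_commands slack_group 50` prints the commands for capping the group at half of one core.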

Then, let’s see if it works.

As you can see, PSS is capped at exactly 1G, but swap usage has now increased to 500M. The application is a bit slow and switching from one workspace to another takes a while, but it’s still comfortable to use.

Let’s disable swap for this group to see if it still works.

echo 0 > /sys/fs/cgroup/memory/slack_group/memory.swappiness

Guess what? It doesn’t work: the application stops responding and some processes are killed. In fact, the OOM Killer (Out-of-memory Killer) is in charge of killing processes in order to free up memory for the system. It selects the best candidate for elimination based on a score maintained by the kernel. You can see this score in:

/proc/${PID}/oom_score
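To see which processes the kernel currently considers the best candidates, a minimal sketch can walk the numeric directories under /proc and sort them by score (a higher score means a more likely victim). The function name `oom_top` and its two optional arguments are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "<oom_score> <pid> <command name>" for each
# process, highest score first.
# $1: proc root (defaults to /proc), $2: number of rows (defaults to 10).
oom_top() {
    local proc_root="${1:-/proc}" count="${2:-10}" dir pid
    for dir in "$proc_root"/[0-9]*; do
        # Skip entries that vanished or that we are not allowed to read.
        [ -r "$dir/oom_score" ] || continue
        pid="${dir##*/}"
        printf '%s %s %s\n' \
            "$(cat "$dir/oom_score" 2>/dev/null)" \
            "$pid" \
            "$(cat "$dir/comm" 2>/dev/null)"
    done | sort -rn | head -n "$count"
}
```

Running `oom_top /proc 5` lists the five processes with the highest scores at that moment.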

Automation

I know how to limit memory consumption for one application, but it’s definitely not sustainable in the long run: I would have to create a group for each application whose memory usage I want to limit. Thanks to this StackExchange question, I found a script that does the job. It’s available here:

This script creates a group each time it’s launched and removes it when it’s killed.

Easy to use:

limitmem.sh 1G slack
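To give an idea of what such a wrapper does, here is a minimal sketch built from the cgcreate, cgset, cgexec and cgdelete commands shown earlier. It is not the script mentioned above: the function name `run_limited`, the group naming scheme and the `DRY_RUN` switch are all assumptions made for this example, and creating the group will likely need root or suitable ownership options.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: run a command inside a throwaway memory cgroup.
# With DRY_RUN=1 the libcgroup commands are printed instead of executed.
run_limited() {
    local limit="$1"; shift
    local group="limitmem_$$"   # one group per wrapper process (assumed naming)
    local run="" status
    [ "${DRY_RUN:-0}" = "1" ] && run="echo"

    $run cgcreate -g "memory:$group" || return 1
    $run cgset -r "memory.limit_in_bytes=$limit" "$group"
    $run cgexec -g "memory:$group" "$@"
    status=$?
    # Remove the group once the command has exited.
    $run cgdelete "memory:$group"
    return "$status"
}
```

Calling `DRY_RUN=1 run_limited 1G slack` only prints the four commands, which is a safe way to check them before running anything for real.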

That’s fine, but this script is a piece of code that I will have to maintain, update, upgrade, etc.

Another way to integrate memory limit to my system is to use systemd.

systemd is a Linux initialization system and service manager that includes features like on-demand starting of daemons, mount and automount point maintenance, snapshot support, and processes tracking using Linux control groups. systemd provides a logging daemon and other tools and utilities to help with common system administration tasks. Reference.

To do this, I have to wrap my application into a systemd service.

[Unit]

Description=slack

After=network.target

[Service]

User=victor

Group=victor

Environment=DISPLAY=:0

ExecStart=/usr/bin/slack

#Restart=on-failure

KillMode=process

MemoryAccounting=true

MemoryMax=1G

[Install]

WantedBy=multi-user.target

By specifying the MemoryAccounting and MemoryMax parameters, I’m able to limit the memory allocated. As explained in the systemd documentation, systemd organizes processes with cgroups. In my opinion, using systemd is far more sustainable than maintaining a custom shell script, as it has become the standard for most Linux distributions.
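Since the same unit would be needed for every application I want to limit, one option is to generate it from a template. The sketch below prints a unit modelled on the one above; the function name `make_limited_unit` and its arguments are hypothetical, and the directives are simply the ones already used for Slack.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a systemd unit with a memory limit.
# $1: unit description, $2: executable path, $3: memory limit,
# $4: user and group to run as (defaults to the current user).
make_limited_unit() {
    local name="$1" exec_path="$2" limit="$3" user="${4:-$(id -un)}"
    cat <<EOF
[Unit]
Description=$name
After=network.target

[Service]
User=$user
Group=$user
Environment=DISPLAY=:0
ExecStart=$exec_path
KillMode=process
MemoryAccounting=true
MemoryMax=$limit

[Install]
WantedBy=multi-user.target
EOF
}
```

For example, `make_limited_unit slack /usr/bin/slack 1G victor | sudo tee /etc/systemd/system/slack.service`, followed by `sudo systemctl daemon-reload` and `sudo systemctl start slack`, would install and start the service.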

Conclusion

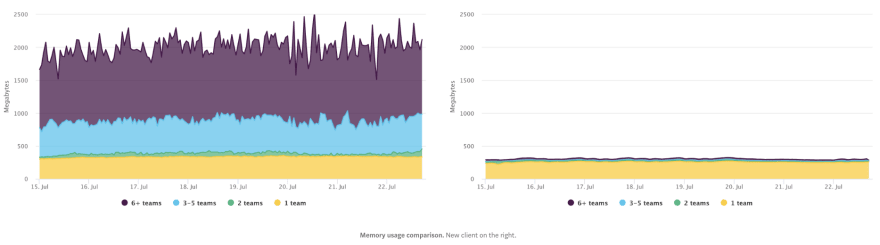

As I was writing this article, Slack Engineering published an article announcing a new Slack desktop application, and I quote:

One of our primary metrics has been memory usage

To be clear, my goal was not to prove how bad the Slack application’s memory management is. I simply wanted to dig deeper into memory management on the operating system I use. I used the Slack application here, but I could have used any other.

By the way, all of this makes me think: do I have to let a messaging application consume 30% of my memory? More generally, do I have to let any application manage its own memory consumption? Could the concept of a container (an application in an isolated environment) be applied to my everyday applications?

Don’t hesitate to tell me if I forgot a way to limit memory consumption and let me know what you think about it.

Thanks to Nastasia and Mathieu for their time and proofreading.

Cover photo by Harrison Broadbent on Unsplash

Oldest comments (3)

You could also try zram. I have found lz4 to still be quite fast, and it seems to compress around 4x. I have 50% of my memory compressed.

zramctl

NAME ALGORITHM DISKSIZE DATA COMPR TOTAL STREAMS MOUNTPOINT

/dev/zram7 lz4 1.9G 477.4M 109.2M 116.5M 8 [SWAP]

/dev/zram6 lz4 1.9G 472.8M 108.2M 115M 8 [SWAP]

/dev/zram5 lz4 1.9G 474.6M 109.7M 116.5M 8 [SWAP]

/dev/zram4 lz4 1.9G 480.2M 109.7M 117.3M 8 [SWAP]

/dev/zram3 lz4 1.9G 476.7M 109.9M 116.8M 8 [SWAP]

/dev/zram2 lz4 1.9G 479.8M 108.1M 115.2M 8 [SWAP]

/dev/zram1 lz4 1.9G 474.4M 107.8M 114.6M 8 [SWAP]

/dev/zram0 lz4 1.9G 475.6M 107.9M 115M 8 [SWAP]

Hi, thanks for the script.

I want to limit the RAM usage of a Python script the systemd way (limitmem.sh works for me, though). I tried the following systemd service:

pylimitmem.service

After this, I ran the following command:

With the following script to test whether it works:

Then the OS still crashes immediately...

Could you please give me some suggestions? Thanks.

Thanks. Great walkthrough.