Load balancing helps you scale horizontally by adding resources (increasing the number of servers), while caching enables you to make better use of the resources you already have.

Caching works on the principle that recently requested data is likely to be requested again.

A cache is a hardware or software component that serves data which is either frequently requested or expensive to compute. The cache stores the response and serves it again when the same data is requested.

Caches can exist at all levels of an architecture, but they are often found at the level nearest to the client, where they can return data quickly without taxing downstream levels.

For example:

A client requests an image from a server. Initially, the server looks up the image in the DB and returns it to the client. However, the response can also be cached:

- On the server side (e.g., in a reverse proxy)

- On the client side (e.g., in the browser cache or a forward proxy)

This way, a copy of the response is saved and the client does not have to ask the server for the same data again and again. This reduces the time the client takes to load the data and also reduces the load on the server.

If the response for a particular request is found in the cache, it is called a cache hit; otherwise, it is called a cache miss.
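To make hits and misses concrete, here is a minimal sketch of an in-memory cache that counts both. It assumes a plain Python dict as the cache and a hypothetical `fetch_from_db` function standing in for the server/DB lookup; neither is from the original article.

```python
def fetch_from_db(key):
    """Hypothetical slow data source standing in for the server/DB."""
    return f"value-for-{key}"


class CountingCache:
    """Tiny in-memory cache that records hits and misses."""

    def __init__(self):
        self._store = {}
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self._store:
            self.hits += 1            # cache hit: served from memory
            return self._store[key]
        self.misses += 1              # cache miss: go to the slow source
        value = fetch_from_db(key)
        self._store[key] = value      # keep a copy for the next request
        return value


cache = CountingCache()
cache.get("image-1")                  # first request is a miss
cache.get("image-1")                  # repeat request is a hit
print(cache.hits, cache.misses)       # 1 1
```

The second request for `image-1` never reaches `fetch_from_db`, which is exactly the saving described above.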

Pros:

- Improves read performance (lower latency)

- Reduces load on downstream systems (higher throughput)

Cons:

- Increases the complexity of the system

- Consumes resources (e.g., memory)

Cache Invalidation & Eviction

Cache Invalidation:

Updating or removing stale cached data is known as cache invalidation. The most common technique used for this purpose is TTL (Time To Live): each cached entry is given a time period for which it stays in memory, after which it expires and fresh data is requested from the server.
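The TTL idea can be sketched as a dict that stores an expiry timestamp next to each value. This is a minimal illustration, not a production cache: the class name `TTLCache` and the injectable `clock` parameter are assumptions made here so expiry can be demonstrated without waiting.

```python
import time


class TTLCache:
    """Minimal TTL cache: each entry expires ttl_seconds after it is stored."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock            # injectable clock makes expiry easy to demo
        self._store = {}              # key -> (value, expires_at)

    def set(self, key, value):
        self._store[key] = (value, self.clock() + self.ttl)

    def get(self, key):
        """Return the cached value, or None if it is missing or expired."""
        entry = self._store.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self._store[key]      # expired: invalidate so fresh data is fetched
            return None
        return value


now = [0.0]                           # fake clock so the example runs instantly
cache = TTLCache(ttl_seconds=60, clock=lambda: now[0])
cache.set("user:42", {"name": "Ada"})
print(cache.get("user:42"))           # {'name': 'Ada'}
now[0] = 61.0                         # jump past the 60-second TTL
print(cache.get("user:42"))           # None
```

Returning `None` on expiry signals the caller to fetch fresh data from the server; a real cache would also need a way to distinguish "expired" from a legitimately cached `None`.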

Cache Eviction:

As cache memory is limited, we have to decide which cached data to keep and which to discard.

E.g., our storage can hold only 100 keys, all of them are currently filled, and we want to add one more key. We have to kick out an existing cached key to make room for the new one. This process is known as cache eviction.

Below are some common policies used to decide which cached key to evict:

- FIFO (First In, First Out): the key that was inserted earliest is removed to make room for the new one.

- LRU (Least Recently Used): the key that has gone the longest without being accessed is discarded.

- LFU (Least Frequently Used): the key that has been accessed the fewest times is discarded. E.g., if there are 3 keys k1, k2, k3 that have been used 7, 3 and 9 times respectively, k2 is discarded first, as it has been used only 3 times, which is the least.
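The three policies can be sketched side by side as capacity-bounded Python caches. `FIFOCache` and `LRUCache` use `collections.OrderedDict` to track order; `LFUCache` keeps a per-key use count and picks the minimum on eviction (an O(n) scan, which is fine for illustration but not how high-performance LFU caches are built). The class names are hypothetical, and counting a `put` as one use in the LFU sketch is an assumption.

```python
from collections import OrderedDict


class FIFOCache:
    """Evicts the key that was inserted first; reads do not affect order."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.data = OrderedDict()

    def get(self, key):
        return self.data.get(key)

    def put(self, key, value):
        if key not in self.data and len(self.data) >= self.capacity:
            self.data.popitem(last=False)      # drop the oldest insertion
        self.data[key] = value                 # updating keeps original position


class LRUCache:
    """Evicts the key that has gone longest without being accessed."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.data = OrderedDict()

    def get(self, key):
        if key not in self.data:
            return None
        self.data.move_to_end(key)             # mark as most recently used
        return self.data[key]

    def put(self, key, value):
        if key in self.data:
            self.data.move_to_end(key)
        elif len(self.data) >= self.capacity:
            self.data.popitem(last=False)      # least recently used sits at the front
        self.data[key] = value


class LFUCache:
    """Evicts the key with the fewest uses (a put counts as one use)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.data = {}
        self.counts = {}

    def get(self, key):
        if key not in self.data:
            return None
        self.counts[key] += 1
        return self.data[key]

    def put(self, key, value):
        if key not in self.data and len(self.data) >= self.capacity:
            victim = min(self.counts, key=self.counts.get)  # least frequently used
            del self.data[victim]
            del self.counts[victim]
        self.data[key] = value
        self.counts[key] = self.counts.get(key, 0) + 1


fifo = FIFOCache(capacity=2)
fifo.put("a", 1)
fifo.put("b", 2)
fifo.get("a")                                  # read does not save "a"
fifo.put("c", 3)                               # evicts "a", the first key inserted
print(list(fifo.data))                         # ['b', 'c']

lru = LRUCache(capacity=2)
lru.put("a", 1)
lru.put("b", 2)
lru.get("a")                                   # "a" becomes most recently used
lru.put("c", 3)                                # evicts "b"
print(list(lru.data))                          # ['a', 'c']

lfu = LFUCache(capacity=3)
for key, uses in [("k1", 7), ("k2", 3), ("k3", 9)]:
    lfu.put(key, key.upper())                  # first use
    for _ in range(uses - 1):
        lfu.get(key)                           # remaining uses
lfu.put("k4", "K4")                            # evicts k2 (3 uses)
print(sorted(lfu.data))                        # ['k1', 'k3', 'k4']
```

The same access sequence (`a`, `b`, read `a`, add `c`) evicts a different key under FIFO and LRU, which is the practical difference between the two policies. The LFU run reproduces the k1/k2/k3 example: the least-used key, k2, is evicted.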

Cache Patterns

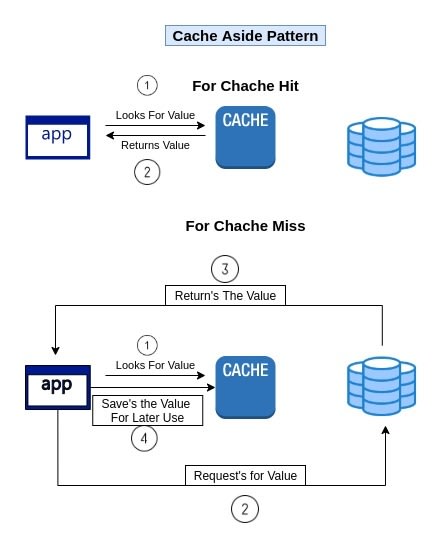

Cache Aside pattern:

This is the most commonly used pattern. The cache does not interact with the DB or server; it interacts only with the application.

When a client requests some data through the application, the application looks for it in the cache. If it is found, the application returns it. Otherwise, the application requests the data from the server (or DB), returns it to the client, and also stores it in the cache.

At no point does the cache interact with the server or DB directly.

When the data in the DB changes, the corresponding cached data also has to be updated or invalidated, either by application code, through TTL expiry, or through a combination of both.
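A minimal cache-aside sketch, assuming two plain dicts as hypothetical stand-ins for the cache and the database. The application code (`get_product`, `update_product`, both hypothetical names) does all the work; the cache never talks to the database. On writes, this sketch invalidates the cached copy in application code, which is one of the options mentioned above; a TTL could be layered on top.

```python
# Hypothetical stand-ins: one dict plays the cache, another plays the database.
cache = {}
database = {"product:1": {"name": "Keyboard", "price": 49}}


def get_product(key):
    """Cache-aside read: the application checks the cache, then the DB."""
    value = cache.get(key)
    if value is not None:
        return value                  # cache hit
    value = database.get(key)         # cache miss: the application reads the DB itself
    if value is not None:
        cache[key] = value            # the application populates the cache
    return value


def update_product(key, value):
    """Cache-aside write: update the DB, then invalidate the cached copy."""
    database[key] = value
    cache.pop(key, None)              # the next read repopulates from the DB


get_product("product:1")              # miss: loaded from the DB and cached
print("product:1" in cache)           # True
update_product("product:1", {"name": "Keyboard", "price": 59})
print("product:1" in cache)           # False
```

The miss path shows why cache misses are expensive here: one cache lookup, one DB read and one cache write, all performed by the application.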

Pros of cache aside:

- Caches only what is needed

Cons of cache Aside:

- Cache misses are expensive

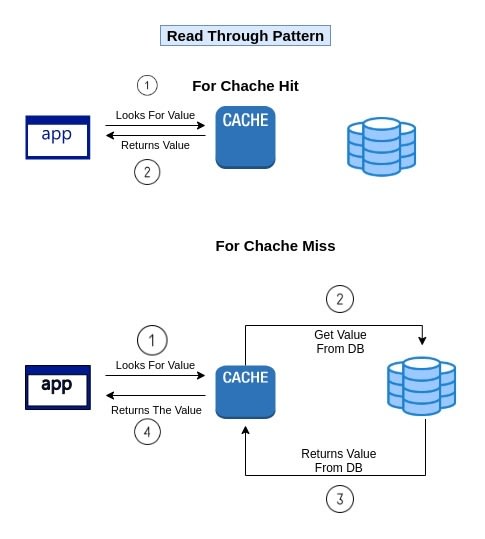

Read Through pattern:

Here the cache sits between the application and the server (or DB). The application never interacts with the server directly; it only talks to the cache.

When a client requests some data, the application looks for it in the cache. If it is not found, the cache itself requests the data from the server, stores it, and returns it to the application.
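In a read-through setup the loading logic moves into the cache. The sketch below assumes the cache is constructed with a hypothetical `loader` function that knows how to read from the DB; the caller only ever calls `cache.get`. The `loads` list is there purely to show how many trips reach the database.

```python
class ReadThroughCache:
    """The cache owns the loader; callers never touch the data source directly."""

    def __init__(self, loader):
        self._loader = loader         # hypothetical function that reads from the DB
        self._store = {}

    def get(self, key):
        if key not in self._store:
            self._store[key] = self._loader(key)   # miss: the cache fetches it
        return self._store[key]


# Hypothetical backing store and loader.
DB = {"user:1": "Ada", "user:2": "Linus"}
loads = []


def load_from_db(key):
    loads.append(key)                 # record each trip to the database
    return DB[key]


users = ReadThroughCache(load_from_db)
users.get("user:1")                   # miss: the cache calls load_from_db
users.get("user:1")                   # hit: served from the cache
print(loads)                          # ['user:1']
```

Compared with cache aside, the application code shrinks to a single `get` call, at the cost of coupling the cache to the data source through the loader.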

Pros of Read Through:

- Caches only what is needed

Cons of Read Through:

- Cache misses are expensive

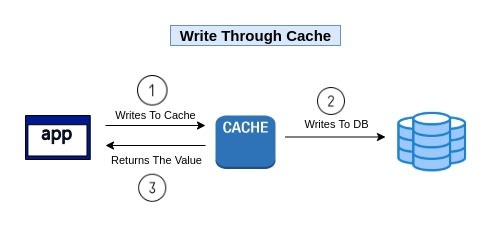

Write Through pattern:

The write-through strategy adds or updates data in the cache whenever data is written to the database. Because the cache is updated every time data is written to the database, the data in the cache is always current.
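A minimal write-through sketch, assuming a plain dict as a hypothetical database. Each `write` updates the database and the cache in the same call, so a later `read` never sees stale data. Writing to the database first is a design choice made here: if that write raised an error, the cache would not be updated with data that was never persisted.

```python
class WriteThroughCache:
    """Every write goes to the database and the cache in the same call."""

    def __init__(self, database):
        self.database = database      # hypothetical backing store (a dict here)
        self.cache = {}

    def write(self, key, value):
        self.database[key] = value    # persist first...
        self.cache[key] = value       # ...then keep the cache in step

    def read(self, key):
        if key in self.cache:
            return self.cache[key]
        value = self.database[key]    # fall back to the DB on a miss
        self.cache[key] = value
        return value


db = {}
store = WriteThroughCache(db)
store.write("order:7", "shipped")
print(db["order:7"], store.cache["order:7"])   # shipped shipped
```

Every `write` pays for two updates, which is the write cost listed below, and anything written lands in the cache whether or not it is ever read.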

Pros of Write Through:

- Up to Date Data

Cons of Write Through:

- Writes are expensive (every write goes to both the cache and the database)

- Data that no one ever reads may still be added to the cache

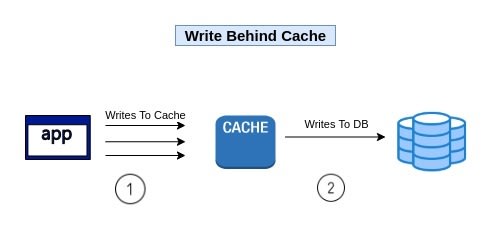

Write Behind pattern:

It is similar to write-through, except that instead of updating the database immediately, the cache waits for some time and then sends the pending writes to the database in bulk.
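A minimal write-behind sketch, assuming a dict as a hypothetical database and a simple size-based flush trigger (`batch_size`). Real systems typically also flush on a timer; that is left out here to keep the example deterministic. Writes touch only memory until a batch is full, then all pending writes go to the database in one bulk `update`.

```python
class WriteBehindCache:
    """Writes land in the cache immediately; the DB is updated later in batches."""

    def __init__(self, database, batch_size=3):
        self.database = database      # hypothetical backing store (a dict here)
        self.batch_size = batch_size  # assumed flush trigger (no timer in this sketch)
        self.cache = {}
        self.dirty = {}               # pending writes not yet in the database

    def write(self, key, value):
        self.cache[key] = value       # fast path: only memory is touched
        self.dirty[key] = value
        if len(self.dirty) >= self.batch_size:
            self.flush()

    def flush(self):
        """Send all pending writes to the database in one bulk update."""
        self.database.update(self.dirty)
        self.dirty.clear()


db = {}
wb = WriteBehindCache(db, batch_size=3)
wb.write("a", 1)
wb.write("b", 2)
print(db)                             # {}
wb.write("c", 3)                      # third pending write triggers a flush
print(db)                             # {'a': 1, 'b': 2, 'c': 3}
```

Between flushes the database lags behind the cache, and anything still in `dirty` would be lost if the cache process died, which is where the reliability and consistency cons below come from.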

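Pros of Write Behind:

- No write penalty (writes complete as soon as the cache is updated)

Cons of Write Behind:

- Reliability (pending writes can be lost if the cache fails before they are flushed to the database)

- Lack of consistency (the database lags behind the cache until pending writes are flushed)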

Where Does the Cache Reside

Depending on the caching pattern used and other parameters, the cache can be placed at various levels, such as:

- Client side (like the browser cache or OS cache)

- Forward proxy

- Reverse proxy

- Application layer itself
