Hello, in this tutorial I will describe the steps needed to migrate indices from one Elasticsearch instance to another. To accomplish this I will use a fantastic CLI called elasticsearch-dump to perform the necessary operations (PUT/GET) and Minio, an S3-compatible object storage, to temporarily store the ES indices.

Let's dive in...

Step1. Clone the repository and build the image.

1. git clone https://github.com/elasticsearch-dump/elasticsearch-dump

2. cd elasticsearch-dump

3. Add any missing packages (curl, nano, etc.) to the Dockerfile

### Start DockerFile
FROM node:14-buster-slim
LABEL maintainer="ferronrsmith@gmail.com"
ARG ES_DUMP_VER
ENV ES_DUMP_VER=${ES_DUMP_VER:-latest}
ENV NODE_ENV production

RUN npm install elasticdump@${ES_DUMP_VER} -g

# Install curl, then clear the apt package lists to keep the image small.
# (RUN uses /bin/sh, which does not expand {a,b} braces.)
RUN apt-get update \
 && apt-get install -y curl \
 && rm -rf /var/lib/apt/lists/*

COPY docker-entrypoint.sh /usr/local/bin/
ENTRYPOINT ["docker-entrypoint.sh"]
CMD ["elasticdump"]

4. docker build . -t <dockerhub-user>/<image-name>:<release-version>

5. docker login -u <username>

6. docker push <dockerhub-user>/<image-name>:<release-version>
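The Dockerfile declares an ES_DUMP_VER build argument that falls back to latest, so the build can pin a specific elasticdump release. A minimal sketch, assuming hypothetical values for the Docker Hub user and image name; the version 6.68.0 is taken from the image tag used later in this tutorial and is only an illustration. The commands are printed rather than executed.

```shell
#!/bin/sh
# Hypothetical values: replace with your own Docker Hub user, image name and version.
DOCKERHUB_USER="example-user"
IMAGE_NAME="elasticdump"
ES_DUMP_VER="6.68.0"

# Compose the full image reference once so build and push cannot drift apart.
image_ref() {
  printf '%s/%s:%s\n' "$1" "$2" "$3"
}

IMAGE="$(image_ref "$DOCKERHUB_USER" "$IMAGE_NAME" "$ES_DUMP_VER")"

# Print the commands instead of running them; remove the echo to execute.
echo docker build . --build-arg "ES_DUMP_VER=$ES_DUMP_VER" -t "$IMAGE"
echo docker push "$IMAGE"
```

Pinning the version keeps rebuilds reproducible; without the build argument the image installs whatever elasticdump release is latest at build time.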

Step2. Create a Persistent Volume for the Kubernetes job

cat << EOF | kubectl apply -f -
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  labels:
    app.kubernetes.io/name: elasticdumpclient-pvc
  name: elasticdumpclient-pvc
  namespace: default
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
  storageClassName: <depends on the Cloud Provider>
  volumeMode: Filesystem
EOF
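Before moving on, it can help to confirm that the claim was actually provisioned. A minimal sketch, assuming kubectl points at the right cluster; the helper names pvc_phase and wait_for_pvc are hypothetical and only the kubectl get call is real.

```shell
#!/bin/sh
# Print the phase of a PersistentVolumeClaim (Pending, Bound or Lost).
pvc_phase() {
  kubectl get pvc "$1" -n "${2:-default}" -o jsonpath='{.status.phase}'
}

# Poll until the claim is Bound, or give up after the given number of tries.
wait_for_pvc() {
  name="$1"
  namespace="${2:-default}"
  tries="${3:-30}"
  while [ "$tries" -gt 0 ]; do
    if [ "$(pvc_phase "$name" "$namespace")" = "Bound" ]; then
      echo "PVC $name is Bound"
      return 0
    fi
    tries=$((tries - 1))
    sleep 2
  done
  echo "PVC $name is not Bound yet" >&2
  return 1
}
```

Usage: wait_for_pvc elasticdumpclient-pvc default. Note that storage classes using the WaitForFirstConsumer binding mode keep the claim Pending until a pod that mounts it is scheduled, so a Pending phase at this point is not necessarily an error.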

Step3. Create a new Kubernetes Job and mount the previously provisioned Persistent Volume.

cat << EOF | kubectl apply -f -
apiVersion: batch/v1
kind: Job
metadata:
  name: elasticdumpclient
  labels:
    app: elasticdumpclient
spec:
  template:
    spec:
      initContainers:
        - name: fix-data-dir-permissions
          image: alpine:3.16.2
          command:
            - chown
            - -R
            - 1001:1001
            - /data
          volumeMounts:
            - name: elasticdumpclient-pvc
              mountPath: /data
      containers:
        - name: elasticdumpclient
          image: thvelmachos/elasticdump:6.68.0-v1
          command: ["/bin/sh", "-ec", "sleep 460000"]
          imagePullPolicy: Always
          volumeMounts:
            - name: elasticdumpclient-pvc
              mountPath: /data
      restartPolicy: Never
      volumes:
        - name: elasticdumpclient-pvc
          persistentVolumeClaim:
            claimName: elasticdumpclient-pvc
  backoffLimit: 1
EOF
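The following steps need the name of the pod that the Job created. The Job controller labels its pods with job-name=<job>, so the name can be looked up instead of copied by hand. A minimal sketch, assuming the Job landed in the default namespace; the helper names job_pod_name and wait_for_job_pod are hypothetical.

```shell
#!/bin/sh
# Print the name of the first pod created by a Job.
# Pods created by a Job carry the label job-name=<job>.
job_pod_name() {
  kubectl get pods -n "${2:-default}" -l "job-name=$1" \
    -o jsonpath='{.items[0].metadata.name}'
}

# Block until the Job's pod reports Ready (the sleep container is running).
wait_for_job_pod() {
  kubectl wait --for=condition=Ready pod -n "${2:-default}" \
    -l "job-name=$1" --timeout="${3:-120s}"
}
```

Usage: wait_for_job_pod elasticdumpclient, then POD="$(job_pod_name elasticdumpclient)". The value of POD is what the <pod-name> placeholder stands for in the kubectl commands that follow.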

Step3. Upload a Script to the Kubernetes Job

kubectl cp <local-path>/migrate-es-indices.sh <pod-name>:/data/migrate-es-indices.sh

# cat migrate-es-indices.sh

#!/bin/sh

arr=$(curl -X GET -L 'http://elasticsearch-master.default.svc.cluster.local:9200/_cat/indices/?pretty&s=store.size:desc' | grep -E '[^access](logs-)' | awk '{print $3}')

for idx in $arr; do

echo "Working: $idx"

elasticdump --s3AccessKeyId "<access-key>" --s3SecretAccessKey "<secret-key>" --input=http://http://elasticsearch-master.default.svc.cluster.local:9200/$idx --output "s3://migrate-elastic-indices/$idx.json" --s3ForcePathStyle true --s3Endpoint https://<minio-endpoint>:<port>

done

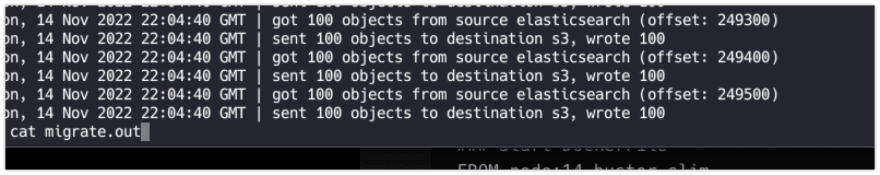

Step4. Execute the Script.

chmod +x /data/migrate-es-indices.sh

# Send job to the Background with nohup

nohup sh /data/migrate-es-indices.sh > /data/migrate.out 2>&1 &
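The script above only exports the indices to the bucket. To finish the migration, the dumps have to be loaded into the destination cluster, which elasticdump can do by swapping input and output. A minimal sketch, assuming a hypothetical destination URL (TARGET_ES) and credentials passed through hypothetical environment variables S3_ACCESS_KEY, S3_SECRET_KEY and S3_ENDPOINT; the elasticdump flags are the same ones used for the export.

```shell
#!/bin/sh
# Hypothetical destination cluster; replace with your own Elasticsearch URL.
TARGET_ES="${TARGET_ES:-http://target-elasticsearch.example.internal:9200}"
# Bucket that the export script wrote to.
BUCKET="${BUCKET:-migrate-elastic-indices}"

# Load one dump file from the bucket into the index of the same name
# on the destination cluster.
restore_index() {
  idx="$1"
  elasticdump --s3AccessKeyId "$S3_ACCESS_KEY" --s3SecretAccessKey "$S3_SECRET_KEY" \
    --input "s3://$BUCKET/$idx.json" \
    --output="$TARGET_ES/$idx" \
    --s3ForcePathStyle true --s3Endpoint "$S3_ENDPOINT"
}
```

Usage: for idx in <index-1> <index-2>; do restore_index "$idx"; done. While the export is still running, tail -f /data/migrate.out shows its progress. Keep in mind that elasticdump's default --type is data, so mappings and settings are not transferred by these commands and would need their own passes if the destination should not infer them.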

That's it, Enjoy!!!

I hope you liked the tutorial. If you did, give it a thumbs up and follow me on Twitter. You can also subscribe to my newsletter so you don't miss any of the upcoming tutorials.

Media Attribution

I would like to thank Clark Tibbs for designing the awesome photo I am using in my posts.
