Last time, I promised to write about “getting the benefits that SPAs enjoy, without suffering the consequences they extremely don’t enjoy”. And then Nolan Lawson wrote basically that, and then the madlad did it again. He included almost everything I would’ve:

- MPA pageloads are surprisingly tough to beat nowadays

- Paint holding, streaming HTML, cross-page code caching, back/forward caching, etc.

- Service Worker rendering

- Also see Jeremy Wagner on why offline-first MPAs are cool

- In theory, MPA page transitions are Real Soon Now

- In practice, Kroger.com had none and our native app barely had any, so I didn’t care

- And his main point:

  > If the only reason you’re using an SPA is because “it makes navigations faster,” then maybe it’s time to re-evaluate that.

(I don’t think he talked about how edge rendering and MPAs are good buds, but now I’ve mentioned it, so consider that box ticked.)

Since Nolan said what I would’ve (in fewer words!), I’ll cut to the chase: did my opinions in this series make a meaningfully fast site? This is the part where I put my money where my mouth was:

Proving that speed mattered wasn’t enough: we also had to convince people emotionally. To show everyone, god dammit, how much better our site would be if it were fast.

The best way to get humans to feel something is to have them experience it. Is our website painful on the phones we sell? Time to inflict some pain.

The demo

I planned to demonstrate the importance of speed at our monthly product meeting. It went a little something like this:

1. Buy enough Poblano phones for attendees.

2. On those phones and a throttled connection, try using Kroger.com:

   - Log in
   - Search for “eggs”
   - Add some to cart
   - Try to check out

3. Repeat those steps on the demo.

4. Note how performance is the bedrock feature: without it, no other features exist.

Near the laptop with the horrible pun are 10 of the original 15 demo phones. (The KaiOS flip phone helped stop me from overspecializing for Chrome or the Poblano VLE5 specs.)

A nice thing about targeting wimpy phones is that the demo hardware cost me relatively little. Each Poblano was ≈$35, and a sale at the time knocked some down to $25.

How fast was it?

Sadly, I can’t give you a demo, so this video will have to suffice:

The browser UI differs because each video was recorded at different times. (Also, guess walmart.com’s framework.)

Me racing to get the demo, kroger.com, Kroger’s native app, amazon.com, and walmart.com all to the start of checkout as fast as possible. In order of how long each took:

If you want to improve dev.to’s video accessibility, consider voting for my feature request for the `<track>` element.

Text description of video

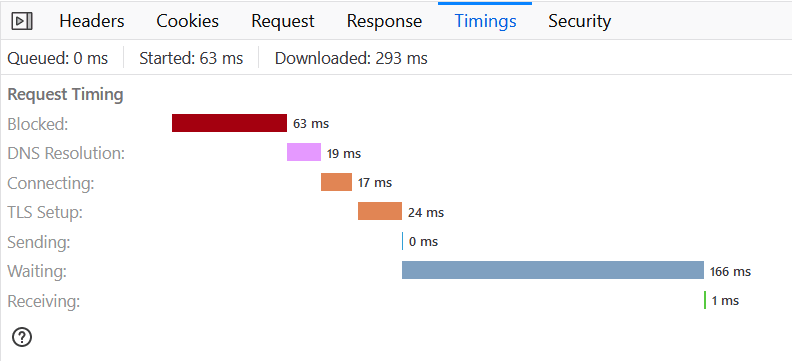

For a bit, our CDN contact got it semi-public on the real Internet. I was beyond excited to see this in @AmeliaBR’s Firefox devtools:

That’s Cincinnati, Ohio → Edmonton, Canada. 293 milliseconds ain’t bad for a network response, but I was so happy because I knew we could get much faster…

- About 50–100ms was from geographical distance, which can be improved by edge rendering/caching/etc.

- PCF’s gorouters have a 50ms delay. Luckily, we were dropping PCF.

- 40ms from Nagle’s algorithm, maybe even 80ms from both Node.js and the reverse proxy. This is what `TCP_NODELAY` is for.

- Tweaked gzip/brotli compression, like their buffer sizes and flushing behavior

- Lower-latency HTTPS configuration, such as smaller TLS record sizes
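For the Nagle’s algorithm item, here is a minimal Node.js sketch of turning it off on a plain `http` server by calling `socket.setNoDelay(true)` on every accepted connection. It’s an illustration, not the setup we actually ran: `createLowLatencyServer` is a hypothetical helper name, and a reverse proxy in front of Node needs its own equivalent setting (nginx, for example, has a `tcp_nodelay` directive).

```javascript
const http = require('node:http');

// Hypothetical helper: an http.Server whose sockets have Nagle's algorithm
// disabled, so small writes (like an early-flushed <head>) are sent right
// away instead of waiting to be coalesced with later data.
function createLowLatencyServer(handler) {
  const server = http.createServer(handler);
  server.on('connection', (socket) => {
    socket.setNoDelay(true); // sets TCP_NODELAY on the underlying TCP socket
  });
  return server;
}
```

Use it like `http.createServer`: `createLowLatencyServer(handler).listen(port)`. Newer Node.js releases also accept a `noDelay` option in `http.createServer` and may already enable it by default, so check your version’s documentation before assuming either way.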

Let’s say that averages out to 200ms in the real world. Using the numbers in the first post and kroger.com’s then-current 1.2-second TTFB, that’s $40 million/year. Or, ~5% of company profit at the time. (The actual number would probably be higher. With a difference this large, latency→revenue stops being linear.)

So… how’d it go?

The burning questions: how did it perform, and what did the organization think of it? How much was adopted? Etc.

What did the organization think of it?

The immediate reaction exceeded even my most indulgent expectations. Only the sternest Dad Voice in the room could get enough quiet to finish the presentation. Important people stood up to say they’d like to see more bottom-up initiative like it. VIPs who didn’t attend requested demos. Even some developers who disagreed with me on React and web performance admitted they were intrigued.

Which was nice, but kroger.com was still butt-slow. As far as how to learn anything from the demo, I think these were the options:

- Adapt new principles to existing code

- Rewrite (incremental or not)

- Separate MVP

Adapt new principles to kroger.com’s existing code?

Naturally, folks asked how to get our current React SSR architecture to be fast like the demo. And that’s fine! Why not React? Why not compromise and improve the existing site?

We tried it. Developers toiled in the Webpack mines for smaller bundles. We dropped IE11 to polyfill less. We changed the footer to static HTML. After months of effort, we shrank our JS bundle by ≈10%.

One month later, we were back where we started.

Does that mean fast websites are too hard in React? C’mon, that’s a clickbait question impossible to answer. But it was evidence that we as a company couldn’t handle ongoing development in a React SPA architecture without constant site speed casualties. Maybe it was for management reasons, or education reasons, but after this cycle repeated a few times, a fair conclusion was that we couldn’t hack it. When every new feature added client-side JS, it felt like we were set up to lose before we even started. (Try telling a business that each new feature must replace an existing one. See how far you get.)

At some point, I was asked to write a cost/benefit analysis for the MPA architecture that made the demo fast, but in React. It’s long enough I can’t repeat it here, so instead I’ll do a Classic Internet Move™: gloss a nuanced topic into controversial points.

Reasons not to use React for Multi-Page Apps

- React server-renders HTML slower than many other frameworks/languages

If you’re server rendering much more frequently, even small differences add up. And the differences aren’t that small.

- React is kind of bad at page loads

- `react`+`react-dom` are bigger than many frameworks, and their growth trendline is disheartening.

- In theory, React pages can be fast. In practice, they rarely are.

VDOM is not the architecture you’d design if you wanted fast loads.

Its rehydration annoys users, does lots of work at the worst possible time, and is fragile and hard to reason about. Do you want those risks on each page?

ℹ️ Okay, I feel like I have to back this one up, at least.

Performance metrics collected from real websites using SSR rehydration indicate its use should be heavily discouraged. Ultimately, the reason comes down to User Experience: it's extremely easy to end up leaving users in an “uncanny valley”.

— Rendering on the Web § A Rehydration Problem: One App for the Price of Two

The Virtual DOM approach inflicts a lot of overhead at page load:

- Render the entire component tree

- Read back the existing DOM

- Diff the two

- Render the reconciled component tree
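To make those four steps concrete, here is a toy model in plain JavaScript. It is not React’s algorithm: virtual nodes and “DOM” nodes are both plain `{ tag, text, children }` objects, and `hydrate`, `ItemList`, and `serverDom` are hypothetical names. What it illustrates is that when the server HTML already matches, every node still gets rendered, read back, and compared, only to produce zero changes.

```javascript
// Toy hydration model; NOT React's implementation.
// Virtual nodes and "DOM" nodes are both plain { tag, text, children } objects.
function hydrate(component, props, domRoot) {
  // 1. Render the entire component tree into virtual nodes.
  const vroot = component(props);
  const patches = [];
  let visited = 0;

  function walk(vnode, domNode) {
    visited += 1;
    // 2. Read back the existing (server-rendered) node.
    const existing = { tag: domNode.tag, text: domNode.text };
    // 3. Diff the virtual node against what is already there.
    if (vnode.tag !== existing.tag || vnode.text !== existing.text) {
      patches.push({ domNode, tag: vnode.tag, text: vnode.text });
    }
    const vkids = vnode.children || [];
    const dkids = domNode.children || [];
    // Structural mismatches (missing/extra children) are out of scope here.
    vkids.forEach((child, i) => {
      if (dkids[i]) walk(child, dkids[i]);
    });
  }
  walk(vroot, domRoot);

  // 4. Apply the reconciled result to the existing nodes.
  for (const p of patches) {
    p.domNode.tag = p.tag;
    p.domNode.text = p.text;
  }
  return { visited, patchCount: patches.length };
}

// A hypothetical component and the HTML the server already sent for it.
const ItemList = ({ items }) => ({
  tag: 'ul',
  children: items.map((text) => ({ tag: 'li', text })),
});
const serverDom = {
  tag: 'ul',
  children: [{ tag: 'li', text: 'eggs' }, { tag: 'li', text: 'milk' }],
};

console.log(hydrate(ItemList, { items: ['eggs', 'milk'] }, serverDom));
// → { visited: 3, patchCount: 0 }
```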

That’s a lot of unnecessary work if you’re going to show something mostly identical to the initial `text/html` response!

Forget the performance for a second. Even rehydrating correctly in React is tricky, so using it for an MPA risks breakage on every page:

- Why Server Side Rendering In React Is So Hard

- The Perils of Rehydration

- Case study of SSR with React in a large e-commerce app

- Fixing Gatsby’s rehydration issue

- gatsbyjs#17914: [Discussion] Gatsby, React & Hydration

- React bugs for “Server Rendering”

No, really, skim those links. The nature of their problems is more important than the specifics.

- React fights the multi-page mental model

- It prefers JS properties to HTML attributes (you know, the `class` vs. `className` thing). That’s not a dealbreaker, but it’s symptomatic.

- Server-side React and its ecosystem strive to pretend they’re in a browser. Differences between server and browser renders are considered isomorphic failures that should be fixed.

React promises upcoming ways to address these problems, but testing, benching, and speculating on them would be a whole other post. (They also extremely didn’t exist two years ago.) I’m not thrilled about how React’s upcoming streaming and partial hydration seem to be implemented — I should test for due diligence, but a separate HTTP connection for a not-quite-JSON stream doesn’t seem like it would play nice during page load.

Taking it back to my goals: does Facebook even use React for its rural/low-spec/poorly-connected customers? One data point: the almost-no-JS mbasic.facebook.com.

Rewrite kroger.com, incrementally or not?

Software rewrites are the Forever Joke. Developers say this will be the last rewrite, because finally we know how to do it right. Businesses, meanwhile, knowingly estimate how long each codebase will last based on how wrong the developers were in the past.

Therefore, the natural question: should our next inevitable rewrite be Marko?

I was able to pitch my approach vs. another for internal R&D. I can’t publish specifics, but I did make this inscrutable poster for it:

And because I’m an incorrigible web developer, I made it with HTML & CSS.

That bakeoff’s official conclusion: “performance is an application concern, not the platform’s fault”. It was decided to target Developer Experience™ for the long-term, not site speed.

I was secretly relieved: how likely will a new architecture actually be faster if it’s put through the same people, processes, and culture as the last architecture?

With the grand big-bang rewrite successfully avoided, we could instead try small incremental improvements — speed A/B tests. If successful, that’s reason enough to try further improvements, and if those were successful…

The simplest thing that could possibly work seemed to be streaming static asset `<script>` and `<link>` elements before the rest of the HTML. We’d rewrite the outer scaffolding HTML in Marko, then embed React into the dynamic parts of the page. Here’s a simplified example of what I mean:

```marko
import {
  renderReactRoot,
  fetchDataDependencies
} from './react-app'

<!doctype html>
<html lang="en-us">
  <head>
    <meta charset="utf-8">
    <meta name="viewport" content="width=device-width,initial-scale=1">
    <!-- Static assets go out first, so browsers fetch them while the server waits on data -->
    <for|{ url }| of=input.webpackStaticAssets>
      <if(url.endsWith('.js'))>
        <script defer src=url></script>
      </if>
      <if(url.endsWith('.css'))>
        <link rel="stylesheet" href=url>
      </if>
    </for>
    <PageMetadata ...input.request />
  </head>
  <body>
    <!-- The React-rendered part streams in once its data dependencies resolve -->
    <await(fetchDataDependencies(input.request, input.response))>
      <@then|data|>
        $!{renderReactRoot(data)}
      </@then>
    </await>
  </body>
</html>
```

This had a number of improvements:

- Browsers could download and parse our static assets while the server waited on dynamic data and React SSR.

- Since Marko only serializes components with state, the outer HTML didn’t add to our JS bundle. (This had more impact than the above example suggests; our HTML scaffolding was more complicated because it was a Real Codebase.)

- If successful, we could rewrite components from the outside-in, shrinking the bundle with each step.

- Marko also paid for itself with more efficient SSR and smaller HTML output (quote stripping, tag omission, etc.), so we didn’t regress server metrics unless we wanted to.
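The first improvement is the core of the trick, and it doesn’t depend on Marko. Below is a minimal sketch in plain Node.js, assuming a writable `res` with `write()`/`end()` like `http.ServerResponse`; `streamPage`, `fetchData`, and `renderBody` are hypothetical stand-ins for what the Marko template and its `fetchDataDependencies`/`renderReactRoot` imports do. The asset tags are written before the first `await`, so they reach the browser while the slow part is still pending.

```javascript
// Minimal attribute escaping for URLs placed inside double-quoted attributes.
function escapeAttr(value) {
  return String(value)
    .replace(/&/g, '&amp;')
    .replace(/"/g, '&quot;')
    .replace(/</g, '&lt;');
}

// Sketch: flush <head> (with asset tags) first, then wait on dynamic data.
// Error handling is omitted; a rejected fetchData() leaves `res` unfinished.
async function streamPage(res, assetUrls, fetchData, renderBody) {
  let head =
    '<!doctype html><html lang="en-us"><head><meta charset="utf-8">' +
    '<meta name="viewport" content="width=device-width,initial-scale=1">';
  for (const url of assetUrls) {
    if (url.endsWith('.js')) {
      head += `<script defer src="${escapeAttr(url)}"></script>`;
    } else if (url.endsWith('.css')) {
      head += `<link rel="stylesheet" href="${escapeAttr(url)}">`;
    }
  }
  // Runs synchronously, before any await: the browser can start on assets now.
  res.write(head + '</head><body>');

  // Only now wait on the slow part: data fetching + server rendering.
  const data = await fetchData();
  res.write(renderBody(data));
  res.end('</body></html>');
}
```

In a real server you’d call it from a request handler after setting a `text/html` content type. If compression middleware sits between `res` and the socket, make sure it flushes after that first write, or the `<head>` just sits in its buffer anyway.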

This almost worked! But we were thwarted by our Redux code. Our Reducers ‘n’ Friends contained enough redirect/page metadata/analytics/business logic that assumed the entire page would be sent all at once, where any code could walk back up the DOM at its leisure and change previously generated HTML… like the `<head>`.

We tried to get dev time to overcome this problem, since we’d have to make Redux stream-friendly in a React 18 world anyway. Unfortunately, Redux and its ecosystem weren’t designed with streaming in mind, so assigning enough dev time to overcome those obstacles was deemed “not product-led enough”.

Launch a separate, faster version of kroger.com?

While the “make React do this” attempts and the Streaming A/B test were, you know, fine, they weren’t my favorite options. I favored launching a separate low-spec site with respectful redirects — let’s call it https://kroger.but.fast/. I liked this approach because…

- Minimum time it took for real people to benefit from a significant speedup

- Helped with the culture paradox: your existing culture gave you the current site. Pushing a new approach through that culture will change either the culture or the result, and which one is more likely depends on how many people it has to go through. A small team with its own goals can incubate its own culture to achieve those goals.

- If it’s a big enough success, it can run on its own results while accruing features, until the question “should we swap over?” becomes an obvious yes/no.

How much was adopted?

Well… that’s a long story.

The Performance team got rolled into the Web Platform team. That had good intentions, but in retrospect a platform team’s high-urgency deploys, monitoring, and incident responses inevitably crowd out important-but-low-urgency speed improvement work.

Many folks were also taken with the idea of a separate faster site. They volunteered skills and time to estimate the budget, set up CI/CD, and other favors. Their effort, kindness, and optimism amazed me. It seemed inevitable that something would happen — at least, we’d get a concrete rejection that could inform what we tried next.

The good news: something did happen.

The bad news: it was the USA Spring 2020 lockdown.

After the initial shock, I realized I was in a unique position:

COVID-19 made it extremely dangerous to enter supermarkets.

The pandemic was disproportionately hurting blue-collar jobs, high-risk folks, and the homeless.

I had a proof-of-concept where even cheap and/or badly-connected devices can quickly browse, buy, and order groceries online.

People won’t stop buying food or medicine, even with stay-at-home orders. If we had a website that let even the poorest shop without stepping in our stores, it would save lives. Even if they could only browse, it would still cut down on in-store time.

With a certainty of purpose I’ve never felt before or since, I threw myself into making a kroger.but.fast MVP. I knew it was asking for burnout, but I also knew I’d regret any halfheartedness for the rest of my life — it would have been morally wrong not to try.

We had the demo running in a prod bucket, agonizingly almost-public, only one secret login away. We tried to get anyone internally to use it to buy groceries.

I’m not sure anyone bothered.

I don’t know what exactly happened. My experience was very similar to Zack Argyle’s with Pinterest Lite, without the happy ending. (It took him 5 years, so maybe I’m just impatient.) I was a contractor, not a “real employee”, so I wasn’t privy to internal decisions — this also meant I couldn’t hear why any of the proposals sent up the chain got lost or rejected.

Once it filtered through the grapevine that Bridge maybe was competing for resources with a project like this… that was when I decided I was doing nothing but speedrunning hypertension by staying.

When bad things happen to fast code

On the one hand, the complete lack of real change is obvious. The demo intentionally rejected much of our design, development, and even management decisions to get the speed it needed. Some sort of skunkworks to insulate from ambient organizational pressures is often the only way a drastic improvement like this can work, and it’s hard getting clearance for that.

Another reason is that making a drastic improvement to an existing product runs into an inherent paradox: a lot of folks’ jobs depend on that product, and you can’t get someone to believe something they’re paid not to believe. Especially when the existing architecture was sold as faster than the even-more-previous one. (And isn’t that always the case?)

It took me a while to understand how people could be personally enthusiastic, but professionally could do nothing. One thing that helped was Quotes from Moral Mazes. Or, if you want a link less likely to depress you, I was trying to make a Level 4 project happen in an org that could charitably be described as Level 0.5.

But enough about me. What about you?

Maybe you’re making a website that needs to be fast. The first thing you gotta do is get real hardware that represents your users. Set the right benchmarks for the people you serve. Your technology choices must be informed by that, or you’re just posturing.

If you’re targeting cheap phones, though, I can tell you what I’d look at today.

For the closest performance to my demo, try Marko. Yes, I’m paid to work on Marko now, [EDIT: not anymore] but what technology would better match my demo’s speed than the same technology? (Specifically, I used @marko/rollup.)

But, it’s gauche to only recommend my employer’s thing. What else, what else… If your site doesn’t need JS to work, then absolutely go for a static site. But for something with even sprinkles of interactivity like e-commerce — well, there’s a reason my demo didn’t run JAMstack.

My checklist of requirements is…

- Streaming HTML. (See part #2 for why.)

- Minimum framework JS: at least half of `react`+`react-dom`.

- The ability to only hydrate some components, so your users only download JavaScript that actually provides dynamic functionality.

- Can render in CDN edge servers. This unfortunately is hard to do for languages other than JavaScript, unless you do something like Fly.io’s One Weird Trick.
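The partial-hydration requirement boils down to this: walk the page’s component tree and ship client JS only for the components that actually do something in the browser. Here’s a minimal sketch of that selection step. The `interactive` flag, `clientModule` field, and module paths are assumptions for illustration; Marko, Astro’s islands, and others each decide this in their own way (Marko, as mentioned earlier, only serializes components with state).

```javascript
// Minimal partial-hydration sketch: collect client-side modules only for
// components flagged as interactive. Hypothetical node shape:
// { name, interactive?, clientModule?, children? }
function collectClientModules(node, modules = new Set()) {
  if (node.interactive && node.clientModule) {
    modules.add(node.clientModule); // the Set dedupes repeated components
  }
  for (const child of node.children || []) {
    collectClientModules(child, modules);
  }
  return modules;
}

// A hypothetical product-search page: mostly static, two interactive bits.
const addToCart = { name: 'AddToCart', interactive: true, clientModule: '/js/add-to-cart.js' };
const page = {
  name: 'Page',
  children: [
    { name: 'Header' },
    { name: 'SearchBox', interactive: true, clientModule: '/js/search-box.js' },
    {
      name: 'ProductList',
      children: [
        { name: 'ProductCard', children: [addToCart] },
        { name: 'ProductCard', children: [addToCart] },
      ],
    },
    { name: 'Footer' },
  ],
};

console.log([...collectClientModules(page)]);
// → [ '/js/search-box.js', '/js/add-to-cart.js' ]
```

Interactivity is opt-in per component, so static pieces like the header, footer, and product cards never add to the bundle, no matter how much HTML they render.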

Solid is the closest runner-up to Marko; the only requirement it lacks is partial hydration.

Svelte doesn’t stream, or have partial hydration, but tackles the too-much-app-JS problem via its culture discouraging it. If Svelte implemented streaming HTML, I’d recommend it. Maybe someday.

If Preact had partial hydration and streaming, I’d recommend it too; even though Preact’s goals don’t always match mine, I can’t argue with Jason Miller’s consistent results. Preact probably will have equivalents of React’s streaming and Server Components, right?

Remix is almost a recommend; its philosophies are 🧑‍🍳💋. Its progressive enhancement approach is exactly what I want, as of React 18 it can stream HTML, and they’re doing invaluable work successfully convincing React devs that those things are important. This kind of stuff has me shaking my fists in agreement:

Wouldn’t it be great, if we could just move all of that code out of the browser and onto the server? Isn’t it annoying to have to write a serverless function any time you need to talk to a database or hit an API that needs your private key? (yes it is). These are the sorts of things React Server Components promise to do for us, and we can definitely look forward to that for data loading, but they don’t do anything for mutations and it’d be cool to move that code out of the browser as well.

We’ve learned that fetching in components is the quickest way to the slowest UX (not to mention all the content layout shift that usually follows).

It’s not just the UX that suffers either. The developer experience gets complex with all the context plumbing, global state management solutions (that are often little more than a client-side cache of server-side state), and every component with data needing to own its own loading, error, and success states.

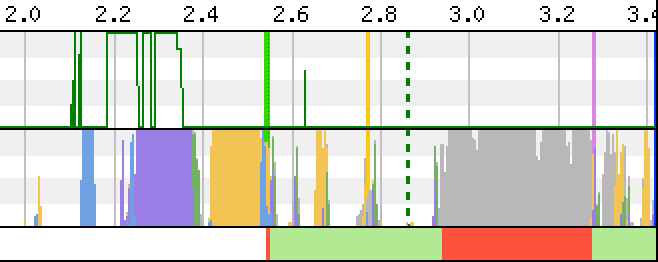

Really, the only thing I don’t like about Remix is… React. Check this perf trace:

Sample from this remix-ecommerce.fly.dev WebPageTest trace

Sure, the main thread’s only blocked for 0.8 seconds total, but I don’t want to do that to users on every page navigation. That’s a good argument for why Remix progressively enhances to client-side navigation… but I’ve already made my case on that.

Ideally, Remix would let you use other frameworks, and I’d shove Marko in there. They’ve discussed the possibility, so who knows?

Oldest comments (21)

"how likely will a new architecture actually be faster if it’s put through the same people, processes, and culture as the last architecture?" Concur 100%. It's not your framework that's slow it's your team!

That's a wild generalisation. Teams evolve, learn, and do better when the framework they use has better built-in patterns and practices. This is why React is so hard to get right and I wouldn't recommend it to 99% of the teams/projects.

Yeah, my grapes were probably a little sour

@taylorhuntkr FYI - the "Rendering on the Web § A Rehydration Problem: One App for the Price of Two" link has a quote that is making it a 404.

Ah, thank you! Fixed

Watching this I have to wonder how much of "the web is slower than native" is just a self-fulfilling prophecy (or even an excuse).

Tell that to the "nobody cares in the end if things are < 100kb" and "premature optimization" types (who presumably never heard of avoiding gratuitous pessimization).

"So the engineers at Facebook in a stroke of maniacal genius said “to hell with the W3C and to hell with best practices!” and decided to completely abstract away the browser, …"

Mark Nutter - Modern Web Development (2016)

React Server Components seem to take the whole "build a browser just for React" notion to an entirely new level.

… really?

"What is clear: right now, if you’re using a framework to build your site, you’re making a trade-off in terms of initial performance—even in the best of scenarios.

Some trade-off may be acceptable in the right situations, but it’s important that we make that exchange consciously."

Tim Kadlec - The Cost of Javascript Frameworks (2020)

If the platform is the fundamental limiting component then it's the platform's fault …

No honourable(, hopeful) mention for Solid Start? 😁

Great post as always, Thank You!

Funny thing about Solid: I asked @ryansolid if it met my checklist, and he was humble and well-reasoned enough to say he wasn’t certain it qualified. Now he got his link at the bottom in the end anyway!

Apparently I was too humble. On further discussion Solid has been added to the article. Thanks @taylorhuntkr.

Those were five incredibly valuable and insightful articles. Thank you for this!!

Just wanted to say thanks for your meticulous attention to accessibility in the alt text of the images and even a text description of the demo video.

Thanks for the excellent series on this topic. I never really gave much thought about giving up on client side routing, even with server side rendering. Turbolinks and Hotwire also go in that direction, suggesting client side routing is a must if you want a fast website, but I started to consider the opposite point of view, maybe it's not crucial...

I must admit that I'm happy with the direction that React is going, with progressive and concurrent hydration, streaming etc. It will result in HTML getting in the browser more quickly and not blocking the main thread by hydration not being done in a single chunk. Adding Remix to the mix, even before hydration is complete, basic functionality is there. I'm also excited about Remix integrating with other libraries.

This may seem like I'm too invested in React, and it's probably true. I like the development experience, rich ecosystem, and of course familiarity is a real thing. Even though I'm hopeful about React's future, it's still materializing and I don't have the hard numbers in my hand. I need to have my own metrics to optimize for and actually measure if new streaming and hydration strategies improve my metrics or not. Remix' server components blog was an eye opener for me and I plan to do my own testing in the future.

Again, thanks for the great series and it will be on my bookshelf.

Yeah, as far as the state-of-React, those upcoming additions are inarguable improvements, and I’m glad React is getting them. And if you’re measuring your own numbers, that’s the most important part by far.

Copy-paste the whole “software is faster if you don’t import a framework that you only 10% use” thing across the entire software industry, please. 👍

At work I'm working on seeing if we can port some of the React code over the simpler HTMX library. But between React and other complex tools I'm wondering if I can pull it off. It is just so confusing. You want a details/summary action? In React they built or used some library that uses divs to recreate it! You want to search for and add to a select element that can do multiple? Sure here's a really complex way to do it?

Why can't I use some simple web components to do that? `<multi-select><option value=1>Whatever</option></multi-select>`. That seems like a nice, simple, and elegant way to add that.

I just don't understand why front end devs make everything so complicated. I know complexity is needed sometimes. But React and other technologies make it much more difficult than it should be. All we are doing is serving simple forms for goodness' sake! Yes, we want the forms to have some added benefits like searching in a long list of values for a multi-select form, but I don't need a whole framework to do that!

And after the front end devs left the company to make $50k+ elsewhere they leave this terrible mess. One coworker had asked, "Why is it doing that weird thing there?" Front end dev, "I have no idea!" Well, maybe if we went with simpler technology we would have an idea.

Simpler CSS too. Yes, I know we can't have my ideal CSS for my really simple web pages I make on my own. But we definitely don't need the complexity of some of these frameworks out there.

Anyways, I had to rant. I really don't like how complicated React has made the front end. Especially for business facing apps which are basically just forms. It isn't all that complex but we have made it super complex as a developer industry. For no reason that I can tell.

So… what about Qwik? And Qwik City?

Not sure. I didn’t see anything about streaming on its site, but I could have missed it.

For the site I built, Qwik’s code-on-interaction didn’t improve the initial download: where Qwik `import()`s something `onclick`, my use of classic `a[href]` and `form[action]` accomplished the same goal.

Qwik pure HTML streaming: youtube.com/watch?v=yVOI81GKZBo

What a great series! Aside from the content, first I'm hearing that the demo actually happened and that you left Kroger. But really cool to see how far this has come and where you've landed!

Reading this in 2023, I think Astro is worth trying for its control over island components: astro.build/

Astro 2.0 was released recently, and Astro 1.0 already supported HTML streaming.

twitter.com/matthewcp/status/15571...

Also reading this in 2023 thanks to all the anti-SPA sentiment swirling around Mastodon. Marko doesn't do TypeScript so trying Solid right now. Thanks for your superb series, I'm sure I'm not the only one who is most grateful!

Oh, you replied under my comment. Recently, TanStack Router had a beta release for Astro; it feels like an SPA experience, although I don't think I will use TanStack.