This is the first in a series of class notes as I go through the Georgia Tech/Udacity Machine Learning course. The class textbook is Machine Learning by Tom Mitchell.

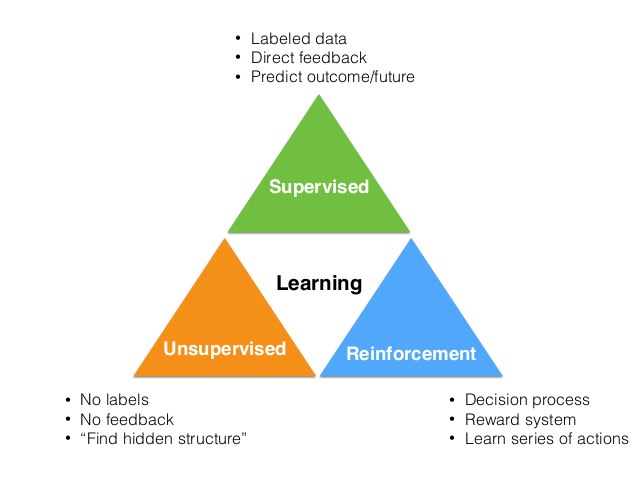

Supervised Learning: Learning by Approximation

Supervised Learning takes labeled data sets and gleaning information so that you can label new data sets. An example is if you look at this table:

| x | y |

|---|---|

| Chihuahua | A |

| German Shepherd | A |

| Siamese | B |

| Persian | B |

| Poodle | ? |

You might fill in ? with A. You do this despite not being told what x, y, A, or B are, but because you had a labeled data set with 4 examples, you were able to infer the right answer (together with some contextual knowledge about dog and cat breeds, which in reality is usually not present in machines but is more interesting to look at than a bunch of numbers).

More generally, you can view this as function approximation where you suppose that your labeled data is the outcome of an unknown "real" function, and you are trying to get as close to it as possible with your machine learning algorithm. Approximation is a key word here - the unknown function can be marvelously complex, but as long as our approximation walks, talks, and quacks close enough to the real thing (and for noisy domains this is a very useful assumption!) then that works for us. Fortunately we have proof that neural networks and functions in general can be approximated with deliberate control over accuracy.

The problem of Induction

Notice that if in the above example the real function were simply "is x longer than 7 characters?" then the correct answer would be B, not A.

This presents the problem with generalizing from an incomplete set of data points. Philosophers have long been familiar with the Problem of Induction. Supervised learning leans on Induction to go from specific data points to general rules, as opposed to Deduction, where you have axioms/rules and you deduce only what can be concluded from those rules.

Classification vs Regression

-

Classification is the process of taking some kind of input

xand mapping to a discrete label, for example True or False. So for example, given a picture, asking if it is or is not a Hotdog. - Regression is more about continuous value functions, like plotting a line chart through a scatter plot.

Unsupervised Learning: Learning by Description

With Unsupervised Learning, we don't get the benefit of labels. We just get a bunch of data and have to figure out some internal structure within what we're given:

| x | y |

|---|---|

| Chihuahua | ? |

| German Shepherd | ? |

| Siamese | ? |

| Persian | ? |

| Poodle | ? |

Here our goal is to find interesting and useful ways to describe our dataset. These descriptions are useful and concise ways to "cluster", "compress" or "summarize" the dataset. This reductionism necessarily loses some details in favor of a more simple model, so we must try to come up with algorithms to decide which are the more important details worth keeping.

Unsupervised learning can be used as an input to Supervised learning, where the features it identifies can form useful labels for faster Supervised learning.

Reinforcement Learning: Learning by Delayed Reward

In RL we try to learn like how people learn, as individual smart agents interacting with other smart agents, and trying to figure out what to do over time. The problem with creating an algorithm for this is that often the reward or outcome isn't determined based on a single set of decisions alone, but rather a sequence of them.

For example, placing an X or O on a blank game of Tic-Tac-Toe doesn't immediately tell you if that was a good or bad move, especially if you don't know the rules. We can only tell if it was a "better" or "worse" move by playing out all possible games after that starting point, tallying up wins and losses, and working backwards. This is modeled as a Markov Decision Process.

More generally, RL describes problems where you are given a set of valid decisions or actions to make, but have no idea how they impact the eventual outcome that you want, and having to figure out how to make those decisions at each step of the way.

Comparison of SL, UL and ML

The truth is, these categories aren't all that distinct. In SL there is the problem of induction, so it might seem that UL is better due to its agnosticism. But in practice, what we choose to cluster or emphasize involves some implicit assumptions we impose anyway. In some sense you can turn any SL problem into an UL problem.

You can also view these approaches as different kinds of optimization:

- in SL you want something that labels data well - so you try to approximate a function that does that

- in RL you want behavior that scores well

- in UL you make up some criterion and then find clusters that organize the data so that it scores well on the criterion

Everything boils down to one thing: DATA. Data is king in all Machine Learning, and more data generally helps. However more importantly, all these approaches assume Data is clean, consistent, trustworthy, and unbiased. They all start with data (even if the data is generated), not, for example, a theoretical hypothesis like how the human scientific method might suggest.

Further Resources

Class notes from other students going thru the class:

Next in our series

Hopefully this has been a good high level overview of Machine Learning. I am planning more primers and would love your feedback and questions on:

- Overview

- Supervised Learning

- Unsupervised Learning

- Reinforcement Learning

- Markov Decision Processes - week of Mar 25

- "True" RL - week of Apr 1

- Game Theory - week of Apr 15

Top comments (0)