Belong is a company with a simple vision — “Help folks discover where they Belong”. One way in which we help companies be discovered by these folks is by providing talent branding and engagement solutions.

Some of these solutions involved building microsites with about 4–5 pages. Since Belong caters to many customers, we quickly realised that we would end up with many such microsites that have a lot in common technically. So we started discussing how to set up an efficient development and deployment pipeline.

As developers working on these projects, we wanted a stack that checked the following boxes:

Code Reusability (DRY): These sites have multiple UI components and functionalities that can be shared between them. Hence, we decided to build the sites in React, since we could use a library of components we had already built! (You can check out the library here!)

Server Side rendering: Our microsites are usually a good mix of static and dynamic pages. Each of these sites needs to be optimised for search engine crawlers. Hence, we need to render React on the server side.


Great development experience: For us, the following make for a great experience:

i. Easy to set up on local machines

ii. Hot module replacement (HMR)

After evaluating multiple tools, we found Next.js to be the most promising framework for our use case. It’s incredibly easy to set up and also provides support for hot module replacement.

Isolation & Reliability: Given that these sites represent our clients’ brands, we need a setup that is highly reliable. Nobody wants 2AM pages about downtime or degraded performance. Additionally, a bug in one client site should not affect other clients.

Maintainability: Every client has 2 site instances, staging and production. Thus, we need a scalable process to maintain these sites without having to reconfigure each server independently.

Low expenses: We need to optimise costs for nearly 2*(no. of clients) instances. Each of these clients would have a different workload based on their talent brand and hiring needs, so we needed a setup that avoids manual tuning of each client instance.

A serverless architecture checks off all these points, and hence we decided to deploy our Next.js apps on AWS Lambda. It offers a considerable amount of reliability whilst keeping the costs down.

Repository structure

We have a single repository to maintain all the microsites with each customer having a separate branch.

The master branch serves as a template for the microsites. When a new customer signs up, a new branch is forked from master and the necessary changes are made to the serverless.yml file (Example: the domain name that maps to the API gateway endpoint). The customer specific assets are also checked into this branch.

The serverless config accepts a bunch of environment variables and parameters that help gain control over which resources are deployed.
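To make the idea of an environment-driven config concrete, here is a minimal sketch written as a JavaScript function (the Serverless Framework accepts a `serverless.js` file as an alternative to `serverless.yml`). It is not our actual configuration: the variable names `CUSTOMER`, `STAGE` and `AWS_REGION`, the service name, the handler path and the runtime are all assumptions made for illustration.

```javascript
// Hypothetical sketch of an environment-driven Serverless config.
// All names (CUSTOMER, STAGE, handler path, runtime) are assumptions.
function buildServerlessConfig(env) {
  const stage = env.STAGE || "staging"; // "staging" or "production"
  return {
    service: `microsite-${env.CUSTOMER || "template"}`,
    provider: {
      name: "aws",
      runtime: "nodejs18.x",
      stage,
      region: env.AWS_REGION || "us-east-1",
      // Values placed here become Lambda environment variables.
      environment: {
        STATIC_PATH: env.STATIC_PATH || "",
      },
    },
    functions: {
      app: {
        handler: "index.handler",
        // Route every path on the API Gateway endpoint to the same function.
        events: [{ http: "ANY /" }, { http: "ANY /{proxy+}" }],
      },
    },
  };
}

const config = buildServerlessConfig({ CUSTOMER: "acme", STAGE: "production" });
console.log(config.service, config.provider.stage);
```

In a real `serverless.js` file, the object returned by `buildServerlessConfig(process.env)` would be assigned to `module.exports`.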

What is the deployment like?

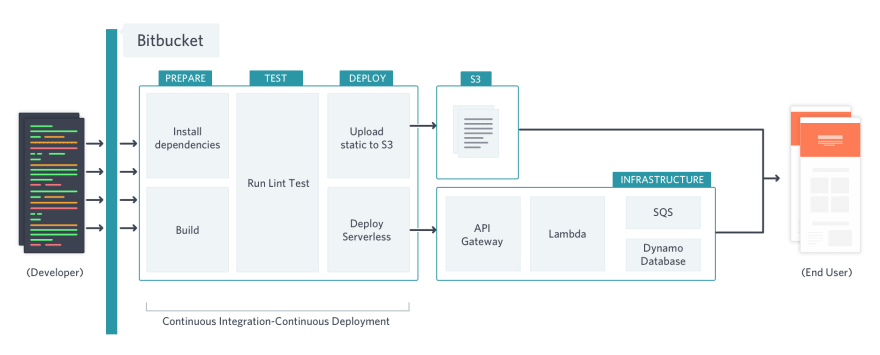

Serverless uses Cloudformation under the hood to bring up the resources as defined in the configuration file(serverless.yml). Although, it primarily supports definitions of Lambda functions and their triggers, one can also use native Cloudformation templates to bring up other resources like DynamoDB tables and the like. The following illustration highlights our deployment process,

When a new or existing branch is pushed, Strider, our CI system, picks it up and:

Clones the repository

Installs the package dependencies

Runs the linter

Builds the Next.js project

Exports the static files to S3 and sets STATIC_PATH, which holds the S3 URL, in the Lambda environment for the application to use

Deploys the serverless configuration with the right parameters based on the environment.
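The steps above can be sketched as an ordered list of shell commands. This is only an illustration of the flow, not our Strider configuration: the repository URL, the `lint` npm script, the `./static` directory, the bucket name and the S3 key layout are all assumptions.

```javascript
// Hypothetical sketch: the shell commands a CI job could run for one deployment.
// Repo URL, npm script names, bucket and directory names are assumptions.
function deploymentSteps({ repoUrl, branch, stage, bucket }) {
  const prefix = `${branch}/${stage}`;
  const staticPath = `https://${bucket}.s3.amazonaws.com/${prefix}`;
  return [
    // 1. Clone only the customer's branch (later commands run inside ./site).
    `git clone --branch ${branch} --depth 1 ${repoUrl} site`,
    // 2. Install the package dependencies.
    "npm ci",
    // 3. Run the linter.
    "npm run lint",
    // 4. Build the Next.js project.
    "npx next build",
    // 5. Push the static files to S3.
    `aws s3 sync ./static s3://${bucket}/${prefix}`,
    // 6. Deploy, passing the S3 location on to the serverless config.
    `STATIC_PATH=${staticPath} npx serverless deploy --stage ${stage}`,
  ];
}

const steps = deploymentSteps({
  repoUrl: "git@example.com:org/microsites.git",
  branch: "acme",
  stage: "staging",
  bucket: "example-microsite-assets",
});
steps.forEach((command) => console.log(command));
```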

This greatly reduces the complexity that comes with deploying a plethora of microsites while also keeping the costs down. Consider a scenario where we have to deploy microsites for 4 customers while maintaining isolation. Traditionally, we would have had to spin up 8 instances (staging and production for each customer), which would have cost us north of $160 (assuming we pick t2.small instances, with no hardware abstraction using Kubernetes and the like).

With serverless, given that the number of requests most of these microsites handle is pretty low, the cost adds up to only $10! That is a huge saving, and one that scales impressively as the number of microsites goes up.

Note: In scenarios where you plan to handle a million requests/day and above, it would be economical to deploy the application on traditional servers/containers as opposed to using the serverless architecture.
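To get a feel for where that crossover lies, here is a rough cost model. It is a back-of-the-envelope sketch, not the calculation behind the figures above: the prices are assumed list prices in USD (check the current AWS pricing pages for your region), the free tier is ignored, and the traffic profile in the usage example is hypothetical.

```javascript
// Hypothetical cost model for one Lambda-backed site behind API Gateway.
// Prices are assumptions (USD); the free tier and data transfer are ignored.
const PRICES = {
  lambdaPerMillionRequests: 0.2,
  lambdaPerGbSecond: 0.0000166667,
  apiGatewayPerMillionRequests: 3.5,
};

function monthlyLambdaCost({ requestsPerMonth, avgDurationMs, memoryMb }, prices = PRICES) {
  const millions = requestsPerMonth / 1e6;
  // Compute is billed per GB-second: duration (s) x memory (GB) per request.
  const gbSeconds = requestsPerMonth * (avgDurationMs / 1000) * (memoryMb / 1024);
  return (
    millions * prices.lambdaPerMillionRequests +
    gbSeconds * prices.lambdaPerGbSecond +
    millions * prices.apiGatewayPerMillionRequests
  );
}

// Compare a low-traffic microsite with one serving a million requests a day.
const profile = { avgDurationMs: 200, memoryMb: 512 };
const lowTraffic = monthlyLambdaCost({ ...profile, requestsPerMonth: 100000 });
const highTraffic = monthlyLambdaCost({ ...profile, requestsPerMonth: 30000000 });
console.log(lowTraffic.toFixed(2), highTraffic.toFixed(2));
```

Because every term grows linearly with the request count, the per-request model is cheap at low traffic and overtakes a fixed-price instance once traffic is high enough.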

While the setup works seamlessly now, the path to get here was not all that straightforward. As we started to experiment with setting up the Next.js application on AWS Lambda, we stumbled upon a set of challenges with serving static files.

In retrospect, we would not recommend serving static files via Lambda for two reasons:

It increases the cost, since we get billed for each request

Sometimes we need to serve large files (videos, GIFs) that cannot be optimised enough to fit within Lambda's limits.

This is why we included a step in the CI pipeline that pushes the static files to S3 as part of the deployment and makes the path available to the application via the Lambda environment variables.
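On the application side, consuming that environment variable could look like the helper below. This is a minimal sketch, not the code from our sites: the function name `staticUrl` and the fallback to a site-relative path (handy for local development) are assumptions.

```javascript
// Hypothetical helper: resolve an asset path against the S3 URL in STATIC_PATH.
function staticUrl(file, env = process.env) {
  const base = (env.STATIC_PATH || "").replace(/\/+$/, ""); // drop trailing slashes
  const path = String(file).replace(/^\/+/, ""); // drop leading slashes
  // Without STATIC_PATH (for example on a local machine), use a relative path.
  return base ? `${base}/${path}` : `/${path}`;
}

console.log(staticUrl("images/logo.png", { STATIC_PATH: "https://example-bucket.s3.amazonaws.com/acme" }));
console.log(staticUrl("images/logo.png", {}));
```

A React component would then render something like `<img src={staticUrl("images/logo.png")} />` instead of hard-coding the asset location.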

If you still want to serve the static files via Lambda ('cause you are a rebel 😉), make sure you whitelist the correct MIME types in the API Gateway configuration.
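As a rough illustration of what that involves, the sketch below shows one way to declare binary media types in a Serverless Framework config (written as a JavaScript object, using the framework's `provider.apiGateway.binaryMediaTypes` setting) and a response helper that base64-encodes the payload. The list of types and the helper name `binaryResponse` are assumptions; this is not necessarily how our setup did it.

```javascript
// Hypothetical config fragment: content types API Gateway should treat as binary.
const binaryConfig = {
  provider: {
    name: "aws",
    apiGateway: {
      binaryMediaTypes: ["image/png", "image/jpeg", "image/gif", "font/woff2"],
    },
  },
};

// Hypothetical helper: binary bodies must be returned base64-encoded,
// with isBase64Encoded set so API Gateway decodes them before responding.
function binaryResponse(buffer, contentType) {
  return {
    statusCode: 200,
    headers: { "Content-Type": contentType },
    body: buffer.toString("base64"),
    isBase64Encoded: true,
  };
}

const response = binaryResponse(Buffer.from("hi"), "image/png");
console.log(binaryConfig.provider.apiGateway.binaryMediaTypes.length, response.isBase64Encoded);
```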

Sidenote: If you want your serverless deployments to connect to your services running inside a VPC, you have to configure the Lambda to run in that VPC's subnets. If the function also needs to reach the internet, those subnets must route through a NAT gateway/instance.
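In Serverless Framework terms, attaching a function to a VPC comes down to the `provider.vpc` block with its `securityGroupIds` and `subnetIds` lists. The sketch below builds that block from comma-separated environment variables; the variable names `VPC_SECURITY_GROUPS` and `VPC_SUBNETS` and the IDs in the usage example are placeholders, not values from our setup.

```javascript
// Hypothetical sketch: build the provider.vpc block from environment variables.
// VPC_SECURITY_GROUPS and VPC_SUBNETS are assumed, comma-separated lists of IDs.
function vpcSettings(env) {
  const split = (value) =>
    (value || "")
      .split(",")
      .map((id) => id.trim())
      .filter(Boolean);
  return {
    securityGroupIds: split(env.VPC_SECURITY_GROUPS),
    // Use private subnets whose route table points at a NAT gateway/instance
    // if the function must also reach the internet.
    subnetIds: split(env.VPC_SUBNETS),
  };
}

const vpcConfig = {
  provider: {
    name: "aws",
    vpc: vpcSettings({
      VPC_SECURITY_GROUPS: "sg-placeholder",
      VPC_SUBNETS: "subnet-placeholder-a, subnet-placeholder-b",
    }),
  },
};
console.log(vpcConfig.provider.vpc.subnetIds);
```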

Testing serverless locally

For the most part, you don’t have to push to Lambda to check if the application is working as intended. The serverless framework provides a great way to test the functions locally, which helps save a ton of time and effort.
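The framework's `serverless invoke local --function <name> --path <event.json>` command runs a function on your machine with a sample event. The underlying idea can be sketched in plain Node as below: a handler is just a function, so it can be called directly with a hand-written event. The handler and the event shown here are hypothetical stand-ins, not the handler from our sites.

```javascript
// Hypothetical sketch: a minimal Lambda-style handler and a local invocation.
function buildResponse(event) {
  return {
    statusCode: 200,
    headers: { "Content-Type": "text/plain" },
    body: `Rendered ${event.httpMethod} ${event.path}`,
  };
}

// Lambda would invoke this function; it simply wraps the pure function above.
const handler = async (event) => buildResponse(event);

// Call the handler with a hand-written API Gateway-like event, no deployment needed.
const fakeEvent = { httpMethod: "GET", path: "/careers" };
handler(fakeEvent).then((res) => console.log(res.statusCode, res.body));
```

Keeping the response logic in a pure function such as `buildResponse` also makes it easy to cover with ordinary unit tests.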

DIY

Along with these learnings, we also wish to share a simple starter kit on GitHub, which can help you evaluate this architecture/setup!

GitHub link: https://github.com/belongco/nextjs-serverless-setup

The starter kit will:

Set up the Lambda function

Configure a DynamoDB table for the app to work with

Set up the API Gateway, map its endpoint to a custom domain and create the Route53 entry for it (uses a few plugins to do the domain mapping)

Challenges ahead:

Our use case also requires us to run some long-running cron jobs. Lambda has an inherent limit on execution time (300 seconds at the time of writing), which makes it a poor candidate for tasks that run longer than that.

We are evaluating AWS Fargate and AWS Batch to get those tasks running. Hopefully, we should be back with another blog post covering the implementation details of the same.

About Us:

I am a frontend engineer working with Product teams at Belong. I love building web apps with JS and then regretting why I built them with JS 😅! If not coding, you will find me playing FIFA ⚽️, reading history 📖 or learning cartooning 🎨 !!

I like complicating things. 💥

👩‍🎨 Illustration by Anukriti Vijayavargia

Note: This post was originally posted to Medium here.

Top comments (2)

Hi Phani!

Great post by the way, been waiting for something like this for quite some time now. I’ve followed your example and it worked like a treat.

For some reason unknown to me, now when I deploy my NextJS app using Serverless when I hit the URL it shows 404 for all the resources and the standard 404 NextJS page can be seen.

Did you ever run into an issue like this or sound familiar to you? There’s no errors on deployment and looks all good in the terminal. Surely it’s just some misconfiguration on my part.

Thanks once again! Awesome stuff!

did you resolve mark?