AWS Elastic Kubernetes Service: a cluster creation automation, part 2 — Ansible, eksctl

The first part — AWS Elastic Kubernetes Service: a cluster creation automation, part 1 — CloudFormation.

As a reminder, the whole idea is to automate the creation of an EKS cluster:

- Ansible uses the cloudformation module to create the infrastructure

- using the Outputs of the created CloudFormation stack, Ansible generates a cluster-config file for eksctl from a template

- Ansible calls eksctl with that config-file to create an EKS cluster

All this will be done from a Jenkins job using a Docker image with AWS CLI, Ansible and eksctl. Maybe it will be the third part of this series.

All the resulting files from this post are available in the eksctl-cf-ansible GitHub repository. The link points to the branch with an exact copy of the code below.

Content

- AWS Authentication

- Ansible CloudFormation

- CloudFormation Parameters and Ansible variables

- The CloudFormation role

- Ansible eksctl

- Cluster config — eks-cluster-config.yml

- eksctl create vs update

- TEST: CloudFormation && EKS Custom parameters

- P.S. aws-auth ConfigMap

- Useful links

- Kubernetes

- Ansible

- AWS

- EKS

- CloudFormation

AWS Authentication

It’s worth thinking about authentication beforehand, so that you don’t have to redo everything from scratch (I did).

Also, it’s highly recommended to read the post Kubernetes: part 4 - AWS EKS authentification, aws-iam-authenticator, and AWS IAM, as a lot of things described there are used in this one.

So, we need some AWS authentication mechanism, because we need to authenticate:

- Ansible for its cloudformation role

- Ansible for its eksctl role

- Ansible to execute AWS CLI commands

The least obvious issue here is that the IAM user used to create an EKS cluster becomes its “super-admin”, and you cannot change that.

For example, in the previous part we created the cluster using the following command:

$ eksctl --profile arseniy create cluster -f eks-cluster-config.yml

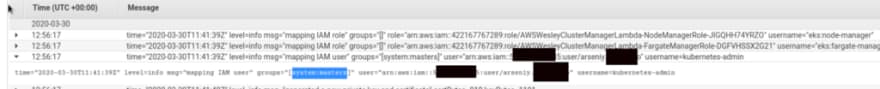

Now, you can check the authenticator logs in the CloudWatch, the very first entries there — and you’ll see the root-suer from the system:masters group with kubernetes-admin permissions:

Well — what can we use for AWS authentification??

- access keys — AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY

- AWS CLI named profiles

- AWS IAM EC2 Instance profiles (see also the post AWS: IAM AssumeRole, description and examples, in Russian)

But since we will use Jenkins here, we can use its IAM EC2 Instance role with the necessary permissions.

For now, I’ve settled on the following scheme:

- manually create an IAM user called eks-root with the EKS Admin policy

- Jenkins will create necessary resources using its IAM Instance role with the EKS Admin policy and will become a “super-admin” for all EKS clusters in an AWS account

- and in Ansible we will add eksctl create iamidentitymapping (see Managing IAM users and roles) to add additional users.

Check the aws-auth ConfigMap now:

$ eksctl --profile arseniy --region eu-west-2 get iamidentitymapping --cluster eks-dev

ARN USERNAME GROUPS

arn:aws:iam::534***385:role/eksctl-eks-dev-nodegroup-worker-n-NodeInstanceRole-UNGXZXVBL3ZP system:node:{{EC2PrivateDNSName}} system:bootstrappers,system:nodes

Or with this:

$ kubectl -n kube-system get cm aws-auth -o yaml

apiVersion: v1
data:
  mapRoles: |
    - groups:
      - system:bootstrappers
      - system:nodes
      rolearn: arn:aws:iam::534***385:role/eksctl-eks-dev-nodegroup-worker-n-NodeInstanceRole-UNGXZXVBL3ZP
      username: system:node:{{EC2PrivateDNSName}}
  mapUsers: |
    []
kind: ConfigMap
…

Let’s do everything manually now to avoid surprises while building the automation:

- create an IAM user

- create an “EKS Admin” policy

- attach this policy to this user

- configure local AWS CLI profile with this user

- configure local kubectl for this AWS CLI profile

- execute eksctl create iamidentitymapping

- execute kubectl get nodes to check access

Go ahead — create the user:

$ aws --profile arseniy --region eu-west-2 iam create-user --user-name eks-root

{
    "User": {
        "Path": "/",
        "UserName": "eks-root",
        "UserId": "AID***PSA",
        "Arn": "arn:aws:iam::534***385:user/eks-root",
        "CreateDate": "2020-03-30T12:24:28Z"
    }
}

Create its access keys:

$ aws --profile arseniy --region eu-west-2 iam create-access-key --user-name eks-root

{
    "AccessKey": {
        "UserName": "eks-root",
        "AccessKeyId": "AKI****45Y",
        "Status": "Active",
        "SecretAccessKey": "Qrr***xIT",
        "CreateDate": "2020-03-30T12:27:28Z"
    }
}

Configure local AWS CLI profile:

$ aws configure --profile eks-root

AWS Access Key ID [None]: AKI***45Y

AWS Secret Access Key [None]: Qrr***xIT

Default region name [None]: eu-west-2

Default output format [None]: json

Try to access EKS now; it must be denied:

$ aws --profile eks-root eks list-clusters

An error occurred (AccessDeniedException) when calling the ListClusters operation […]

Create a policy, here are some examples (later it can be added to the CloudFormation stack as a nested stack, the same way SecurityGroups will be managed), and save it in a dedicated file, ../../cloudformation/files/eks-root-policy.json:

{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": [
                "eks:*"
            ],
            "Resource": "*"
        }
    ]
}

Add this policy to AWS IAM:

$ aws --profile arseniy --region eu-west-2 iam create-policy --policy-name eks-root-policy --policy-document file://../../cloudformation/files/eks-root-policy.json

{
    "Policy": {
        "PolicyName": "eks-root-policy",
        "PolicyId": "ANPAXY5JMBMEROIOD22GM",
        "Arn": "arn:aws:iam::534***385:policy/eks-root-policy",
        "Path": "/",
        "DefaultVersionId": "v1",
        "AttachmentCount": 0,
        "PermissionsBoundaryUsageCount": 0,
        "IsAttachable": true,
        "CreateDate": "2020-03-30T12:44:58Z",
        "UpdateDate": "2020-03-30T12:44:58Z"
    }
}

Attach the policy to the user created above:

$ aws --profile arseniy --region eu-west-2 iam attach-user-policy --user-name eks-root --policy-arn arn:aws:iam::534***385:policy/eks-root-policy

Check:

$ aws --profile arseniy --region eu-west-2 iam list-attached-user-policies --user-name eks-root --output text

ATTACHEDPOLICIES arn:aws:iam::534***385:policy/eks-root-policy eks-root-policy

Try access again:

$ aws --profile eks-root --region eu-west-2 eks list-clusters --output text

CLUSTERS eks-dev

And in another region:

$ aws --profile eks-root --region us-east-2 eks list-clusters --output text

CLUSTERS eksctl-bttrm-eks-production-1

CLUSTERS mobilebackend-dev-eks-0-cluster

The next tasks are better done from a box with no access configured at all, for example from the RTFM blog’s server :-)

Install the AWS CLI:

root@rtfm-do-production:/home/setevoy# pip install awscli

Configure a default profile:

root@rtfm-do-production:/home/setevoy# aws configure

Check access to AWS EKS in general: our IAM policy grants the eks:ListClusters API call, so it must work:

root@rtfm-do-production:/home/setevoy# aws eks list-clusters --output text

CLUSTERS eks-dev

Okay.

Install the eksctl:

root@rtfm-do-production:/home/setevoy# curl --silent --location "https://github.com/weaveworks/eksctl/releases/latest/download/eksctl_$(uname -s)_amd64.tar.gz" | tar xz -C /tmp

root@rtfm-do-production:/home/setevoy# mv /tmp/eksctl /usr/local/bin

Repeat the eks:ListClusters call with eksctl. It must also work, as eksctl will use the default AWS CLI profile configured above:

root@rtfm-do-production:/home/setevoy# eksctl get cluster

NAME REGION

eks-dev eu-west-2

Install kubectl:

root@rtfm-do-production:/home/setevoy# curl -LO https://storage.googleapis.com/kubernetes-release/release/`curl -s https://storage.googleapis.com/kubernetes-release/release/stable.txt`/bin/linux/amd64/kubectl

root@rtfm-do-production:/home/setevoy# chmod +x ./kubectl

root@rtfm-do-production:/home/setevoy# mv ./kubectl /usr/local/bin/kubectl

Install aws-iam-authenticator to let kubectl authenticate in AWS:

root@rtfm-do-production:/home/setevoy# curl -o aws-iam-authenticator https://amazon-eks.s3.us-west-2.amazonaws.com/1.15.10/2020-02-22/bin/linux/amd64/aws-iam-authenticator

root@rtfm-do-production:/home/setevoy# chmod +x ./aws-iam-authenticator

root@rtfm-do-production:/home/setevoy# mv aws-iam-authenticator /usr/local/bin/

Configure kubectl:

root@rtfm-do-production:/home/setevoy# aws eks update-kubeconfig --name eks-dev

Updated context arn:aws:eks:eu-west-2:534***385:cluster/eks-dev in /root/.kube/config

Try to execute a command on the cluster; it must fail with an authorization error:

root@rtfm-do-production:/home/setevoy# kubectl get nodes

error: You must be logged in to the server (Unauthorized)

root@rtfm-do-production:/home/setevoy# kubectl get pod

error: You must be logged in to the server (Unauthorized)

Go back to the working PC and add the eks-root user to the aws-auth ConfigMap:

$ eksctl --profile arseniy --region eu-west-2 create iamidentitymapping --cluster eks-dev --arn arn:aws:iam::534***385:user/eks-root --group system:masters --username eks-root

[ℹ] eksctl version 0.16.0

[ℹ] using region eu-west-2

[ℹ] adding identity "arn:aws:iam::534***385:user/eks-root" to auth ConfigMap

Check it:

$ eksctl --profile arseniy --region eu-west-2 get iamidentitymapping --cluster eks-dev

ARN USERNAME GROUPS

arn:aws:iam::534***385:role/eksctl-eks-dev-nodegroup-worker-n-NodeInstanceRole-UNGXZXVBL3ZP system:node:{{EC2PrivateDNSName}} system:bootstrappers,system:nodes

arn:aws:iam::534***385:user/eks-root eks-root system:masters

Go back to the testing host and try access again:

root@rtfm-do-production:/home/setevoy# kubectl get node

NAME STATUS ROLES AGE VERSION

ip-10-0-40-30.eu-west-2.compute.internal Ready <none> 133m v1.15.10-eks-bac369

ip-10-0-63-187.eu-west-2.compute.internal Ready <none> 133m v1.15.10-eks-bac369

And let’s check something else, the same aws-auth ConfigMap for example:

root@rtfm-do-production:/home/setevoy# kubectl -n kube-system get cm aws-auth -o yaml

apiVersion: v1
data:
  mapRoles: |
    - groups:
      - system:bootstrappers
      - system:nodes
      rolearn: arn:aws:iam::534***385:role/eksctl-eks-dev-nodegroup-worker-n-NodeInstanceRole-UNGXZXVBL3ZP
      username: system:node:{{EC2PrivateDNSName}}
  mapUsers: |
    - groups:
      - system:masters
      userarn: arn:aws:iam::534***385:user/eks-root
      username: eks-root
kind: ConfigMap
…

mapUsers now contains our user, so all good here.

Later we can use this aws-auth to add new users: either add an Ansible task that executes eksctl create iamidentitymapping, or generate our own ConfigMap and upload it to EKS.

I’ll continue with the tasks on our Jenkins host, as vim is available everywhere.

Ansible CloudFormation

CloudFormation Parameters and Ansible variables

Before starting the following tasks, let’s think about the parameters used in the CloudFormation stack from the previous part and what we will need in Ansible.

The first thing that comes to mind is the fact that we will have Dev, Stage and Production clusters, plus dynamic ones for the QA team (so that they can create a dedicated custom cluster from a Jenkins job to test something), so the parameters have to be flexible enough to create a stack in any region with any VPC network.

Thus we will have:

- common parameters for all:

  - region

  - AWS access/secret (or IAM EC2 Instance Profile)

- dedicated parameters for an environment:

  - ENV (dev, stage, prod)

  - VPC CIDR (10.0.0.0/16, 10.1.0.0/16, etc)

  - SSH key for Kubernetes Worker Nodes access

  - AWS EC2 instance types for Kubernetes Worker Nodes (t3.nano, c5.9xlarge, etc)
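As a rough illustration (not part of the repository), the per-environment parameters listed above could be modelled like this in Python. The EnvParams class, its field names and the example-key value are hypothetical; only the example regions, CIDRs and instance types come from the list.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class EnvParams:
    """Hypothetical container for the per-environment parameters."""
    env: str            # dev, stage, prod
    region: str         # common parameter, e.g. eu-west-2
    vpc_cidr: str       # e.g. 10.0.0.0/16
    ssh_key: str        # key pair name for the Worker Nodes
    instance_type: str  # e.g. t3.nano

    def __post_init__(self) -> None:
        # Fail early on a malformed CIDR: ip_network() raises ValueError.
        ipaddress.ip_network(self.vpc_cidr)


if __name__ == "__main__":
    dev = EnvParams("dev", "eu-west-2", "10.0.0.0/16", "example-key", "t3.nano")
    print(dev)
```

Validating the CIDR when the object is created means a typo is caught before any CloudFormation call is made.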

In particular, it’s worth paying attention to the naming of the stacks and clusters.

We will have four names that are used “globally” across the Ansible playbook and roles and that we will see later in the AWS Console:

- an environment name: dev, stage, prod

- a CloudFormation stack name created by us from the cloudformation role (a root stack and its nested stacks)

- a CloudFormation stack name created by eksctl when creating a cluster and its WorkerNodes stacks

- and an EKS cluster name

eksctl, of course, will do some things behind the scenes and will add some prefixes and suffixes to these names.

For example, when creating a stack for the EKS cluster itself, it adds the eksctl- prefix and the -cluster suffix to the CloudFormation stack name, and for the WorkerNodes stacks it uses the eksctl- prefix and the -nodegroup suffix plus the name of the WorkerNodes group from the cluster config file.

Still, the cluster itself will be named exactly as we set it in eks-cluster-config.yml.

So, let’s put together the variables we will use:

- env:

  - format: string “dev”

  - description: used to compose values for other variables

  - result: dev

- eks_cluster_name:

  - format: bttrm-eks-${env}

  - description: sets metadata: name: in the eks-cluster-config.yml cluster config; also used to compose values for other variables and CloudFormation tags

  - result: bttrm-eks-dev

- cf_stack_name:

  - format: eksctl-${eks_cluster_name}-stack

  - description: the -stack suffix notes that this stack contains only AWS-related resources, no EKS

  - result: eksctl-bttrm-eks-dev-stack

- (AUTO) eks_cluster_stack_name:

  - format: eksctl-${eks_cluster_name}-cluster

  - description: created by eksctl itself for its CloudFormation stack, just keeping it in mind

  - result: eksctl-bttrm-eks-dev-cluster

- (AUTO) eks_nodegroup_stack_name:

  - format: eksctl-${eks_cluster_name}-nodegroup-${worker-nodes-name}, where worker-nodes-name is the value of nodeGroups: - name: in the eks-cluster-config.yml cluster config

  - description: created by eksctl itself for its CloudFormation stack, just keeping it in mind

  - result: eksctl-bttrm-eks-dev-nodegroup-worker-nodes
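To double-check how the names above are derived from env, here is a minimal Python sketch of the same formats. The eks_names function is hypothetical and only mirrors the format lines in the list; the worker-nodes group name is an assumed example value.

```python
def eks_names(env: str, nodegroup: str = "worker-nodes", prefix: str = "bttrm-eks") -> dict:
    """Compose the cluster and stack names from an environment name."""
    cluster = f"{prefix}-{env}"
    return {
        "eks_cluster_name": cluster,
        # created by our cloudformation role
        "cf_stack_name": f"eksctl-{cluster}-stack",
        # created by eksctl itself, listed only for reference
        "eks_cluster_stack_name": f"eksctl-{cluster}-cluster",
        "eks_nodegroup_stack_name": f"eksctl-{cluster}-nodegroup-{nodegroup}",
    }


if __name__ == "__main__":
    for key, value in eks_names("dev").items():
        print(f"{key}: {value}")
```

Changing only env (for example to qa-test) changes every derived name, which is the property the variables in group_vars/all.yml rely on.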

As for separate files for different environments: for now we can use a common group_vars/all.yml, and later we will see how to split them.

Create a directory:

$ mkdir group_vars

Create the all.yml file and set initial values:

###################

# ANSIBLE globals #

###################

ansible_connection: local

#####################

# ENV-specific vars #

#####################

env: "dev"

#################

# ROLES globals #

#################

region: "eu-west-2"

# (THIS) eks_cluster_name: used by the EKS service to set the exact cluster name - "{{ eks_cluster_name }}"

# (AUTO) eks_cluster_stack_name: used for CloudFormation service to format a stack's name as "eksctl-{{ eks_cluster_name }}-cluster"

# (AUTO) eks_nodegroup_stack_name: used for CloudFormation service to format a stack's name as "eksctl-{{ eks_cluster_name }}-nodegroup-{{ worker-nodes-name }}"

eks_cluster_name: "bttrm-eks-{{ env }}"

# used by the cloudformation role to set a stack's name

cf_stack_name: "eksctl-{{ eks_cluster_name }}-stack"

##################

# ROLES specific #

##################

# cloudformation role

vpc_cidr_block: "10.0.0.0/16"

Shared variables also go here: region is used by both the cloudformation and eksctl roles, and vpc_cidr_block only by the cloudformation role.

The CloudFormation role

After finishing our templates in the previous part, we should have the following directory and file structure:

$ tree
.
└── roles
    ├── cloudformation
    │   ├── files
    │   │   ├── eks-azs-networking.json
    │   │   ├── eks-region-networking.json
    │   │   └── eks-root.json
    │   ├── tasks
    │   └── templates
    └── eksctl
        ├── tasks
        └── templates
            └── eks-cluster-config.yml

Now, in the repository root create a new file eks-cluster.yml - this will be our Ansible playbook.

Add the cloudformation execution there:

- hosts:
  - all
  become:
    true
  roles:
  - role: cloudformation
    tags: infra

The tags let us run the roles independently later, which will be helpful when we start writing the role for eksctl.

Create a roles/cloudformation/tasks/main.yml file - add the cloudformation module execution here and set necessary parameters for our templates via template_parameters - the VPCCIDRBlock from the vpc_cidr_block variable and EKSClusterName parameter from the eks_cluster_name variable:

- name: "Create EKS {{ cf_stack_name | upper }} CloudFormation stack"
  cloudformation:
    region: "{{ region }}"
    stack_name: "{{ cf_stack_name }}"
    state: "present"
    disable_rollback: true
    template: "/tmp/packed-eks-stacks.json"
    template_parameters:
      VPCCIDRBlock: "{{ vpc_cidr_block }}"
      EKSClusterName: "{{ eks_cluster_name }}"
    tags:
      Stack: "{{ cf_stack_name }}"
      Env: "{{ env }}"
      EKS-cluster: "{{ eks_cluster_name }}"

Didn’t use Ansible for half a year, forgot everything :-(

Google for “ansible inventory” and read the Best Practices.

In the repository root, create an ansible.cfg file with some defaults:

[defaults]

gather_facts = no

inventory = hosts.yml

In the same place create an inventory file — hosts.yml:

all:
  hosts:
    "localhost"

We are using localhost here, as Ansible will not use SSH anywhere: all tasks will be done locally.

Check syntax:

admin@jenkins-production:~/devops-kubernetes$ ansible-playbook eks-cluster.yml --syntax-check

playbook: eks-cluster.yml

Looks good? Generate the packed template file (the Jenkins pipeline will need an additional stage for this):

admin@jenkins-production:~/devops-kubernetes$ cd roles/cloudformation/files/

admin@jenkins-production:~/devops-kubernetes/roles/cloudformation/files$ aws --region eu-west-2 cloudformation package --template-file eks-root.json --output-template /tmp/packed-eks-stacks.json --s3-bucket eks-cloudformation-eu-west-2 --use-json

Successfully packaged artifacts and wrote output template to file /tmp/packed-eks-stacks.json.

Execute the following command to deploy the packaged template

$ aws cloudformation deploy --template-file /tmp/packed-eks-stacks.json --stack-name <YOUR STACK NAME>

Run the stack creation:

admin@jenkins-production:~/devops-kubernetes$ ansible-playbook eks-cluster.yml

…

TASK [cloudformation : Setting the Stack name] ****

ok: [localhost]

TASK [cloudformation : Create EKS EKSCTL-BTTRM-EKS-DEV-STACK CloudFormation stack] ****

changed: [localhost]

PLAY RECAP ****

localhost : ok=3 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

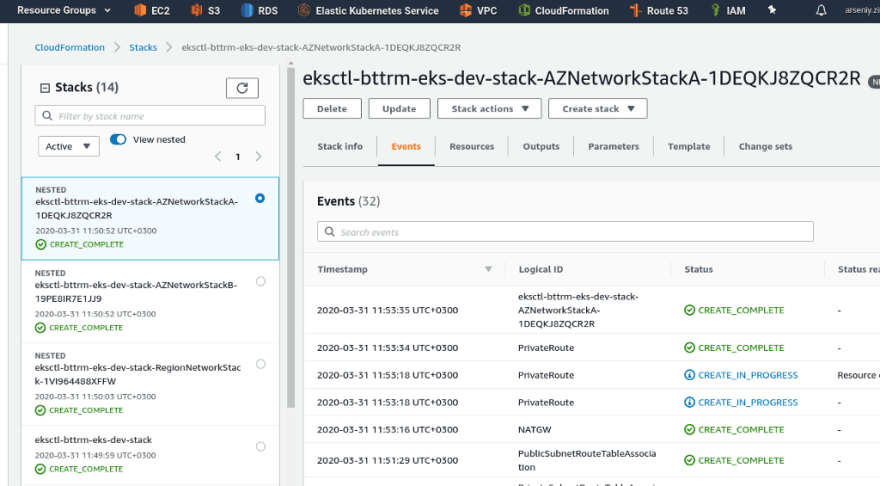

Check:

The stack’s name was set as eksctl-bttrm-eks-dev-stack — nice.

Now, can start writing a role for the eksctl to create a cluster itself.

Ansible eksctl

We already have the cluster config file, so let’s rename it to .j2 to mark it as a Jinja file (Jinja is Ansible’s templating engine):

admin@jenkins-production:~/devops-kubernetes$ mv roles/eksctl/templates/eks-cluster-config.yml roles/eksctl/templates/eks-cluster-config.yml.j2

From the CloudFormation stack created by the first role we need to pass a bunch of values here (VPC ID, subnet IDs, etc.) to set them in the cluster config for eksctl.

Let’s use the Ansible cloudformation_info module to collect information about the created stack.

Add it, save its output to the stack_info variable, and then check its content.

Create a new file roles/eksctl/tasks/main.yml:

- cloudformation_info:
    region: "{{ region }}"
    stack_name: "{{ cf_stack_name }}"
  register: stack_info

- debug:
    msg: "{{ stack_info }}"

Add the eksctl role execution to the eks-cluster.yml playbook, add tags:

- hosts:
  - all
  become:
    true
  roles:
  - role: cloudformation
    tags: infra
  - role: eksctl
    tags: eks

Run using --tags eks to execute the eksctl role only:

admin@jenkins-production:~/devops-kubernetes$ ansible-playbook --tags eks eks-cluster.yml

…

TASK [eksctl : cloudformation_info] ****
ok: [localhost]

TASK [eksctl : debug] ****
ok: [localhost] => {
    "msg": {
        "changed": false,
        "cloudformation": {
            "eksctl-bttrm-eks-dev-stack": {
                "stack_description": {
                    …
                    "outputs": [
                        {
                            "description": "EKS VPC ID",
                            "output_key": "VPCID",
                            "output_value": "vpc-042082cd2d011f44d"
                        },
                        …

Cool.

Now let’s cut out only the outputs block.

Update the task so that msg gets only stack_outputs from stack_info:

- cloudformation_info:
    region: "{{ region }}"
    stack_name: "{{ cf_stack_name }}"
  register: stack_info

- debug:
    msg: "{{ stack_info.cloudformation[cf_stack_name].stack_outputs }}"

Run:

admin@jenkins-production:~/devops-kubernetes$ ansible-playbook --tags eks eks-cluster.yml

…

TASK [eksctl : debug] ****
ok: [localhost] => {
    "msg": {
        "APrivateSubnetID": "subnet-0471e7c28a3770828",
        "APublicSubnetID": "subnet-07a0259b33ddbcb4c",
        "AStackAZ": "eu-west-2a",
        "BPrivateSubnetID": "subnet-0fa6eece43b2b6644",
        "BPublicSubnetID": "subnet-072c107cef77fe859",
        "BStackAZ": "eu-west-2b",
        "VPCID": "vpc-042082cd2d011f44d"
    }
}

…

Niiice!
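Judging by the two debug outputs above, the outputs list under stack_description and the stack_outputs mapping carry the same data in different shapes. A small Python sketch of that conversion, just to make the relation explicit; flatten_outputs is a hypothetical helper, not the module’s actual code:

```python
from typing import Dict, List


def flatten_outputs(outputs: List[Dict[str, str]]) -> Dict[str, str]:
    """Turn [{"output_key": K, "output_value": V}, ...] into {K: V}."""
    return {item["output_key"]: item["output_value"] for item in outputs}


if __name__ == "__main__":
    sample = [
        {
            "description": "EKS VPC ID",
            "output_key": "VPCID",
            "output_value": "vpc-042082cd2d011f44d",
        },
    ]
    print(flatten_outputs(sample))
```

With the flat mapping, a single value can be addressed by its key, which is what the stack_outputs.VPCID style of lookup relies on.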

And now let’s try to set those values as variables and use them to generate the cluster config from its template.

Update roles/eksctl/tasks/main.yml: add a vpc_id variable and print it via debug to check its value:

- cloudformation_info:
    region: "{{ region }}"
    stack_name: "{{ cf_stack_name }}"
  register: stack_info

- debug:
    msg: "{{ stack_info.cloudformation[cf_stack_name].stack_outputs }}"

- set_fact:
    vpc_id: "{{ stack_info.cloudformation[cf_stack_name].stack_outputs.VPCID }}"

- debug:
    msg: "{{ vpc_id }}"

- name: "Check the template's content"
  debug:
    msg: "{{ lookup('template', './eks-cluster-config.yml.j2') }}"

In the “Check the template's content” task, the template lookup plugin renders the template directly, so we can see what the resulting file will look like.

Edit the roles/eksctl/templates/eks-cluster-config.yml.j2 - set the {{ vpc_id }}:

apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig
metadata:
  name: eks-dev
  region: eu-west-2
  version: "1.15"
nodeGroups:
  - name: worker-nodes
    instanceType: t3.medium
    desiredCapacity: 2
    privateNetworking: true
vpc:
  id: "{{ vpc_id }}"
  subnets:
    public:
      eu-west-2a:
...

Check:

admin@jenkins-production:~/devops-kubernetes$ ansible-playbook --tags eks eks-cluster.yml

…

TASK [eksctl : debug] ****
ok: [localhost] => {
    "msg": {
        "APrivateSubnetID": "subnet-0471e7c28a3770828",
        "APublicSubnetID": "subnet-07a0259b33ddbcb4c",
        "AStackAZ": "eu-west-2a",
        "BPrivateSubnetID": "subnet-0fa6eece43b2b6644",
        "BPublicSubnetID": "subnet-072c107cef77fe859",
        "BStackAZ": "eu-west-2b",
        "VPCID": "vpc-042082cd2d011f44d"
    }
}

TASK [eksctl : set_fact] ****
ok: [localhost]

TASK [eksctl : debug] ****
ok: [localhost] => {
    "msg": "vpc-042082cd2d011f44d"
}

TASK [eksctl : Check the template's content] ****
ok: [localhost] => {
    "msg": "apiVersion: eksctl.io/v1alpha5\nkind: ClusterConfig\nmetadata:\n  name: eks-dev\n  region: eu-west-2\n  version: \"1.15\"\nnodeGroups:\n  - name: worker-nodes\n    instanceType: t3.medium\n    desiredCapacity: 2\n    privateNetworking: true\nvpc:\n  id: \"vpc-042082cd2d011f44d\"\n […]

vpc:\n  id: \"vpc-042082cd2d011f44d\": good, we got our VPC ID in the template.

Add the other variables, so the template will look like this:

apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig
metadata:
  name: "{{ eks_cluster_name }}"
  region: "{{ region }}"
  version: "{{ k8s_version }}"
nodeGroups:
  - name: "{{ k8s_worker_nodes_group_name }}"
    instanceType: "{{ k8s_worker_nodes_instance_type }}"
    desiredCapacity: {{ k8s_worker_nodes_capacity }}
    privateNetworking: true
vpc:
  id: "{{ vpc_id }}"
  subnets:
    public:
      {{ a_stack_az }}:
        id: "{{ a_stack_pub_subnet }}"
      {{ b_stack_az }}:
        id: "{{ b_stack_pub_subnet }}"
    private:
      {{ a_stack_az }}:
        id: "{{ a_stack_priv_subnet }}"
      {{ b_stack_az }}:
        id: "{{ b_stack_priv_subnet }}"
  nat:
    gateway: Disable
cloudWatch:
  clusterLogging:
    enableTypes: ["*"]

Next, we need to add the Ansible variables used in this template:

- eks_cluster_name: already added

- vpc_id: already added

- for the AvailabilityZone-A stack, need to add:

  - a_stack_az

  - a_stack_pub_subnet

  - a_stack_priv_subnet

- for the AvailabilityZone-B stack, need to add:

  - b_stack_az

  - b_stack_pub_subnet

  - b_stack_priv_subnet

- region: already added, will be set later from Jenkins parameters

- k8s_version

- k8s_worker_nodes_group_name

- k8s_worker_nodes_instance_type

- k8s_worker_nodes_capacity

Add the set_fact values to roles/eksctl/tasks/main.yml:

- cloudformation_info:
    region: "{{ region }}"
    stack_name: "{{ cf_stack_name }}"
  register: stack_info

- debug:
    msg: "{{ stack_info.cloudformation[cf_stack_name].stack_outputs }}"

- set_fact:
    vpc_id: "{{ stack_info.cloudformation[cf_stack_name].stack_outputs.VPCID }}"
    a_stack_az: "{{ stack_info.cloudformation[cf_stack_name].stack_outputs.AStackAZ }}"
    a_stack_pub_subnet: "{{ stack_info.cloudformation[cf_stack_name].stack_outputs.APublicSubnetID }}"
    a_stack_priv_subnet: "{{ stack_info.cloudformation[cf_stack_name].stack_outputs.APrivateSubnetID }}"
    b_stack_az: "{{ stack_info.cloudformation[cf_stack_name].stack_outputs.BStackAZ }}"
    b_stack_pub_subnet: "{{ stack_info.cloudformation[cf_stack_name].stack_outputs.BPublicSubnetID }}"
    b_stack_priv_subnet: "{{ stack_info.cloudformation[cf_stack_name].stack_outputs.BPrivateSubnetID }}"

- name: "Check the template's content"
  debug:
    msg: "{{ lookup('template', './eks-cluster-config.yml.j2') }}"

In the group_vars/all.yml add values for the eksctl role:

...

##################

# ROLES specific #

##################

# cloudformation role

vpc_cidr_block: "10.0.0.0/16"

# eksctl role

k8s_version: "1.15"

k8s_worker_nodes_group_name: "worker-nodes"

k8s_worker_nodes_instance_type: "t3.medium"

k8s_worker_nodes_capacity: 2

Let’s check, for now the same way, by calling the template directly:

…

TASK [eksctl : Check the template's content] ****
ok: [localhost] => {
    "msg": "apiVersion: eksctl.io/v1alpha5\nkind: ClusterConfig\nmetadata:\n  name: \"bttrm-eks-dev\"\n  region: \"eu-west-2\"\n  version: \"1.15\"\nnodeGroups:\n  - name: \"worker-nodes\"\n    instanceType: \"t3.medium\"\n    desiredCapacity: 2\n    privateNetworking: true\nvpc:\n  id: \"vpc-042082cd2d011f44d\"\n  subnets:\n    public:\n      eu-west-2a:\n        id: \"subnet-07a0259b33ddbcb4c\"\n      eu-west-2b:\n        id: \"subnet-072c107cef77fe859\"\n    private:\n      eu-west-2a:\n        id: \"subnet-0471e7c28a3770828\"\n      eu-west-2b:\n        id: \"subnet-0fa6eece43b2b6644\"\n  nat:\n    gateway: Disable\ncloudWatch:\n  clusterLogging:\n    enableTypes: [\"*\"]\n"
}

…

“It works!” ©.
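To see what the rendering step does outside of Ansible, here is a rough Python sketch. It uses the standard library’s string.Template as a stand-in for Jinja2, so placeholders are written as $name instead of {{ name }}, and the template is a shortened, assumed excerpt of the cluster config rather than the real file.

```python
from string import Template

# Shortened stand-in for eks-cluster-config.yml.j2 (assumed excerpt).
CLUSTER_CONFIG = Template(
    "metadata:\n"
    '  name: "$eks_cluster_name"\n'
    '  region: "$region"\n'
    "vpc:\n"
    '  id: "$vpc_id"\n'
)


def render(values: dict) -> str:
    """Fill the placeholders; a missing value raises KeyError."""
    return CLUSTER_CONFIG.substitute(values)


if __name__ == "__main__":
    print(render({
        "eks_cluster_name": "bttrm-eks-dev",
        "region": "eu-west-2",
        "vpc_id": "vpc-042082cd2d011f44d",
    }))
```

The point is the same as with the debug task: render first, look at the text, and only then hand the file over to eksctl.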

Well, now it’s time to run eksctl.

Cluster config — eks-cluster-config.yml

Before calling eksctl we need to generate the config from the template.

At the end of roles/eksctl/tasks/main.yml add a template task that uses roles/eksctl/templates/eks-cluster-config.yml.j2 to generate an eks-cluster-config.yml file in the /tmp directory:

...

- name: "Generate eks-cluster-config.yml"
  template:
    src: "eks-cluster-config.yml.j2"
    dest: /tmp/eks-cluster-config.yml

eksctl create vs update

A note: actually, eksctl update performs a version upgrade rather than a configuration update, but I’ll leave this part here as an example.

By the way, what if the cluster already exists? The job will fail, as we are passing create.

Well, we can add a check for whether the cluster is already present and store create or update in a variable, similar to what I did in the post BASH: a script to create an AWS CloudFormation stack (in Russian).

Thus, we need to:

- get a list of the EKS clusters in an AWS region

- try to find the name of the cluster being created in this list

- if not found then set value create

- if found then set value update
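The decision described in the list above, as a minimal Python sketch. It assumes the cluster names arrive as one TAB-separated line, the way the AWS CLI prints a list with --output text; the function names are hypothetical.

```python
from typing import List


def parse_clusters(stdout: str) -> List[str]:
    """Split the TAB-separated CLI output into a list of cluster names."""
    return [name for name in stdout.strip().split("\t") if name]


def eksctl_action(cluster_name: str, stdout: str) -> str:
    """Return 'update' if the cluster is already listed, otherwise 'create'."""
    return "update" if cluster_name in parse_clusters(stdout) else "create"


if __name__ == "__main__":
    listing = "eks-dev"
    print(eksctl_action("bttrm-eks-dev", listing))
    print(eksctl_action("eks-dev", listing))
```

Comparing against a parsed list rather than the raw string matters: a plain substring check would treat eks-dev as present whenever bttrm-eks-dev is listed.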

Let’s try to do it in this way:

# populate a clusters_exist list with names of clusters divided by TAB "\t"
- name: "Getting existing clusters list"
  shell: "aws --region {{ region }} eks list-clusters --query '[clusters]' --output text"
  register: clusters_exist

- debug:
    msg: "{{ clusters_exist.stdout }}"

# create a list from the clusters_exist
- set_fact:
    found_clusters_list: "{{ clusters_exist.stdout.split('\t') }}"

- debug:
    msg: "{{ found_clusters_list }}"

# check if a cluster's name is found in the existing clusters list
- fail:
    msg: "{{ eks_cluster_name }} already exist in the {{ region }}"
  when: eks_cluster_name not in found_clusters_list

- meta: end_play

...

While testing, add these tasks to the beginning of roles/eksctl/tasks/main.yml, and add - meta: end_play after them to stop the execution there.

The fail condition is set to “not in” for now, as we are just testing and no cluster with this name has been created yet:

…

TASK [eksctl : debug] ****
ok: [localhost] => {
    "msg": "eks-dev"
}

TASK [eksctl : set_fact] ****
ok: [localhost]

TASK [eksctl : debug] ****
ok: [localhost] => {
    "msg": [
        "eks-dev"
    ]
}

TASK [eksctl : fail] ****
fatal: [localhost]: FAILED! => {"changed": false, "msg": "bttrm-eks-dev already exist in the eu-west-2"}

…

Cool.

Now add an eksctl_action variable set to "create" or "update", depending on whether the cluster was found:

# populate a clusters_exist list with names of clusters divided by TAB "\t"
- name: "Getting existing clusters list"
  shell: "aws --region {{ region }} eks list-clusters --query '[clusters]' --output text"
  register: clusters_exist

- debug:
    msg: "{{ clusters_exist.stdout }}"

# create a list from the clusters_exist
- set_fact:
    found_clusters_list: "{{ clusters_exist.stdout.split('\t') }}"

- debug:
    msg: "{{ found_clusters_list }}"

- set_fact:
    eksctl_action: "{{ 'create' if (eks_cluster_name not in found_clusters_list) else 'update' }}"

- debug:
    var: eksctl_action

# check if a cluster's name is found in the existing clusters list
- fail:
    msg: "{{ eks_cluster_name }} already exist in the {{ region }}"
  when: eks_cluster_name not in found_clusters_list

...

Check:

…

TASK [eksctl : debug] ****
ok: [localhost] => {
    "eksctl_action": "create"
}

…

Next, use it in the eksctl task: pass {{ eksctl_action }} in its arguments instead of the hardcoded "create":

- cloudformation_info:
    region: "{{ region }}"
    stack_name: "{{ cf_stack_name }}"
  register: stack_info

- set_fact:
    vpc_id: "{{ stack_info.cloudformation[cf_stack_name].stack_outputs.VPCID }}"
    a_stack_az: "{{ stack_info.cloudformation[cf_stack_name].stack_outputs.AStackAZ }}"
    a_stack_pub_subnet: "{{ stack_info.cloudformation[cf_stack_name].stack_outputs.APublicSubnetID }}"
    a_stack_priv_subnet: "{{ stack_info.cloudformation[cf_stack_name].stack_outputs.APrivateSubnetID }}"
    b_stack_az: "{{ stack_info.cloudformation[cf_stack_name].stack_outputs.BStackAZ }}"
    b_stack_pub_subnet: "{{ stack_info.cloudformation[cf_stack_name].stack_outputs.BPublicSubnetID }}"
    b_stack_priv_subnet: "{{ stack_info.cloudformation[cf_stack_name].stack_outputs.BPrivateSubnetID }}"

- name: "Generate eks-cluster-config.yml"
  template:
    src: "eks-cluster-config.yml.j2"
    dest: /tmp/eks-cluster-config.yml

# populate a clusters_exist list with names of clusters divided by TAB "\t"
- name: "Getting existing clusters list"
  command: "aws --region {{ region }} eks list-clusters --query '[clusters]' --output text"
  register: clusters_exist

# create a list from the clusters_exist
- set_fact:
    found_clusters_list: "{{ clusters_exist.stdout.split('\t') }}"

- name: "Setting eksctl action to either Create or Update"
  set_fact:
    eksctl_action: "{{ 'create' if (eks_cluster_name not in found_clusters_list) else 'update' }}"

- name: "Running eksctl eksctl_action {{ eksctl_action | upper }} cluster with name {{ eks_cluster_name | upper }}"
  command: "eksctl {{ eksctl_action }} cluster -f /tmp/eks-cluster-config.yml"

The moment of truth! :-)

Run:

admin@jenkins-production:~/devops-kubernetes$ ansible-playbook --tags eks eks-cluster.yml

…

TASK [eksctl : cloudformation_info] ****

ok: [localhost]

TASK [eksctl : set_fact] ****

ok: [localhost]

TASK [eksctl : Generate eks-cluster-config.yml] ****

ok: [localhost]

TASK [eksctl : Getting existing clusters list] ****

changed: [localhost]

TASK [eksctl : set_fact] ****

ok: [localhost]

TASK [eksctl : Setting eksctl action to either Create or Update] ****

ok: [localhost]

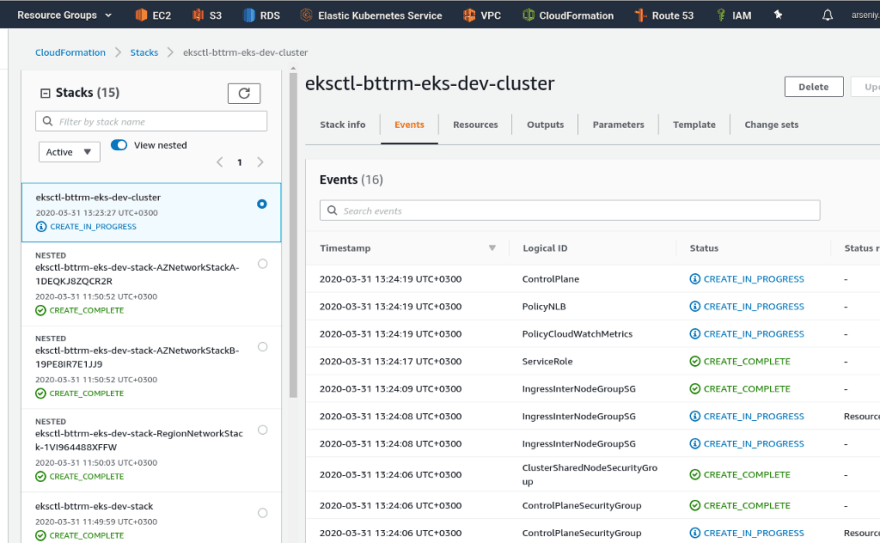

TASK [eksctl : Running eksctl eksctl_action CREATE cluster with name BTTRM-EKS-DEV]

The stack and the cluster are being created, and the stack’s name is eksctl-bttrm-dev-cluster, just as we planned:

Hurray!

Just one more test is left.

TEST: CloudFormation && EKS Custom parameters

The last thing to test is the flexibility of everything we wrote.

For example, a QA team wants to create a brand new cluster named qa-test with VPC CIDR 10.1.0.0/16.

Update the group_vars/all.yml, set:

- env: "dev" > "qa-test"

- region: "eu-west-2" > "eu-west-3"

- vpc_cidr_block: "10.0.0.0/16" > "10.1.0.0/16"

...

env: "qa-test"

...

region: "eu-west-3"

...

vpc_cidr_block: "10.1.0.0/16"

Re-pack the template to regenerate the packed-eks-stacks.json:

admin@jenkins-production:~/devops-kubernetes$ cd roles/cloudformation/files/

admin@jenkins-production:~/devops-kubernetes/roles/cloudformation/files$ aws --region eu-west-3 cloudformation package --template-file eks-root.json --output-template packed-eks-stacks.json --s3-bucket eks-cloudformation-eu-west-3 --use-json
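Note that the region appears twice in this command: in `--region` and in the bucket name. A small sketch of a helper that keeps the two in sync is shown below. It only prints the command (a dry run). The helper name `build_package_cmd` is hypothetical, and it assumes one bucket per region named `eks-cloudformation-<region>` that already exists. It also spells out the full `--output-template-file` flag name.

```shell
# Print the `aws cloudformation package` command for the given region.
# Assumption: the S3 bucket follows the eks-cloudformation-<region> pattern.
build_package_cmd() {
  region="$1"
  printf 'aws --region %s cloudformation package --template-file eks-root.json --output-template-file packed-eks-stacks.json --s3-bucket eks-cloudformation-%s --use-json\n' "$region" "$region"
}

# Dry run: show what would be executed for the QA region
build_package_cmd "eu-west-3"
```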

Run Ansible with no --tags to create CloudFormation stacks and EKS cluster from scratch:

admin@jenkins-production:~/devops-kubernetes$ ansible-playbook eks-cluster.yml

…

TASK [cloudformation : Create EKS EKSCTL-BTTRM-EKS-QA-TEST-STACK CloudFormation stack] ****

changed: [localhost]

TASK [eksctl : cloudformation_info] ****

ok: [localhost]

TASK [eksctl : set_fact] ****

ok: [localhost]

TASK [eksctl : Generate eks-cluster-config.yml] ****

changed: [localhost]

TASK [eksctl : Getting existing clusters list] ****

changed: [localhost]

TASK [eksctl : set_fact] ****

ok: [localhost]

TASK [eksctl : Setting eksctl action to either Create or Update] ****

ok: [localhost]

TASK [eksctl : Running eksctl eksctl_action CREATE cluster with name BTTRM-EKS-QA-TEST] ****

…

Wait, check:

Yay!

Actually — that’s all.

The final thing will be to create a Jenkins-job.

Oh, wait! Forgot about ConfigMap.

P.S. aws-auth ConfigMap

No matter how hard you try — you’ll forget something.

So, we need to add the eks-root user, created at the very beginning of this post, to the cluster we are creating.

It can be done with one command and two variables.

A note: this will append the user every time the provisioning runs, so in my final solution I did it in a slightly different way. For now I’m leaving it here “as is” and will add a better way later.

In group_vars/all.yml, add the user's name and ARN:

...

eks_root_user_name: "eks-root"

eks_root_user_arn: "arn:aws:iam::534***385:user/eks-root"

And in roles/eksctl/tasks/main.yml, add the user to the cluster:

...

- name: "Update aws-auth ConfigMap with the EKS root user {{ eks_root_user_name | upper }}"
  command: "eksctl create iamidentitymapping --cluster {{ eks_cluster_name }} --arn {{ eks_root_user_arn }} --group system:masters --username {{ eks_root_user_name }}"
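Regarding the note above about the user being appended on every run: one possible way to avoid it (not necessarily the one used in the final solution) is to check the existing mappings first. The sketch below reads a mapping listing from stdin and reports whether an ARN is already present. The function name `arn_is_mapped`, the `example-node-role` role and the sample table are illustrative assumptions, and the real `eksctl get iamidentitymapping` output may be formatted differently.

```shell
# Succeed (exit code 0) if the given ARN appears in the listing read from stdin.
arn_is_mapped() {
  # -F: match the ARN as a fixed string, -q: quiet, only set the exit code
  grep -Fq -- "$1"
}

# Illustrative sample of a mapping listing (hypothetical role name)
sample_mappings='ARN USERNAME GROUPS
arn:aws:iam::534***385:role/example-node-role system:node:{{EC2PrivateDNSName}} system:bootstrappers,system:nodes'

if printf '%s\n' "$sample_mappings" | arn_is_mapped "arn:aws:iam::534***385:user/eks-root"; then
  echo "already mapped, skipping"
else
  echo "not mapped, the create iamidentitymapping command would run here"
fi
```

In a real run the listing would come from `eksctl get iamidentitymapping --cluster <name>` instead of the sample variable. This is a plain substring match, so an ARN that is a prefix of another one would also match.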

Run:

…

TASK [eksctl : Running eksctl eksctl_action UPDATE cluster with name BTTRM-EKS-QA-TEST] ****

changed: [localhost]

TASK [eksctl : Update aws-auth ConfigMap with the EKS root user EKS-ROOT] ****

changed: [localhost]

…

Go back to the RTFM server, where we have “clean” access for the eks-root user only, and update its ~/.kube/config:

root@rtfm-do-production:/home/setevoy# aws --region eu-west-3 eks update-kubeconfig --name bttrm-eks-qa-test

Added new context arn:aws:eks:eu-west-3:53***385:cluster/bttrm-eks-qa-test to /root/.kube/config

Try to access nodes info:

root@rtfm-do-production:/home/setevoy# kubectl get node

NAME STATUS ROLES AGE VERSION

ip-10-1-40-182.eu-west-3.compute.internal Ready <none> 94m v1.15.10-eks-bac369

ip-10-1-52-14.eu-west-3.compute.internal Ready <none> 94m v1.15.10-eks-bac369
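If this check is later moved into an automated job, the node listing above could be verified by a script rather than by eye. Below is a minimal sketch that counts the nodes whose STATUS column is not exactly `Ready`. The function name `count_not_ready` is made up for this example, and the sample input is the output shown above.

```shell
# Read `kubectl get node` output (including the header line) from stdin
# and print the number of nodes whose STATUS is not exactly "Ready".
count_not_ready() {
  awk 'NR > 1 && $2 != "Ready" { n++ } END { print n + 0 }'
}

# The listing from above, used as sample input
sample_nodes='NAME STATUS ROLES AGE VERSION
ip-10-1-40-182.eu-west-3.compute.internal Ready <none> 94m v1.15.10-eks-bac369
ip-10-1-52-14.eu-west-3.compute.internal Ready <none> 94m v1.15.10-eks-bac369'

printf '%s\n' "$sample_nodes" | count_not_ready   # prints: 0
```

With real data this would be `kubectl get node | count_not_ready`. A cordoned node (`Ready,SchedulingDisabled`) is counted as not ready by this strict comparison.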

And pods:

root@rtfm-do-production:/home/setevoy# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

aws-node-rgpn5 1/1 Running 0 95m

aws-node-xtr6m 1/1 Running 0 95m

coredns-7ddddf5cc7-5w5wt 1/1 Running 0 102m

…

Done.

Useful links

Kubernetes

- Introduction to Kubernetes Pod Networking

- Kubernetes on AWS: Tutorial and Best Practices for Deployment

- How to Manage Kubernetes With Kubectl

- Building large clusters

- Kubernetes production best practices

Ansible

AWS

EKS

- kubernetes cluster on AWS EKS

- EKS vs GKE vs AKS — Evaluating Kubernetes in the Cloud

- Modular and Scalable Amazon EKS Architecture

- Build a kubernetes cluster with eksctl

- Amazon EKS Security Group Considerations

CloudFormation

- Managing AWS Infrastructure as Code using Ansible, CloudFormation, and CodeBuild

- Nested CloudFormation Stack: a guide for developers and system administrators

- Walkthrough with Nested CloudFormation Stacks

- How do I pass CommaDelimitedList parameters to nested stacks in AWS CloudFormation?

- How do I use multiple values for individual parameters in an AWS CloudFormation template?

- Two years with CloudFormation: lessons learned

- Shrinking Bloated CloudFormation Templates With Nested Stack

- CloudFormation Best-Practices

- 7 Awesome CloudFormation Hacks

- AWS CloudFormation Best Practices — Certification

- Defining Resource Properties Conditionally Using AWS::NoValue on CloudFormation

Originally published at RTFM: Linux, DevOps и системное администрирование.
