Have you ever deployed code from one environment to another only to have to spend time reconfiguring files, re-downloading libraries and redoing work because the operating system or general environment was slightly different from the development environment?

For developers, simply porting an application into a new environment used to be surprisingly time-consuming.

Not only that, applications were often tied to the OS they could run on and required far more resources.

Much of this has changed with the popularization of containers.

What is a Container and Containerization?

Simply put, a container packs up code along with its dependencies so it can run reliably and efficiently across different environments. Containerization refers to bundling an application with the libraries, dependencies and configuration files it needs to run consistently across multiple computing environments.

Benefits of Containerization

We want to discuss three technologies that you will likely run into as a developer. But first, in case you haven't used a container, let's go over some of the benefits of containers:

- Resource Efficiency: A major benefit is efficient use of resources. Containers share the host's kernel instead of each bundling a separate OS, so they take up fewer resources. Some might argue that containers offer similar benefits to virtual machines. However, virtual machine images are often gigabytes in size, while a container image is usually in the megabyte range.

- Platform Independence: Portability is another huge benefit. Because a container wraps all of the application's dependencies, you can easily run the application in different environments, on both public and virtual servers. This portability gives organizations a great deal of flexibility: it speeds up the development process and makes it easier for developers to switch to other cloud environments.

- Effective Resource Sharing: Although containers run on the same server and share the same resources, they are isolated from one another by default. Therefore, if one application crashes, the others keep running. This combination of resource sharing and isolation also reduces security risks, since the negative effects of one application do not spread to the other running containers.

- Predictable Environment: Containers allow developers to create predictable environments that are isolated from other applications. As a result, an application behaves consistently no matter where it is deployed.

- Smooth Scaling: Another major benefit of containers is horizontal scaling. When working in a cluster environment, you can add identical containers to scale out. With smart scaling procedures, you can run only the containers you need at any given moment, which can reduce resource costs substantially and improve your return on investment. This approach is used by major companies like Twitter, Netflix and Google.
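
To make the horizontal scaling idea concrete, here is a minimal Python sketch. It is not tied to any real orchestrator: the function names, the `web-N` replica names and the capacity numbers are all assumptions made up for illustration. It estimates how many identical replicas a given load needs and spreads requests across them in round-robin order.

```python
import math
from itertools import cycle
from typing import Dict, List


def replicas_needed(requests_per_second: float,
                    capacity_per_replica: float,
                    minimum: int = 1) -> int:
    """Return how many identical replicas are needed to serve the load."""
    if capacity_per_replica <= 0:
        raise ValueError("capacity_per_replica must be positive")
    return max(minimum, math.ceil(requests_per_second / capacity_per_replica))


def distribute(requests: List[str], replica_count: int) -> Dict[str, List[str]]:
    """Assign requests to replicas in round-robin order."""
    # Hypothetical replica names; every replica runs the same container image.
    names = [f"web-{i}" for i in range(replica_count)]
    assignment: Dict[str, List[str]] = {name: [] for name in names}
    for request, name in zip(requests, cycle(names)):
        assignment[name].append(request)
    return assignment


if __name__ == "__main__":
    count = replicas_needed(requests_per_second=250, capacity_per_replica=100)
    print(count)
    print(distribute(["r1", "r2", "r3", "r4", "r5"], count))
```

Because every replica is identical, scaling out is just a matter of changing the replica count; nothing about the application itself has to change.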

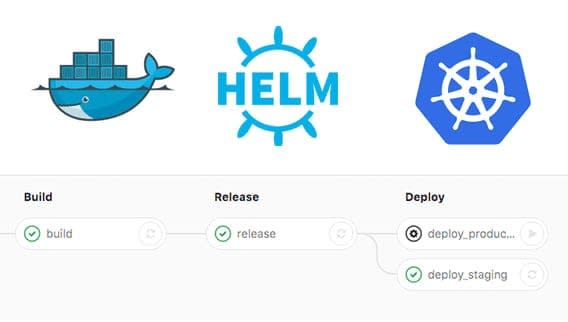

The Evolution Of Container Management From Docker To Helm

Although containers have provided many advantages, they have also introduced a host of new complexities, especially as the concept of microservices became more prominent.

It became important not only to create containers but also to manage them well. Below we will discuss three of the currently popular container and container management technologies.

Docker

You can't talk about containers without bringing up Docker.

Many people might assume that Docker was the first container technology, but that's not the case. Linux Academy has a great little bit of history on containers if you would like to learn more.

Now on to Docker.

Docker is an open-source project built on Linux container technology. The container engine was originally developed at dotCloud, the company that later became Docker, Inc. Docker lets developers focus on writing code without having to worry about the system the application will run on.

Docker has a client-server architecture. The Docker server (the daemon) is responsible for all container-related actions, and it receives commands from the Docker client through the CLI or the REST API, for example "docker run" or "docker build".
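
The client-server split can be sketched with a toy model in Python. This does not use Docker or its real API: `ToyDaemon`, `ToyClient` and the simplified `build <tag>` / `run <image>` command strings are hypothetical stand-ins. The point is only that the client translates commands into requests, while the server owns all image and container state.

```python
import itertools
from typing import Dict, List


class ToyDaemon:
    """Stands in for the Docker server: it owns all image and container state."""

    def __init__(self) -> None:
        self.images: List[str] = []
        self.containers: Dict[str, str] = {}  # container id -> image name
        self._ids = itertools.count(1)

    def handle(self, request: Dict[str, str]) -> Dict[str, str]:
        """Process one request and return a response, like an API endpoint."""
        action = request.get("action")
        if action == "build":
            self.images.append(request["tag"])
            return {"status": "built", "image": request["tag"]}
        if action == "run":
            image = request["image"]
            if image not in self.images:
                return {"status": "error", "message": f"unknown image: {image}"}
            container_id = f"c{next(self._ids)}"
            self.containers[container_id] = image
            return {"status": "running", "id": container_id}
        return {"status": "error", "message": f"unsupported action: {action}"}


class ToyClient:
    """Stands in for the CLI: it only turns command lines into requests."""

    def __init__(self, daemon: ToyDaemon) -> None:
        self.daemon = daemon

    def command(self, line: str) -> Dict[str, str]:
        parts = line.split()
        # Simplified, made-up syntax; the real CLI takes many more options.
        if len(parts) == 2 and parts[0] == "build":
            return self.daemon.handle({"action": "build", "tag": parts[1]})
        if len(parts) == 2 and parts[0] == "run":
            return self.daemon.handle({"action": "run", "image": parts[1]})
        return {"status": "error", "message": f"cannot parse: {line}"}


if __name__ == "__main__":
    client = ToyClient(ToyDaemon())
    print(client.command("build myapp"))
    print(client.command("run myapp"))
```

Note that the client holds no state of its own, so any other client talking to the same server would see the same images and containers.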

There are further nuances worth looking into. We recommend reading Stackify's "Docker Image vs Container: Everything You Need to Know", as it covers some of these very well.

Kubernetes

Initially developed by Google, Kubernetes is an open-source system used for managing containerized applications in different environments. The main aim of this project is to offer better ways for managing services or components of an application across varied infrastructures. The Kubernetes platform allows you to define how your application should run or interact with the environment. As a user, you can scale your services and perform updates conveniently.

The Kubernetes system is built in layers, with each layer abstracting the complexity found in the lower levels. To begin with, the base layer brings virtual and physical machines together into a cluster over a shared network. One of the servers functions as the master server and acts as a gateway, exposing an API for clients and users.

The other machines act as nodes that receive instructions from the master on how to manage the different workloads. Each node needs to have some form of container software running on it so that it can properly follow the instructions provided by the master. For a really good understanding of this topic, DigitalOcean elaborates on it further here. You can also take a free course from Google Cloud on Coursera.
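
The master and node relationship described above can be illustrated with a small Python sketch. It is a toy model, not the Kubernetes API: `ToyMaster`, `ToyNode`, `apply` and `reconcile` are hypothetical names, and the "least-loaded node" placement rule is an assumption chosen for simplicity. A client declares the desired number of replicas, and the master instructs nodes until the actual state matches.

```python
from typing import Dict, Iterable, List


class ToyNode:
    """A machine in the cluster that runs whatever the master assigns to it."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.workloads: List[str] = []


class ToyMaster:
    """Holds the desired state and tells nodes what to run."""

    def __init__(self, nodes: Iterable[ToyNode]) -> None:
        self.nodes = list(nodes)
        self.desired: Dict[str, int] = {}  # app name -> wanted replica count

    def apply(self, app: str, replicas: int) -> None:
        """Record the desired state, as a client would through the API."""
        self.desired[app] = replicas

    def running(self, app: str) -> int:
        """Count replicas of the app currently placed on any node."""
        return sum(node.workloads.count(app) for node in self.nodes)

    def reconcile(self) -> None:
        """Start or stop workloads until the actual state matches the desired one."""
        for app, wanted in self.desired.items():
            # Scale up: put each missing replica on the least-loaded node.
            while self.running(app) < wanted:
                target = min(self.nodes, key=lambda n: len(n.workloads))
                target.workloads.append(app)
            # Scale down: remove surplus replicas from the busiest node first.
            while self.running(app) > wanted:
                hosts = [n for n in self.nodes if app in n.workloads]
                target = max(hosts, key=lambda n: len(n.workloads))
                target.workloads.remove(app)


if __name__ == "__main__":
    master = ToyMaster([ToyNode("node-a"), ToyNode("node-b"), ToyNode("node-c")])
    master.apply("web", 4)
    master.reconcile()
    for node in master.nodes:
        print(node.name, node.workloads)
```

The user only states what they want (four replicas of `web`); deciding which node runs what is left to the master.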

Helm

Helm is an application package manager that runs atop Kubernetes. It allows you to describe an application's structure through Helm charts and manage it with simple commands. Helm is a drastic shift, as it has redefined how server-side applications are defined, stored and managed. It also simplifies the adoption of microservices, so you can use several small services instead of a monolithic application. Moreover, you can compose new applications out of existing, loosely coupled microservices.

The tool streamlines the installation and management of Kubernetes applications by rendering templates and communicating with the Kubernetes API. Charts can be stored on disk or fetched from chart repositories, much like Debian or Red Hat packages.

How Does It Work?

Helm groups the logical components of an application into a "chart" so you can deploy and maintain them conveniently over an extended period of time. Each time a chart is deployed to a cluster, Helm creates a release for it (in Helm 2 this was done by a server-side component called Tiller, which Helm 3 removed). The release tracks the application's deployment over time. With Helm, you can deploy virtually anything, from a Redis cache to complex web apps.
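
The chart and release idea can be sketched in a few lines of Python. This is a rough analogy rather than how Helm is implemented: real charts use Go templates and YAML manifests, while this sketch uses Python's `string.Template`, and `CHART_TEMPLATE`, `DEFAULT_VALUES` and `ToyReleaseStore` are hypothetical names. A template plus values is rendered into a manifest, and every deployment under the same release name is recorded as a new revision.

```python
from string import Template
from typing import Dict, List, Optional

# A stand-in for a chart: a template plus default values.
CHART_TEMPLATE = Template(
    "name: $name\n"
    "image: $image\n"
    "replicas: $replicas\n"
)
DEFAULT_VALUES = {"name": "cache", "image": "redis", "replicas": 1}


class ToyReleaseStore:
    """Keeps the rendered manifest of every revision of every release."""

    def __init__(self) -> None:
        self.history: Dict[str, List[str]] = {}

    def deploy(self, release: str, overrides: Optional[dict] = None) -> int:
        """Render the chart with the given values and record a new revision."""
        values = {**DEFAULT_VALUES, **(overrides or {})}
        manifest = CHART_TEMPLATE.substitute(values)
        self.history.setdefault(release, []).append(manifest)
        return len(self.history[release])  # revision number, starting at 1


if __name__ == "__main__":
    store = ToyReleaseStore()
    print(store.deploy("my-cache"))                   # first revision
    print(store.deploy("my-cache", {"replicas": 3}))  # upgrade with new values
    print(store.history["my-cache"][-1])
```

Keeping every rendered revision is what makes it possible to see how a deployment changed over time.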

Conclusion

Containerization is not a new concept, and Docker is not the only technology designed to let developers easily configure their containers. However, understanding the benefits of containers and knowing how to use them makes a big difference for developers, since containers simplify both the deployment and the maintenance of code.

Let me know your thoughts below.
