Photo by Zbynek Burival on Unsplash.

One of the most effective ways to improve your chances of being selected for a data engineering position is to add a well-documented portfolio of software projects to your resume.

It not only improves your skills but also provides a tangible final product you can discuss with your recruiter.

We previously shared an article that covered five examples of data engineering projects you could take on. But what if you want to make your own?

Where should you start?

Often, finding the right dataset is the hardest part of putting together an effective project.

To help you get started, this article curates a list of data sources you can use for data engineering projects. I will also outline a few ways other developers have used these datasets, so you have something to draw inspiration from.

1. San Francisco Open Data's API

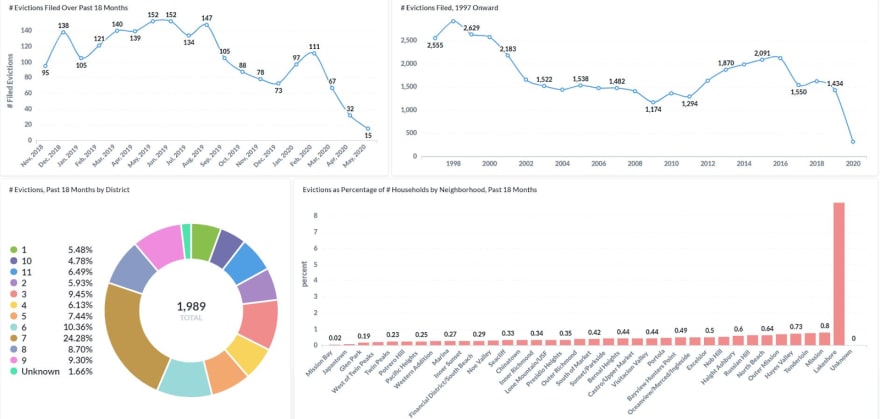

The first data source comes from San Francisco Open Data's API. The local San Francisco government has done a tremendous job of tracking data from a large variety of publishing departments including Treasurer-Tax Collector, Airport (SFO), and the Municipal Transportation Agency, to name a few. Ilya Galperin outlined an apt data engineering application of this data source, tracking eviction trends by district, filing reason, neighborhood, and demographic.

This study was especially interesting because it covered the months following the onset of COVID-19, allowing for exploratory analysis of how the pandemic affected various subsets of San Francisco. It will be years before we fully grasp the intricacies of the pandemic's impact and how it differs across locations and demographics. This project is one small step towards understanding the repercussions of COVID-19 and the disparity of its impact among various groups. Galperin followed the general architecture outlined below:

Source: GitHub

When building a resume project, providing an image like the one shown above is crucial. It allows a potential recruiter to quickly see which packages you have hands-on experience with. In general, it is always best to make the information recruiters look for easy to find. In addition to the architecture, the author also displayed the data model for this project:

Source: GitHub

Although this project is great, I would encourage you to further explore this data source to see everything that this API has to offer. This will allow you to tailor your own project to suit your own interest.
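
As a starting point for that exploration, here is a minimal sketch of how a query against the API might be put together. It assumes the portal is served through Socrata's SODA API, where a dataset lives at `/resource/<dataset-id>.json` and accepts SoQL parameters such as `$select`, `$where`, and `$limit`. The dataset id `xxxx-xxxx`, the field names, and the sample rows are placeholders of mine, not values taken from Galperin's project, so check the portal's documentation before relying on any of them.

```python
from collections import Counter
from urllib.parse import urlencode

# Assumption: datasets are exposed at /resource/<dataset-id>.json (Socrata SODA).
BASE_URL = "https://data.sfgov.org/resource/{dataset_id}.json"


def build_query_url(dataset_id, select=None, where=None, limit=1000):
    """Build a request URL from SoQL parameters ($select, $where, $limit)."""
    params = {"$limit": limit}
    if select:
        params["$select"] = select
    if where:
        params["$where"] = where
    # Keep "$" readable in the parameter names; everything else is escaped.
    return BASE_URL.format(dataset_id=dataset_id) + "?" + urlencode(params, safe="$")


def count_by_field(records, field):
    """Count records per value of `field`; missing values become 'unknown'."""
    return Counter(record.get(field) or "unknown" for record in records)


# Hypothetical rows shaped like a JSON response; real field names may differ.
sample_rows = [
    {"neighborhood": "Mission", "file_date": "2020-04-01"},
    {"neighborhood": "Mission", "file_date": "2020-05-12"},
    {"neighborhood": "Sunset", "file_date": "2020-05-20"},
    {"file_date": "2020-06-02"},
]

url = build_query_url("xxxx-xxxx", where="file_date >= '2020-03-01'", limit=500)
counts = count_by_field(sample_rows, "neighborhood")
print(url)
print(counts.most_common())
```

In a real pipeline you would fetch `url` with an HTTP client and pass the decoded JSON rows to `count_by_field`, or push the grouping into the query itself.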

Lastly, one of the most important aspects of a good resume project is a visually appealing user interface (UI). Although it does not prove knowledge of key aspects of data engineering, your UI shows anyone skimming your repository how much effort you put into the project. Don't let your hard work be diluted by a mediocre visual representation. Shown below is the UI that the author created for this project:

Source: GitHub

2. Big List of Real Estate APIs

Source: Zillow

Continuing with the topic of real estate, the next project is brought to you by the power of Zillow and curated by Patrick Pohler. Here, you can find one of the most comprehensive sets of real estate-related APIs that I have come across. Pohler has done a great job of selecting and categorizing the various resources along with detailing other helpful tools for implementation.

The major categories of this list include:

- Home evaluations

- Neighborhood and community data

- Mortgage rates

- Mortgage calculators

An example of these APIs being implemented into a data engineering pipeline can be found on GitHub. The developer of this repository created a model pipeline that utilizes both historical and current market data to determine the potential return that a local region would yield from a real estate investment. Listed below is the general architecture of the author's model:

Source: GitHub

This project features an XGBoost Regression model to facilitate prediction. The author has put together a well-documented project that would serve as a great reference for your own potential project.

3. US Census Bureau Data

Source: US Census Bureau

The next dataset comes from one of the largest governmental studies: the United States Census. Here, you can find datasets spanning 1970 to 2020. These sources cover a wide variety of topics including:

- City and town population totals

- Annual social and economic supplements

- State government tax dataset

This dataset would make for a great auxiliary dataset to answer questions about the impact of events like the housing crisis in 2008 or the recent pandemic.
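
To illustrate the auxiliary role, here is a rough sketch that joins population totals to event counts from another source to get per-capita rates. It assumes the population data arrives as a list of rows with the column names in the first row, which is the shape I understand the Census Bureau's data API to return as JSON. The column names (`POP`, `state`), the helper names, and every number are made up for illustration.

```python
def rows_to_dicts(payload):
    """Convert a header-first list of rows into a list of dicts."""
    header, *rows = payload
    return [dict(zip(header, row)) for row in rows]


def per_capita(event_counts, population_rows, key="state", pop_field="POP"):
    """Divide each event count by the matching population; skip unmatched keys."""
    population = {row[key]: int(row[pop_field]) for row in population_rows}
    return {
        k: count / population[k]
        for k, count in event_counts.items()
        if k in population
    }


# Made-up payload in the header-first shape; values are illustrative only.
sample_payload = [
    ["NAME", "POP", "state"],
    ["Example State A", "1000", "01"],
    ["Example State B", "2500", "02"],
]

# Hypothetical counts from your primary dataset, keyed by the same state code.
event_counts = {"01": 50, "02": 50, "99": 7}

population_rows = rows_to_dicts(sample_payload)
rates = per_capita(event_counts, population_rows)
print(rates)
```

Keys that appear in only one of the two sources are dropped here. In a real project you would probably log them instead, since mismatched keys are a common source of silent data loss.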

4. Consumer Complaint Database

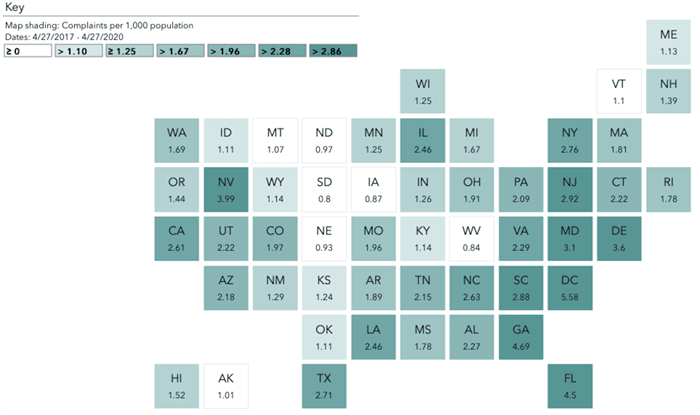

So far, we have looked at a few governmental data sources. However, the most comprehensive source of governmental data can be found on Data.gov. It hosts an enormous variety of datasets and features a search tool that lets you uncover data on almost any social or economic topic.

For demonstration, here is an interesting dataset: Consumer Complaint Database. This dataset spans the past three years. Like the data used in Galperin's project, this information could be used to map some of the economic impacts of COVID-19.

Source: Consumer Financial Protection Bureau
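
A first pass at mapping trends over time could look like the sketch below, which counts complaints per month from a CSV export. It assumes a `Date received` column holding ISO-formatted dates (`YYYY-MM-DD`). Verify both the column name and the date format against the file you actually download. The sample rows are invented.

```python
import csv
import io
from collections import Counter


def complaints_per_month(csv_text, date_column="Date received"):
    """Count rows per 'YYYY-MM' month, assuming ISO dates in `date_column`."""
    reader = csv.DictReader(io.StringIO(csv_text))
    counts = Counter()
    for row in reader:
        month = row[date_column][:7]  # 'YYYY-MM-DD' -> 'YYYY-MM'
        counts[month] += 1
    return dict(sorted(counts.items()))


# Invented rows; the real export has many more columns.
sample_csv = """Date received,Product
2020-03-02,Mortgage
2020-03-15,Credit card
2020-04-01,Mortgage
"""

monthly = complaints_per_month(sample_csv)
print(monthly)
```

For a file too large to hold in memory, the same loop works on an open file handle passed to `csv.DictReader` in place of the `io.StringIO` wrapper.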

5. Scraping News From RSS Feeds

Lastly, the most readily available data source would be data scraped from the internet. To be slightly less vague, I have outlined a project that web-scrapes new online articles every ten minutes to provide all the latest news curated into one place. This project utilizes a wide variety of relevant data engineering tools, which makes it a great project example. The author of this project is Damian Kliś, and he outlines his model architecture below:

Source: GitHub

Interestingly, Kliś implements an updated proxypool. He uses proxies in combination with rotating user agents to work around anti-scraping measures and to prevent being identified as a scraper. The author also employs a data validation step to ensure coherent data is being sent to the end-user. Finally, the API of this project was written using the Django REST framework.
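
To make the rotation and validation ideas concrete, here is a small sketch of both. It is not Kliś's implementation: the user agent strings, proxy addresses, required fields, and function names are all placeholders I chose for illustration.

```python
from itertools import cycle

# Placeholder values; a real pool would be refreshed from a proxy source.
USER_AGENTS = ["ExampleAgent/1.0", "ExampleAgent/2.0"]
PROXIES = [
    "http://proxy-a.example:8080",
    "http://proxy-b.example:8080",
    "http://proxy-c.example:8080",
]

# Assumed minimal schema for a scraped article.
REQUIRED_FIELDS = ("title", "link", "published")


def request_settings(user_agents, proxies):
    """Yield an endless stream of (headers, proxy) pairs, rotating both lists."""
    for agent, proxy in zip(cycle(user_agents), cycle(proxies)):
        yield {"User-Agent": agent}, proxy


def is_valid_article(article):
    """Accept an article only if every required field is a non-empty string."""
    return all(
        isinstance(article.get(field), str) and article[field].strip()
        for field in REQUIRED_FIELDS
    )


settings = request_settings(USER_AGENTS, PROXIES)
first_four = [next(settings) for _ in range(4)]

good = {"title": "Example", "link": "https://example.com/a", "published": "2021-01-01"}
bad = {"title": "   ", "link": "https://example.com/b"}
print(first_four)
print(is_valid_article(good), is_valid_article(bad))
```

Because the two lists have different lengths, the pairs do not repeat until six requests have gone out, which makes the request pattern slightly less regular than rotating a single list.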

The documentation of this project is superb. Everything is outlined and easy to implement. If you are interested in possibly scraping the data for your own project, I would suggest reviewing this project in depth.
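
If you do go the scraping route, the core parsing step can be quite small. The sketch below pulls items out of an RSS 2.0 document with the standard library and keeps only links it has not seen before, which is what a job polling every ten minutes would need. The feed content and the function names are invented for illustration, and the scheduling and HTTP fetching are left out.

```python
import xml.etree.ElementTree as ET


def parse_rss_items(xml_text):
    """Extract title, link, and publication date from each <item> element."""
    root = ET.fromstring(xml_text)
    items = []
    for item in root.iter("item"):
        items.append({
            "title": (item.findtext("title") or "").strip(),
            "link": (item.findtext("link") or "").strip(),
            "published": (item.findtext("pubDate") or "").strip(),
        })
    return items


def new_items(items, seen_links):
    """Return items whose link is not in `seen_links`, then record them."""
    fresh = [item for item in items if item["link"] not in seen_links]
    seen_links.update(item["link"] for item in fresh)
    return fresh


# Invented feed in RSS 2.0 shape.
sample_feed = """
<rss version="2.0">
  <channel>
    <title>Example Feed</title>
    <item>
      <title>First story</title>
      <link>https://example.com/first</link>
      <pubDate>Mon, 04 Jan 2021 10:00:00 GMT</pubDate>
    </item>
    <item>
      <title>Second story</title>
      <link>https://example.com/second</link>
      <pubDate>Mon, 04 Jan 2021 10:05:00 GMT</pubDate>
    </item>
  </channel>
</rss>
""".strip()

seen = set()
first_poll = new_items(parse_rss_items(sample_feed), seen)
second_poll = new_items(parse_rss_items(sample_feed), seen)
print(len(first_poll), len(second_poll))
```

Keeping `seen` in memory only works for a single long-running process. A scheduled job would store the seen links in a database or cache instead.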

So Where Will You Start?

When compiling your project, remember how crucial presentation is for creating an effective portfolio project. Aspects of documentation, visualization, and the user interface can make or break a project, especially when your audience is a recruiter who is potentially skimming hundreds of projects to narrow down a shortlist of candidates.

Unfortunately, a portfolio that lacks visually appealing projects could contribute to your resume being weeded out in the early stages of candidate selection.

Thanks for reading! If you want to read more about data consulting, big data, and data science, then click below.

How Much Do Data Engineers Make: Data Engineer Salaries Vs Software Engineers

Building Your First Data Pipeline: How To Build A Task In Luigi Part 1

Greylock VC and 5 Data Analytics Companies It Invests In

How To Improve Your Data-Driven Strategy

What In The World Is Dremio And Why Is It Valued At 1 Billion Dollars?
