or Universal TCP SNI/Virtual Hosting via Client-IP-Address-Correlated DNS Queries, whichever you prefer.

Introduction

I should preface this article by disclosing that I’ll be covering some fairly advanced concepts. You should have at least a working knowledge of DNS, IP networking, and web servers in order to understand what I’m talking about.

IPv4 exhaustion is a problem. The price of IPv4 addresses has gone through the roof, with no more free IP addresses available to allocate. Meanwhile, the adoption of IPv6 has been disappointing. I confess that the title of this article is a bit clickbait-y: for reasons that I will demonstrate, this solution wouldn’t work in the real world. Still, it’s a novel hypothesis that deserves some thought.

An Overview of Existing Solutions

In the cloud space, the price of dedicated IPv4 addresses has gone up significantly lately. Some low-end providers have gone as far as to sell virtual servers that share public IPs, with each server allocated only a set range of ports to reduce the overall cost. This is obviously inconvenient, if not entirely untenable, if you need access to application-specific ports, such as 80/443 for HTTP(S).

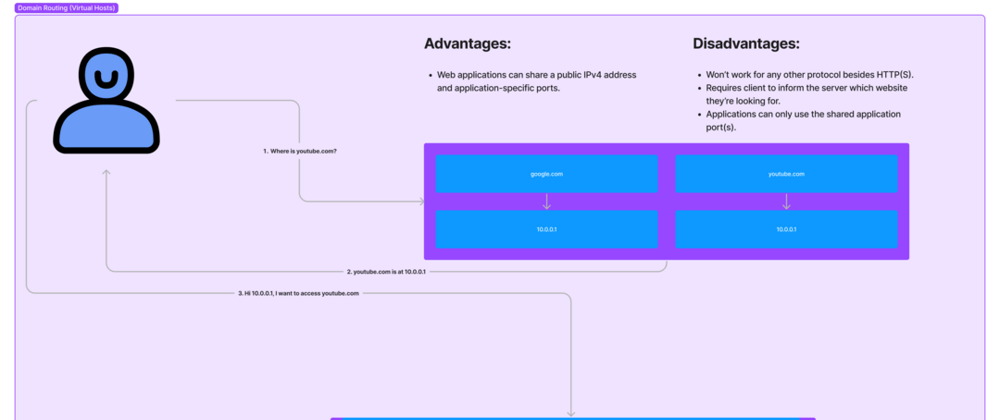

Shared web hosting providers solve this problem with virtual hosts — a feature of web servers wherein the client (in this case, a web browser) explicitly tells the server the name of the website they’re looking for by means of the HTTP Host header. The web server can be configured to direct the client to the correct web content or application based on this header.
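
As a rough illustration of what a virtual host lookup boils down to, here is a minimal sketch. The host names, directory paths, and the `selectDocumentRoot` helper are all made up for this example; real web servers do considerably more (wildcards, default hosts, IPv6 literals).

```javascript
// Hypothetical table of virtual hosts: site name -> content directory.
const virtualHosts = new Map([
  ['blog.example.com', '/var/www/blog'],
  ['shop.example.com', '/var/www/shop'],
]);

// Pick the content directory for a request based on its Host header.
// Simplification: the port is stripped naively, so IPv6 literals are not handled.
function selectDocumentRoot(headers) {
  const host = (headers.host || '').toLowerCase().split(':')[0];
  return virtualHosts.get(host) ?? null; // null means "no such virtual host"
}

console.log(selectDocumentRoot({ host: 'Blog.Example.com:8080' })); // /var/www/blog
```

The important part is that the client volunteers the name. Everything that follows is about what to do when it doesn't.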

This is a perfectly reasonable solution for HTTP (or for any protocol wrapped in TLS, which can use SNI to the same effect. Lots of abbreviations, I know. Read more here), but what about TCP in general? There are lots of applications and protocols aside from HTTP that may need access to a specific port, and without either specialized schemes like virtual hosts or dedicated IPs, how can we effectively share server space?

My Solution

This is where the scheme I’ve developed comes in. In our setup, we control not only the server running the TCP-based application, but also the authoritative DNS server for our domain (for an explanation, see this article from Cloudflare). When a client connects to a service by domain name, they must first perform a DNS lookup for the domain. Our DNS server is set up to store the domain being looked up along with the IP address of the client who performed the query. This way, when the client gets the result of the query back and actually connects to our server, the server can check which service that particular client is looking for by checking which domain name that client had just queried for.

In other words, instead of relying on the client to indicate the desired host explicitly via SNI, we infer which host/service they’re seeking by monitoring their DNS queries to our DNS server for our domain(s).
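
The bookkeeping behind this idea can be sketched in a few lines. This is not the code from my demo, just a minimal in-memory version of the concept; the function names and the 30-second expiry window are assumptions chosen for illustration.

```javascript
// Minimal correlation store: client IP -> the domain it most recently looked up.
// The expiry window is an assumed value, not something the scheme prescribes.
const ENTRY_TTL_MS = 30000;
const lastLookupByIp = new Map();

// Called by the DNS side whenever a query for one of our domains arrives.
function recordQuery(clientIp, domain, now = Date.now()) {
  lastLookupByIp.set(clientIp, { domain: domain.toLowerCase(), seenAt: now });
}

// Called by the TCP side when a client connects.
function lookupDomain(clientIp, now = Date.now()) {
  const entry = lastLookupByIp.get(clientIp);
  if (!entry) return null;
  if (now - entry.seenAt > ENTRY_TTL_MS) {
    lastLookupByIp.delete(clientIp); // stale entry: treat as "never asked"
    return null;
  }
  return entry.domain;
}
```

Expiring entries matters because an IP address that asked for a domain an hour ago tells us very little about who is connecting from that address now.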

To demonstrate, I’ve created an extremely basic JavaScript program. This script creates both the authoritative DNS server and the TCP proxy. The TCP proxy will dynamically route traffic to whichever upstream it thinks the connecting client is looking for, based on whatever it has stored as the last domain that client looked up. This information is stored in memory in JS Maps; in production, it would be more practical to use a proper datastore like Redis.
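
For a feel of the proxy half, here is a simplified sketch built only on Node’s built-in `net` module rather than the packages my demo uses. The upstream table, the port numbers, and the `lastDomainFor` callback (standing in for whatever lookup the DNS side exposes) are hypothetical.

```javascript
const net = require('net');

// Hypothetical upstream table: domain -> backend address.
const upstreams = new Map([
  ['ssh.example.com', { host: '127.0.0.1', port: 2201 }],
  ['db.example.com', { host: '127.0.0.1', port: 2202 }],
]);

// Node reports IPv4 peers on dual-stack sockets as "::ffff:a.b.c.d"; normalize that.
function normalizeIp(address) {
  return address.startsWith('::ffff:') ? address.slice(7) : address;
}

// Decide where a connection should go, or null if we cannot tell.
function resolveUpstream(remoteAddress, lastDomainFor) {
  const domain = lastDomainFor(normalizeIp(remoteAddress));
  return domain ? (upstreams.get(domain) ?? null) : null;
}

// Build (but do not start) the proxy; call .listen(port) on the result to run it.
function createProxy(lastDomainFor) {
  return net.createServer((client) => {
    const target = resolveUpstream(client.remoteAddress || '', lastDomainFor);
    if (!target) {
      client.destroy(); // no recent lookup from this IP, so there is nowhere to route it
      return;
    }
    const upstream = net.connect(target);
    client.pipe(upstream).pipe(client); // shuttle bytes in both directions
    const closeBoth = () => { client.destroy(); upstream.destroy(); };
    client.on('error', closeBoth);
    upstream.on('error', closeBoth);
  });
}
```

Note that the proxy never inspects the bytes it forwards. The routing decision is made entirely from the peer address, which is what makes the approach protocol-agnostic and also what makes it fragile.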

I would never deploy this scheme into production (see the following section), but if I did, I certainly wouldn’t use JavaScript. JS happens to be a language I’m familiar with that has existing packages for a programmable DNS server and a network proxy, and for the purposes of demonstration, it works well enough.

Reasons Why This Is Stupid

This sounds perfect, right? Well, this heading should indicate to you that there are some problems with this solution after all, as alluded to in the opening.

The Client Must Use the Domain Name

We’re all used to using domain names when typing in the name of a website, but for other applications like SSH access for servers, this is much less common. Connecting directly to the IP address skips the DNS lookup entirely, so we wouldn’t know which service the client is looking for and the results would be unreliable.

NATting exists

Ironically, another workaround for IPv4 exhaustion interferes with ours. Network Address Translation (or “NAT”) is a technology that allows local devices to share a public IP address. It’s often used in home networks, allowing any number of devices on a local network to reach the internet through a single public IPv4 address. This poses a problem: we only see their public IP. If two devices on the same local network were to try to access our services at or around the same time, we would have no way of reliably differentiating them.
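
The failure is easy to reproduce with a toy version of the lookup table. The addresses and domain names below are placeholders (203.0.113.50 is a documentation address), and the two “devices” exist only in the comments.

```javascript
// Toy lookup table keyed by the only thing we can see: the public IP address.
const lastDomainByPublicIp = new Map();

function recordLookup(publicIp, domain) {
  lastDomainByPublicIp.set(publicIp, domain);
}

recordLookup('203.0.113.50', 'ssh.example.com'); // a laptop behind the NAT wants SSH
recordLookup('203.0.113.50', 'db.example.com');  // a phone behind the same NAT wants the database

// The laptop connects now, but the table only remembers the most recent lookup.
const routedTo = lastDomainByPublicIp.get('203.0.113.50');
console.log(routedTo); // db.example.com
```

Keeping a list of recent domains per IP instead of a single value wouldn’t help, because an incoming TCP connection carries nothing that tells us which list entry it belongs to.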

DNS Caching

As it turns out, it’s extremely uncommon for any client or device to ever communicate directly with the authoritative DNS server for a domain. DNS queries are almost always done through a series of caches: your local DNS cache and the recursive resolver provided by either your ISP or a public DNS provider such as Google (8.8.8.8) or Cloudflare (1.1.1.1). This means that the authoritative DNS server almost never sees the IP address of the client who’s making the request. It sees the resolver’s address instead, and only when the resolver doesn’t already have the answer cached.

One possible solution to this problem is a specification called EDNS Client Subnet (ECS, RFC 7871; not to be confused with EDNS0, the extension mechanism that carries it, or with Snoop Lion). By way of this extension to the DNS protocol, a public DNS provider can provide the upstream authoritative DNS server with a portion of the client’s IP address in the query. The intended use for this information is geolocation-based routing: the subnet indicates to the authoritative DNS server roughly where the client is located, allowing it to direct the client to the closest of any number of servers in different physical locations. However, we could go off-label and use this subnet as the correlating factor between the desired domain and incoming traffic from the client.
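
To make that concrete, here is a sketch of decoding the data portion of an ECS option as laid out in RFC 7871: a 2-byte address family, a 1-byte source prefix length, a 1-byte scope prefix length, then the truncated address. The function name is my own, it only handles IPv4, and it assumes the DNS library has already handed you the raw option bytes.

```javascript
// Parse the data of an EDNS Client Subnet option (RFC 7871, option code 8).
// Layout: FAMILY (2 bytes), SOURCE PREFIX-LENGTH (1), SCOPE PREFIX-LENGTH (1), ADDRESS (variable).
// Only IPv4 (family 1) is handled in this sketch.
function parseEcsIpv4(optionData) {
  if (optionData.length < 4) return null;
  const family = optionData.readUInt16BE(0);
  const sourcePrefix = optionData[2];
  if (family !== 1 || sourcePrefix > 32) return null;
  // The address is truncated to just enough bytes to hold the prefix bits.
  const addressBytes = optionData.subarray(4);
  if (addressBytes.length !== Math.ceil(sourcePrefix / 8)) return null;
  const octets = [0, 0, 0, 0];
  addressBytes.forEach((byte, i) => { octets[i] = byte; });
  return `${octets.join('.')}/${sourcePrefix}`;
}

// Family 1 (IPv4), source prefix 24, scope prefix 0, address bytes 198.51.100
const sample = Buffer.from([0x00, 0x01, 24, 0, 198, 51, 100]);
console.log(parseEcsIpv4(sample)); // 198.51.100.0/24
```

The returned string would then take the place of the client IP as the key in the lookup table.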

This solution is far from perfect for two reasons:

- Not all DNS providers support ECS. Notably, Cloudflare vocally refuses to support the standard, citing privacy concerns.

- Subnets are imprecise. Since we’re only provided a subnet (e.g. 192.168.1.0/24), we can’t know exactly who the client is. If IP address “neighbors” (e.g. 192.168.1.23 and 192.168.1.24) were to make queries proximally to each other, we would have no reliable way of knowing who is who, and where to send each client. While the odds of this are fairly low (/24 subnets are most common and only contain 256 IP addresses), it still stands out as a glaring imperfection in this system.
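
The imprecision in the second point comes down to simple arithmetic, sketched below. The `subnetKey24` helper is hypothetical and simply zeroes the last octet, which is what a /24-based lookup key amounts to.

```javascript
// Collapse an IPv4 address to the key a /24-based lookup table would use.
function subnetKey24(ipv4) {
  const octets = ipv4.split('.');
  return `${octets[0]}.${octets[1]}.${octets[2]}.0/24`;
}

// A /24 leaves 32 - 24 = 8 host bits, so 2^8 addresses share each key.
const addressesPerSubnet = 2 ** (32 - 24);
console.log(addressesPerSubnet); // 256

// The two "neighbors" from the example above become indistinguishable.
console.log(subnetKey24('192.168.1.23') === subnetKey24('192.168.1.24')); // true
```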

Conclusion

The real conclusion here should be to accelerate adoption of IPv6. It would eliminate IPv4 exhaustion once and for all while having the added benefit of making the IPv4 squatters’ precious /8 subnets worthless (seriously, why does the US DoD need 234,881,024 IPs?). It would also incidentally render this experiment worthless, but that is a loss I’d be willing to accept, given the net benefits.

As an experiment, this was extremely interesting and opens the door to many branching lines of thought. How could this apply to reverse DNS? What about other similarly structured systems? I hope I’ve stimulated your imagination and given you the impetus to pursue and research your own ideas.

The complete source code of my demo is available here. Please give it a look over, and if you want to go the extra mile, replace some of the values and run it yourself.
