TLDR:

We had a horrible outage where a RabbitMQ node ran out of memory because I forgot to unbind a queue (one of 50 or so), and we lost a whole bunch of in-producer-memory state. This made our whole infrastructure completely probabilistic, and it took us 2 hours of blood, sweat and tears to recover the lost data. We should've just used postgres as a queue.

In almost any system being built today we have users that perform actions to mutate state. This is life, to create side effects, yes, mutation is life.

I will illustrate it like this:

    [ user(U) ]<------+
         |            |
         | action(A)  |
         v            |
    [ receiver (R)]   ^ world
         |            | change
         |            |
         v            |
    [ state (S) ] ----+

- receiver: this is usually a backend endpoint
- user: in our case, a chef
- state: in our case, creating an order

You can move those pieces around in any way. For example, the action can be a function of the state mutation instead of the other way around, or the receiver of the action can be the user itself, so the user directly mutates the state, etc. This, however, is what technical implementation means: it looks the same from the outside, but has very different emergent properties. This is what this post is about: emergent behavior and chaos.

Let's look at a possible technical implementation of 'user' creating an 'order':

- user sends action to an endpoint /create-order
- backend code pushes to 'order.new'
- a) consumer of 'order.new'
    - order is picked up, transformed a bit and written to a database
    - another message is sent to 'order.created' queue
- a) consumer of 'order.created'
    - create audit log of who/when/what
- b) consumer of 'order.created'
    - send email to the interested parties
- c) consumer of 'order.created'
    - builds an email and sends it to 'email.created'
    - logs the email for archiving purposes
- d) consumer of 'order.created'
    - create chat message with the order
    - push to 'message.created'
- e) consumer of 'order.created'
    - extract features for DS
    - copy to salesforce etc
- a) consumer of 'message.created'
    - send push notification
- a) consumer of 'email.created'
    - sends the email and then pushes to a queue 'email.sent'
- a) consumer of 'email.sent'
    - marks the email as sent

This is pretty much what we have now (maybe a bit more complicated, but not much). You can trivially add more reactive components listening to specific topics, and you can fan out and so on, just with rabbitmq.

It is super flexible and extensible, retriable etc.

Of course it was not designed like that. It grew over the years, with bits and pieces added here and there. It is very easy to complicate it without noticing.

Everything was really good until I did a refactor and stopped consuming one of the topics that was a clone of 'order.created'. I forgot to unbind the queue, so it kept getting messages but nobody was draining it, and the RMQ node ran out of memory. It was only 1 node out of 3, but because we still use elixir for that process, we were relying on elixir's in-memory stability to keep a buffer of messages to resend if needed, which of course I killed when I restarted the cluster because I wasn't sure what the fuck was going on.
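To make the failure mode concrete, here is a toy model of it in Python. It is not the RabbitMQ API, just a minimal sketch assuming a fanout-style exchange that copies every message into every bound queue. The class `ToyFanoutExchange` and the queue names `audit` and `order.created.clone` are made up for illustration.

```python
from collections import deque


class ToyFanoutExchange:
    """Toy stand-in for a fanout exchange: every bound queue gets a copy of each message."""

    def __init__(self):
        self.queues = {}  # queue name -> deque of pending messages

    def bind(self, name):
        self.queues.setdefault(name, deque())

    def unbind(self, name):
        self.queues.pop(name, None)

    def publish(self, message):
        # The exchange does not care whether anybody is consuming a queue.
        for pending in self.queues.values():
            pending.append(message)

    def consume_all(self, name):
        """Drain one queue, the way a live consumer would. Returns how many messages were drained."""
        pending = self.queues[name]
        drained = len(pending)
        pending.clear()
        return drained


def simulate(num_orders, forgot_unbind):
    """Publish num_orders messages and return the backlog left in each bound queue."""
    exchange = ToyFanoutExchange()
    exchange.bind("audit")                # hypothetical queue with a live consumer
    exchange.bind("order.created.clone")  # hypothetical queue whose consumer was refactored away
    if not forgot_unbind:
        exchange.unbind("order.created.clone")
    for order_id in range(num_orders):
        exchange.publish({"order_id": order_id})
        exchange.consume_all("audit")
        # Nothing ever drains 'order.created.clone', so it only grows.
    return {name: len(pending) for name, pending in exchange.queues.items()}
```

With `forgot_unbind=True` the backlog of the orphaned queue grows linearly with the number of published orders, while the consumed queue stays empty. In the toy model that is just a growing deque; on a real broker it is memory on the node.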

That meant that 30% of all requests went to the abyss, the true abyss.

We had to stay until 5am to glue bits and pieces together and to connect the state.

It caused the worst outage I have ever been firefighting, and I was once involved in solving an outage where we were selling hotels for 1/100th of the price, losing millions of euros.

Now let's discuss another implementation of the same thing:

'user' creating an 'order':

- user sends action to an endpoint /create-order
- backend code:

        begin
        insert the order
        insert the message (push_notification_sent_at = null)
        insert the email (sent_at = null, delivered_at = null)
        insert log
        commit

- a) cronjob that runs every second:

        select (for update) messages where push_notification_sent_at is null
        send the push notification
        update the message

- b) cronjob that runs every second:

        select (for update) email where sent_at is null
        send the email
        update the email table
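The steps above can be sketched roughly like this. To keep it runnable without a server, the sketch uses Python's built-in `sqlite3` as a stand-in for postgres, so there is no `select ... for update` in it; with postgres and several workers, the polling query would take row locks (for example `SELECT ... FOR UPDATE SKIP LOCKED`). The table layout, the function names and the `send` callback are all assumptions, not our actual schema.

```python
import sqlite3
from datetime import datetime, timezone

# Hypothetical schema: one row per order, plus "to do" rows for the side effects.
SCHEMA = """
CREATE TABLE orders    (id INTEGER PRIMARY KEY, chef TEXT NOT NULL, body TEXT NOT NULL);
CREATE TABLE messages  (id INTEGER PRIMARY KEY, order_id INTEGER NOT NULL,
                        push_notification_sent_at TEXT);
CREATE TABLE emails    (id INTEGER PRIMARY KEY, order_id INTEGER NOT NULL,
                        sent_at TEXT, delivered_at TEXT);
CREATE TABLE audit_log (id INTEGER PRIMARY KEY, order_id INTEGER NOT NULL, what TEXT NOT NULL);
"""


def create_order(conn, chef, body):
    """Insert the order and all of its pending side effects in one transaction."""
    with conn:  # commits on success, rolls back if anything raises
        cur = conn.execute("INSERT INTO orders (chef, body) VALUES (?, ?)", (chef, body))
        order_id = cur.lastrowid
        conn.execute("INSERT INTO messages (order_id) VALUES (?)", (order_id,))
        conn.execute("INSERT INTO emails (order_id) VALUES (?)", (order_id,))
        conn.execute(
            "INSERT INTO audit_log (order_id, what) VALUES (?, ?)",
            (order_id, "order created"),
        )
    return order_id


def send_pending_emails(conn, send):
    """One cronjob tick: send every email that has no sent_at yet. Returns the number sent."""
    rows = conn.execute(
        "SELECT id, order_id FROM emails WHERE sent_at IS NULL ORDER BY id"
    ).fetchall()
    for email_id, order_id in rows:
        send(order_id)  # if this raises, the row stays pending and is retried next tick
        with conn:      # mark each email as sent in its own small transaction
            conn.execute(
                "UPDATE emails SET sent_at = ? WHERE id = ?",
                (datetime.now(timezone.utc).isoformat(), email_id),
            )
    return len(rows)
```

The pending work is just rows with a null timestamp, so a restart loses nothing: the next tick picks up whatever is still unsent. The trade-off in this sketch is at-least-once delivery, since a crash between `send` and the `UPDATE` means that one email goes out again.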

Use the database as a queue. It works totally fine, and we can scale postgres vertically forever. Why forever, you ask? Because there are ~30000 restaurants in London, we can geo-shard it, and there is a physical upper bound on the amount of data in a region.
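A back-of-the-envelope version of that upper bound, as a sketch. Only the ~30000 restaurants figure comes from above; the orders per day and the bytes per order are placeholder numbers picked purely for illustration, so plug in your own.

```python
def yearly_order_bytes(restaurants, orders_per_restaurant_per_day, bytes_per_order):
    """Upper bound on order data written per year in one region, in bytes."""
    return restaurants * orders_per_restaurant_per_day * bytes_per_order * 365


RESTAURANTS_IN_LONDON = 30_000     # from the post
ORDERS_PER_RESTAURANT_PER_DAY = 5  # hypothetical placeholder
BYTES_PER_ORDER = 4_096            # hypothetical placeholder: order + message + email + log rows

total = yearly_order_bytes(
    RESTAURANTS_IN_LONDON, ORDERS_PER_RESTAURANT_PER_DAY, BYTES_PER_ORDER
)
print(f"{total / 1e9:.1f} GB per year if every restaurant in the region were a customer")
```

The point is not the exact number but that every factor is bounded by the physical world, so the product is too.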

Fucking queues. It is so easy to overuse them without knowing it. It creeps up on you, and in the end you have infrastructure spaghetti.

Anyway, we are migrating from queues to transactions, and fuck it, I can't keep it in my head. Ultimately it all ends up in postgres anyway, just with extra steps.

Fuck.

The moral of the story is:

If shit ends up in postgres anyway, and you can afford to write to it directly (which is not always the case), just write to it.

Do boring technology, the way we wrote php3 shit 20 years ago: get the state and write it in the database. Even though mysql didn't have transactions (it helpfully accepted BEGIN/COMMIT though, haha), it was ok.

PS:

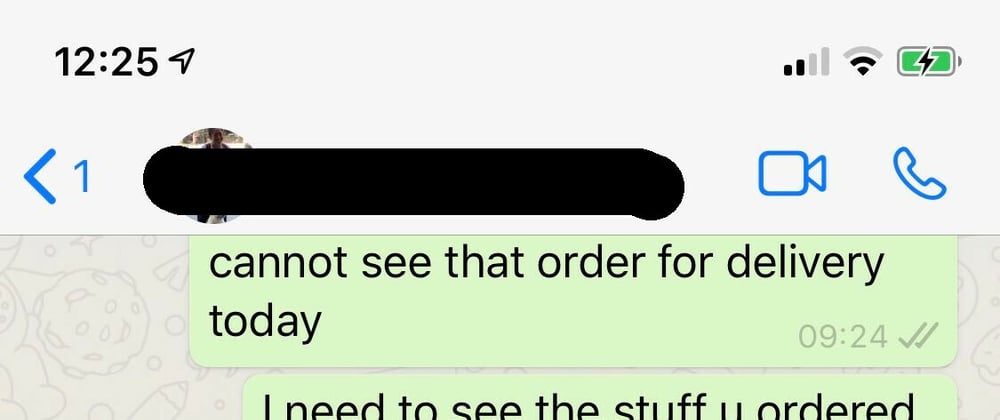

There were 2 missed deliveries, but CS handled them like a king, sending an uber to pick up the things from the supplier and bring them to the chef, etc. It is much easier to firefight when you know CustomerSuccess has your back.

PPS: I think outages are the best, everyone groups up and we solve the problem, some panic, some adrenalin, some pressure, but in the end the whole company becomes more of a team.
