Originally published on the Raygun Blog

If you've been anywhere near the IT industry over the last five years, you've very likely heard of the container platform Docker. Docker and containers are a new way of running software that is revolutionizing software development and delivery.

What is Docker?

Docker is a new technology that allows development teams to build, manage, and secure apps anywhere.

It's not possible to explain what Docker is without explaining what containers are, so let's look at a quick explanation of containers and how they work.

A container is a special type of process that is isolated from other processes. Containers are assigned resources that no other process can access, and they cannot access any resources not explicitly assigned to them.

So what's the big deal?

Processes that are not "containerized" can ask the operating system for access to any file on disk or any network socket.

Until containers became widely available, there was no reliable, guaranteed way to isolate a process to its own set of resources. A properly functioning container has absolutely no way to reach outside its resource "sandbox" to touch resources that were not explicitly assigned to it.

For example, two containers running on the same computer might as well be on two completely different computers, miles away from each other. They are entirely and effectively isolated from each other.

This isolation has several advantages:

- Two containerized processes can run side-by-side on the same computer, but they can't interfere with each other.

- They can't access each other's data unless explicitly configured to do so.

- Two different applications can run containers on the same hardware with confidence that their processes and data are secure.

- Shared hardware means less hardware. Gone are the days when a company needed thousands of servers to run applications. That hardware can be shared between different business units or entirely different enterprise clients. The result is massive new economies of scale for private and public data centers alike.

Docker explained

Now that you know what containers are, let's get to Docker.

Docker is both a company and a product. Docker Inc. makes Docker, the container toolkit.

Containers aren't a singular technology. They are a collection of technologies that have been developed over more than ten years. The underlying Linux features (such as namespaces and cgroups) have been available for quite some time, since about 2008.
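To make the namespace idea a little more concrete, here is a minimal Python sketch. It assumes a Linux host with `/proc` mounted and simply lists the namespaces the current process belongs to. The function name `current_namespaces` is made up for this illustration, and on other operating systems it just returns an empty dictionary.

```python
import os


def current_namespaces(pid="self"):
    """Return {namespace_name: identifier} for a process, or {} if unavailable.

    Assumes Linux: each entry under /proc/<pid>/ns is a symbolic link whose
    target identifies one namespace the process lives in.
    """
    ns_dir = f"/proc/{pid}/ns"
    namespaces = {}
    try:
        entries = os.listdir(ns_dir)
    except OSError:
        return namespaces  # not Linux, /proc not mounted, or no such process
    for name in sorted(entries):
        try:
            namespaces[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # skip entries we are not allowed to read
    return namespaces


if __name__ == "__main__":
    for name, ident in current_namespaces().items():
        print(f"{name:12s} {ident}")
```

Two processes that print the same identifier for a given entry share that namespace. A containerized process would typically show different identifiers from a process running directly on the host.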

Why, then, have containers not been used all that time?

The answer is that very few people knew how to make them. Only the most powerful Level-20 Linux Systems Developer Warrior Mage understood all the various technologies needed to create a container.

In those early days, understanding these technologies, let alone using them to create containers, was a complex chore. The stakes were high: getting it wrong turns the benefits of containers into liabilities.

If containers don't contain, they can become the root cause of the latest Hacker News security breach headline.

The masses needed consistent, reliable container creation before containers could go mainstream.

Enter Docker Inc.

The primary components of Docker are:

- The Docker command-line interface (CLI)

- The Docker Engine

Docker made it easier to create containers by "wrapping" the complexity of the underlying OS syscalls needed to make them work. Docker's popularity snowballed, to put it mildly.

In March 2013, dotCloud, the creator of Docker, open-sourced it, and later that year the company renamed itself Docker Inc. In just a few years, containers have gone from relative obscurity to transforming an industry. Docker's impact rivals the introduction of virtual machines in the early 2000s.

How popular is Docker?

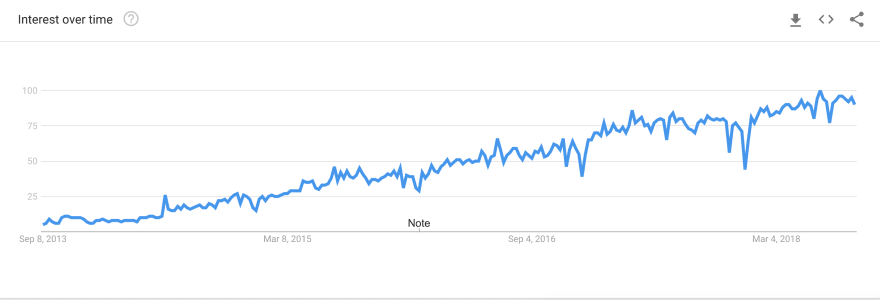

Here's a Google Trends graph of searches for the term "docker" over the last five years:

You can see that Google searches for Docker have grown steadily since its introduction in 2013. Docker has established itself as the de facto standard for containerization. There are a few competing products, such as CoreOS/rkt, but they are reasonably far behind Docker in popularity and market awareness.

Docker's popularity was buoyed recently when Microsoft announced support for it in both Windows 10 and Windows Server 2016.

Why is Docker so popular and why the rise of containers?

Docker is popular because of the possibilities it opens for software delivery and deployment. Many common problems and inefficiencies are resolved with containers.

The six main reasons for Docker's popularity are as follows.

1. Ease of use

A large part of Docker's popularity is how easy it is to use. Docker can be learned quickly, mainly due to the many resources available to learn how to create and manage containers. Docker is open-source, so all you need to get started is a computer with an operating system that supports VirtualBox or Docker for Mac/Windows, or that supports containers natively, such as Linux.

2. Faster scaling of systems

Containers allow much more work to be done by far less computing hardware. In the early days of the Internet, the only way to scale a website was to buy or lease more servers. The cost of popularity was bound, linearly, to the cost of scaling up. Popular sites became victims of their own success, shelling out tens of thousands of dollars for new hardware. Containers allow data center operators to cram far more workloads into less hardware. Shared hardware means lower costs. Operators can bank those profits or pass the savings along to their customers.
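As a rough illustration of that consolidation, the sketch below packs a set of workloads onto shared hosts using a simple first-fit-decreasing heuristic. The workload sizes and the 16-core host capacity are invented for the example, and this is not how any particular data center or orchestrator actually schedules containers.

```python
def pack_first_fit_decreasing(workloads, host_capacity):
    """Greedily place workload sizes onto hosts, largest workload first.

    Returns a list of hosts, where each host is a list of workload sizes.
    This is a heuristic: it finds a good packing, not necessarily the best.
    """
    hosts = []
    for size in sorted(workloads, reverse=True):
        if size > host_capacity:
            raise ValueError(f"workload {size} exceeds host capacity {host_capacity}")
        for host in hosts:
            if sum(host) + size <= host_capacity:
                host.append(size)  # fits on an existing host
                break
        else:
            hosts.append([size])  # no existing host had room; add a new one
    return hosts


if __name__ == "__main__":
    # Hypothetical CPU-core demands for ten services, on hypothetical 16-core hosts.
    demands = [2, 4, 1, 8, 3, 2, 6, 1, 4, 1]
    packed = pack_first_fit_decreasing(demands, host_capacity=16)
    print(f"dedicated servers: {len(demands)}, shared hosts: {len(packed)}")
    # prints "dedicated servers: 10, shared hosts: 2"
```

With one server per service, these ten workloads would need ten machines. Sharing hardware brings that down to two in this made-up case.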

3. Better software delivery

Software delivery using containers can also be more efficient. Containers are portable. They are also entirely self-contained. Containers include an isolated disk volume. That volume goes with the container as it is developed and deployed to various environments. The software dependencies (libraries, runtimes, etc.) ship with the container. If a container works on your machine, it will run the same way in Development, Staging, and Production environments. Containers can eliminate the configuration variance problems common when deploying binaries or raw code.

4. Flexibility

Operating containerized applications is more flexible and resilient than operating non-containerized ones. Container orchestrators handle the running and monitoring of hundreds or thousands of containers.

Container orchestrators are very powerful tools for managing large deployments and complex systems. Perhaps the only thing more popular than Docker right now is Kubernetes, currently the most popular container orchestrator.

5. Software-defined networking

Docker supports software-defined networking. The Docker CLI and Engine allow operators to define isolated networks for containers, without having to touch a single router. Developers and operators can design systems with complex network topologies and define the networks in configuration files. This is a security benefit, as well. An application's containers can run in an isolated virtual network, with tightly-controlled ingress and egress paths.
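Here is a small Python sketch of what defining a network from the command line could look like. It only builds and prints Docker CLI commands rather than running them, so it works without Docker installed. The network name `app-net` and the container names `web` and `cache` are placeholders chosen for this example.

```python
import shlex


def network_create_cmd(network):
    """Command that creates a user-defined Docker network."""
    return ["docker", "network", "create", network]


def run_on_network_cmd(name, image, network):
    """Command that starts a detached container attached to the given network."""
    return ["docker", "run", "--detach", "--name", name, "--network", network, image]


def plan(network, services):
    """Return the commands needed to create a network and start services on it.

    `services` maps a container name to the image it should run.
    """
    commands = [network_create_cmd(network)]
    for name, image in services.items():
        commands.append(run_on_network_cmd(name, image, network))
    return commands


if __name__ == "__main__":
    # Hypothetical application: a web server and a cache on one isolated network.
    for cmd in plan("app-net", {"web": "nginx", "cache": "redis"}):
        print(shlex.join(cmd))
```

Run as a script, this prints one `docker network create` command followed by two `docker run` commands. Containers started this way can reach each other over `app-net`, while containers that are not attached to that network are kept off it by default.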

6. The rise of microservices architecture

The rise of microservices has also contributed to the popularity of Docker. Microservices are simple functions, usually accessed via HTTP/HTTPS, that do one thing — and do it well.

Software systems typically start as "monoliths," in which a single binary supports many different system functions. As they grow, monoliths can become difficult to maintain and deploy. Microservices break a system down into simpler functions that can be deployed independently. Containers are terrific hosts for microservices. They are self-contained, easily deployed, and efficient.
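For a sense of scale, a microservice can be as small as the Python sketch below, which uses only the standard library. The service, its `/upper/` endpoint, and the names `UppercaseHandler` and `make_server` are all invented for this illustration. A production service would add things like input validation, logging, and TLS.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


class UppercaseHandler(BaseHTTPRequestHandler):
    """Hypothetical microservice: upper-cases whatever follows /upper/ in the path."""

    def do_GET(self):
        prefix = "/upper/"
        if not self.path.startswith(prefix):
            self.send_error(404, "unknown endpoint")
            return
        text = self.path[len(prefix):]
        body = json.dumps({"result": text.upper()}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example quiet; a real service would log requests


def make_server(port=0):
    """Bind to localhost only; port 0 asks the OS for any free port."""
    return HTTPServer(("127.0.0.1", port), UppercaseHandler)


if __name__ == "__main__":
    server = make_server(8000)
    print(f"try http://127.0.0.1:{server.server_port}/upper/hello")
    server.serve_forever()
```

A service like this does one thing, has no dependencies beyond its runtime, and listens on a single port, which is part of why it is straightforward to package into a container and deploy on its own.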

Should you use Docker?

A question like this is almost always best answered with caution and circumspection. No technology is a panacea. Each technology has drawbacks, tradeoffs, and caveats.

Having said all that...

Yes, use Docker.

I'm making some assumptions with this answer:

- You develop distributed software with the intent of squeezing every last cycle of processing power and byte of RAM out of your infrastructure.

- You're designing your software for high loads and performance, even if you don't yet have high loads or need the best performance.

- You want to achieve high deployment velocity and reap its benefits. If you aspire to DevOps practices in software delivery, containers are a key tool in that toolbox.

- You either want the benefits of containers, need them, or both. If you already run high-load, distributed, monolithic or microservice applications, you need containers. If you aspire to someday run these high-load, high-performance applications, now is the time to get started with containers.

When you should not use Docker or containers

Developing, deploying, and operating software in containers is very different from traditional development and delivery. It is not without trials and tribulations.

There are tradeoffs to be considered:

If your team needs significant training

Your team's existing skillset is a significant consideration. If you lack the time or resources to take up containers slowly or to bring on a consulting partner to get you ramped up, you should wait. Container development and operations are not something you want to "figure out as you go," unless you move very slowly and deliberately.

When you have a high-risk profile

Your risk profile is another major consideration. If you are in a regulated industry or running revenue-generating workloads, be cautious with containers. Operating containers at scale with container orchestrators is very different from operating non-containerized systems. The benefits of containers come with additional complexity in the systems that deliver, operate, and monitor them.

If you can't hire the talent

For all its popularity, Docker is a very new way of developing and delivering software. The ecosystem is constantly changing, and the population of engineers who are experts in it is still relatively small. During this early stage, many companies are opting to work with Enterprise ISV partners to get started with Docker and its related systems. If this is not an option for you, you'll want to balance the cost of taking up Docker on your own against the potential benefits.

Consider your system's complexity

Finally, consider your overall requirements. Are your systems complex enough to justify the additional burden of taking on containerization? If your business is, for example, centered around creating static websites, you may just not need containers.

In conclusion, Docker is popular because it has revolutionized development

Docker and the containers it makes possible have revolutionized the software industry, and in five short years their popularity as a tool and platform has skyrocketed.

The main reason is that containers create vast economies of scale. Systems that used to require expensive, dedicated hardware resources can now share hardware with other systems. Another is that containers are self-contained and portable. If a container works on one host, it will work just as well on any other, as long as that host provides a compatible runtime.

It's important to remember that Docker isn't a panacea (no technology is). There are tradeoffs to consider when planning a technology strategy. Moving to containers is not a trivial undertaking.

Consider the tradeoffs before committing to a Docker-based strategy. A careful accounting of the benefits and costs of containerization may well lead you to adopt Docker. If the numbers add up, Docker and containers have the potential to open up new opportunities for your enterprise.

Wondering how you can monitor microservices for performance problems? Raygun APM, Real User Monitoring and Crash Reporting are designed with modern development practices in mind. See how the Raygun platform can help keep your containers performant.

Top comments (1)

Hello Dave,

thank you for your insights.

To complete the picture a bit, one might add:

The idea to compartmentalize the OS to efficiently use resources is a bit older

So the big selling point of Docker was that it allowed to solve two problems at once; separating the Kernel from the Userland allowed

Each container comes with its own userland

Nice side-effect:

Additionally:

From what was said above, I believe it is popular because it made efficient deployment possible which is a different POV.

When using a single machine as a single deployment target with very few processes running. Say your typical OTS web application with a DB and low to medium traffic where it is even OK to run everything on a single box.