Introduction

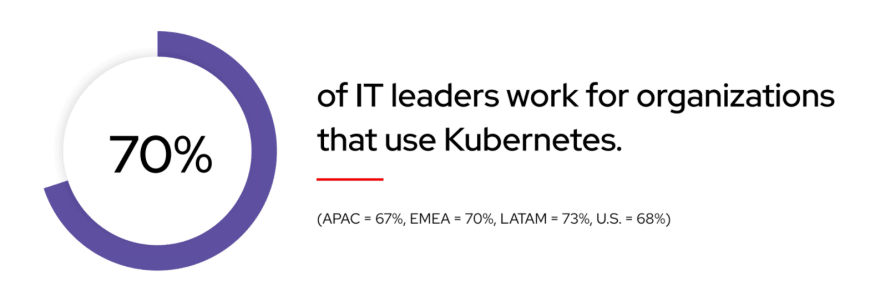

Kubernetes, which was first released on June 7th, 2014, has quickly established itself as the de facto industry standard for orchestrating containers. According to Red Hat's State of Enterprise Open Source 2022 report, 70% of the 1,296 IT leaders polled said their organizations use Kubernetes.

This figure is expected to rise further, as nearly one-third of the polled IT leaders said they planned to significantly increase their use of containers over the next 12 months. The tool itself has also been downloaded more than a million times so far.

But what exactly is Kubernetes? How does it work? And why should you care about it? In this article, I'll try to answer those questions—and more!

What is Kubernetes?

Kubernetes is an open source container management system. It was originally developed by Google engineers to help them manage large-scale containerized applications, and it has since become the most popular solution for running containers in production.

Kubernetes is a platform for running containerized applications. It's designed to make it easier to deploy, scale, and manage those applications—which are usually made up of multiple containers that work together to provide features like web serving or data processing.

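Kubernetes can be thought of as a distributed system for managing containerized applications at large scale. A Kubernetes cluster is made up of one or more control plane nodes (traditionally called master nodes) and a set of worker nodes that actually run the containers. Clients such as the kubectl command-line tool talk to the cluster by sending requests to the Kubernetes API server on the control plane, using a REST API over HTTPS. Internally, some components also use gRPC, for example to communicate with the container runtime and with etcd.

The master node(s) manage and schedule the containers that make up each application. Through the API, the cluster also exposes information for monitoring, logging, and diagnostics, as well as the configuration for applications running on the cluster.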
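Clients interact with a cluster through the Kubernetes API server's REST API. As a minimal sketch, the Python below only builds (and never sends) the HTTP requests a client would use to list Pods and Deployments in the `default` namespace. The path layout (`/api/v1/...` for the core group, `/apis/<group>/<version>/...` for named groups such as `apps`) follows Kubernetes API conventions; the server address `https://127.0.0.1:6443` and the bearer token are placeholders. In practice you would use `kubectl` or an official client library, which also handle TLS and authentication.

```python
from urllib.request import Request

# Placeholder values: substitute your cluster's API server address and a real token.
API_SERVER = "https://127.0.0.1:6443"
TOKEN = "example-token"


def resource_path(group: str, version: str, namespace: str, plural: str) -> str:
    """Build the REST path for a namespaced collection of resources.

    The core group (written as "" here) lives under /api/<version>;
    every other group lives under /apis/<group>/<version>.
    """
    prefix = f"/api/{version}" if group == "" else f"/apis/{group}/{version}"
    return f"{prefix}/namespaces/{namespace}/{plural}"


def list_request(group: str, version: str, namespace: str, plural: str) -> Request:
    """Return an unsent GET request that would list the given resources."""
    url = API_SERVER + resource_path(group, version, namespace, plural)
    return Request(
        url,
        headers={"Authorization": f"Bearer {TOKEN}", "Accept": "application/json"},
        method="GET",
    )


pods = list_request("", "v1", "default", "pods")
deployments = list_request("apps", "v1", "default", "deployments")
print(pods.get_method(), pods.full_url)
print(deployments.get_method(), deployments.full_url)
```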

Is Kubernetes built on top of other open source projects?

Yes. Kubernetes relies on a number of other free and open source software packages. It runs containers through a container runtime: early versions used Docker directly, while current versions work with any runtime that implements the Container Runtime Interface (CRI), such as containerd or CRI-O (built-in support for Docker Engine, known as dockershim, was removed in v1.24). It also uses other open source projects for some of its components, such as etcd for distributed key-value storage. Kubernetes itself, including its core and control plane, is written in the Go programming language. Kubernetes is an open source project in its own right and has been used as a building block for many other open source projects.

Kubernetes was originally developed by Google, which donated it to the Cloud Native Computing Foundation (CNCF) when the foundation was formed in 2015. Red Hat has been one of the largest contributors since the project's early days, alongside many other companies. Today, Kubernetes is maintained by a community of developers and users around the world.

How does Kubernetes work?

Kubernetes is used for automating the deployment, scaling, and management of containerized applications. It supports multiple platforms and infrastructure providers.

Kubernetes provides two primary things:

- A way to manage your container clusters.

- A way to connect those workloads so that data can get from one place to another (for example, from an application to its database), using built-in networking and service discovery.

Kubernetes also provides many features that make it easier to manage your cluster of containers. An optional web-based dashboard lets you view and manage cluster resources from a single place. Kubernetes also has automatic recovery and self-healing capabilities: if a container crashes, it is restarted automatically, and if one of your nodes fails (for example, because of a hardware outage), the Pods managed by controllers such as Deployments are recreated on healthy nodes.
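Self-healing in Kubernetes comes from controllers that repeatedly compare the desired state (for example, "three replicas") with the observed state and act on the difference. The Python below is a simplified toy of that reconcile idea, not Kubernetes' actual controller code; the `Pod` type, the `reconcile` function, and the `web-N` naming scheme are all hypothetical.

```python
from dataclasses import dataclass
from itertools import count

_ids = count(1)  # hypothetical source of unique pod-name suffixes


@dataclass
class Pod:
    name: str
    healthy: bool = True


def reconcile(desired_replicas: int, pods: list[Pod]) -> list[Pod]:
    """One pass of a toy reconcile loop.

    1. Drop pods that are no longer healthy (e.g. their node failed).
    2. Create replacements until the desired count is reached.
    3. Trim surplus pods if there are too many.
    """
    running = [p for p in pods if p.healthy]
    while len(running) < desired_replicas:
        running.append(Pod(name=f"web-{next(_ids)}"))
    return running[:desired_replicas]


pods = reconcile(3, [])
print([p.name for p in pods])  # ['web-1', 'web-2', 'web-3']
pods[1].healthy = False        # simulate a failure of web-2
pods = reconcile(3, pods)
print([p.name for p in pods])  # ['web-1', 'web-3', 'web-4']
```

Real controllers run this kind of comparison continuously, driven by changes they observe through the API server, rather than as a single function call.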

Not only that, it is also highly configurable. You can use it to deploy any type of application, from web servers to databases. It has support for many different cloud environments, including Google Cloud Platform (GCP), Amazon Web Services (AWS), and Microsoft Azure.

What and why containers?

Before we dive deeper into Kubernetes, let's talk about containers first.

Containers are a way of packaging and running applications in a portable and isolated environment. They allow applications to be run in a consistent and predictable manner, regardless of the underlying infrastructure. This makes it easier to develop, test, and deploy applications, as well as to run multiple applications on the same host without them interfering with each other.

Containers have become popular recently because they provide a number of benefits over traditional deployment methods. For example, they are:

- Lightweight: A container typically runs a single application process and includes only the libraries, configuration files, and other dependencies it needs to run. Because containers share the host's operating system kernel, there is no guest operating system to boot, so containers can start in seconds or less, whereas a virtual machine (VM) must first boot a full operating system, which can take much longer depending on its size.

- Portable: Containers can be moved from one environment to another with minimal effort because the container image bundles the application together with its libraries and dependencies. Containers share the host's kernel but carry their own user-space software stack, so an application behaves the same way on any server with a compatible kernel and container runtime, and you don't have to worry about incompatibilities between your app's software stack and whatever is installed on the server you choose.

- Isolated: To keep containers on the same host separate from each other, the container runtime uses Linux kernel features such as namespaces, which give each container its own view of processes, the filesystem, and the network, and cgroups, which limit how much CPU and memory each container can use. Because each container has its own network namespace, two containers on the same host can both listen on the same port (for example, port 80) without conflicting, and by default a process in one container can't see or access the files and processes of another.

The level of isolation provided by containers can vary depending on the container runtime and the container's configuration. Standard runtimes such as Docker or containerd rely on kernel namespaces and cgroups, while sandboxed runtimes such as gVisor or Kata Containers add a stronger isolation layer. In general, though, containers are designed to provide enough isolation that they can run independently and reliably. This makes it easier to set up and manage complex infrastructures with many different applications all running on the same host. And because containers share the host's kernel instead of each running a full operating system, you can run many of them on the same hardware with minimal overhead.
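The port-conflict point can be illustrated with a toy model in which each network namespace keeps its own table of bound ports. This is a conceptual Python sketch, not how the Linux kernel implements namespaces; the `NetworkNamespace` class and the process names are hypothetical.

```python
class NetworkNamespace:
    """Toy stand-in for a Linux network namespace: its own set of bound ports."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.bound: dict[int, str] = {}

    def bind(self, port: int, process: str) -> None:
        # Within ONE namespace, a port can only be bound once.
        if port in self.bound:
            raise OSError(f"{self.name}: port {port} already used by {self.bound[port]}")
        self.bound[port] = process


# Two containers, two namespaces: both can listen on port 80.
web_a = NetworkNamespace("container-a")
web_b = NetworkNamespace("container-b")
web_a.bind(80, "nginx")
web_b.bind(80, "nginx")

# Two processes sharing one namespace (like two programs on a plain host) conflict.
host = NetworkNamespace("host")
host.bind(80, "nginx")
try:
    host.bind(80, "apache")
except OSError as err:
    print(err)  # host: port 80 already used by nginx
```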

How do containers compare to virtual machines?

Containers are more lightweight than virtual machines (VMs) because they don't need to run an entire guest operating system. They are also more portable: a container is typically built from a Dockerfile into an image, which can be pushed to a registry and then pulled and run on any compatible host.

Containers are also more resource-efficient than virtual machines. Because they share the host's kernel, many more containers than VMs can be packed onto the same hardware, and container images are usually much smaller than VM images, so less data has to be sent over the network when deploying them. This is especially useful for microservices, where an application is split into many small services that each need only a modest amount of resources.

Containers are also easier to scale than virtual machines because they start quickly. To handle more load, you can run additional copies (replicas) of a container, and Kubernetes controllers such as ReplicaSets (which replaced the older ReplicationController) keep the desired number of replicas running. You can also adjust how much CPU and memory each container requests or is limited to.
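Kubernetes expresses CPU and memory requests and limits as quantities such as `500m` (half a CPU core) or `128Mi` (128 mebibytes). The Python sketch below converts a few common forms into plain numbers to show what the suffixes mean. It handles only a subset of the quantity format (no exponent notation such as `1e3`, and only the suffixes listed), so treat it as illustrative rather than a complete parser.

```python
from decimal import Decimal

# Binary (power-of-two) and decimal (power-of-ten) suffixes used in quantities.
BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40, "Pi": 2**50, "Ei": 2**60}
DECIMAL = {"m": Decimal("0.001"), "k": 10**3, "M": 10**6, "G": 10**9,
           "T": 10**12, "P": 10**15, "E": 10**18}


def parse_quantity(text: str) -> Decimal:
    """Convert a quantity string such as '500m' or '128Mi' to a Decimal."""
    # Check the two-letter binary suffixes first so 'Mi' isn't mistaken for 'M'.
    for suffix, factor in BINARY.items():
        if text.endswith(suffix):
            return Decimal(text[: -len(suffix)]) * factor
    for suffix, factor in DECIMAL.items():
        if text.endswith(suffix):
            return Decimal(text[: -len(suffix)]) * factor
    return Decimal(text)  # no suffix: a plain number of cores or bytes


print(parse_quantity("500m"))   # 0.500 (CPU cores)
print(parse_quantity("128Mi"))  # 134217728 (bytes)
```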

Security is more of a "kind of" comparison. Containers keep applications isolated from each other, but every container on a host shares the host's kernel, so a kernel vulnerability can potentially affect all of them. A virtual machine runs its own kernel on top of a hypervisor, which is generally a stronger isolation boundary. On the other hand, container images are small and are typically treated as immutable, which limits what can change while they run and makes it easy to replace a compromised or misbehaving container with a fresh copy.

With containers, you can achieve true cloud portability

Containers can indeed provide a high degree of portability and allow applications to be easily moved between different environments. This is one of the key benefits of using containers, as it enables organizations to develop and deploy applications consistently and predictably, regardless of the underlying infrastructure.

Containers provide this portability by packaging the application and its dependencies into a self-contained unit that can be moved quickly and run on any host with a compatible operating system, CPU architecture, and container runtime. This means an application can be developed and tested on one system and then run in production on another without modification.

Additionally, containers can run on a wide range of infrastructure, from on-premises data centers to public clouds. This allows organizations to choose the best environment for their applications and to move them quickly between environments when needed.
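In practice, this portability hinges on image references: the same string, such as `nginx:1.25` or `ghcr.io/org/app:v1`, names the same image on any host that can reach the registry. Below is a simplified Python sketch of how such a reference breaks down into registry, repository, and tag, following Docker's defaulting conventions (no registry means Docker Hub at `docker.io`, single-name images live under `library/`, and a missing tag means `latest`). Digests (`@sha256:...`) and other edge cases are deliberately not handled.

```python
def parse_image(ref: str) -> tuple[str, str, str]:
    """Split an image reference into (registry, repository, tag).

    Simplified: digests such as '@sha256:...' are not supported.
    """
    first, _, rest = ref.partition("/")
    # The first path component is a registry host only if it looks like one.
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        registry, remainder = "docker.io", ref

    # A tag is introduced by a ':' that appears after the last '/'.
    last_slash = remainder.rfind("/")
    colon = remainder.rfind(":")
    if colon > last_slash:
        repository, tag = remainder[:colon], remainder[colon + 1:]
    else:
        repository, tag = remainder, "latest"

    # Official Docker Hub images live under the 'library/' namespace.
    if registry == "docker.io" and "/" not in repository:
        repository = "library/" + repository
    return registry, repository, tag


print(parse_image("nginx:1.25"))          # ('docker.io', 'library/nginx', '1.25')
print(parse_image("ghcr.io/org/app:v1"))  # ('ghcr.io', 'org/app', 'v1')
```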

Rise of containers is promoting adoption of Kubernetes

The rise of containers has indeed played a significant role in promoting the adoption of Kubernetes. Kubernetes is a container orchestration platform, which means that it is designed to manage and deploy large numbers of containers across a cluster of machines.

As containers have become more widely used, there has been a growing need for effective ways to manage and deploy them at scale. This has led to the rise of Kubernetes and other container orchestration platforms, which provide the necessary tools and features for managing and deploying large numbers of containers.

Additionally, Kubernetes has been designed to work seamlessly with containers and to provide a consistent and predictable way to run and manage containerized applications. This has made it an attractive choice for organizations using containers and looking for a powerful and flexible platform for managing and deploying their applications.

Primary components that make up Kubernetes

Kubernetes is maintained under the CNCF by a community of contributors that includes Google, Red Hat, and many other large organizations, as well as independent expert developers. Kubernetes provides a highly scalable container management solution for any cloud or on-premises infrastructure. The platform consists of several main components:

Kubernetes Master: Also called the control plane, it is the central point of control for a Kubernetes cluster. It is responsible for managing the cluster and coordinating the activities of the Nodes. The Master includes several components, such as the API server, which exposes the Kubernetes API and allows other components to interact with the cluster; the Scheduler, which decides which Node each Pod (and therefore each part of an application) runs on; and the Controller Manager, which runs the controllers that provide cluster-level functions such as replication and self-healing.

Kubernetes Node: The Nodes are the worker machines in a Kubernetes cluster. They run the applications and workloads that are managed by the Master. Each Node runs a container runtime, such as containerd or CRI-O, which is responsible for running the containers and providing the necessary resources and isolation. The Nodes communicate with the Master to receive instructions and to report on the status of their workloads.

Kubernetes Dashboard: An optional, web-based user interface add-on that provides a graphical view of cluster resources and allows users to manage and control workloads.

Other key components of Kubernetes include the etcd distributed key-value store, which is used for storing the cluster's configuration and state; the kubelet, which is the primary agent that runs on each Node and manages the containers; and the kubectl command-line interface, which is used to interact with the cluster and manage its applications.
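The Scheduler's job of deciding where each Pod runs can be pictured as two steps, which kube-scheduler also follows at a high level: filter out nodes that cannot fit the Pod, then score the remaining nodes and pick the best one. The Python toy below uses a single, hypothetical scoring rule (prefer the node with the largest fraction of CPU and memory still free after placement); the real scheduler uses many pluggable filter and scoring plugins. The `Node` fields and node names are illustrative.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    cpu_capacity: float     # total allocatable cores (assumed > 0)
    mem_capacity_mi: int    # total allocatable memory in MiB (assumed > 0)
    cpu_used: float = 0.0
    mem_used_mi: int = 0


def schedule(cpu: float, mem_mi: int, nodes: list[Node]) -> Optional[str]:
    """Pick a node for a Pod requesting `cpu` cores and `mem_mi` MiB, or None."""
    # 1. Filter: drop nodes that lack enough free CPU or memory.
    feasible = [
        n for n in nodes
        if n.cpu_used + cpu <= n.cpu_capacity and n.mem_used_mi + mem_mi <= n.mem_capacity_mi
    ]
    if not feasible:
        return None  # no node fits; in Kubernetes the Pod would stay Pending

    # 2. Score: fraction of CPU and memory left free after placing the Pod.
    def score(n: Node) -> float:
        cpu_free = 1 - (n.cpu_used + cpu) / n.cpu_capacity
        mem_free = 1 - (n.mem_used_mi + mem_mi) / n.mem_capacity_mi
        return cpu_free + mem_free

    best = max(feasible, key=score)
    # "Bind" the Pod by reserving its resources on the chosen node.
    best.cpu_used += cpu
    best.mem_used_mi += mem_mi
    return best.name


nodes = [Node("node-a", 2.0, 4096), Node("node-b", 4.0, 8192)]
print(schedule(1.0, 1024, nodes))  # node-b: more room left afterwards
print(schedule(0.5, 512, nodes))   # node-a
print(schedule(8.0, 1024, nodes))  # None: no node has 8 free cores
```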

Game-changing implications of Kubernetes

Kubernetes has several game-changing implications for how applications are developed, deployed, and managed. Some of the critical implications of Kubernetes include the following:

- Increased agility and speed: Kubernetes provides a powerful and flexible platform for running and managing applications, which allows organizations to deploy and update applications quickly and easily. This can reduce the time and effort required to develop and deploy applications and enable organizations to respond more rapidly to changing business needs.

- Improved scalability and reliability: Kubernetes includes features such as self-healing and autoscaling, which can help to ensure that applications are always available and running at the required capacity. This can improve the reliability and performance of applications and help organizations handle sudden increases in traffic or workloads.

- Reduced operational overhead: Kubernetes provides a consistent and centralized way to manage and deploy applications across a cluster of machines. This can simplify the operational complexity of running and managing applications and help organizations reduce the time and effort required to maintain their applications.

- Greater portability and flexibility: Kubernetes is designed to be portable and run on various infrastructures, from on-premises data centers to public clouds. This can give organizations greater flexibility and choice in where they run their applications and make it easier to move applications between different environments.

Overall, Kubernetes has the potential to significantly change the way applications are developed, deployed, and managed and can provide organizations with many benefits in terms of agility, scalability, reliability, and simplicity.

Kubernetes skills are becoming increasingly important for software engineers and other IT professionals.

The use of Kubernetes and containers is growing in importance for software developers and other IT professionals because of their widespread adoption and the many advantages they offer for creating, deploying, and maintaining applications. Some of the reasons why Kubernetes and container skills are becoming increasingly important include:

Kubernetes helps you manage your containerized applications. It helps you run and scale them, and it can help keep costs down by packing workloads efficiently onto shared machines.

It is based on the idea that applications should be constructed as small, individual pieces (containers) rather than large, monolithic programs. In a containerized environment, you don't worry about individual machines; instead, you worry about how to run applications and services across potentially thousands of machines.

Kubernetes also offers built-in tools for managing applications and services across potentially thousands of machines. For example, the smallest deployable unit is a Pod: a group of one or more containers that are always scheduled together on the same node and share a network address. You describe Pods, or higher-level objects such as Deployments that create them, in a manifest file and attach labels (key/value pairs such as `app: web`) to them; other objects, such as Services, then use label selectors to find and group the Pods they work with. Your Pods can also be spread across multiple hosts to provide high availability, and a Service can load-balance traffic across them. If a host fails or becomes unavailable because of hardware problems or network connectivity issues, Kubernetes automatically creates replacements on other machines for the Pods managed by a controller such as a Deployment, so the application can keep running with minimal interruption.
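Labels and selectors are how Kubernetes groups Pods: a Deployment or a Service selects the Pods whose labels match its selector. As a sketch, the Python below builds a minimal Deployment manifest as a dictionary (the field names follow the `apps/v1` Deployment schema; the `web` name, the `app` label, and the `nginx:1.25` image are just example values) and restates the equality-based matching rule that `matchLabels` uses. Because `kubectl apply -f` accepts JSON as well as YAML, the printed output could be saved to a file and applied.

```python
import json


def deployment(name: str, image: str, replicas: int) -> dict:
    """Build a minimal apps/v1 Deployment manifest."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels in the Pod template below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": 80}]}
                    ]
                },
            },
        },
    }


def matches(match_labels: dict, pod_labels: dict) -> bool:
    """matchLabels semantics: every selector key/value pair must be on the Pod."""
    return all(pod_labels.get(k) == v for k, v in match_labels.items())


manifest = deployment("web", "nginx:1.25", replicas=3)
print(json.dumps(manifest, indent=2))

selector = manifest["spec"]["selector"]["matchLabels"]
print(matches(selector, {"app": "web", "tier": "frontend"}))  # True
print(matches(selector, {"app": "db"}))                       # False
```

Extra labels on a Pod (such as `tier` above) don't prevent a match; only the selector's own key/value pairs must be present.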

Kubernetes can help with Orchestration and Deployment

Kubernetes can help with orchestration and deployment in several ways. Some of the key ways in which Kubernetes can assist with these tasks include:

- Automated deployment and updates: Kubernetes provides a declarative approach to application deployment, which means that users define their applications' desired state, and Kubernetes ensures that the application is running as expected. This can make deploying and updating applications easier, as users don't need to worry about the specific steps required to bring the application up or down.

- Rolling updates and rollbacks: Kubernetes allows users to perform rolling updates and rollbacks, which means that they can update their applications incrementally and in a controlled manner. This can help reduce the risk of downtime or other issues during deployments and make it easier to recover from problems if they occur.

- Self-healing and autoscaling: Kubernetes includes features such as self-healing and autoscaling, which help keep applications running and available. If a container or application fails, Kubernetes will automatically replace it, and if autoscaling is configured (for example, with a HorizontalPodAutoscaler), Kubernetes will adjust the number of replicas as the workload changes. This can improve the reliability and performance of applications and can help to reduce the operational overhead of managing them.

- Scheduling and resource management: Kubernetes provides a robust scheduling and resource management system, allowing users to specify their applications' requirements and constraints and let Kubernetes handle the details of running and managing the applications. This can make it easier to deploy and manage applications at scale and help optimize the use of resources in the cluster.
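Two of the behaviors above come down to simple, documented arithmetic. The HorizontalPodAutoscaler computes `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)` and skips scaling when that ratio is within a tolerance of 1.0 (0.1 by default), and a Deployment's rolling update converts percentage `maxSurge` and `maxUnavailable` values (25% each by default) into Pod counts, rounding the surge up and the unavailability down. The Python functions below restate those rules as a sketch; they leave out many details of the real controllers, such as stabilization windows and readiness checks, and the `max_replicas=10` default is an arbitrary example value.

```python
import math


def hpa_desired_replicas(current: int, current_metric: float, target_metric: float,
                         min_replicas: int = 1, max_replicas: int = 10,
                         tolerance: float = 0.1) -> int:
    """Apply the HPA scaling formula, then clamp to [min_replicas, max_replicas]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current  # close enough to the target: do not scale
    desired = math.ceil(current * ratio)
    return max(min_replicas, min(max_replicas, desired))


def rolling_update_bounds(replicas: int, max_surge_pct: int = 25,
                          max_unavailable_pct: int = 25) -> tuple[int, int]:
    """Return (max total Pods, min available Pods) during a rolling update."""
    surge = math.ceil(replicas * max_surge_pct / 100)                # rounded up
    unavailable = math.floor(replicas * max_unavailable_pct / 100)  # rounded down
    return replicas + surge, replicas - unavailable


# 4 replicas averaging 90% CPU against a 50% target: scale out.
print(hpa_desired_replicas(4, 90, 50))  # 8
# 10 replicas with the default 25% / 25% rollout settings.
print(rolling_update_bounds(10))        # (13, 8)
```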

Overall, Kubernetes is designed to provide many benefits for orchestration and deployment and help organizations develop, deploy, and manage applications more efficiently and reliably.

Configuring Kubernetes from Scratch (a brief walkthrough)

To set up and install Kubernetes from scratch, you will need to complete the following steps:

- Install a Linux operating system (highly preferred) on your computer, or use a cloud provider that offers a Linux environment.

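- Install a container runtime that implements the Container Runtime Interface (CRI), such as containerd or CRI-O, to run the containers on each node. (Kubernetes no longer uses Docker Engine directly since v1.24; using Docker Engine requires the separate cri-dockerd adapter.)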

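- Install and configure a Kubernetes master (control plane) node, for example with kubeadm (`kubeadm init`). The master node is responsible for managing the cluster and scheduling workloads.

- Install the kubelet on one or more worker nodes, which are the machines that will run your applications and services, and join them to the cluster (with kubeadm, using `kubeadm join`).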

- Use kubectl, the Kubernetes command-line tool, to deploy and manage applications on the cluster.

- Optional: Install the Kubernetes Dashboard, which provides a web-based interface for managing the cluster, and Helm, a package manager that deploys applications from packaged "charts".
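Before working through these steps, it can help to check which of the tools you need are already installed. The Python sketch below looks for a few executables on the `PATH` using `shutil.which`. The tool list assumes a kubeadm-based setup with containerd, which is only one of several ways to build a cluster, so adjust it to your installer and runtime.

```python
import shutil
from typing import Callable, Optional

# Assumed tool set for a kubeadm + containerd setup; adjust as needed.
REQUIRED_TOOLS = ["containerd", "kubeadm", "kubelet", "kubectl"]


def missing_tools(tools: list[str],
                  which: Callable[[str], Optional[str]] = shutil.which) -> list[str]:
    """Return the tools that cannot be found on PATH."""
    return [tool for tool in tools if which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools(REQUIRED_TOOLS)
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("All required tools found on PATH.")
```

The `which` parameter is injectable so the check can be exercised without the tools actually being installed.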

For more detailed instructions on how to complete each of these steps, see the Kubernetes documentation. A good place to start is the "Getting Started" guide, which provides step-by-step instructions for setting up a simple Kubernetes cluster.

Google, Amazon, and Microsoft are all getting behind Kubernetes

Kubernetes has been around since 2014, but its popularity as a tool for managing cloud-native applications has grown rapidly in recent years. It has also become a way for cloud vendors to differentiate themselves from their competitors and gain an edge in a highly competitive market, and Google, Amazon, and Microsoft all support Kubernetes in various ways.

Google is the original creator of Kubernetes and continues to be a significant contributor to the project. Google offers Kubernetes as a service through Google Kubernetes Engine (GKE), which allows users to easily create and manage Kubernetes clusters on Google Cloud Platform. Google also provides tools, support, and training for using Kubernetes on its cloud platform.

Amazon is also a significant supporter of Kubernetes. Amazon offers Kubernetes as a service through Amazon Elastic Kubernetes Service (EKS), which allows users to create and manage Kubernetes clusters on the Amazon Web Services (AWS) cloud platform. Amazon also provides tools, support, and training for using Kubernetes on AWS.

Microsoft also supports Kubernetes. Microsoft offers Kubernetes as a service through Azure Kubernetes Service (AKS), which allows users to create and manage Kubernetes clusters on the Microsoft Azure cloud platform. Microsoft also provides tools, support, and training for using Kubernetes on Azure.

Overall, Kubernetes is a powerful tool that can greatly simplify the process of deploying and managing containerized applications in the cloud. It's a game-changer because it allows developers to build, deploy, and manage their applications more efficiently and cost-effectively, with far less need to worry about the underlying infrastructure.

Conclusion

Hopefully, this article has helped you understand what Kubernetes is and why it's an important technology. I have covered the basic concepts of Kubernetes (briefly, not exhaustively) along with its key features, so now it's time for you to go ahead and try out some of these things for yourself.

