React is arguably the most popular GUI framework for the web. The advent of WebAssembly and the Web Audio API — combined with cutting-edge, miniaturized speech technology — can enhance web apps with a Voice User Interface, or VUI, powered entirely within the web browser itself. With a handful of npm packages and about ten lines of code, you can voice-enable React, creating a proof-of-concept that understands natural commands and requires no cloud connection.

Start up a new React project

Create React App (CRA) is a popular starting point for building apps with React, and a natural choice. If you prefer, you could use any other React setup, such as Gatsby.

When you start up a brand-new CRA project, you’re greeted with a spinning React logo. We’re going to add Voice AI to control the scale, rotation, and translation of the logo via CSS properties. The idea is to keep the project as minimalistic as possible, showing just the process of integrating private speech recognition features, leaving the rest to the reader’s imagination.

If you haven’t already, get set up with Create React App. You can use npm or yarn to run the commands. We’ll use yarn here to create our project, add the voice packages, and start it up. Using TypeScript is optional, but tremendously helpful in my experience.

    yarn create react-app react-voice-transform --template typescript
    cd react-voice-transform
    yarn add @picovoice/web-voice-processor @picovoice/rhino-react
    yarn start

If you visit localhost:3000 in your web browser, you should see the React logo spinning on your screen. Now we can add code to voice-enable the page, and use CSS to change the logo so we can see a visual response.

What about the Web Speech API?

Speech APIs are not entirely new to browsers, per se, but support has been limited to Google Chrome. When SpeechRecognition is used in Chrome, your microphone data is forwarded to Google’s cloud, so calling it a browser API is somewhat misleading. To that point, SpeechRecognition does not work in Electron, even though Electron is built on Chromium.

And while speech-to-text is a tremendously powerful tool, it can serve as a rather blunt instrument when we narrow our focus to voice commands. As intuitive as it is to transcribe speech, then scan that text for meaning, there are some major drawbacks to this approach, beyond the concerns about privacy and reliability with cloud-based APIs.

The fundamental issue with generic transcription is that you’re trying to anticipate every possible word and phrase in a spoken language when in reality your focus is often much narrower. An elevator panel might need to understand “lobby” and “penthouse”, and “take me to floor 5”, but it certainly doesn’t need to know how to have a conversation about the velocity of an unladen swallow.

Instead, the Rhino Speech-to-Intent engine that we are using is trained with a grammar that solves a particular problem, or context. In this tutorial, the speech context understands how to modify a transform by setting either the scale, rotation, or translation as a percentage value (that’s it!). The resulting model is bespoke for the purpose, skips transcription, and directly returns a JavaScript object indicating user intent. By focusing the speech recognition engine like this, we receive tremendous boosts to efficiency and accuracy over using an online ASR.

Voice-enabling code

Get a free AccessKey from Picovoice Console; you will pass it to the init function. Also download the English model parameter file for Rhino from GitHub and save it to the project’s public directory. Rhino uses this file as the basis for understanding English contexts (other languages are also supported).

The trained Rhino speech context (a .rhn file) also goes in the public directory. We pass the paths of both files, along with the AccessKey, to the init function from the useRhino React hook. This hook provides several variables and functions. The key one for us is the process function, which we can connect to a button in React as a means of activating speech recognition.

We’ll make sure the button is only enabled when Rhino isLoaded but not isListening, making use of a few of the hook variables. The error hook value is also important for handling scenarios where a user does not have a microphone or refuses permission. The contextInfo value lets us output the source YAML of the grammar, which gives us an exhaustive representation of all possible phrases.

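    import { useEffect } from "react";
    import { useRhino } from "@picovoice/rhino-react";

    // Hooks must be called inside a component, so the snippet lives in App.
    export default function App() {
      const {
        inference,
        contextInfo,
        isLoaded,
        isListening,
        error,
        init,
        process
      } = useRhino();

      // Initialize Rhino once, when the component mounts.
      useEffect(() => {
        init(
          "${ACCESS_KEY}",
          { publicPath: "${PATH_TO_CONTEXT_FILE}" },
          { publicPath: "${PATH_TO_MODEL_FILE}" }
        );
      }, []);

      // Enable the button only when Rhino is loaded, idle, and error-free.
      return (
        <button
          onClick={() => process()}
          disabled={!isLoaded || isListening || error !== null}
        >
          Talk
        </button>
      );
    }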
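The button logic above only disables the control; it does not tell the user why. As a rough sketch, the same hook values could be turned into a status message. The VoiceState type and the describeVoiceState helper are hypothetical names introduced for illustration, and the shape of error (an object with a message, or a plain string) is an assumption rather than the library's documented type.

```typescript
// Hypothetical subset of the values returned by useRhino, as used in this article.
// The exact type of `error` is an assumption; check the SDK typings.
interface VoiceState {
  isLoaded: boolean;
  isListening: boolean;
  error: { message: string } | string | null;
}

// Derive a user-facing label and whether the Talk button should be enabled.
function describeVoiceState(state: VoiceState): { label: string; canTalk: boolean } {
  if (state.error !== null) {
    // Covers cases such as a missing microphone or refused permission.
    const detail = typeof state.error === "string" ? state.error : state.error.message;
    return { label: `Voice unavailable: ${detail}`, canTalk: false };
  }
  if (!state.isLoaded) {
    return { label: "Loading voice model...", canTalk: false };
  }
  if (state.isListening) {
    return { label: "Listening...", canTalk: false };
  }
  return { label: "Press Talk and speak a command", canTalk: true };
}
```

In a component, the returned label could be rendered next to the button, and canTalk could replace the inline disabled expression.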

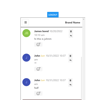

Here is the completed project in Replit. Make sure to give microphone permissions, else you might see the following screen:

Finally, we need a function for Rhino to call when it has reached a conclusion about the audio sent to it. Rhino needs to first determine if the command was understood (e.g. if you ask it to tell you a joke, it won’t understand that because it’s only listening for the grammar specified). If it was understood, a complete inference object will be returned indicating the intent of the user.

Our intents are quite simple: setScale, setTranslation, setRotation and reset. The first three also arrive with some additional information determined from the spoken utterance as key/value pairs, called slots. For instance, if a percentage value was specified in the utterance, we can access the slot value by using the key “pct” in the dictionary. Most of the actual application code is mapping the voice commands into CSS properties.
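To make the mapping concrete, here is a minimal sketch of how an inference could be turned into a CSS transform string. The Inference shape follows the description above (an understood flag, an intent name, and a slots dictionary), but the Transform type, the helper names, and the choice to treat 100% rotation as a full turn are all assumptions made for illustration, not the completed project's actual code.

```typescript
// Shape assumed from the article's description of the inference object.
interface Inference {
  isUnderstood: boolean;
  intent?: string;
  slots?: Record<string, string>;
}

// Hypothetical state for the logo's transform.
interface Transform {
  scale: number;       // 1 = original size
  rotation: number;    // degrees
  translation: number; // percent of the element's own width
}

const INITIAL: Transform = { scale: 1, rotation: 0, translation: 0 };

// Read the "pct" slot as a number; parseFloat tolerates "65" or "65%".
function parsePct(slots?: Record<string, string>): number | null {
  const raw = slots?.pct;
  if (raw === undefined) return null;
  const value = parseFloat(raw);
  return Number.isNaN(value) ? null : value;
}

// Return the next transform for an inference, leaving state unchanged
// when the command was not understood or carries no usable percentage.
function applyInference(current: Transform, inference: Inference): Transform {
  if (!inference.isUnderstood) return current;
  if (inference.intent === "reset") return INITIAL;
  const pct = parsePct(inference.slots);
  if (pct === null) return current;
  switch (inference.intent) {
    case "setScale":
      return { ...current, scale: pct / 100 };
    case "setRotation":
      // Assumption: 100% corresponds to one full turn.
      return { ...current, rotation: (pct / 100) * 360 };
    case "setTranslation":
      return { ...current, translation: pct };
    default:
      return current;
  }
}

// Build the value for the CSS `transform` property.
function toCssTransform(t: Transform): string {
  return `translateX(${t.translation}%) rotate(${t.rotation}deg) scale(${t.scale})`;
}
```

In a component, applyInference would typically run inside an effect that watches the inference value from the hook, with the result of toCssTransform assigned to the logo's style.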

Try it out

Even if you haven’t been following along with the code, you can open this Replit instance which will let you try the demo.

Press the ‘Talk’ button, then say:

“Set the scale to sixty-five percent”

The logo will scale. There’s a CSS transition property added so that the change is animated, making it easier to see what’s happening (and pleasing to the eye).

If you’re interested in customizing the Rhino context or training a different one altogether, you can do so via the Picovoice Console (select the WebAssembly platform when training) using the Rhino Grammar.
