The author continues his series for Otomato on eBPF-capable tools.

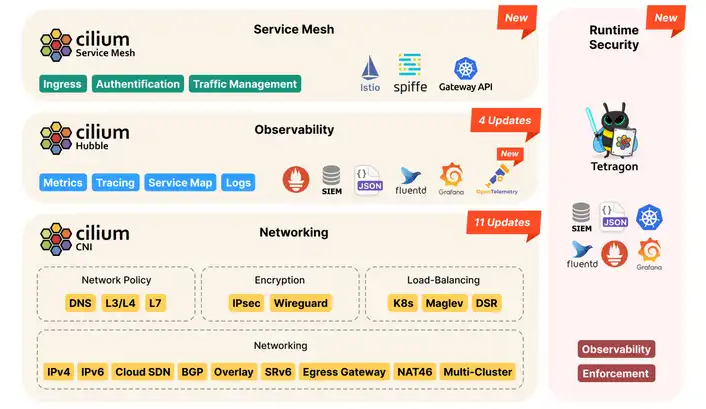

NOS stands for Networking, Observability, and Security.

Some details bear repeating

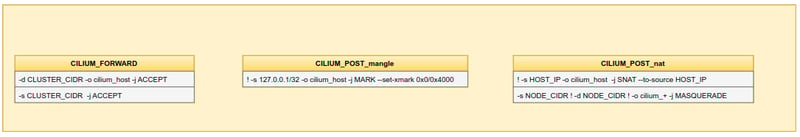

As you may know, Kubernetes uses iptables for kube-proxy, the component that implements Services and load balancing through DNAT iptables rules. Most CNI plugins also use iptables to enforce Network Policies.

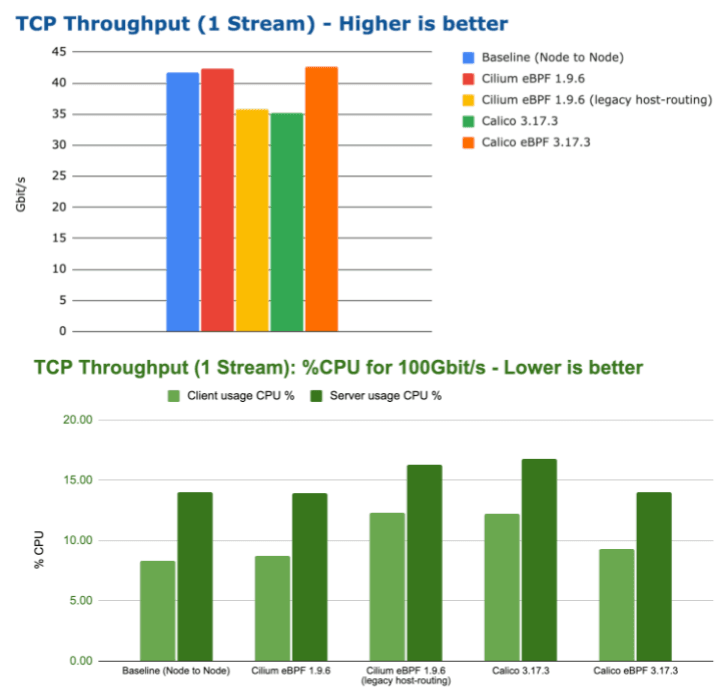

Performance suffers under heavy traffic or when the iptables rules on a system change frequently. As the number of services grows, measurements show unexpected latency and decreased performance.
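To get a feel for why rule count matters, here is a toy model in Python. It is not how iptables or eBPF are actually implemented; it only contrasts walking an ordered rule list with a single hash-table lookup. The function names and the service addresses are made up for the illustration.

```python
# Toy model only: contrasts a sequential rule scan with a hash lookup.
# Names and addresses are hypothetical, not taken from iptables or Cilium.

def build_rules(n_services):
    """Return an ordered rule list and an equivalent dict keyed by (ip, port)."""
    rules = [
        ((f"10.96.{i // 256}.{i % 256}", 80), f"backend-{i}")
        for i in range(n_services)
    ]
    return rules, dict(rules)

def linear_match(rules, key):
    """Walk the rules in order and count comparisons until one matches."""
    for comparisons, (match, target) in enumerate(rules, start=1):
        if match == key:
            return target, comparisons
    return None, len(rules)

def hashed_match(table, key):
    """One hash lookup, whose cost does not grow with the table size on average."""
    return table.get(key), 1

if __name__ == "__main__":
    for n in (10, 1000, 10000):
        rules, table = build_rules(n)
        last_key = rules[-1][0]  # worst case for the sequential scan
        _, scanned = linear_match(rules, last_key)
        _, looked_up = hashed_match(table, last_key)
        print(f"{n} services: scan={scanned} comparisons, hash={looked_up}")
```

The worst-case scan grows with the number of services, while the lookup stays flat. That is the intuition behind moving service handling from long rule chains to map lookups.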

eBPF offers a way out of these troubles. It first appeared in Linux kernel 3.18.

Making full use of eBPF requires Linux 4.4 or later!

e[xtended]BPF, as we know it today, was created by Alexei Starovoitov and Daniel Borkmann, who still maintain it and have since been joined by over a hundred contributors. Looking ahead, it is worth noting that Daniel now works for Isovalent.

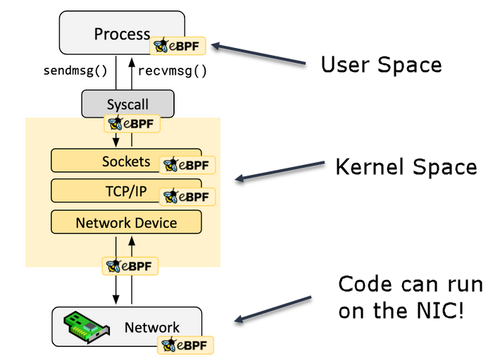

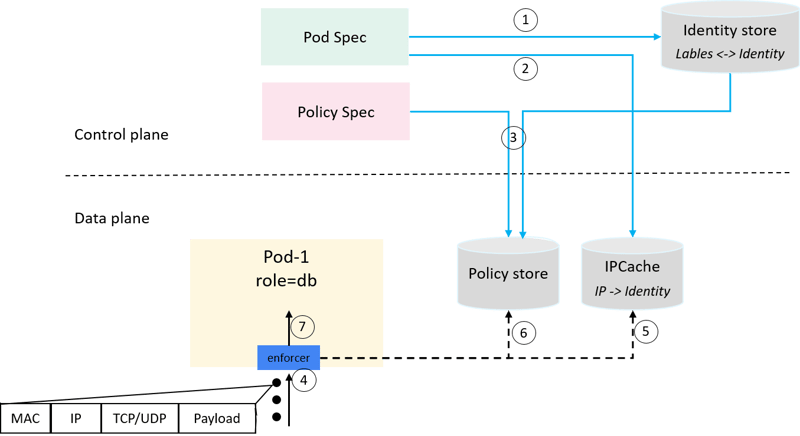

eBPF, which stands for extended Berkeley Packet Filter, is a Linux kernel feature that makes it possible to automatically collect telemetry data such as full-body requests, resource and network metrics, application profiles, and more.

eBPF mechanics are useful when we need to collect, query, and process all telemetry data in the cluster. This granular level of observability is possible because eBPF makes the kernel programmable in a safe and performant way.

Rather than relying on gauges and static counters exposed by the operating system, eBPF allows for the generation of visibility events and the collection and in-kernel aggregation of custom metrics based on a broad range of potential sources.

With the advent of eBPF, you can add logic to the kernel from user space rather than altering the kernel code. This approach is significantly safer, as well as simpler. Through its safety checks, the eBPF verifier ensures that the eBPF code you load into your kernel is safe to execute.

For instance, it ensures and enforces:

- your program runs in a limited amount of CPU time (no unbounded loops);

- your program does not crash the kernel or cause harmful, fatal bugs;

- your eBPF code is being loaded by a process with the necessary permissions;

- your code stays within a size limit;

- no unreachable code is permitted.
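Some of the checks above can be mimicked with a toy verifier in Python. This is a deliberately simplified sketch, not the kernel's algorithm: the instruction format, the `MAX_INSNS` limit, and the rule that every backward jump is rejected are all assumptions made for the demo (the real verifier is far more sophisticated, and newer kernels do accept loops they can prove bounded). The permission check is not modeled at all.

```python
# Toy verifier sketch. Instruction format and limits are hypothetical.
# An instruction is a tuple: ("mov",), ("jmp", target), ("jeq", target), ("exit",).

MAX_INSNS = 16  # made-up size limit for the demo

def verify(program):
    """Return a list of rejection reasons; an empty list means 'accepted'."""
    if not program:
        return ["empty program"]
    errors = []
    if len(program) > MAX_INSNS:
        errors.append("program too large")

    reachable = set()
    stack = [0]  # walk the control flow graph from the first instruction
    while stack:
        pc = stack.pop()
        if pc in reachable:
            continue
        if not 0 <= pc < len(program):
            errors.append(f"control flow leaves the program at {pc}")
            continue
        reachable.add(pc)
        op, *args = program[pc]
        if op == "exit":
            continue
        if op in ("jmp", "jeq"):
            target = args[0]
            if target <= pc:
                # Simplification: any backward jump is treated as a possible loop.
                errors.append(f"backward jump at {pc} (possible loop)")
            else:
                stack.append(target)
            if op == "jmp":
                continue  # unconditional jump: no fall-through
        stack.append(pc + 1)  # fall through to the next instruction

    unreachable = sorted(set(range(len(program))) - reachable)
    if unreachable:
        errors.append(f"unreachable instructions: {unreachable}")
    return errors

if __name__ == "__main__":
    print(verify([("mov",), ("jeq", 3), ("mov",), ("exit",)]))  # accepted
    print(verify([("mov",), ("jmp", 0)]))                       # loop
    print(verify([("jmp", 2), ("mov",), ("exit",)]))            # dead code
```

The point is that the program is analyzed before it ever runs: anything the checker cannot prove safe is simply refused at load time.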

So, think of it as a lightweight, fully sandboxed virtual machine (VM) inside the Linux kernel. eBPF programs are event-driven: they run when a specific hook is hit, such as a network event, a system call, a function entry, or a kernel tracepoint.

Popular Projects Powered by eBPF

- Pixie, application troubleshooting platform for Kubernetes

- Cilium by Isovalent, eBPF Networking, Security and Observability for Kubernetes

- Falco, cloud-native runtime security

- Tracee by Aqua, runtime security and forensics

- Calico by Tigera, a direct competitor to Cilium as the CNI.

What is Cilium? What does it offer?

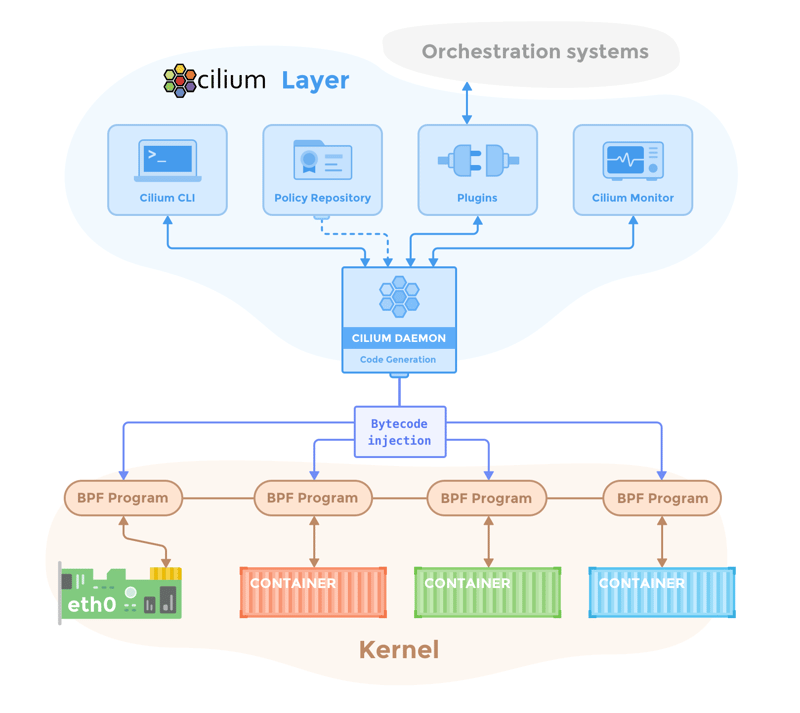

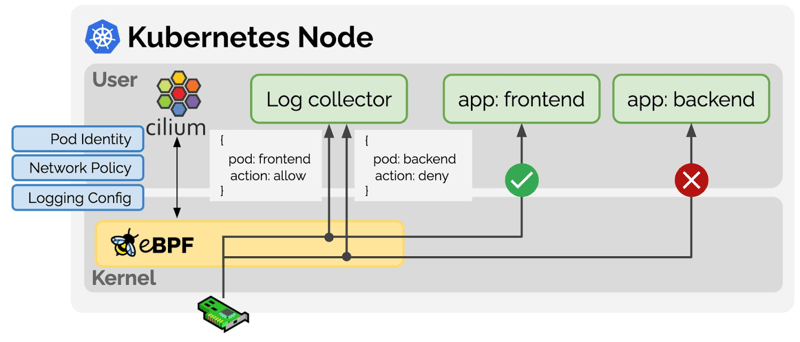

Cilium is an open source project built on top of eBPF to meet the networking, security, and visibility requirements of container workloads. It offers a high-level abstraction over eBPF.

What Kubernetes and container runtimes are to the Linux kernel's namespaces, cgroups, and seccomp, Cilium is to eBPF: the appropriate abstraction layer above it.

The Least Privilege security paradigm for network policy

Applying Least Privilege when your K8s pods communicate with one another is a recommended practice. The basic K8s Network Policies (which operate at L3/L4) are effective, but Cilium Network Policies let you go further (they operate more broadly, at L3-L7).

In the age of K8s and microservices, this can be quite helpful, because monitoring and regulating network traffic by network metadata alone (such as IPs and ports) doesn't add much value. As services come and go, IPs and ports are always changing.

The flexibility of this approach is that Cilium lets you manage traffic using Pod, HTTP, gRPC, Kafka, DNS, and other metadata.

For instance, you can create HTTP rules that specify the path, headers, and request methods that allow a particular Pod to make a specific API call. Another example is creating DNS rules based on FQDN to restrict access to only certain domains. This lets us establish security policies that are more useful and practical for our real-world use cases.
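The two examples above can be sketched as CiliumNetworkPolicy manifests. The Python below only builds the manifests as dictionaries and prints them as JSON, which `kubectl apply -f` also accepts. All names, labels, paths, and domains (`frontend`, `backend`, `/v1/status`, `api.example.com`) are hypothetical, and the kube-dns labels are an assumption about a typical cluster; check them against your own setup before applying anything.

```python
import json

def http_policy(name, server_labels, client_labels, port, method, path):
    """L7 rule: only `client_labels` pods may call `method path` on the server."""
    return {
        "apiVersion": "cilium.io/v2",
        "kind": "CiliumNetworkPolicy",
        "metadata": {"name": name},
        "spec": {
            "endpointSelector": {"matchLabels": server_labels},
            "ingress": [{
                "fromEndpoints": [{"matchLabels": client_labels}],
                "toPorts": [{
                    "ports": [{"port": str(port), "protocol": "TCP"}],
                    "rules": {"http": [{"method": method, "path": path}]},
                }],
            }],
        },
    }

def fqdn_policy(name, client_labels, domain):
    """Egress rule: the selected pods may only reach `domain` (plus cluster DNS)."""
    return {
        "apiVersion": "cilium.io/v2",
        "kind": "CiliumNetworkPolicy",
        "metadata": {"name": name},
        "spec": {
            "endpointSelector": {"matchLabels": client_labels},
            "egress": [
                {   # Allow DNS lookups so that names can be resolved and observed.
                    "toEndpoints": [{"matchLabels": {
                        "k8s:io.kubernetes.pod.namespace": "kube-system",
                        "k8s:k8s-app": "kube-dns",  # assumed DNS labels
                    }}],
                    "toPorts": [{
                        "ports": [{"port": "53", "protocol": "ANY"}],
                        "rules": {"dns": [{"matchPattern": "*"}]},
                    }],
                },
                {"toFQDNs": [{"matchName": domain}]},
            ],
        },
    }

if __name__ == "__main__":
    policies = [
        http_policy("allow-status", {"app": "backend"}, {"app": "frontend"},
                    8080, "GET", "/v1/status"),
        fqdn_policy("only-example-api", {"app": "frontend"}, "api.example.com"),
    ]
    for policy in policies:
        print(json.dumps(policy, indent=2))
```

Note that neither policy mentions a single IP address: the rules follow labels, HTTP attributes, and DNS names, so they keep working as pods come and go.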

With Cilium v1.12 you may even install a route which informs other endpoints that the Pod is now unreachable (i.e., when a Pod is deleted)!

Multi-Cluster Connectivity & Load Balancing

Cilium enables K8s pods to communicate and be discovered across K8s clusters through a cluster mesh. High availability and multi-cloud (connecting K8s clusters across cloud providers) are among the use cases.

With Cilium, services can now take topology and affinity into account. This means that instead of balancing evenly across all load-balancing backends, you can choose to prefer backends in the local cluster or in remote clusters.
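The idea of affinity-aware balancing can be illustrated with a few lines of Python. This is a sketch of the concept, not Cilium's data path: the backend tuples, the `affinity` values, and the fallback to any healthy backend when the preferred group is empty are assumptions made for the example.

```python
import random

def pick_backend(backends, local_cluster, affinity="none", rng=random):
    """Pick one backend from a list of (address, cluster, healthy) tuples.

    affinity: "local" prefers the caller's cluster, "remote" prefers other
    clusters, "none" balances across every healthy backend.
    """
    healthy = [b for b in backends if b[2]]
    if affinity == "local":
        preferred = [b for b in healthy if b[1] == local_cluster]
    elif affinity == "remote":
        preferred = [b for b in healthy if b[1] != local_cluster]
    else:
        preferred = healthy
    # Assumed behavior: fall back to any healthy backend if the preferred set is empty.
    candidates = preferred or healthy
    return rng.choice(candidates) if candidates else None

if __name__ == "__main__":
    backends = [
        ("10.0.0.1", "cluster-a", True),
        ("10.0.0.2", "cluster-a", False),  # unhealthy local backend
        ("10.1.0.1", "cluster-b", True),
    ]
    for mode in ("none", "local", "remote"):
        print(mode, pick_backend(backends, "cluster-a", mode))
```

With `local` affinity the remote cluster acts as a safety net rather than an equal peer, which is what makes this pattern attractive for high availability.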

Cilium's eBPF data path can also replace kube-proxy, which relies on iptables, the very mechanism eBPF is superseding.

This switch improves performance significantly.

Cilium's “underwater” features

- Transparent pod-to-pod encryption, with support for the IPsec and WireGuard protocols

- Network Performance Improvement

- Infra Scalability

- Enhanced visibility of traffic flows, including L7 protocols, in addition to IPs and ports.

- Monitoring and better visibility into network faults between your microservices. Cilium offers metrics that work with Prometheus.

Cilium CNI supports Layer 7 (L7) policies, a feature on which it competes with Istio. Cilium’s Layer 7 policy is simple to use thanks to its own Envoy- and proxylib-based filter.

Observability matters!

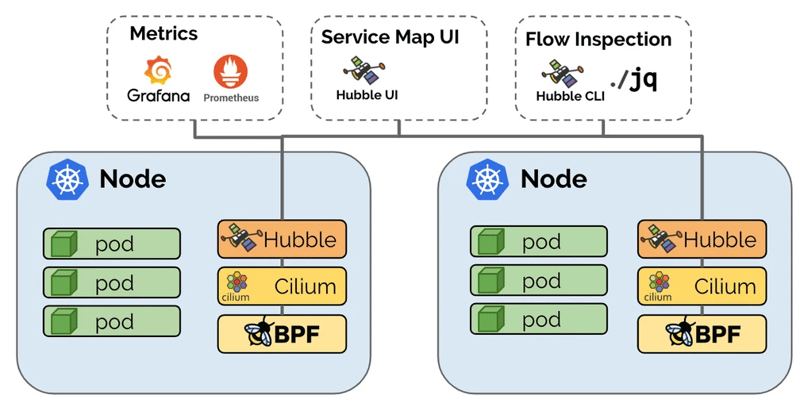

The Cilium team also offers Hubble (yes, named like the famous deep-space telescope, but for clouds), a fully distributed networking and security observability platform for cloud native workloads. Hubble is open source software built on top of Cilium and eBPF to enable deep visibility into the communication and behavior of services, as well as the networking infrastructure, in a completely transparent manner.

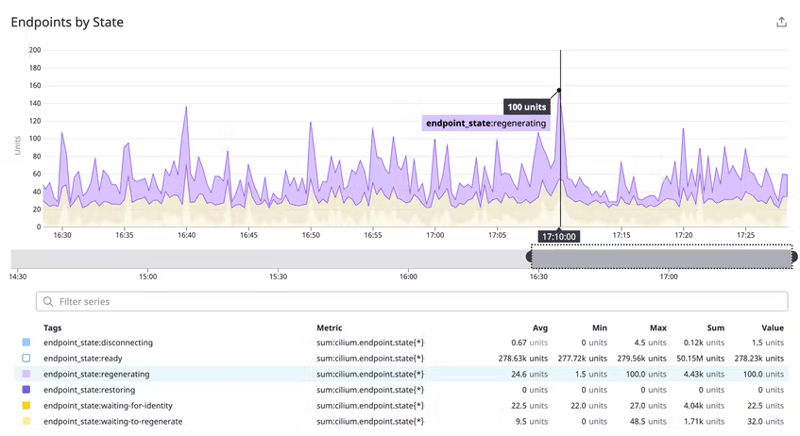

Policies can be enforced only on pods that are managed by the Cilium agent. Unmanaged pods are frequently the result of deploying workloads to a cluster before installing or running Cilium, which can lead to the platform missing any newly created pods in your environment. To identify which pods are not managed by the platform, you can compare a node's total pod count with the number of Cilium-managed pods on it (endpoint state).

According to Cilium's endpoint lifecycle, the metric's state tag offers further detail about the stage a set of endpoints is in. This lets you track how each of your pods is doing as Cilium applies and modifies network policies. If several endpoints have the regenerating status, for instance, the agent may be refreshing its networking configuration. It's important to keep an eye on pods in this state to make sure they receive the required policy updates.
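The comparison described above boils down to a set difference plus a count per state. Here is a minimal sketch, assuming you have already collected a node's pod names and a mapping from Cilium-managed pod to endpoint state from your monitoring stack. The pod names and the sample data are made up.

```python
from collections import Counter

def unmanaged_pods(pods_on_node, endpoint_states):
    """Pods that run on the node but have no Cilium endpoint."""
    return sorted(set(pods_on_node) - set(endpoint_states))

def state_summary(endpoint_states):
    """Number of endpoints per lifecycle state (e.g. ready, regenerating)."""
    return Counter(endpoint_states.values())

if __name__ == "__main__":
    # Hypothetical sample data for one node.
    pods_on_node = ["api-7c9f", "cart-5d2b", "legacy-job-1", "web-84aa"]
    endpoint_states = {
        "api-7c9f": "ready",
        "cart-5d2b": "regenerating",
        "web-84aa": "ready",
    }
    print("unmanaged:", unmanaged_pods(pods_on_node, endpoint_states))
    print("by state:", dict(state_summary(endpoint_states)))
```

A non-empty "unmanaged" list is the signal that some pods are running without policy enforcement, and a growing "regenerating" count is worth a closer look.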

And no one has captured the nuances of Cilium-based monitoring better than the Datadog team. The illustration belongs to them.

Hubble's metrics, in contrast to Cilium metrics, allow you to keep an eye on the connectivity and security of the network in which your Cilium-managed Kubernetes pods are operating.

“How can I benefit from this?”

Well, the author's conviction: a new technology that is useless to the masses is not needed!

GCP GKE

☁️ Google introduced GKE Dataplane V2, an opinionated data plane that harnesses the power of eBPF and Cilium, back in mid-2021. Google also uses Dataplane V2 to bring Kubernetes Network Policy logging to Google Kubernetes Engine (GKE).

To try out Kubernetes Network Policy logging for yourself, create a new GKE cluster with Dataplane V2 using the following command:

    gcloud container clusters create <cluster-name> \
        --enable-dataplane-v2 \
        --enable-ip-alias \
        --release-channel rapid \
        {--region region-name | --zone zone-name}

The configuration of the Cilium agent and the Cilium Network Policy determines whether an endpoint accepts traffic from a source or not. Network policy enforcement is built into GKE Dataplane V2. You do not need to enable network policy enforcement in clusters that use GKE Dataplane V2.

AWS EKS

☁️ If you are on AWS, note that EKS Anywhere uses Cilium for pod networking and security.

By default, every form of Pod-to-Pod communication is permitted within a Kubernetes cluster. Although this flexibility may encourage experimentation, it is not considered secure. You can limit network traffic between pods (often referred to as East/West traffic) and between pods and external services using Kubernetes network policies. Kubernetes network policies operate at layers 3 and 4 of the OSI model. In addition to pod selectors and labels, network policies can use IP addresses, port numbers, protocol numbers, or a combination of these to identify source and destination pods. Tigera's Calico is an open source policy engine that is compatible with EKS.
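To make the L3/L4 model concrete, here is a sketch of two standard Kubernetes NetworkPolicy manifests built as Python dictionaries and printed as JSON: a default-deny for ingress, and an allow rule for one pair of workloads. The namespace, names, labels, and port are hypothetical.

```python
import json

def default_deny_ingress(namespace):
    """Select every pod in the namespace and allow no ingress traffic."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
    }

def allow_ingress(namespace, name, target_labels, source_labels, port):
    """Allow TCP traffic on `port` from `source_labels` pods to `target_labels` pods."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": target_labels},
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": source_labels}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }

if __name__ == "__main__":
    manifests = [
        default_deny_ingress("shop"),
        allow_ingress("shop", "frontend-to-backend",
                      {"app": "backend"}, {"app": "frontend"}, 8080),
    ]
    for manifest in manifests:
        print(json.dumps(manifest, indent=2))
```

Everything here is expressed in terms of labels, protocols, and ports. Anything above layer 4, such as an HTTP path, is out of reach for this API, which is where the extended policies discussed next come in.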

When combined with Istio, Calico enables expanded network policies with a broader set of functionality, including support for layer 7 rules, such as HTTP, in addition to providing the entire set of Kubernetes network policy features. The Cilium developers, Isovalent, have also expanded the network policies to offer a limited amount of support for layer 7 rules, such as HTTP. For limiting communication between Kubernetes Services/Pods and resources that run inside or outside of your VPC, Cilium additionally supports DNS hostnames.

Azure AKS

☁️ If you are on Azure, you may know that AKS has officially supported only two CNIs, Kubenet and Azure CNI. In April 2022, the AKS team announced the ability to create an AKS cluster with no CNI, which means you can deploy any CNI you like. In this blog post, British MVPs from Pixel Robots show how to create an AKS cluster with no CNI and then... deploy Cilium!

OpenShift

☁️ OpenShift: Cilium can also be installed on traditional VMs or bare-metal servers. OpenShift platform teams are able to implement label-based controls on the communication between application pods and external nodes by permitting VMs or bare-metal servers to join the Cilium cluster. On the other hand, the external nodes will have access to the cluster services and be able to resolve cluster names.

k3s & minikube

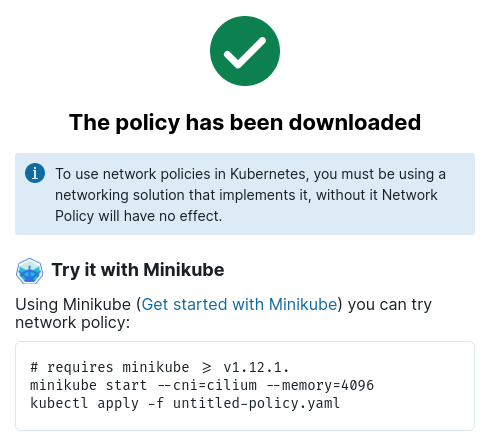

☁️ Finally, you can play with Cilium on k3s, or even on minikube, right in your garage. 🚜

Moreover, once you get comfortable with this chic Network Policy editor from Cilium, you will be able to extend what your lab setup can do. Manifests designed there can be downloaded.

Hands-on labs on Instruqt®

You may try these interactive labs to learn more about the Cilium technology. You can learn and experiment in each lab's specialized live environment.

Good wishes & who else to read

Deploy with care! Strive to have gurus by your side!

The author wishes to thank Yarel Maman, Johann Rehberger, Chris Mutchler, Lin Sun, Arthur Chiao, Glen Yu and Richard Hooper for their contributions to the community.

P.S.: Hardcore devs may find some technical details here and here, on L7 parsing with proxylib in Golang.
