VMware's NSX Datacenter product is designed for a bit more than single enterprise virtual networking and security.

When reviewing platform maximums (NSX-T 3.2 ConfigMax), the listed maximum number of Tier-1 routers is 4,000 logical routers. Achieving that number takes a degree of intentional design, however.

When building a multi-tenant cloud network leveraging NSX Data Center, the primary design elements are straightforward:

-

Shared Services Tenant

- A multi-tenant data center will always have common services like outbound connectivity, orchestration tooling, object storage, DNS.

- This tenant is commonly offered as a component to a physical fabric with a dedicated Workload Domain (WLD), but can be fully virtualized and run on the commodity compute

- Packaging shared services within a WLD will require repetitive instantiation of common services, but makes the service "anycast-like" in that it will be more resilient for relatively little effort

- Implementation with hierarchical Tier-0s is comically easy, just attach the shared Tier-1 to the Infrastructure Tier-0!

- When designing a shared services tenant, a "Globally Routable" prefix is highly recommended in IPv4 to ensure that no conflicts occur. With IPv6, all networks should have globally routable allocations

-

Scaling: Tenants, Tenants, and more Tenants!

- Most fabric routers have a BGP Peer cap of 256 speakers:

- Divide that number in half for dual-stack: 128 speakers

- Remove _ n _ spine nodes, 124 speakers

- Add 4-way redundancy (2 Edge Transport Nodes): 31 Speakers, or 8-way, 15 Speakers

- For customer-owned routing, the scalability maximum of 4,000 logical routers is achievable without good planning

Let's take a look at an infrastructure blueprint for scaling out network tenancy:

The more "boring" version of tenancy in this model supports highly scalable networking, where a customer owns the Tier-1 firewall and can self-service with vCloud Director:

VRF-Lite allows an NSX Engineer to support 100 network namespaces per Edge Transport Node cluster. When leveraging this feature, Tier-1 Logical Routers can connect to a non-default network namespace and peer traditionally with infrastructure that is not owned by the Cloud Provider via Layer 2 Interconnection or something more scalable (like MPLS or EVPN).

Empowering Cloud-Scale Networking with a feature like drop-shipping customer workloads into MPLS is an incredibly powerful tool , not just with scalability,but with ease of management. NSX-T VRFs can peer directly with the PE, simplifying any LDP or SR implementations required to support tenancy at scale.

With this design, we'd simply add a VRF to the "Customer Tier-0" construct, and peer BGP directly with the MPLS Provider Edge (PE). An NSX-T WLD with 3.0 or newer can support 1,600 instances this way, where most Clos fabrics can support ~4,000. The only difference here is that WLDs can be scaled horizontally with little effort, particularly when leveraging VCF.

A tenant VRF or network namespace will still receive infrastructure routes, but BGP engineering, Longest Prefix Match (LPM), AS-Path manipulation all can be used to ensure appropriate pathing for traffic to customer premise, shared infrastructure or other tenants with traditional telecommunications practices. Optionally, the customer's VRF can even override the advertised default route, steering internet-bound traffic to an external appliance.

This reference design solves another substantial maintainability problem with VRF-Lite implementations - VRF Leaking. The vast majority of hardware based routing platforms do not have a good path to traverse traffic between network namespaces, and software based routing platforms struggle with the maintainability issues associated with using the internal memory bus as a "warp dimension".

With overlay networking, this is easily controlled. VRF constructs in NSX-T inherit the parent BGP ASN, which prevents transit by default. The deterministic method to control route propagation between VRFs with eBGP is to replace the ASN of the tenant route with its own, e.g.:

| Original AS-Path | New AS-Path |

| 64906 64905 | 64905 64905 |

AS-Path rewrites provide an excellent balance between preventing transit by default and easily, safely, and maintainably providing transitive capabilities.

_ The more canonical, CCIE-worthy approach to solving this problem is not yet viable at scale. Inter-Tier-0 meshing of iBGP peers is the only feature available, confederations and route reflectors are not yet exposed to NSX-T from FRRouting. When this capability is included in NSX Data Center, iBGP will be the way to go._

Let's build the topology:

NSX Data Center's super-power is creating segments cheaply and easily, so a mesh of this fashion can be executed two ways.

Creating many /31s, one for each router member as overlay segments. NSX-T does this automatically for Tier-0 clustering and Tier-1 inter-links, and GENEVE IDs work to our advantage here. Meshing in this manner is best approach overall, and should be done with automation in a production scenario. I will probably write a mesh generator in a future post.

In this case, we'll do it IPv6-style, by creating a single interconnection segment and attaching a /24/64 to it. Tier-0 routers will mesh BGP with each other over this link. I'm using Ansible here to build this segment, not many of the knobs and dials are necessary so it saves both time and physical space.

tasks:

- name: "eng-lab-vn-segment-ix-10.7.200.0\_24:"

vmware.ansible\_for\_nsxt.nsxt\_policy\_segment:

hostname: "nsx.lab.engyak.net"

username: '`{{ lookup("env", "APIUSER") }}`'

password: '`{{ lookup("env", "APIPASS") }}`'

validate\_certs: False

state: present

display\_name: "eng-lab-vn-segment-ix-10.7.200.0\_24"

transport\_zone\_display\_name: "POTATOFABRIC-overlay-tz"

replication\_mode: "SOURCE"

admin\_state: UP

- name: "eng-lab-vn-segment-ix-10.7.201.0\_24:"

vmware.ansible\_for\_nsxt.nsxt\_policy\_segment:

hostname: "nsx.lab.engyak.net"

username: '`{{ lookup("env", "APIUSER") }}`'

password: '`{{ lookup("env", "APIPASS") }}`'

validate\_certs: False

state: present

display\_name: "eng-lab-vn-segment-ix-10.7.201.0\_24"

transport\_zone\_display\_name: "POTATOFABRIC-overlay-tz"

replication\_mode: "SOURCE"

admin\_state: UP

From here, we configure the following external ports and peers on the infrastructure Tier-0:

- eng-lab-t0r00-ix-1: 10.7.200.1/24 (AS64905, no peer)

- eng-lab-t0r00-ix-vrfs-1: 10.7.201.1/24 (AS64905, no peer)

- eng-lab-t0r00-ix-2: 10.7.200.2/24 (AS64905, no peer)

- eng-lab-t0r00-ix-vrfs-2: 10.7.201.2/24 (AS64905, no peer)

- eng-lab-t0r01-ix-1: 10.7.200.11/24 (AS64906)

- eng-lab-t0r10-ix-1: 10.7.201.100/24 (AS64906)

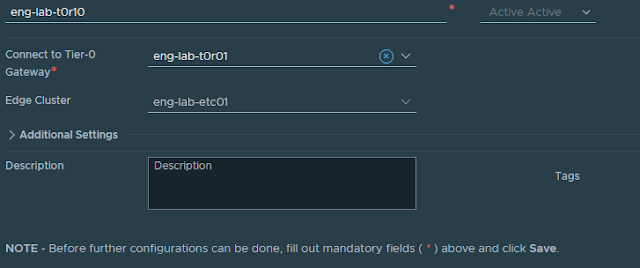

Let's build the tenant default Tier-0:

Then configure BGP and BGP Peers:

Voila! BGP peerings are up without any VLANs whatsoever! Next, the VRF:

For the sake of brevity, I'm skipping some of the configuration after that. The notable differences are that you cannot change the BGP ASN:

That's about it! Peering between NSX-T Tier-0 routers is a snap.

The next stage to building a cloud must be automation at this point. Enabling self-service instantiating Tier-1s and/or VRFs will empower a business to onboard customers quickly, so consistency is key. Building the infrastructure is just the beginning of the journey, as always.

Lessons Learned

The Service Provider community has only just scratched the surface of what VMware's NSBU has made possible. NSX Data Center is built from the ground up to provide carrier-grade telecommunications features at scale, and blends the two "SPs" (Internet and Cloud Service Providers) into one software suite. I envision that this new form of company will become some type of "Value-Added Telecom" and take the world by the horns in the near future.

Diving deeper into NSX-T's Service Provider features is a rewarding experience. The sky is the limit! I did discover a few neat possibilities with this structure and design pattern that may be interesting (or make/break a deployment!)

- Any part of this can be replaced with a Virtual Network Function. Customers like a preferred Network Operating System (NOS), or simply want a NGFW in-place. VMware doesn't even try to prevent this practice, enabling it as a VM or a CNF (someday). If a Service Provider has 200 Fortinet customers, 1,000 Palo Alto customers, and 400 Checkpoint customers, all of them will be happy to know they can simply drop whatever they want, wherever they want.

- Orchestration and automation tools can build fully functional simulated networks for a quick "what if?" lab as a vApp.

Credit where credit is due

The team at 27 Virtual provided the design scenario and the community opportunity to fully realize this idea, and were extremely tolerant of me taking an exercise completely off the rails. You can see my team's development work here: https://github.com/ngschmidt/nsx-ninja-design-v3

Top comments (0)