I've always found generative AI one of the most interesting branches in Computer Science. As in any other AI subfield, the range of complexity for the algorithms under this name is very wide. That's why I like Markov chains so much; while they have really simple logic behind, the results can be very interesting and funny.

What are Markov chains?

Wikipedia describes a Markov chain as a stochastic model describing a sequence of possible events in which the probability of each event depends only on the state attained in the previous event.

Let's break it down a little:

- Stochastic model: a model that presents randomness to some extent.

- The probability of each event depends only on the state attained in the previous event: this refers to the Markov property, that holds when the future state depends only on the present state. In other words, given the present, the future doesn't depend on the past. Yes, math and computer science can get pretty philosophical sometimes.

So a Markov chain describes a sequence of events, that are not deterministic, and where each event only depends on the previous one.

Minimal example:

This diagram represents a Markov chain with two possible states/events: Sunny and Rainy.

- When it is Sunny, there's a 90% probability that the next state will be Sunny and 10% it will be Rainy.

- When it is Rainy, there's the probabilities of the next state being Sunnny or Rainy are 50/50.

And in each state, it doesn't matter how many times it has been Sunny or Rainy before: a Markov chain has no memory, so no transition will make the probabilities change.

Realism of Markov chains

With the last example you can start to imagine that a lot of real world phenomena, like weather and speech, are not Markovian, because the weather of the past does influence the current, and what's going to be said depends a lot on what's been said earlier. For this reason, don't expect to program the new Cervantes with a Markov chain.

However, this is an interesting starting point and, also, an approach with multiple use cases, like:

- Text generation and prediction (your autocorrect, for example, uses a Markov chain and a Levenshtein automaton, but we will talk about that another day).

- Music generation.

- Modeling relatively complex state machines for weather simulations or NPC behavior in videogames.

- Google's PageRank is a Markov chain.

Text generation

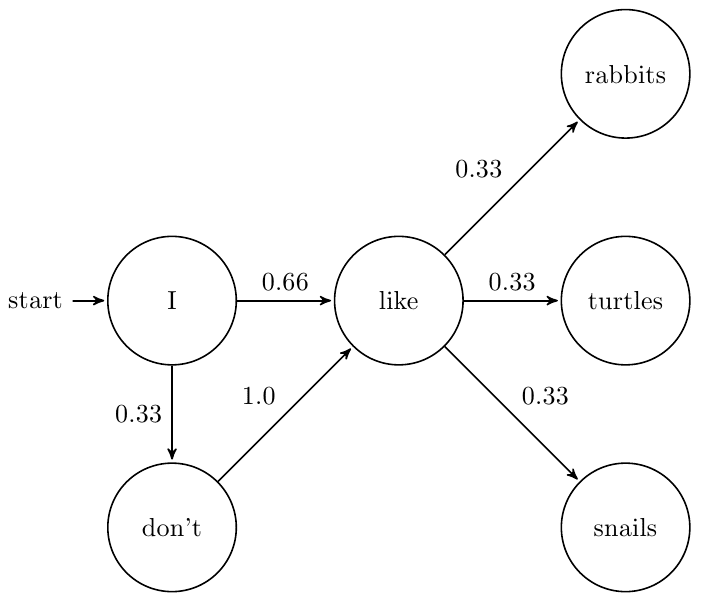

We can model words as events in a Markov process.

Possible strings generated by this model are:

- I like turtles.

- I don't like snails.

- I like rabbits.

- I don't like rabbits.

Order of a Markov chain

In the last example, we only took into account a single word per state, so we say that the chain is of first-order. For a N-th order chain, each state represents the last N words. But doesn't it break the Markov principle? Not quite, because we are still using only the present state to decide the next one, only that we define present in a broader sense, as if instead of using "now" to refer to the current hour, you refered to the current day.

This concept will play a very important role in the training process (when we build the chain from existing text). We will see this in more detail when we talk about the implementation.

Conclusion

Markov chains have a wide variety of uses. With a very simple model, easy to build and easy to use, we can simulate rather complex behaviors. Now that we've covered the theory basics, I will explain it's implementation step by step in different programming languages.

Let me know your thoughts in the comments!

References

- https://en.wikipedia.org/wiki/Markov_chain

- https://deepai.org/machine-learning-glossary-and-terms/markov-chain

- https://medium.com/swlh/machine-learning-algorithms-markov-chains-8e62290bfe12

Recommended reading

Take full advantage of high order functions - Examples in Javascript

MiguelMJ ・ Jun 22 '21

You can follow me on Twitter! 🐦

Top comments (0)