Flask used to be (and maybe still is) the go-to web framework for Python devs just starting out. It's fairly lightweight, mature, well-documented, and it's been around long enough that tutorials and guides have sprung up around it. There are others of course, with their own merits. FastAPI is one of the newer ones on the block, new enough that it isn't automatically suggested as "you should learn this if you want to make APIs with Python", but rapidly gaining users.

I've recently migrated several of our API endpoints from Flask to FastAPI, and honestly I haven't felt such a breath of fresh air in development for a long time. I want to summarise some of the main improvements that I noticed just by doing that, in case anyone else is wondering how the two compare.

API-first

The most immediate thing you'll notice is that FastAPI is an API-first web framework, which makes sense given the name. This means that by default error messages are returned as JSON, and handlers are expected to return dictionaries. Contrast this with Flask, whose errors are HTML pages by default, and whose JSON return values need to be jsonify()'d.

Having an HTML page as an error is somewhat annoying when developing APIs, as it causes REST clients to raise a JSON decode error because they can't parse the HTML page as JSON, rather than giving you the error body directly.

VM21892:1 Uncaught SyntaxError: Unexpected token < in JSON at position 0

json.decoder.JSONDecodeError: Expecting value: line 1 column 1 (char 0)

This is also hugely messy in the logs as there's a lot of superfluous formatting tags in the HTML page.
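The decode failure is easy to reproduce with nothing but the standard library. The sketch below is an illustration rather than part of any framework: `parse_api_response` is a hypothetical client-side helper, and the HTML string merely stands in for the kind of error page a default setup might return.

```python
import json

# Stand-in for an HTML error page returned where the client expected JSON.
HTML_ERROR_PAGE = "<!DOCTYPE html>\n<title>500 Internal Server Error</title>"


def parse_api_response(body: str, content_type: str) -> dict:
    """Hypothetical helper: decode JSON, but report non-JSON bodies clearly."""
    if "application/json" not in content_type:
        # Surface the real problem instead of an opaque decode error.
        return {"error": "non-JSON response", "content_type": content_type}
    return json.loads(body)


# Parsing the HTML page directly reproduces the Python error shown above.
try:
    json.loads(HTML_ERROR_PAGE)
except json.JSONDecodeError as err:
    decode_error = str(err)

html_result = parse_api_response(HTML_ERROR_PAGE, "text/html; charset=utf-8")
json_result = parse_api_response('{"detail": "Not Found"}', "application/json")
```

Checking the content type first is one possible client-side workaround; having the server return JSON errors in the first place avoids the need for it.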

Flask can be configured to output a JSON formatted error of course. But FastAPI comes out of the box ready to build APIs.

Query, path, body, all as arguments

In Flask, you access both the query string and the request body via the global request object, a magic instance that, when referenced inside an endpoint handler, contains everything relating to the current request.

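    from flask import Flask, jsonify, request

    app = Flask(__name__)

    @app.route("/example/<uid>", methods=["POST"])
    def handle_example(uid):
        arg = request.args.get('bleh')   # query string parameter
        data = request.get_json()        # parsed JSON request body
        return jsonify(arg=arg, data=data, uid=uid)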

This is actually highly annoying. The function handle_example() now relies on the existence of a request object. Meaning this function relies on Flask, and is also somewhat impure and therefore harder to debug or test.

FastAPI's JSON body input comes in as a function argument. I didn't like this at first (it's different, therefore bad), but after using it for a while, it made total sense:

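    from fastapi import FastAPI

    app = FastAPI()

    # MyData is a pydantic model describing the JSON body, defined elsewhere
    @app.post("/example/{uid}")
    def handle_example(uid: str, arg: str, data: MyData):
        return {"arg": arg, "data": data, "uid": uid}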

It's easy to see that handle_example() requires three inputs: uid (a string), arg (a string), and data (an object of type MyData). The function doesn't care where these values come from (path, query string, or body; those are handled by the decorator or other FastAPI-specific means), so it's a nice separation of concerns: the FastAPI decorator deals with routing this data in, while the function deals with business logic. This also makes the function purer, and therefore easier to understand, debug, and test.
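One practical consequence is that the handler can be exercised as an ordinary function, with no web server or request context. The following is a minimal sketch under some assumptions: `MyData` here is a plain dataclass standing in for the pydantic model, its `text` field is invented for illustration, and the route decorator is omitted so the snippet runs without FastAPI installed.

```python
from dataclasses import dataclass


@dataclass
class MyData:
    """Stand-in for the request body model; the `text` field is hypothetical."""
    text: str


def handle_example(uid: str, arg: str, data: MyData) -> dict:
    # Same body as the FastAPI handler above, minus the route decorator.
    return {"arg": arg, "data": data, "uid": uid}


# The function is called directly: no request object, no framework.
result = handle_example(uid="42", arg="bleh", data=MyData(text="hello"))
```

A unit test can then assert on `result` directly, which is much harder to do when the inputs are pulled from a global request object.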

Validation

The thing with running an API is that a lot of problems are the user's fault. Someone sent you badly formatted data, and that's causing a weird error in your API. However, it's not really an option to tell users reporting the error that this was their fault and go RTFM; that's bad design. Instead, the API should be validating input against the API spec and rejecting data that doesn't match, and hopefully also explaining to the user (which by the way, might be you, or a colleague, or a client) what they did wrong.

With Flask, there aren't many options for this; you either write a lot of if statements to check every possible part of the data coming in, and then manually make sure to go update your API documentation somewhere, or you use some kind of data validation library.
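To give a feel for the first option, here is a rough sketch of hand-written checks for a small chat payload. The field names (`message`, `recipient`, `timestamp`) are assumptions chosen to match the schema used later in this post, and `validate_chat_entry` is a hypothetical helper, not part of Flask.

```python
from typing import Any, Dict, List


def validate_chat_entry(payload: Dict[str, Any]) -> List[str]:
    """Return human-readable problems; an empty list means the payload passed."""
    errors = []
    for field in ("message", "recipient"):
        if field not in payload:
            errors.append(f"'{field}' is required")
        elif not isinstance(payload[field], str):
            errors.append(f"'{field}' must be a string")
    timestamp = payload.get("timestamp")
    # bool is a subclass of int, so exclude it explicitly.
    if timestamp is not None and (
        isinstance(timestamp, bool) or not isinstance(timestamp, (int, float))
    ):
        errors.append("'timestamp' must be a number")
    return errors


good = validate_chat_entry({"message": "Hello", "recipient": "Dave"})
bad = validate_chat_entry({"message": 123, "timestamp": "yesterday"})
```

Every new field means another branch here, plus another manual edit to the documentation.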

I've mostly been defining API specs using JSONschema, and using the python jsonschema libraries. This is nice because those libraries will return human-readable explanations for why their input didn't match the schema. However, I still had to update the documentation.

The actual validation in a Flask endpoint looked something like the below, having defined the schema as a JSONschema, and loaded it.

    data = request.get_json()
    try:
        validator.validate(data)
    except ValidationError as err:
        return jsonify(error="JSON Schema Validation Error", message=str(err)), 422

For sake of argument, a simple JSONschema might look something like this:

    {
        "$schema": "http://json-schema.org/draft-07/schema#",
        "title": "Chat Entry",
        "description": "A chat message",
        "required": [
            "version",
            "message",
            "recipient"
        ],
        "properties": {
            "version": {
                "const": "3.1",
                "description": "Protocol version"
            },
            "message": {
                "type": "string",
                "description": "Message body"
            },
            "recipient": {
                "type": "string",
                "description": "Receiver of message"
            },
            "author": {
                "type": "string",
                "description": "Sender of message"
            },
            "timestamp": {
                "type": "number",
                "description": "Unix timestamp"
            }
        }
    }

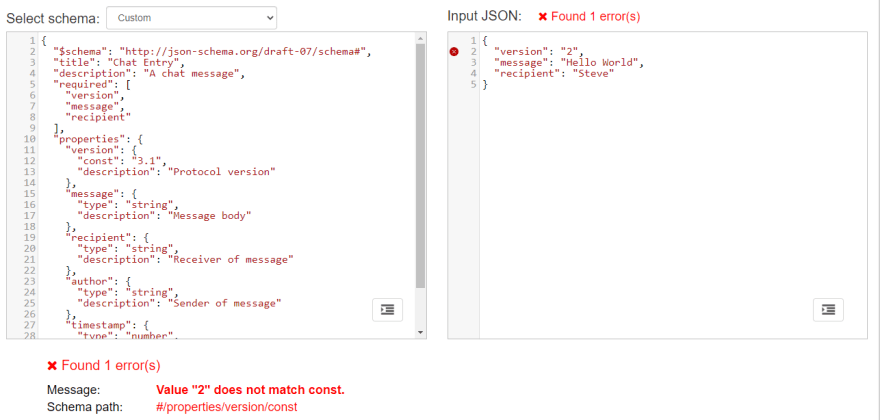

Here's an example of a malformatted payload (according to the spec), using the Newtonsoft online schema validator:

As can be seen, JSONschema provides sensible validation messages, and can help a user identify where their payload has errors. Certainly better than the alternatives of having to write out every check by hand, or letting malformed data cause downstream errors.

However, JSONschema leaves a lot to be desired. While the above example looks relatively straightforward, there are a few issues with how it handles nesting and references. A lot of validator implementations expect the schema to live on the web, where it can be requested over HTTP; this is not always easy to achieve for internal schemas, and you end up either maintaining internal HTTP endpoints that exist solely to serve your schema, or writing custom URI resolvers to load the schema from file.

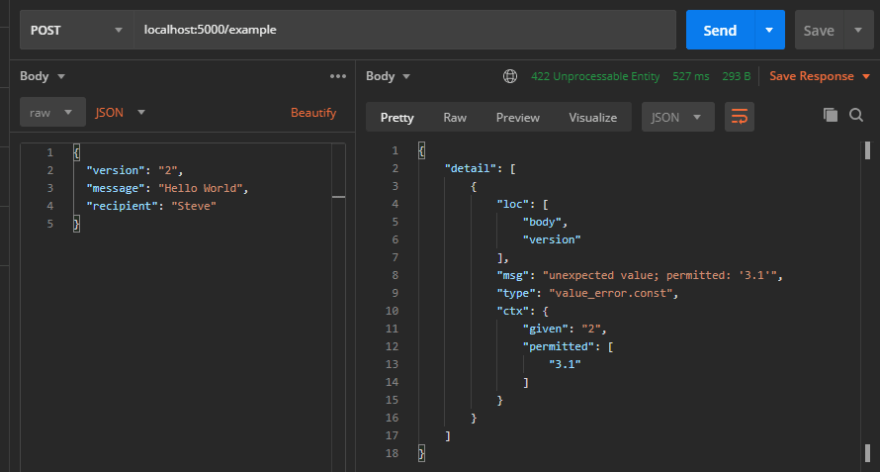

FastAPI, on the other hand, uses pydantic to provide schema validation and generates sensible human-readable error messages, with no extra code or libraries needed.

The pydantic version of the above jsonschema might look like:

    from typing import Optional

    from pydantic import BaseModel, Field

    class ChatEntry(BaseModel):
        """ A chat message """
        # const=True is pydantic v1 syntax
        version: str = Field("3.1", const=True, description="Protocol version")
        message: str = Field(..., description="Message body")
        recipient: str = Field(..., description="Receiver of message")
        # Optional fields default to None so they may be omitted
        author: Optional[str] = Field(None, description="Sender of message")
        timestamp: Optional[float] = Field(None, description="Unix timestamp")

As with JSONSchema, inputs are validated and a 422 error returned containing human-readable validation issues. Here, I'm actually POSTing to the endpoint.
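The same validation can be triggered directly with pydantic, outside of any endpoint, which is a convenient way to look at the error structure. This sketch assumes pydantic is installed and uses a trimmed-down, hypothetical `ChatEntryLite` model (without the `const` field) so that it should behave the same way across pydantic versions.

```python
from typing import Optional

from pydantic import BaseModel, ValidationError


class ChatEntryLite(BaseModel):
    """Hypothetical cut-down chat message model, for illustration only."""
    message: str
    recipient: str
    timestamp: Optional[float] = None


try:
    # 'recipient' is missing and 'timestamp' cannot be parsed as a float.
    ChatEntryLite(message="Hello", timestamp="yesterday")
except ValidationError as err:
    # Each entry names the offending field ("loc") and explains why ("msg").
    problems = {e["loc"][0]: e["msg"] for e in err.errors()}

bad_fields = sorted(problems)
```

The exact wording of each message depends on the pydantic version, so only the field names are relied on here.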

The benefits of pydantic over JSONSchema are many. First and foremost, pydantic models are written in Python rather than JSON, and that's a good thing for us Python devs who are allergic to curly braces. But on a serious note, it also means they can be imported and distributed in the same way as our Python libraries.

The second benefit is that it's integrated into FastAPI, so there are no extra libraries or boilerplate code to deal with. Simply referencing a pydantic model as a type hint in the handler's arguments causes the input to be validated at runtime.

The third benefit is that the pydantic model can also be used to construct payloads as well as validate them:

    >>> import time
    >>> entry = ChatEntry(message="Hello", recipient="Dave")
    >>> entry.timestamp = time.time()
    >>> entry
    ChatEntry(version='3.1', message='Hello', recipient='Dave', author=None, timestamp=1599307407.9207017)
    >>> dict(entry)
    {'version': '3.1', 'message': 'Hello', 'recipient': 'Dave', 'author': None, 'timestamp': 1599307407.9207017}

Here it can be seen that a payload can be constructed using either constructor arguments or attribute assignment, and that the payload can be cast to a dict and used directly as part of an HTTP request or JSON return value.

It should be noted, though, that pydantic and other similar libraries can still be used with Flask, but the validation would need to be invoked manually.

And that's not all, there are two other major benefits of this pydantic integration too! I'll explain in separate sections:

Type hinting/checking

Python is well-known as a dynamically typed language, and benefits from that by being easy and quick to write. BUT... the tradeoff of being easy to write with respect to types is that it's very easy to make mistakes that cause runtime errors, and runtime errors tend to be hard to catch as some may only occur under a rare set of circumstances. Whether that tradeoff matters, though, depends on what the code is doing and where it's running.

Algorithms for a simple data-science project? No problems, be as fast and loose as you want; the worst-case scenario is you have to fix the code a bit and re-run. APIs on the other hand? Errors are costly to fix, and may cause downtime for your service, which could cost you customers. APIs are exactly the kind of software where the trade-off between being easy-to-write, and reducing mistakes is skewed strongly in favour of reducing mistakes; and therefore we should take on as many tests, checks, and validation as we can.

Because the request body is defined in pydantic, which uses type hints, the data handled in the endpoint code also benefits from type hints. Type hinting is a big subject so I won't try to explain it here, but take a look at an example of a type error that could well have caused a runtime failure, but was easily caught at development time:

    @app.post("/example", response_model=StatusResponse)
    def example(chat_entry: ChatEntry):
        message = "Received chat message at timestamp " + chat_entry.timestamp
        timeout = chat_entry.timestamp + 3600

There are two type errors here. Recall that timestamp is defined as an Optional[float] meaning it can either be None, or a float.

The first issue is that we've used a pretty dumb string concatenation, which would break when you tried to add a float/None to the string. Forgetting for a sec that there are at least three better ways to do this in Python that wouldn't cause a runtime issue (e.g. an f-string with interpolation), this would be caught easily by type checks. Mypy tells us off:

    error: Unsupported operand types for + ("str" and "float")
    error: Unsupported operand types for + ("str" and "None")
    note: Right operand is of type "Optional[float]"

Here, mypy is saying that we can't simply + a "str" and a "float" together, or a "str" and a "None" together. And mypy is right. Upon seeing this, the programmer would go to refactor this with a more appropriate option.

The second issue is a little harder to notice. Since timestamp should be a float, adding 3600 to it shouldn't have been a problem. However, someone would have had to remember that timestamp can also be None, and you can't add 3600 to None. Since we were smart and are using type annotations, mypy will tell us off before we ever get to run this in production and crash.

    error: Unsupported operand types for + ("None" and "int")
    note: Left operand is of type "Optional[float]"

Simply adding a check makes the type error go away (let's assume this doesn't leave timeout undefined)

    if chat_entry.timestamp is not None:
        timeout = chat_entry.timestamp + 3600

It's worth noting that Python has had type hinting since version 3.5, so pydantic isn't new in that respect. But having pydantic integrated into FastAPI streamlines the whole experience, since you can define the type hints for Python and the validation in one go. Without pydantic, using just JSONschema would have required a lot of duplication: defining the schema in one place, and the types again in another.
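The `None` check can also live in a small helper so that every caller gets the narrowed type. This is a sketch with hypothetical `compute_timeout` and `describe` functions; it compares against `None` explicitly because a plain truthiness test would also skip a legitimate timestamp of `0.0`.

```python
from typing import Optional


def compute_timeout(timestamp: Optional[float], ttl: float = 3600) -> Optional[float]:
    """Return the expiry time, or None when no timestamp was supplied."""
    if timestamp is None:
        return None
    # Past this point a type checker treats `timestamp` as a plain float.
    return timestamp + ttl


def describe(timestamp: Optional[float]) -> str:
    # An f-string formats floats and None alike, so no "+" type error here.
    return f"Received chat message at timestamp {timestamp}"
```

Both functions should pass a type checker such as mypy as written, since the `Optional` is handled before any arithmetic takes place.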

Automatic documentation generation

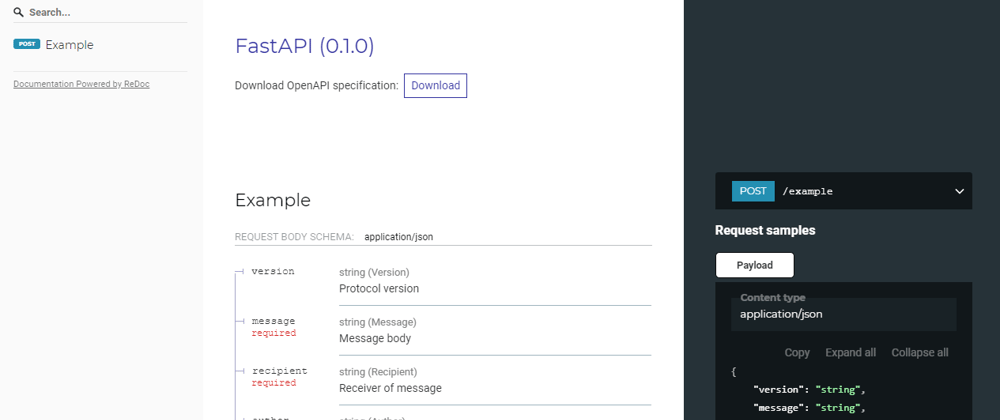

Another major benefit of this whole pydantic+FastAPI integration is that you also get automatic documentation.

API documentation is annoying to write. Not only is there a lot of detail to get in, but there's also nothing more frustrating for end-users than an implementation that doesn't match its documentation. This is where automatic API documentation comes in: if your documentation is generated directly from the schema that does the validation, then you never have to worry about documentation going out of date or not matching the validator. You won't get into a situation where the docs say something is valid but the validator rejects it, or vice versa.

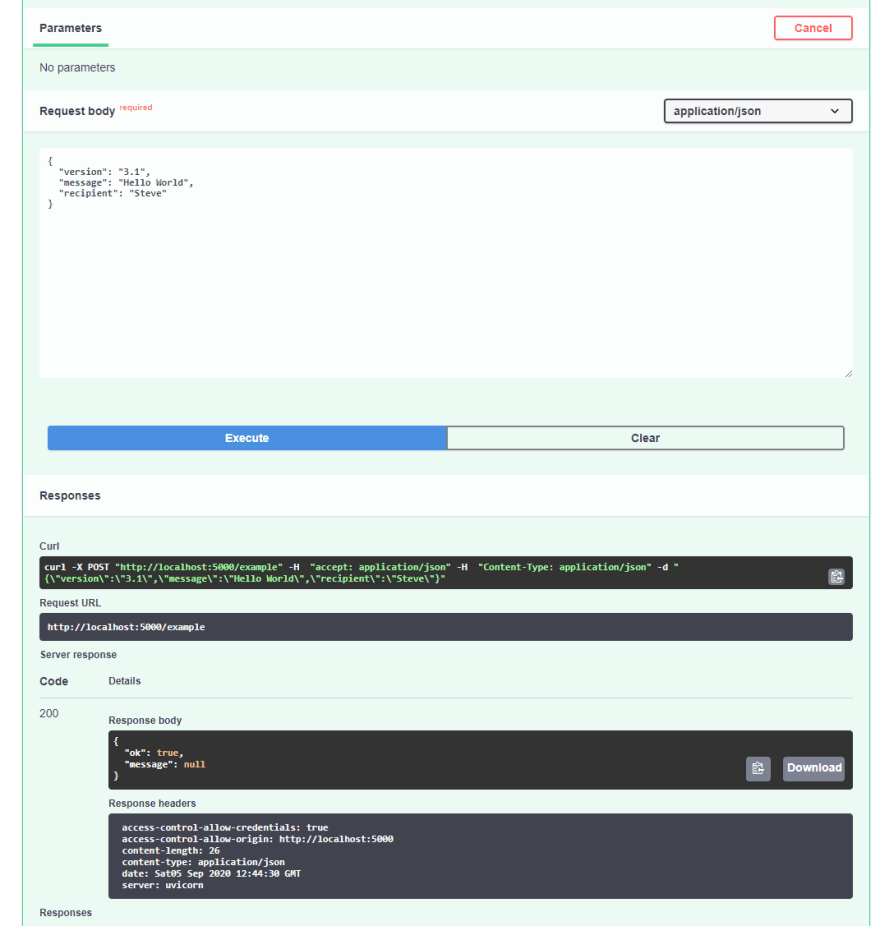

FastAPI is bundled with a couple of UIs that automatically provide you with documentation endpoints right out of the box. The above examples produce the following user interfaces with zero extra configuration:

Swagger UI (which includes a convenient interface for trying out the endpoints directly from the browser):

openapi.json is also available directly, which you can plug into whatever other tools or UI are desired, including automatic client library generators:

For example, you could import the endpoints directly into Postman using this URL, which is great for testing and development for you and your end-users

Asyncio

One problem I had with Flask was the lack of Asyncio support. Asyncio is pretty important for HTTP endpoints, which tend to do a lot of waiting around for IO and network chatter, making it a good candidate for concurrency using async.

As a result of a lack of asyncio in Flask, I'd been using Tornado instead, which is a much larger framework, and it feels a bit bulky to be using for HTTP APIs.

FastAPI supports asyncio by default, which means I can now use one framework for all my endpoints, whether they need async or not, and without the overhead of Tornado.
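To illustrate why this matters for I/O-bound handlers, the sketch below uses only the standard library. `fake_io_call` is a hypothetical stand-in for a database query or outbound HTTP request; three of them are awaited concurrently, so the total time is close to that of a single call rather than the sum.

```python
import asyncio
import time


async def fake_io_call(name: str, delay: float) -> str:
    # asyncio.sleep stands in for waiting on the network or a database.
    await asyncio.sleep(delay)
    return f"{name} done"


async def handle_request() -> list:
    # All three waits overlap instead of running back to back.
    return await asyncio.gather(
        fake_io_call("db", 0.1),
        fake_io_call("cache", 0.1),
        fake_io_call("http", 0.1),
    )


start = time.perf_counter()
results = asyncio.run(handle_request())
elapsed = time.perf_counter() - start
```

In an async framework the handler itself would be an `async def`, and the event loop would be free to serve other requests while these calls are waiting.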

Async and asyncio is too big of a topic to discuss here, but for those wanting to find out more, this page on RealPython is worth a read

Websockets

Websockets are another feature that Flask doesn't support, and for which I had to turn to Tornado. Tornado with websockets and asyncio is quite a nice option, particularly for backends that deal with monitoring and closer-to-realtime things, and if the backend can hook into pub-sub.

I have a few projects that have involved a client (sometimes Vue.js, sometimes a game client) that needed to become part of a larger distributed system that communicated over pub/sub (often aioredis) with other clients and servers spanning multiple machines and VMs. Websockets are one of the few options for a browser-based client.

Because my backend in these applications is relatively light (its main job is to bridge messages between websocket and pub/sub), FastAPI would make an ideal replacement for Tornado here.
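The bridging role described here can be sketched with the standard library alone. In this illustration an `asyncio.Queue` stands in for the pub/sub subscription and a small recording class stands in for the WebSocket connection; `bridge`, `FakeWebSocket`, and the `None` shutdown sentinel are hypothetical names and conventions, not FastAPI or aioredis APIs.

```python
import asyncio
from typing import List, Optional


class FakeWebSocket:
    """Stand-in for a WebSocket connection; records what would be sent."""

    def __init__(self) -> None:
        self.sent: List[str] = []

    async def send_text(self, text: str) -> None:
        self.sent.append(text)


async def bridge(subscription: "asyncio.Queue[Optional[str]]", ws: FakeWebSocket) -> None:
    # Forward each pub/sub message to the client until the None sentinel arrives.
    while True:
        message = await subscription.get()
        if message is None:
            break
        await ws.send_text(message)


async def main() -> List[str]:
    subscription: "asyncio.Queue[Optional[str]]" = asyncio.Queue()
    ws = FakeWebSocket()
    task = asyncio.create_task(bridge(subscription, ws))
    for item in ("player joined", "score: 3", None):
        await subscription.put(item)
    await task
    return ws.sent


forwarded = asyncio.run(main())
```

A real bridge would also need a second loop for the opposite direction (client to pub/sub) and handling for disconnects, both of which are left out here.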

GraphQL

GraphQL is a new take on APIs, made by Facebook. It defines the schema of how entities relate to each other, and allows the client to describe what data they want as part of their request. This resolves a common problem maintainers of REST endpoints encounter - having to create new endpoints every time a consumer requires data to be formatted or joined in a different way.

Another problem solved by GraphQL is that it allows multiple different databases and data sources to be integrated together under one data graph. You could have a user table in PostgreSQL, but store messages in Elasticsearch. Instead of continuously having to write code that cross-references the two, GraphQL takes the effort out of it by letting you define resolver functions that it will run automatically, and only when a user requests the data they provide.
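The idea of resolvers that run only when a field is requested can be shown with a toy example. This is not GraphQL or Graphene, just a hand-rolled sketch: the `RESOLVERS` table, the `execute` function, and the in-memory data standing in for PostgreSQL and Elasticsearch are all invented for illustration.

```python
from typing import Any, Callable, Dict, List

calls: List[str] = []  # records which resolvers actually ran


def resolve_name(user_id: int) -> str:
    calls.append("name")  # imagine a PostgreSQL lookup here
    return {1: "Dave"}[user_id]


def resolve_messages(user_id: int) -> List[str]:
    calls.append("messages")  # imagine an Elasticsearch query here
    return {1: ["Hello", "Bye"]}[user_id]


RESOLVERS: Dict[str, Callable[[int], Any]] = {
    "name": resolve_name,
    "messages": resolve_messages,
}


def execute(user_id: int, requested_fields: List[str]) -> Dict[str, Any]:
    # Only the resolvers for requested fields are invoked.
    return {field: RESOLVERS[field](user_id) for field in requested_fields}


name_only = execute(1, ["name"])
calls_after_first = list(calls)
both = execute(1, ["name", "messages"])
```

After the first query only the `name` resolver has run, so the more expensive message lookup is skipped entirely unless a client asks for it.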

There are some downsides, however. There's a fair bit of overhead in resolving all these entities, and GraphQL's resolvers aren't as efficient as a well-written JOIN query in SQL. So GraphQL ends up being easier to write, but less performant on some tasks.

For bigger APIs with heavily relational data, GraphQL makes sense; this easier maintainability is extremely important. But for smaller and simpler APIs and microservices, GraphQL may be too much overhead.

GraphQL and Python have been a bit of a dream team. I use SQLAlchemy to maintain and access relational databases, and Graphene (one of Python's GraphQL implementations) has a nice integration that lets me pull in the SQLAlchemy ORM and automatically provide resolvers for it. Since GraphQL also provides introspection and automatic documentation generation, and since database migrations can be handled automatically using alembic, whenever I need to add a column, I only need to do it in one place: the SQLAlchemy ORM. From there, alembic will handle the database migration to add that column; GraphQL will automatically be up to date thanks to the SQLAlchemy integration; and the documentation is also automatically generated. Contrast this with before, when manual updates had to be made to queries, documentation, the database, and the APIs.

To date, I've been using the flask-graphql/GraphQLView integration. And again, because FastAPI comes with GraphQL and Graphene integration out of the box (via the Starlette library), I've been more than happy to switch over my GraphQL APIs from Flask to FastAPI. Since both use Graphene, switching over was trivial.

One surprising feature of Starlette's Graphene integration is that if you don't opt to use asynchronous resolvers, then Starlette will execute your query in a separate thread, which provides better concurrency than flask-graphql does, without any additional thought needed. A clear win for FastAPI/Starlette, given GraphQL tends to be a heavier-weight backend.

My only slight issue is that Starlette currently uses an older version of GraphiQL than flask-graphql, but that's a minor problem and I'm sure it'll get updated soon.

(image from Starlette website)

Speed

FastAPI is really fast. But... that's all I have to say about that since speed hasn't been a priority for me (many of my endpoints deal with hefty processing tasks, so the actual HTTP portion doesn't feature highly on my list of priorities). I haven't done any benchmarks, but I can confirm that it's certainly snappy, enough that you don't have to worry that this is going to be a bad tradeoff.

Overall, Sebastián Ramírez (@tiangolo), the creator of FastAPI, and Python super-villain if his Salvador Dali moustache is anything to go by, has created an amazing Python API framework, which for me has instantly replaced three or four tools, and improved the robustness, documentation quality, and development speed across multiple APIs in one go.

It's rare to see a single package make such a big change across a whole tech stack, and I believe firmly that FastAPI will be part of the toolbox of today's Python backend developer.

Having said that, I've been a bit biased in this post, since Flask still has plenty going for it - Views, Templating, and a lot of other use-cases that aren't for building APIs. So while it's clear to me that FastAPI is a much better framework than Flask for APIs, Flask still remains a good choice for many other HTTP tasks.

Here are some links, in case you want to find out more:
